Volume 9

Issue 2

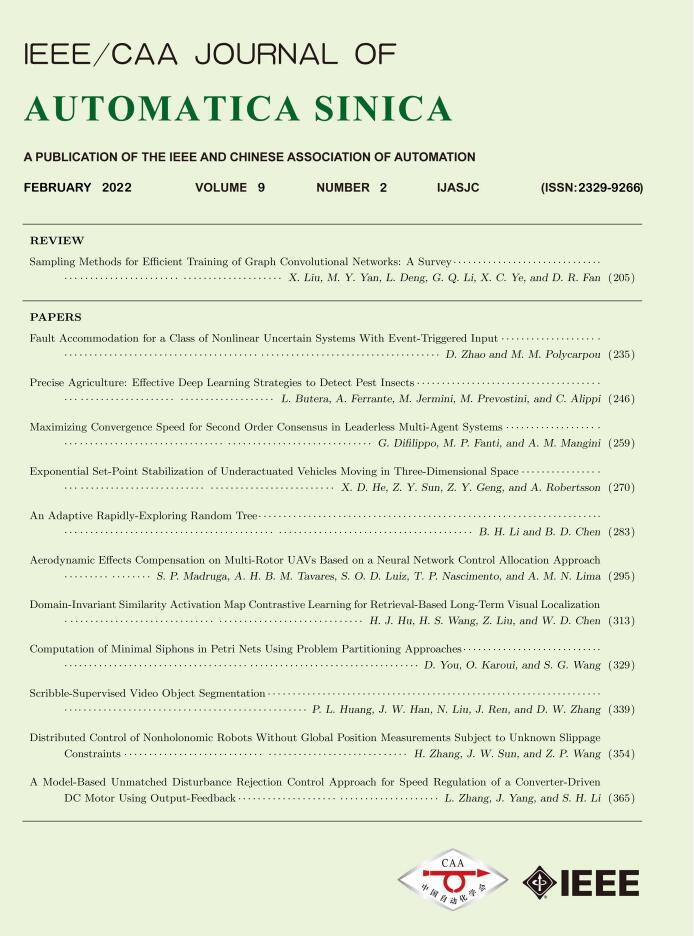

IEEE/CAA Journal of Automatica Sinica

Citation: X. Liu, M. Y. Yan, L. Deng, G. Q. Li, X. C. Ye, and D. R. Fan, “Sampling methods for efficient training of graph convolutional networks: A survey,” IEEE/CAA J. Autom. Sinica, vol. 9, no. 2, pp. 205–234, Feb. 2022. doi: 10.1109/JAS.2021.1004311

[1] A. M. Fout, “Protein interface prediction using graph convolutional networks,” Ph.D. dissertation, Colorado State University, Fort Collins, Colorado, 2017.

[2] S. Guo, Y. Lin, N. Feng, C. Song, and H. Wan, “Attention based spatial-temporal graph convolutional networks for traffic flow forecasting,” in Proc. AAAI Conf. Artificial Intelligence, 2019, vol. 33, no. 01, pp. 922–929.

[3] Z. Cui, K. Henrickson, R. Ke, and Y. Wang, “Traffic graph convolutional recurrent neural network: A deep learning framework for network-scale traffic learning and forecasting,” IEEE Trans. Intelligent Transportation Systems, vol. 21, no. 11, pp. 4883–4894, 2019.

[4] Z. Wang, Q. Lv, X. Lan, and Y. Zhang, “Cross-lingual knowledge graph alignment via graph convolutional networks,” in Proc. Conf. Empirical Methods in Natural Language Processing, 2018, pp. 349–357.

[5] C. Shang, Y. Tang, J. Huang, J. Bi, X. He, and B. Zhou, “End-to-end structure-aware convolutional networks for knowledge base completion,” in Proc. AAAI Conf. Artificial Intelligence, 2019, vol. 33, no. 01, pp. 3060–3067.

[6] J. Zhou, G. Cui, S. Hu, Z. Zhang, C. Yang, Z. Liu, L. Wang, C. Li, and M. Sun, “Graph neural networks: A review of methods and applications,” AI Open, vol. 1, pp. 57–81, 2020. doi: 10.1016/j.aiopen.2021.01.001

[7] F. Scarselli, M. Gori, A. C. Tsoi, M. Hagenbuchner, and G. Monfardini, “The graph neural network model,” IEEE Trans. Neural Networks, vol. 20, no. 1, pp. 61–80, 2008.

[8] P. W. Battaglia, J. B. Hamrick, V. Bapst, A. Sanchez-Gonzalez, V. Zambaldi, M. Malinowski, A. Tacchetti, D. Raposo, A. Santoro, R. Faulkner, and C. Gulcehre, “Relational inductive biases, deep learning, and graph networks,” arXiv preprint arXiv: 1806.01261, 2018.

[9] H. Akita, K. Nakago, T. Komatsu, Y. Sugawara, S.-i. Maeda, Y. Baba, and H. Kashima, “BayesGrad: Explaining predictions of graph convolutional networks,” in Proc. Int. Conf. Neural Information Processing, Springer, 2018, pp. 81–92.

[10] R. Ying, D. Bourgeois, J. You, M. Zitnik, and J. Leskovec, “GNNExplainer: Generating explanations for graph neural networks,” in Proc. Advances in Neural Information Processing Systems, 2019, pp. 9244–9255.

[11] F. Baldassarre and H. Azizpour, “Explainability techniques for graph convolutional networks,” arXiv preprint arXiv: 1905.13686, 2019.

[12] P. E. Pope, S. Kolouri, M. Rostami, C. E. Martin, and H. Hoffmann, “Explainability methods for graph convolutional neural networks,” in Proc. IEEE/CVF Conf. Computer Vision and Pattern Recognition, 2019, pp. 10772–10781.

[13] K. Xu, J. Li, M. Zhang, S. S. Du, K.-i. Kawarabayashi, and S. Jegelka, “What can neural networks reason about?” arXiv preprint arXiv: 1905.13211, 2019.

[14] H. Yuan, H. Yu, S. Gui, and S. Ji, “Explainability in graph neural networks: A taxonomic survey,” arXiv preprint arXiv: 2012.15445, 2020.

[15] Y. Li, D. Tarlow, M. Brockschmidt, and R. Zemel, “Gated graph sequence neural networks,” arXiv preprint arXiv: 1511.05493, 2015.

[16] M. Henaff, J. Bruna, and Y. LeCun, “Deep convolutional networks on graph-structured data,” arXiv preprint arXiv: 1506.05163, 2015.

[17] J. Atwood and D. Towsley, “Diffusion-convolutional neural networks,” in Proc. Advances in Neural Information Processing Systems, 2016, pp. 1993–2001.

[18] P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Liò, and Y. Bengio, “Graph attention networks,” in Proc. Int. Conf. Learning Representations, 2018, pp. 1–12. [Online]. Available: https://openreview.net/forum?id=rJXMpikCZ

[19] M. Defferrard, X. Bresson, and P. Vandergheynst, “Convolutional neural networks on graphs with fast localized spectral filtering,” in Proc. Advances in Neural Information Processing Systems, 2016, vol. 29, pp. 3844–3852.

[20] M. Niepert, M. Ahmed, and K. Kutzkov, “Learning convolutional neural networks for graphs,” in Proc. Int. Conf. Machine Learning, PMLR, 2016, pp. 2014–2023.

[21] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in Proc. Int. Conf. Learning Representations (ICLR), 2017, pp. 1–14.

[22] F. Monti, D. Boscaini, J. Masci, E. Rodolà, J. Svoboda, and M. M. Bronstein, “Geometric deep learning on graphs and manifolds using mixture model CNNs,” in Proc. IEEE Conf. Computer Vision and Pattern Recognition, 2017, pp. 5115–5124.

[23] J. Bruna, W. Zaremba, A. Szlam, and Y. LeCun, “Spectral networks and locally connected networks on graphs,” arXiv preprint arXiv: 1312.6203, 2013.

[24] F. Wu, A. Souza, T. Zhang, C. Fifty, T. Yu, and K. Weinberger, “Simplifying graph convolutional networks,” in Proc. Int. Conf. Machine Learning, PMLR, 2019, pp. 6861–6871.

[25] X. He, K. Deng, X. Wang, Y. Li, Y. Zhang, and M. Wang, “LightGCN: Simplifying and powering graph convolution network for recommendation,” in Proc. 43rd Int. ACM SIGIR Conf. Research and Development in Information Retrieval, 2020, pp. 639–648.

[26] H. Nt and T. Maehara, “Revisiting graph neural networks: All we have is low-pass filters,” arXiv preprint arXiv: 1905.09550, 2019.

[27] Y. Yang, J. Qiu, M. Song, D. Tao, and X. Wang, “Distilling knowledge from graph convolutional networks,” in Proc. IEEE/CVF Conf. Computer Vision and Pattern Recognition, 2020, pp. 7074–7083.

[28] C. Yang, J. Liu, and C. Shi, “Extract the knowledge of graph neural networks and go beyond it: An effective knowledge distillation framework,” in Proc. Web Conf., 2021, pp. 1227–1237.

[29] Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang, and P. S. Yu, “A comprehensive survey on graph neural networks,” IEEE Trans. Neural Networks and Learning Systems, vol. 32, no. 1, pp. 4–24, 2020.

[30] Z. Zhang, P. Cui, and W. Zhu, “Deep learning on graphs: A survey,” arXiv preprint arXiv: 1812.04202, 2018.

[31] S. Zhang, H. Tong, J. Xu, and R. Maciejewski, “Graph convolutional networks: A comprehensive review,” Computational Social Networks, vol. 6, no. 1, pp. 1–23, 2019. doi: 10.1186/s40649-019-0061-6

[32] Y. LeCun and Y. Bengio, “Convolutional networks for images, speech, and time series,” The Handbook of Brain Theory and Neural Networks, vol. 3361, no. 10, Article No. 1995, 1995.

[33] D. I. Shuman, S. K. Narang, P. Frossard, A. Ortega, and P. Vandergheynst, “The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains,” IEEE Signal Processing Magazine, vol. 30, no. 3, pp. 83–98, 2013. doi: 10.1109/MSP.2012.2235192

[34] J. Gilmer, S. S. Schoenholz, P. F. Riley, O. Vinyals, and G. E. Dahl, “Neural message passing for quantum chemistry,” in Proc. Int. Conf. Machine Learning, PMLR, 2017, pp. 1263–1272.

[35] W. L. Hamilton, R. Ying, and J. Leskovec, “Inductive representation learning on large graphs,” in Proc. 31st Int. Conf. Neural Information Processing Systems, 2017, pp. 1025–1035.

[36] A. Qin, Z. Shang, J. Tian, Y. Wang, T. Zhang, and Y. Y. Tang, “Spectral–spatial graph convolutional networks for semisupervised hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 16, no. 2, pp. 241–245, 2018.

[37] C. Wang, B. Samari, and K. Siddiqi, “Local spectral graph convolution for point set feature learning,” in Proc. European Conf. Computer Vision (ECCV), 2018, pp. 52–66.

[38] D. Valsesia, G. Fracastoro, and E. Magli, “Learning localized generative models for 3D point clouds via graph convolution,” in Proc. Int. Conf. Learning Representations, 2018, pp. 1–15.

[39] Y. Wei, X. Wang, L. Nie, X. He, R. Hong, and T.-S. Chua, “MMGCN: Multi-modal graph convolution network for personalized recommendation of micro-video,” in Proc. 27th ACM Int. Conf. Multimedia, 2019, pp. 1437–1445.

[40] S. Cui, B. Yu, T. Liu, Z. Zhang, X. Wang, and J. Shi, “Edge-enhanced graph convolution networks for event detection with syntactic relation,” in Proc. Conf. Empirical Methods in Natural Language Processing: Findings, 2020, pp. 2329–2339.

[41] Z. Liang, M. Yang, L. Deng, C. Wang, and B. Wang, “Hierarchical depthwise graph convolutional neural network for 3D semantic segmentation of point clouds,” in Proc. Int. Conf. Robotics and Automation (ICRA), IEEE, 2019, pp. 8152–8158.

[42] H. Yang, “AliGraph: A comprehensive graph neural network platform,” in Proc. 25th ACM SIGKDD Int. Conf. Knowledge Discovery & Data Mining, 2019, pp. 3165–3166.

[43] M. Yan, L. Deng, X. Hu, L. Liang, Y. Feng, X. Ye, Z. Zhang, D. Fan, and Y. Xie, “HyGCN: A GCN accelerator with hybrid architecture,” in Proc. IEEE Int. Symposium on High Performance Computer Architecture (HPCA), 2020, pp. 15–29.

[44] R. Ying, R. He, K. Chen, P. Eksombatchai, W. L. Hamilton, and J. Leskovec, “Graph convolutional neural networks for web-scale recommender systems,” in Proc. 24th ACM SIGKDD Int. Conf. Knowledge Discovery & Data Mining, 2018, pp. 974–983.

[45] K. Xu, W. Hu, J. Leskovec, and S. Jegelka, “How powerful are graph neural networks?” in Proc. Int. Conf. Learning Representations, 2019, pp. 1–17.

[46] K. Xu, C. Li, Y. Tian, T. Sonobe, K.-i. Kawarabayashi, and S. Jegelka, “Representation learning on graphs with jumping knowledge networks,” in Proc. Int. Conf. Machine Learning, PMLR, 2018, pp. 5453–5462.

[47] H. Dai, Z. Kozareva, B. Dai, A. Smola, and L. Song, “Learning steady-states of iterative algorithms over graphs,” in Proc. Int. Conf. Machine Learning, PMLR, 2018, pp. 1106–1114.

[48] J. Chen, J. Zhu, and L. Song, “Stochastic training of graph convolutional networks with variance reduction,” in Proc. Int. Conf. Machine Learning, 2018, pp. 941–949.

[49] J. Chen, T. Ma, and C. Xiao, “FastGCN: Fast learning with graph convolutional networks via importance sampling,” in Proc. Int. Conf. Learning Representations, 2018, pp. 1–15.

[50] W. Huang, T. Zhang, Y. Rong, and J. Huang, “Adaptive sampling towards fast graph representation learning,” in Proc. Advances in Neural Information Processing Systems, 2018, vol. 31, pp. 4558–4567.

[51] D. Zou, Z. Hu, Y. Wang, S. Jiang, Y. Sun, and Q. Gu, “Layer-dependent importance sampling for training deep and large graph convolutional networks,” Advances in Neural Information Processing Systems, vol. 32, pp. 11249–11259, 2019.

[52] W.-L. Chiang, X. Liu, S. Si, Y. Li, S. Bengio, and C.-J. Hsieh, “Cluster-GCN: An efficient algorithm for training deep and large graph convolutional networks,” in Proc. 25th ACM SIGKDD Int. Conf. Knowledge Discovery & Data Mining, 2019, pp. 257–266.

[53] H. Zeng, H. Zhou, A. Srivastava, R. Kannan, and V. Prasanna, “GraphSAINT: Graph sampling based inductive learning method,” in Proc. Int. Conf. Learning Representations, 2020, pp. 1–19.

[54] J. Bai, Y. Ren, and J. Zhang, “Ripple walk training: A subgraph-based training framework for large and deep graph neural network,” arXiv preprint arXiv: 2002.07206, 2020.

[55] H. Zeng, H. Zhou, A. Srivastava, R. Kannan, and V. Prasanna, “Accurate, efficient and scalable graph embedding,” in Proc. IEEE Int. Parallel and Distributed Processing Symposium (IPDPS), 2019, pp. 462–471.

[56] A. Li, Z. Qin, R. Liu, Y. Yang, and D. Li, “Spam review detection with graph convolutional networks,” in Proc. 28th ACM Int. Conf. Information and Knowledge Management, 2019, pp. 2703–2711.

[57] C. Zhang, D. Song, C. Huang, A. Swami, and N. V. Chawla, “Heterogeneous graph neural network,” in Proc. 25th ACM SIGKDD Int. Conf. Knowledge Discovery & Data Mining, 2019, pp. 793–803.

[58] Z. Hu, Y. Dong, K. Wang, and Y. Sun, “Heterogeneous graph transformer,” in Proc. Web Conf., 2020, pp. 2704–2710.

[59] H. Zhang and J. Zhang, “Text graph transformer for document classification,” in Proc. Conf. Empirical Methods in Natural Language Processing (EMNLP), 2020, pp. 8322–8327.

[60] P. Sen, G. Namata, M. Bilgic, L. Getoor, B. Galligher, and T. Eliassi-Rad, “Collective classification in network data,” AI Magazine, vol. 29, no. 3, pp. 93–106, 2008. doi: 10.1609/aimag.v29i3.2157

[61] M. Zitnik and J. Leskovec, “Predicting multicellular function through multi-layer tissue networks,” Bioinformatics, vol. 33, no. 14, pp. i190–i198, 2017. doi: 10.1093/bioinformatics/btx252

[62] J. McAuley, C. Targett, Q. Shi, and A. Van Den Hengel, “Image-based recommendations on styles and substitutes,” in Proc. 38th Int. ACM SIGIR Conf. Research and Development in Information Retrieval, 2015, pp. 43–52.

[63] T. Mikolov, I. Sutskever, K. Chen, G. S. Corrado, and J. Dean, “Distributed representations of words and phrases and their compositionality,” in Proc. Advances in Neural Information Processing Systems, 2013, pp. 3111–3119.

[64] Y. Bengio, J. Louradour, R. Collobert, and J. Weston, “Curriculum learning,” in Proc. 26th Annual Int. Conf. Machine Learning, 2009, pp. 41–48.

[65] C. Eksombatchai, P. Jindal, J. Z. Liu, Y. Liu, R. Sharma, C. Sugnet, M. Ulrich, and J. Leskovec, “PIXIE: A system for recommending 3+ billion items to 200+ million users in real-time,” in Proc. World Wide Web Conf., 2018, pp. 1775–1784.

[66] Z. Liu, Z. Wu, Z. Zhang, J. Zhou, S. Yang, L. Song, and Y. Qi, “Bandit samplers for training graph neural networks,” arXiv preprint arXiv: 2006.05806, 2020.

[67] G. Karypis and V. Kumar, “A fast and high quality multilevel scheme for partitioning irregular graphs,” SIAM Journal on Scientific Computing, vol. 20, no. 1, pp. 359–392, 1998. doi: 10.1137/S1064827595287997

[68] I. S. Dhillon, Y. Guan, and B. Kulis, “Weighted graph cuts without eigenvectors: A multilevel approach,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 29, no. 11, pp. 1944–1957, 2007. doi: 10.1109/TPAMI.2007.1115

[69] B. Ribeiro and D. Towsley, “Estimating and sampling graphs with multidimensional random walks,” in Proc. 10th ACM SIGCOMM Conf. Internet Measurement, 2010, pp. 390–403.

[70] J. Zhang, H. Zhang, C. Xia, and L. Sun, “Graph-BERT: Only attention is needed for learning graph representations,” arXiv preprint arXiv: 2001.05140, 2020.

[71] G. Li, M. Müller, A. Thabet, and B. Ghanem, “DeepGCNs: Can GCNs go as deep as CNNs?” in Proc. IEEE/CVF Int. Conf. Computer Vision, 2019, pp. 9267–9276.

[72] Q. Li, Z. Han, and X.-M. Wu, “Deeper insights into graph convolutional networks for semi-supervised learning,” in Proc. 32nd AAAI Conf. Artificial Intelligence, 2018, pp. 3538–3545.

[73] W. Cong, R. Forsati, M. Kandemir, and M. Mahdavi, “Minimal variance sampling with provable guarantees for fast training of graph neural networks,” in Proc. 26th ACM SIGKDD Int. Conf. Knowledge Discovery & Data Mining, 2020, pp. 1393–1403.

[74] Q. Cheng, M. Wen, J. Shen, D. Wang, and C. Zhang, “Towards a deep-pipelined architecture for accelerating deep GCN on a multi-FPGA platform,” in Proc. Int. Conf. Algorithms and Architectures for Parallel Processing, Springer, 2020, pp. 528–547.

[75] B. Zhang, H. Zeng, and V. Prasanna, “Hardware acceleration of large scale GCN inference,” in Proc. 31st IEEE Int. Conf. Application-Specific Systems, Architectures and Processors (ASAP), 2020, pp. 61–68.

[76] B. Zhang, H. Zeng, and V. Prasanna, “Accelerating large scale GCN inference on FPGA,” in Proc. 28th IEEE Annual Int. Symposium on Field-Programmable Custom Computing Machines (FCCM), 2020, p. 241.

[77] Y. Meng, S. Kuppannagari, and V. Prasanna, “Accelerating proximal policy optimization on CPU-FPGA heterogeneous platforms,” in Proc. 28th IEEE Annual Int. Symposium on Field-Programmable Custom Computing Machines (FCCM), 2020, pp. 19–27.

[78] H. Zeng and V. Prasanna, “GraphACT: Accelerating GCN training on CPU-FPGA heterogeneous platforms,” in Proc. ACM/SIGDA Int. Symposium on Field-Programmable Gate Arrays, 2020, pp. 255–265.

[79] T. Geng, A. Li, R. Shi, C. Wu, T. Wang, Y. Li, P. Haghi, A. Tumeo, S. Che, S. Reinhardt, and M. C. Herbordt, “AWB-GCN: A graph convolutional network accelerator with runtime workload rebalancing,” in Proc. 53rd Annual IEEE/ACM Int. Symposium on Microarchitecture (MICRO), IEEE, 2020, pp. 922–936.

[80] A. Auten, M. Tomei, and R. Kumar, “Hardware acceleration of graph neural networks,” in Proc. 57th ACM/IEEE Design Automation Conf. (DAC), IEEE, 2020, pp. 1–6.

[81] S. Liang, Y. Wang, C. Liu, L. He, H. Li, D. Xu, and X. Li, “EnGN: A high-throughput and energy-efficient accelerator for large graph neural networks,” IEEE Trans. Computers, vol. 70, no. 9, pp. 1511–1525, 2020.

[82] K. Kiningham, C. Re, and P. Levis, “GRIP: A graph neural network accelerator architecture,” arXiv preprint arXiv: 2007.13828, 2020.

[83] F. Zhang, X. Liu, J. Tang, Y. Dong, P. Yao, J. Zhang, X. Gu, Y. Wang, B. Shao, R. Li, and K. Wang, “OAG: Toward linking large-scale heterogeneous entity graphs,” in Proc. 25th ACM SIGKDD Int. Conf. Knowledge Discovery & Data Mining, 2019, pp. 2585–2595.

[84] R. Hussein, D. Yang, and P. Cudré-Mauroux, “Are meta-paths necessary? Revisiting heterogeneous graph embeddings,” in Proc. 27th ACM Int. Conf. Information and Knowledge Management, 2018, pp. 437–446.

[85] H.-C. Shin, H. R. Roth, M. Gao, L. Lu, Z. Xu, I. Nogues, J. Yao, D. Mollura, and R. M. Summers, “Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning,” IEEE Trans. Medical Imaging, vol. 35, no. 5, pp. 1285–1298, 2016. doi: 10.1109/TMI.2016.2528162

[86] K. Zhang, W. Zuo, Y. Chen, D. Meng, and L. Zhang, “Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising,” IEEE Trans. Image Processing, vol. 26, no. 7, pp. 3142–3155, 2017. doi: 10.1109/TIP.2017.2662206

[87] K. Zhang, W. Zuo, S. Gu, and L. Zhang, “Learning deep CNN denoiser prior for image restoration,” in Proc. IEEE Conf. Computer Vision and Pattern Recognition, 2017, pp. 3929–3938.

[88] Y. Rong, W. Huang, T. Xu, and J. Huang, “DropEdge: Towards deep graph convolutional networks on node classification,” in Proc. Int. Conf. Learning Representations, 2020, pp. 1–17.

[89] K. Oono and T. Suzuki, “Graph neural networks exponentially lose expressive power for node classification,” in Proc. Int. Conf. Learning Representations, 2020, pp. 1–37.

[90] A. Hasanzadeh, E. Hajiramezanali, S. Boluki, M. Zhou, N. Duffield, K. Narayanan, and X. Qian, “Bayesian graph neural networks with adaptive connection sampling,” in Proc. Int. Conf. Machine Learning, PMLR, 2020, pp. 4094–4104.

[91] S. Abu-El-Haija, B. Perozzi, A. Kapoor, N. Alipourfard, K. Lerman, H. Harutyunyan, G. Ver Steeg, and A. Galstyan, “MixHop: Higher-order graph convolutional architectures via sparsified neighborhood mixing,” in Proc. Int. Conf. Machine Learning, PMLR, 2019, pp. 21–29.

[92] S. Luan, M. Zhao, X.-W. Chang, and D. Precup, “Break the ceiling: Stronger multi-scale deep graph convolutional networks,” in Proc. Advances in Neural Information Processing Systems, 2019, vol. 32, pp. 10945–10955.

[93] M. Liu, H. Gao, and S. Ji, “Towards deeper graph neural networks,” in Proc. 26th ACM SIGKDD Int. Conf. Knowledge Discovery & Data Mining, 2020, pp. 338–348.

[94] H. Zeng, M. Zhang, Y. Xia, A. Srivastava, A. Malevich, R. Kannan, V. Prasanna, L. Jin, and R. Chen, “Deep graph neural networks with shallow subgraph samplers,” arXiv preprint arXiv: 2012.01380, 2020.