Volume 6

Issue 6

Volume 6

Issue 6

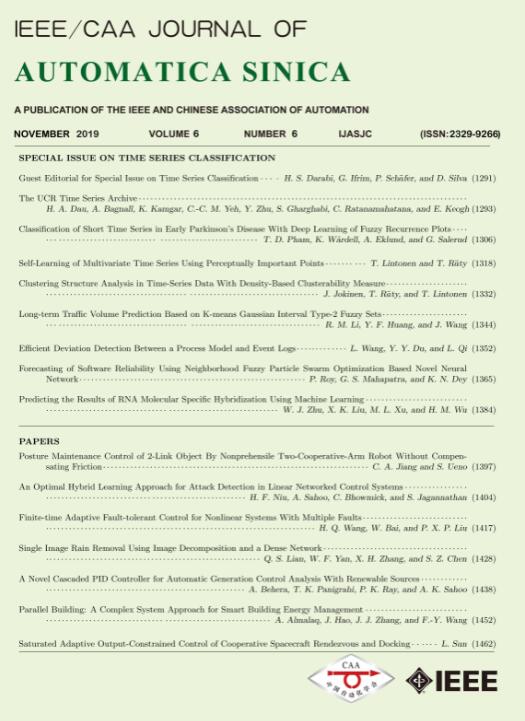

IEEE/CAA Journal of Automatica Sinica

| Citation: | Juho Jokinen, Tomi Räty and Timo Lintonen, "Clustering Structure Analysis in Time-Series Data With Density-Based Clusterability Measure," IEEE/CAA J. Autom. Sinica, vol. 6, no. 6, pp. 1332-1343, Nov. 2019. doi: 10.1109/JAS.2019.1911744 |

| [1] |

A. K. Jain and R. C. Dubes, Algorithms for Clustering Data. Upper Saddle River, NJ, USA: Prentice-Hall, Inc., 1988.

|

| [2] |

J. W. Tukey, Exploratory Data Analysis. Reading, Mass, USA: Addison-Wesley, 1977.

|

| [3] |

K. P. Murphy, Machine Learning: A Probabilistic Perspective. Cambridge, Mass, USA: The MIT Press, 2012.

|

| [4] |

C. Hennig, " What are the true clusters?” Pattern Recognit. Lett., vol. 64, pp. 53–62, Oct. 2015. doi: 10.1016/j.patrec.2015.04.009

|

| [5] |

V. Estivill-Castro, " Why so many clustering algorithms: a position paper,” ACM SIGKDD Explorations Newsl., vol. 4, no. 1, pp. 65–75, Jun. 2002. doi: 10.1145/568574.568575

|

| [6] |

A. K. Jain, M. N. Murty, and P. J. Flynn, " Data clustering: a review,” ACM Comput. Surv., vol. 31, no. 3, pp. 264–323, Sep. 1999. doi: 10.1145/331499.331504

|

| [7] |

W. Stuetzle, " Estimating the cluster tree of a density by analyzing the minimal spanning tree of a sample,” J. Classif., vol. 20, no. 1, pp. 25–47, May 2003. doi: 10.1007/s00357-003-0004-6

|

| [8] |

J. Goldberger and S. Roweis, " Hierarchical clustering of a mixture model,” in Proc. 17th Int. Conf. Neural Information Processing Systems, Vancouver, British Columbia, Canada, 2004, pp. 505–512.

|

| [9] |

M. Ester, H. P. Kriegel, J. Sander, and X. W. Xu, " A density-based algorithm for discovering clusters in large spatial databases with noise,” in Proc. 2nd Int. Conf. Knowledge Discovery and Data Mining, Portland, Oregon, 1996, pp. 226–231.

|

| [10] |

B. P. Kent, A. Rinaldo, and T. Verstynen, " DeBaCl: a python package for interactive density-based clustering,” 2013.

|

| [11] |

G. Menardi, " A review on modal clustering,” Int. Stat. Rev., vol. 84, no. 3, pp. 413–433, Dec. 2016. doi: 10.1111/insr.12109

|

| [12] |

J. A. Hartigan, Clustering Algorithms. New York, NY, USA: John Wiley & Sons, Inc., 1975.

|

| [13] |

H. P. Kriegel, P. Kröger, J. Sander, and A. Zimek, " Density-based clustering,” WIREs:Data Min. Knowl. Discovery, vol. 1, no. 3, pp. 231–240, May–Jun. 2011. doi: 10.1002/widm.30

|

| [14] |

M. Rosenblatt, " Remarks on some nonparametric estimates of a density function,” Ann. Math. Stat., vol. 27, no. 3, pp. 832–837, Sep. 1956. doi: 10.1214/aoms/1177728190

|

| [15] |

E. Parzen, " On estimation of a probability density function and mode,” Ann. Math. Stat., vol. 33, no. 3, pp. 1065–1076, Sep. 1962. doi: 10.1214/aoms/1177704472

|

| [16] |

D. O. Loftsgaarden and C. P. Quesenberry, " A nonparametric estimate of a multivariate density function,” Ann. Math. Stat., vol. 36, no. 3, pp. 1049–1051, Jun. 1965. doi: 10.1214/aoms/1177700079

|

| [17] |

E. Fix and J. L. Jr. Hodges, " Discriminatory analysis-nonparametric discrimination: consistency properties,” U.S. Air Force School of Aviation Medicine, Randolph Field, Texas, USA, Tech. Rep. 4, 1951.

|

| [18] |

X. Y. Wang, A. Mueen, H. Ding, G. Trajcevski, P. Scheuermann, and E. Keogh, " Experimental comparison of representation methods and distance measures for time series data,” Data Min. Knowl. Discovery, vol. 26, no. 2, pp. 275–309, Mar. 2013. doi: 10.1007/s10618-012-0250-5

|

| [19] |

G. Biau and L. Devroye, Lectures on the Nearest Neighbor Method. Cham, Germany: Springer, 2015.

|

| [20] |

R. E. Bellman, Adaptive Control Processes: A Guided Tour. Princeton, NJ, USA: Princeton University Press, 1961.

|

| [21] |

R. J. G. B. Campello, D. Moulavi, A. Zimek, and J. Sander, " Hierarchical density estimates for data clustering, visualization, and outlier detection,” ACM Trans. Knowl. Discovery Data, vol. 10, no. 1, pp. 5, Jul. 2015.

|

| [22] |

R. Azencott, V. Muravina, R. Hekmati, W. Zhang, and M. Paldino, " Automatic clustering in large sets of time series,” in Contributions to Partial Differential Equations and Applications, B. N. Chetverushkin, W. Fitzgibbon, Y. A. Kuznetsov, P. Neittaanmaki, J. Periaux, and O. Pironneau, Eds. Cham, Germany: Springer, 2019, pp. 65–75.

|

| [23] |

D. C. Kozen, The Design and Analysis of Algorithms. New York, USA: Springer-Verlag, 1992.

|

| [24] |

Y. L. Hu and X. W. Liu, " Optimization of grouping evacuation strategy in high-rise building fires based on graph theory and computational experiments,” IEEE/CAA J. Autom. Sinica, vol. 5, no. 6, pp. 1104–1112, Nov. 2018. doi: 10.1109/JAS.2018.7511231

|

| [25] |

K. Chaudhuri, S. Dasgupta, S. Kpotufe, and U. von Luxburg, " Consistent procedures for cluster tree estimation and pruning,” IEEE Trans. Inf. Theory, vol. 60, no. 12, pp. 7900–7912, Dec. 2014. doi: 10.1109/TIT.2014.2361055

|

| [26] |

L. Wasserman, " Topological data analysis,” Ann. Rev. Stat. Appl., vol. 5, no. 1, pp. 501–532, Mar. 2018. doi: 10.1146/annurev-statistics-031017-100045

|

| [27] |

T. W. Liao, " Clustering of time series data—a survey,” Pattern Recognit., vol. 38, no. 11, pp. 1857–1874, Nov. 2005. doi: 10.1016/j.patcog.2005.01.025

|

| [28] |

C. A. Ratanamahatana and E. Keogh, " Everything you know about dynamic time warping is wrong,” in Proc. 3rd Workshop on Mining Temporal and Sequential Data, Seattle, WA, USA, 2004.

|

| [29] |

F. Petitjean, G. Forestier, G. I. Webb, A. E. Nicholson, Y. P. Chen, and E. Keogh, " Faster and more accurate classification of time series by exploiting a novel dynamic time warping averaging algorithm,” Knowl. Inf. Syst., vol. 47, no. 1, pp. 1–26, Apr. 2016. doi: 10.1007/s10115-015-0878-8

|

| [30] |

S. Fröhwirth-Schnatter and S. Kaufmann, " Model-based clustering of multiple time series,” J. Bus. Econ. Stat., vol. 26, no. 1, pp. 78–89, Jan. 2008. doi: 10.1198/073500107000000106

|

| [31] |

Y. N. Wang and R. S. Tsay, " Clustering multiple time series with structural breaks,” J. Time Ser. Anal., vol. 40, no. 2, pp. 182–202, Mar. 2019. doi: 10.1111/jtsa.12434

|

| [32] |

E. J. Keogh and M. J. Pazzani, " Scaling up dynamic time warping for datamining applications,” in Proc. 6th ACM SIGKDD Int. Conf. Knowledge Discovery and Data Mining, Boston, USA, 2000, pp. 285pozhehap-289.

|

| [33] |

E. Keogh and C. A. Ratanamahatana, " Exact indexing of dynamic time warping,” Knowl. Inf. Syst., vol. 7, no. 3, pp. 358–386, Mar. 2005. doi: 10.1007/s10115-004-0154-9

|

| [34] |

M. Shokoohi-Yekta, B. Hu, H. X. Jin, J. Wang, and E. Keogh, " Generalizing DTW to the multi-dimensional case requires an adaptive approach,” Data Min. Knowl. Discovery, vol. 31, no. 1, pp. 1–31, Jan. 2017. doi: 10.1007/s10618-016-0455-0

|

| [35] |

H. Sakoe and S. Chiba, " Dynamic programming algorithm optimization for spoken word recognition,” IEEE Trans. Acoust.,Speech,Signal Process., vol. 26, no. 1, pp. 43–49, Feb. 1978. doi: 10.1109/TASSP.1978.1163055

|

| [36] |

F. Y. Wang, J. Zhang, Q. L. Wei, X. H. Zheng, and L. Li, " PDP: parallel dynamic programming,” IEEE/CAA J. Autom. Sinica, vol. 4, no. 1, pp. 1–5, Jan. 2017. doi: 10.1109/JAS.2017.7510310

|

| [37] |

Y. P. Chen, B. Hu, E. J. Keogh, and G. E. A. P. A. Batista, " DTW-D: time series semi-supervised learning from a single example,” in Proc. 19th ACM SIGKDD Int. Conf. Knowledge Discovery and Data Mining, Chicago, USA, 2013.

|

| [38] |

J. A. Hartigan and M. A. Wong, " Algorithm as 136: a k-means clustering algorithm,” J. Roy. Stat. Soc. Ser. C (Appl. Stat.)

|

| [39] |

M. Ackerman and S. Ben-David, " Clusterability: a theoretical study,” in Proc. 12th Int. Conf. Artificial Intelligence and Statistics, Clearwater Beach, USA, 2009, pp. 1–8.

|

| [40] |

E. Nowakowska, J. Koronacki, and S. Lipovetsky, " Clusterability assessment for Gaussian mixture models,” Appl. Math. Comput., vol. 256, pp. 591–601, Apr. 2015.

|

| [41] |

S. Epter, M. Krishnamoorthy, and M. J. Zaki, " Clusterability detection and cluster initialization,” in Proc. Workshop on Clustering High Dimensional Data and Its Applications at the 2nd SIAM Int. Conf. Data Mining, Arlington, USA, 2002, pp. 47–58.

|

| [42] |

S. Even, Graph Algorithms. 2nd ed. Cambridge, UK: Cambridge University Press, 2011.

|

| [43] |

A. Adolfsson, M. Ackerman, and N. C. Brownstein, " To cluster, or not to cluster: an analysis of clusterability methods,” Pattern Recognit., vol. 88, pp. 13–26, Apr. 2019. doi: 10.1016/j.patcog.2018.10.026

|

| [44] |

B. W. Silverman, " Using kernel density estimates to investigate multimodality,” J. Roy. Stat. Soc.,Ser. B:Methodol., vol. 43, no. 1, pp. 97–99, Sep. 1981.

|

| [45] |

J. A. Hartigan and P. M. Hartigan, " The dip test of unimodality,” Ann. Stat., vol. 13, no. 1, pp. 70–84, Mar. 1985. doi: 10.1214/aos/1176346577

|

| [46] |

R. L. Wasserstein and N. A. Lazar, " The ASA statement on p-values: Context, process, and purpose,” Am. Stat., vol. 70, no. 2, pp. 129–133, Mar. 2016. doi: 10.1080/00031305.2016.1154108

|

| [47] |

B. Hopkins and J. G. Skellam, " A new method for determining the type of distribution of plant individuals,” Ann. Bot., vol. 18, no. 2, pp. 213–227, Apr. 1954. doi: 10.1093/oxfordjournals.aob.a083391

|

| [48] |

S. Theodoridis and K. Koutroumbas, Pattern recognition. 4th ed. Orlando, FL, USA: Academic Press, Inc., 2009.

|

| [49] |

M. J. Zaki and W. Jr. Meira, Data Mining and Analysis: Fundamental Concepts and Algorithms. New York, NY, USA: Cambridge University Press, 2014.

|

| [50] |

A. Bagnall, J. Lines, J. Hills, and A. Bostrom, " Time-series classification with cote: The collective of transformation-based ensembles,” IEEE Trans. Knowl. Data Eng., vol. 27, no. 9, pp. 2522–2535, Sep. 2015. doi: 10.1109/TKDE.2015.2416723

|

| [51] |

R. J. G. B. Campello, D. Moulavi, and J. Sander, " Density-based clustering based on hierarchical density estimates,” in Proc. 17th Pacific-Asia Conf. Knowledge Discovery and Data Mining, Gold Coast, Australia, 2013, pp. 160–172.

|

| [52] |

L. McInnes and J. Healy, " Accelerated hierarchical density based clustering,” in Proc. IEEE Int. Conf. Data Mining Workshops, New Orleans, USA, 2017, pp. 33–42.

|

| [53] |

J. A. Hartigan, " Estimation of a convex density contour in two dimensions,” J. Am. Stat. Assoc., vol. 82, no. 397, pp. 267–270, Mar. 1987. doi: 10.1080/01621459.1987.10478428

|

| [54] |

D. W. Müller and G. Sawitzki, " Excess mass estimates and tests for multimodality,” J. Am. Stat. Assoc., vol. 86, no. 415, pp. 738–746, Sep. 1991.

|

| [55] |

K. Chaudhuri and S. Dasgupta, " Rates of convergence for the cluster tree,” in Proc. 24th Ann. Conf. Neural Information Processing Systems, Vancouver, British Columbia, Canada, 2010, pp. 343–351.

|

| [56] |

D. Birant and A. Kut, " ST-DBSCAN: An algorithm for clustering spatial–temporal data,” Data Knowl. Eng., vol. 60, no. 1, pp. 208–221, Jan. 2007. doi: 10.1016/j.datak.2006.01.013

|

| [57] |

L. McInnes, J. Healy, and S. Astels, " hdbscan: Hierarchical density based clustering,” J. Open Source Softw., vol. 2, no. 11, pp. 205, Mar. 2017. doi: 10.21105/joss.00205

|

| [58] |

K. Johnsson, M. Linderoth, and M. Fontes, " What is a unimodal cell population? using statistical tests as criteria for unimodality in automated gating and quality control,” Cytometry Part A, vol. 91, no. 9, pp. 908–916, Sep. 2017. doi: 10.1002/cyto.a.23173

|

| [59] |

Q. Li and J. S. Racine, Nonparametric Econometrics: Theory and Practice. Princeton, USA: Princeton University Press, 2007.

|

| [60] |

C. Hennig, M. Meila, F. Murtagh, and R. Rocci, Handbook of Cluster Analysis, 1st Ed. CRC Press, Dec., 2015.

|

| [61] |

E. Keogh and S. Kasetty, " On the need for time series data mining benchmarks: a survey and empirical demonstration,” Data Min. Knowl. Discovery, vol. 7, no. 4, pp. 349–371, Oct. 2003. doi: 10.1023/A:1024988512476

|

| [62] |

T. Rakthanmanon, B. Campana, A. Mueen, G. Batista, B. Westover, Q. Zhu, J. Zakaria, and E. Keogh, " Searching and mining trillions of time series subsequences under dynamic time warping,” in Proc. 18th ACM SIGKDD Int. Conf. Knowledge Discovery and Data Mining, Beijing, China, 2012, pp. 262–270.

|

| [63] |

G. E. A. P. A. Batista, E. J. Keogh, O. M. Tataw, and V. M. A. de Souza, " CID: an efficient complexity-invariant distance for time series,” Data Min. Knowl. Discovery, vol. 28, no. 3, pp. 634–669, May 2014. doi: 10.1007/s10618-013-0312-3

|

| [64] |

A. Ultsch, " Clustering with SOM: U*c,” in Proc. 5th Workshop on Self-Organizing Maps, Paris, France, 2005, pp. 75–82.

|

| [65] |

F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay, " Scikit-learn: Machine learning in Python,” J. Mach. Learn. Res., vol. 12, pp. 2825–2830, Feb. 2011.

|

| [66] |

H. A. Dau, E. Keogh, K. Kamgar, C. C. M. Yeh, Y. Zhu, S. Gharghabi, C. A. Ratanamahatana, Y. P. Chen, B. Hu, N. Begum, A. Bagnall, A. Mueen, and G. Batista, " UCR time series classification archive,” 2018, [Online]. Available: https://www.cs.ucr.edu/~eamonn/time_series_data_2018/BriefingDocument2018.pdf

|

| [67] |

A. Manninen, T. Kääriäinen, T. Parviainen, S. Buchter, M. Heiliö, and T. Laurila, " Long distance active hyperspectral sensing using high-power near-infrared supercontinuum light source,” Opt. Express, vol. 22, no. 6, pp. 7172–7177, Mar. 2014. doi: 10.1364/OE.22.007172

|

| [68] |

F. R. S. Karl Pearson, " LIII. on lines and planes of closest fit to systems of points in space,” London,Edinburgh,Dublin Philosoph. Mag. J. Sci., vol. 2, no. 11, pp. 559–572, Nov. 1901. doi: 10.1080/14786440109462720

|

| [69] |

H. Hotelling, " Analysis of a complex of statistical variables into principal components,” J. Edu. Psychol., vol. 24, no. 6, pp. 417–441, 1933. doi: 10.1037/h0071325

|

| [70] |

I. T. Jollife, Principal Component Analysis. 2nd ed. New York, USA: Springer Verlag, 2002.

|

| [71] |

J. Q. Gu, H. F. Hu, and H. X. Li, " Local robust sparse representation for face recognition with single sample per person,” IEEE/CAA J. Autom. Sinica, vol. 5, no. 2, pp. 547–554, Mar. 2018. doi: 10.1109/JAS.2017.7510658

|