2. Research Center of Computational Experiments and Parallel Systems, The National University of Defense Technology, Changsha 410073, China

3. with the Department of Electrical and Computer Engineering, Ritchie School of Engineering and Computer Science, University of Denver, Denver, CO 80210, USA

4. the Department of Computer Science and Engineering, University of Minnesota, Minneapolis, MN 55414, USA

5. with the Qingdao Academy of Intelligent Industries (QAII), Qingdao, Shandong, China, and SKL-MCCS, CASIA, Beijing 10019, China

6. with the Department of Electrical and Computer Engineering, Colorado State University, Fort Collins, CO 80523, USA

The match between AlphaGo and Lee Sedol is a history-making event and a milestone in the quest for artificial intelligence (AI). The computer Go program AlphaGo, developed by DeepMind, won 4:1 in a five-game match against one of the world's best players, Lee Sedol of Korea. The victory came considerably sooner than anyone had expected and astonished many in the AI field. Nolan Bushnell, the founder of Atari and a Go guru himself, was deeply impressed by AlphaGo's feat: "Go is the most important game in my life," he said. "It is the only game that truly balances the left and right sides of the brain. The fact that it has now yielded to computer technology is massively important"[1]. The defeat of a human champion by a machine has also generated huge public interest in AI technology around the world, especially in China, Korea, the U.S., and the U.K. To many people, IT has taken on a new meaning from this moment: it stands not just for Information Technology or Industrial Technology but now also for Intelligent Technology, and the age of new IT is coming[2].

The game of Go originated in ancient China, and its earliest documentation dates back to the Western Zhou Dynasty (1046 BC to 771 BC). It was introduced to the Korean Peninsula and Japan during 420 AD to 589 AD and 618 AD to 907 AD, respectively. The rules of Go are extremely simple; its complexity, however, is prohibitively high. The number of theoretically possible games is on the order of $10^{700}$[3], which defeats any attempt to apply brute-force computing alone and demands real intelligence in Go programs that play against humans. Furthermore, victory in Go relies heavily on fuzzy and objective interpretation of the overall game situation, such as thickness, liberty and potential, which creates tremendous obstacles for computers. This is why Go is so challenging and attractive to AI researchers.
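To give a feel for the scale involved, the following sketch computes two rough, commonly quoted bounds. It is only an illustration under stated assumptions: the branching factor of about 250 and game length of about 150 are the approximate values used in [8], the $3^{361}$ count ignores legality of positions, and the function names are hypothetical.

```python
import math

BOARD_POINTS = 19 * 19  # 361 intersections on a standard board

def log10_board_configurations(points: int = BOARD_POINTS) -> float:
    """log10 of 3**points: every point is empty, black, or white (legal or not)."""
    return points * math.log10(3)

def log10_game_tree_size(branching: float, depth: int) -> float:
    """log10 of branching**depth, a crude count of possible move sequences."""
    return depth * math.log10(branching)

if __name__ == "__main__":
    # Assumed values: about 250 legal moves per turn, about 150 moves per game.
    print(f"board configurations <= 10^{log10_board_configurations():.1f}")
    print(f"move sequences       ~  10^{log10_game_tree_size(250, 150):.1f}")
```

Even these conservative figures exceed anything that enumeration could cover, and the estimate grows rapidly as longer games are admitted.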

However, very few studies were undertaken and little progress was made in AI for Go before the 1990s[4,5,6,7]. Prior to 2015, the best level that Go programs could reach was merely amateur dan[8,9,10,11,12]. A breakthrough came in October 2015, when AlphaGo defeated the European Go champion Fan Hui, a professional two dan, 5:0[8]. Today many top players believe that AlphaGo is above nine dan, the highest professional level in Go.

Here we would like to consider the impact and significance of AlphaGo's success from a different perspective, i.e., in terms of the fundamental issue of complexity and intelligence. Similar to the Church-Turing Thesis in the early history of computing machinery, we believe that an AlphaGo Thesis can be postulated for intelligent technology, and that it will play an important role in intelligent industries and smart societies.

Ⅱ. IMPACT OF ALPHAGO: THE COMING ERA OF NEW IT

In terms of technical innovation, AlphaGo did not create new AI concepts or methods. Its greatest contribution lies instead in the massive integration and implementation of recent data-driven AI approaches, especially deep learning (DL) and advanced search techniques, which demonstrated to the world the power of these technological advances. For this reason, the significance of AlphaGo's victory goes beyond technology and is more psychological in nature.

Deep learning based on deep neural networks (DNN) has played a vital role in AlphaGo's success, but the features learned automatically by DNN cannot be understood intuitively by humans. How should the feature spaces generated by the DL process be interpreted? What are their characteristics in terms of reliability, stability and convergence?

To a large extent, the incomprehensibility of the features and their spaces might be precisely the reason why AlphaGo possesses the intelligence to beat human players in Go. This also verifies the belief of many AI experts that intelligence must emerge from the process of computing and interacting. As stated by one of AI's founding fathers, the late MIT professor Marvin Minsky, "What magical trick makes us intelligent? The trick is that there is no trick. The power of intelligence stems from our vast diversity, not from any single perfect principle"[13].

Compared with other Go programs, AlphaGo is unique in its ability to relate the immediate action to the final outcome of the game through its value network, which provides a mechanism for closed-loop feedback in decision-making. This is very similar to adaptive dynamic programming (ADP) developed in control[14,15,16], except that ADP uses ordinary neural networks instead of DNN.

In addition, the data generated and the improvements obtained by reinforcement learning through a huge number of self-play games are extremely impressive. Indeed, it is possible that the number of self-play games played by AlphaGo is even larger than the number of games played by the entire human race since the creation of Go, a volume unimaginable for any single human being, past or future[17].

Obviously, the AlphaGo approach can be applied in many areas other than the game of Go. Clearly, after the "old" IT (Industrial Technology) and the "past" IT (Information Technology), human society will enter the era of the "new" IT (Intelligent Technology), represented mainly by AI and robotics. AlphaGo thus points to an updated notion of IT, i.e.,

$$\text{IT} = \text{Old IT} + \text{Past IT} + \text{New IT}. \tag{1}$$

Correspondingly, we have to move from Big Materials, Big Manufacturing and Big Markets with Cyber-Physical Systems (CPS) to Big Data, Big Computing and Big Decision with Cyber-Physical-Social Systems (CPSS).

However, to complete this transition, we need a paradigm shift in our way of thinking. In the era of old IT, we needed confirmation by causality from science before acting. In the era of new IT, confirmation by association from data and computing is sufficient for making decisions. Such computational thinking, supported by new IT, will lead to a computational culture and eventually pave the road to a computational and smart society.

Ⅲ. GO VS. COMPLEXITY AND INTELLIGENCE: HISTORICAL RETROSPECTIVE

Starting from Aristotle's formal logic, Leibniz's binary numbers and symbolic thought, Boole's algebra and laws of thought, and Babbage's difference and analytical engines, many outstanding scholars and scientists before the 20th century strove to build machines with human intelligence. Over 100 years ago, new efforts were inspired and intensified by Hilbert's problems and his program for the axiomatization and mechanization of mathematics. Among them, Russell and Whitehead's Principia Mathematica (PM) was the most successful. Under the direct and heavy influence of PM, Wiener, McCulloch and Pitts worked individually as well as jointly on the development of cybernetics, brain modeling and artificial neurons, which laid the foundation of cognitive science, artificial intelligence and computational intelligence and, in particular, opened the road to the deep learning employed by AlphaGo[18].

However, in 1930, Gödel's incompleteness theorems smashed Hilbert's grand plan as well as Russell and Whitehead's vision for mathematics in PM. In 1936, Turing reformulated Gödel's results on the limits of proof and computation using his simple device (the Turing machine), and showed that a Turing machine is able to perform any conceivable computation for all representable programs. In this process, Turing also solved Hilbert's Entscheidungsproblem, the decision problem, by showing that the halting problem for Turing machines is undecidable. Turing's work was published shortly after Church's equivalent work using his more elaborate $\lambda$-calculus. The concepts of Turing's computability and Church's effective calculability led to the Church-Turing Thesis, which postulates that all representable functions can be calculated by Turing machines. Inspired by the Church-Turing Thesis and assisted by some of Wiener's ideas[18], von Neumann designed the Electronic Discrete Variable Automatic Computer (EDVAC), one of the earliest electronic computers, and established the von Neumann architecture that is still used by modern computers today.

In 1956, Wiener's colleagues at MIT, especially Oliver Selfridge, one of Wiener's graduate students, helped organize the first workshop on cognitive science at MIT, and later that summer Selfridge participated in the Dartmouth conference that officially launched the AI field. Although Wiener did not personally take part in those activities, he still exerted a great influence[18]. Unfortunately, the idea of feedback, central to his cybernetics, did not take on a significant role in AI.

Very soon, the quest for intelligence met the complexity of decision-making, and people found that the Turing machine is too unrealistic to be useful for modeling any practical decision-making process[19,20,21,22]. In the late 1950s and throughout the 1960s, research efforts shifted from Turing machines to finite automata and nets[21,22,23], and significant progress was made on the complexity of decision-making problems with automata. In 1959, Rabin and Scott pioneered the field of computational complexity for decision problems with non-deterministic finite state automata[23,24]. For this work, they received the A.M. Turing Award in 1976. In 1971, Cook advanced their work on computational complexity and laid the foundation for the theory of NP-completeness, for which he received the A.M. Turing Award in 1982[25]. Although these studies advanced our understanding of complexity significantly, not much progress was made in actual computation in the quest for optimization and intelligence. In an attempt to deal with the complexity of constructing intelligent machines, the concept of $\epsilon$-computational complexity, based on entropy and Kolmogorov's measure, was introduced by considering the specific computational resources available[26]; however, no further investigation has been made since.

In the 1990s, significant efforts were launched to deal with NP-hard or NP-complete problems using neural networks, evolutionary computing, and other methods in computational intelligence[27,28,29,30,31]. However, these investigations revealed that even finding approximate solutions to NP-hard problems by neural networks is still hard.

From the perspective of the historical development of AI, the success of AlphaGo is a necessity arising out of fortuity: it is the natural result of combining the efforts and wisdom of talents from various fields. AlphaGo uses two types of deep networks, policy networks and value networks, to create a closed-loop mechanism that evaluates the overall situation and optimizes the current action, which bears out Wiener's vision of feedback in cybernetics. The deep neural networks that AlphaGo uses can be traced back to the era of Wiener, McCulloch and Pitts. Deep learning is a great advance built on the achievements in neural networks from the 1950s to the 1990s, and it also indicates that neural networks need to be "deep" to be effective against the complexity of decision-making problems. AlphaGo uses a heuristic search algorithm, Monte Carlo tree search, to search its strategy space, which corresponds to complexity theory and more recent computational research on tackling complex problems. One more essential element of AlphaGo that should not be overlooked is large-scale parallel computing based on CPUs and GPUs. It is the latest development reflecting the nature of the Church-Turing Thesis, and it provides the foundation for bringing deep learning, search and feedback mechanisms to the real world. With the massive integration of all these elements from AI history, AlphaGo succeeded in attaining intelligence in Go. One question remains, however: can AlphaGo's success be replicated in other AI domains, and will it eventually lead to the coming of the true era of AI?
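The interplay described above (a policy prior that narrows the search, a value estimate that links a position to the final outcome, and Monte Carlo tree search that combines the two) can be illustrated on a toy game. The sketch below is not AlphaGo's implementation: it plays Nim (take 1 to 3 stones, whoever takes the last stone wins), uses a uniform prior as a stand-in for a policy network, a single random playout as a stand-in for a value network, and a PUCT-style selection rule with an assumed constant `c_puct`. All names are hypothetical.

```python
import math
import random

def legal_moves(stones):
    return [m for m in (1, 2, 3) if m <= stones]

def policy_prior(stones):
    """Stand-in for a policy network: uniform prior over legal moves."""
    moves = legal_moves(stones)
    return {m: 1.0 / len(moves) for m in moves}

def value_estimate(stones, rng):
    """Stand-in for a value network: one random playout from a non-terminal
    position. Returns +1 if the player to move wins the playout, else -1."""
    player = 0
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return 1.0 if player == 0 else -1.0
        player ^= 1
    return -1.0  # only reached if called with no stones left

class Node:
    def __init__(self, stones, prior):
        self.stones = stones
        self.prior = prior
        self.visits = 0
        self.value_sum = 0.0  # from the view of the player to move here
        self.children = {}

    def q(self):
        return self.value_sum / self.visits if self.visits else 0.0

def select_child(node, c_puct=1.5):
    """PUCT-style rule: exploit low child value (bad for the opponent),
    explore moves with high prior and few visits."""
    sqrt_total = math.sqrt(node.visits)
    def score(item):
        _, child = item
        return -child.q() + c_puct * child.prior * sqrt_total / (1 + child.visits)
    return max(node.children.items(), key=score)

def simulate(node, rng):
    """One simulation; returns the value for the player to move at `node`."""
    if node.stones == 0:
        value = -1.0  # the opponent just took the last stone
    elif not node.children:
        for move, p in policy_prior(node.stones).items():
            node.children[move] = Node(node.stones - move, p)
        value = value_estimate(node.stones, rng)
    else:
        _, child = select_child(node)
        value = -simulate(child, rng)  # feedback: flip sign between players
    node.visits += 1
    node.value_sum += value
    return value

def best_move(stones, simulations=2000, seed=0):
    rng = random.Random(seed)
    root = Node(stones, 1.0)
    for _ in range(simulations):
        simulate(root, rng)
    return max(root.children.items(), key=lambda kv: kv[1].visits)[0]
```

In Nim the winning strategy is to leave the opponent a multiple of four stones, so `best_move(5)` is expected to settle on taking one stone. The point of the sketch is the backup step in `simulate`, where the outcome estimate is fed back to every action on the path, which is the closed-loop evaluation the paragraph refers to.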

Ⅳ. FROM CHURCH-TURING THESIS TO ALPHAGO THESIS AND BEYOND

Gödel's incompleteness theorems completely smashed the hope, postulated by Hilbert, Russell and Whitehead, that a mechanical system could derive all mathematical truth. Although Turing provided a "machine" that can perform any conceivable computation for all representable programs, its "halting problem", a decision problem, remains undecidable. Subsequent complexity research gave us a deep understanding of the structure of decision problems, such as the classes NP, NP-complete and co-NP. In general, however, enumeration, or "brute force" computation, seems to be the only means of search and decision-making, and it is powerless against complex problems that exhibit "combinatorial explosion" and the "curse of dimensionality". Holland's genetic algorithms (GA) and later computational methods such as evolutionary computation (EC) achieved considerable success in decision optimization and complexity problems, but so far they have not shown the ability to solve problems whose complexity reaches the level of intelligence required by AI.

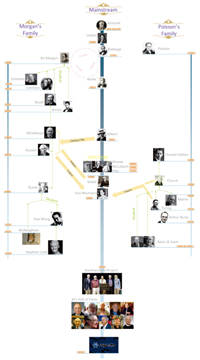

Complexity is the source of intelligence. Pierre Teilhard de Chardin viewed evolution as a process resulting from increasing complexity[32]; following this argument, intelligence is computation with increasing complexity! The nature of complexity is the unity of contradiction, and Go is the symbol of the unity of contradiction, making it a perfect game of complexity (Fig. 1). Perhaps we can find a general solution to all practical computational complexity problems by analyzing the structure and algorithms employed by AlphaGo. This is the objective of establishing the AlphaGo Thesis[17].

Fig. 1. A game of complexity and a symbol for unity of contradiction.

Fig. 2. Historical milestones in AI: A retrospective from two academic families.

Specifically, the conjecture of the AlphaGo Thesis states:

The AlphaGo Thesis. A decision problem for intelligence is tractable by a human being with limited resources if and only if it is tractable by an AlphaGo-like program.

Here, AlphaGo-like programs are computer programs that use system architectures, methods and resources similar to those of AlphaGo. Deep learning (DL) and Monte Carlo tree search (MCTS) are key to AlphaGo: deep neural networks provide a sufficient number of parameters for AlphaGo to carry out the complex computation needed for human-level intelligence on specific tasks. This is achieved through their capacity for reduction and learning in the decision space and in the search process.

In fact, if the number of adjustable parameters is large enough and the methods and procedures for learning and searching are effective, there is no need to be restricted to DNN, DL or MCTS. One could certainly work out other equivalent models and methods to duplicate the success of AlphaGo. Therefore, we can establish the Extended AlphaGo Thesis.

The Extended AlphaGo Thesis. A decision problem is tractable by a human being with limited resources if and only if it is tractable by a network-like system with sufficient numbers of adjustable parameters.

More discussion of this can be found in [17]. In the next section, we propose a deep network that can be readily understood by a human being, yet might be as useful for decision-making as DNN are for DL.

Ⅴ. DEEP RULE-BASED NETWORKS FOR DECISION AND EVALUATION

AlphaGo uses DNN to construct its policy networks and value networks; however, the meaning of the feature extraction procedure performed by DNN is difficult to understand. Earlier works[33,34,35] provided a method that embeds a knowledge architecture into neural networks. It used fuzzy logic and genetic evolutionary computing to derive learning algorithms and methods for eliminating inapplicable rules or generating new rules[11,12,36]. Based on this, the deep rule-based network (DRN) is shown in Fig. 3, where the Classification Network classifies the input vector $x$ and generates $M$ patterns; the Association Network associates the patterns with $K$ decision rules; through the Deduction Network, $N$ patterns are designated as the input to the next stage; and finally, the Generation Network generates the output vector $y$. We do not have to specify how the Classification, Association, Deduction and Generation Networks are implemented: each might be a convolutional neural network (CNN), deep belief network (DBN), stacked autoencoder (SAE), recursive neural network (RNN), or another form of network. The nodes $S_m$, $R_k$ and $U_n$, however, can be interpreted as the following set of fuzzy logic rules:

$$\text{Rule-}\mathbf{r}: \text{ If } x \text{ is } S_r, \text{ then } y \text{ is } U_r, \quad 1 \le r \le M \times N \tag{2}$$

where $S_r \subset \{S_1,\ldots,S_M\}$ and $U_r \subset \{U_1,\ldots,U_N\}$ denote the input pattern and the output pattern in rule $\textbf{r}$, respectively.
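As a minimal illustration of how a rule set of the form (2) could be evaluated on simple hardware, the sketch below represents each input pattern $S_r$ by a membership function and each output pattern $U_r$ by a representative value. The triangular membership functions, the weighted-average defuzzification, and all names are assumptions made for this example; the text does not prescribe them.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Membership = Callable[[float], float]

def triangular(left: float, peak: float, right: float) -> Membership:
    """Triangular membership function: 1 at `peak`, 0 outside (left, right)."""
    def mu(x: float) -> float:
        if x <= left or x >= right:
            return 0.0
        if x <= peak:
            return (x - left) / (peak - left)
        return (right - x) / (right - peak)
    return mu

@dataclass
class Rule:
    input_pattern: str   # name of S_r
    output_pattern: str  # name of U_r

def infer(x: float,
          input_sets: Dict[str, Membership],
          output_centers: Dict[str, float],
          rules: List[Rule]) -> float:
    """Fire every rule 'if x is S_r then y is U_r' and combine the outputs
    by a membership-weighted average (an assumed defuzzification choice)."""
    fired = [(input_sets[r.input_pattern](x), output_centers[r.output_pattern])
             for r in rules]
    total = sum(weight for weight, _ in fired)
    if total == 0.0:
        raise ValueError("no rule fires for this input")
    return sum(weight * center for weight, center in fired) / total

# Hypothetical two-rule example on a scalar input.
INPUT_SETS = {"low": triangular(-1.0, 0.0, 1.0), "high": triangular(0.0, 1.0, 2.0)}
OUTPUT_CENTERS = {"small": 0.0, "large": 10.0}
RULES = [Rule("low", "small"), Rule("high", "large")]

if __name__ == "__main__":
    print(infer(0.25, INPUT_SETS, OUTPUT_CENTERS, RULES))
```

For the input 0.25 the "low" rule fires with degree 0.75 and the "high" rule with degree 0.25, so the combined output is 2.5. Each rule remains readable on its own, which is the interpretability argument made for the nodes $S_m$, $R_k$ and $U_n$.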

Fig. 3. Deep rule-based networks: Building an understandable decision language for deep networks.

In practical applications, the DRN shown in Fig. 3 can be implemented by complex hardware or cloud computing, while the rule set in (2), i.e., the fuzzy logic decision rules, can be implemented by simple, low-cost hardware. The combination of these two forms represents the design philosophy of "Simple Locally, Complex Remotely" or "Simple Online, Complex Offline". The detailed description of DRN and the corresponding learning algorithm will be discussed in our other articles.

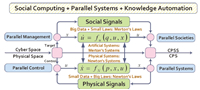

Ⅵ. PARALLEL INTELLIGENCE: A DATA-DRIVEN APPROACH FOR INTELLIGENT DECISION-MAKING

Fig. 4 shows the architecture of parallel control and management for complex systems developed over a decade ago by Wang[34,37]. AlphaGo provides new, realistic and effective support for this architecture, and on this basis a new technical path is paved for parallel intelligence and data-driven intelligent decision-making. In parallel intelligent systems, every physical process corresponds to one or more software-defined systems (SDS). An SDS can be a DNN, a DRN or another artificial system. Constructing an SDS is a learning process, based mainly on existing knowledge, data, experience or even intuition. With SDS, computational experiments can be conducted, i.e., self-play, self-running, self-operation and various other forms of self-X, and these experiments generate huge amounts of data. The data are then used in reinforcement learning to enhance intelligence and decision capabilities, while the decisions are evaluated under various conditions. In the end, the physical processes and systems interact with the software-defined processes and systems, forming a closed-loop feedback decision-making process to control and manage complex situations. This is the core concept of ACP-based parallel intelligent systems, which are a more generalized form of the AlphaGo program. In ACP, "A" stands for "artificial systems", which serve to build complex models; "C" denotes "computational experiments", which aim at accurate analysis and reliable evaluation; and "P" represents "parallel execution", which targets innovative decision-making. They correspond to descriptive, predictive and prescriptive learning, analytics and decision-making. As indicated in Fig. 5, such parallel intelligence can be used in three modes of operation: 1) learning and training, 2) experiment and evaluation, and 3) control and management.

The details of the ACP approach and parallel intelligence are described as follows.

Fig. 4. Parallel intelligence for decision-making: An architecture of parallel control and management for complex systems.

Fig. 5. The Virtual-Real Duality: The execution framework of the parallel systems.

The core philosophy of ACP is that, by combining "artificial systems", "computational experiments" and "parallel execution", one can turn the virtual artificial space, i.e., "cyberspace", into another space for solving complexity problems. The virtual artificial space and the real physical space together constitute the "complex space" for solving complex system problems. Recent developments in new IT, such as high-performance and cloud computing, deep learning, and the Internet of Things, provide a technical foundation for the ACP approach. In essence, the ACP approach aims to establish both the "virtual" and the "real" parallel systems of a complex system, and to use both to solve complex system problems through quantifiable and implementable real-time computing and interaction.

In summary, the ACP approach consists of three major steps: 1) using artificial systems to model complex systems; 2) using computational experiments to evaluate complex systems; and 3) letting the real physical system interact with the virtual artificial system and, through this virtual-real interaction, realizing effective control and management of the complex system.
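The three steps can be sketched on a deliberately small example. Everything in it is an assumption made for illustration: the "real" system is a hypothetical scalar process $x_{t+1} = a x_t + u_t$ with a gain $a$ unknown to the controller, the artificial system is a least-squares model of that process, the computational experiments compare a grid of feedback gains inside the model only, and parallel execution applies the chosen decision to the real system and feeds the observation back into the model.

```python
import random

class RealSystem:
    """Hypothetical physical process x' = a*x + u + noise; `a` is hidden from the controller."""
    def __init__(self, a=1.2, x0=1.0, noise=0.01, seed=0):
        self.a, self.x, self.noise = a, x0, noise
        self.rng = random.Random(seed)

    def step(self, u):
        self.x = self.a * self.x + u + self.rng.gauss(0.0, self.noise)
        return self.x

class ArtificialSystem:
    """'A': software-defined model x' = a_hat*x + u, fitted by least squares."""
    def __init__(self, a_hat=0.0):
        self.a_hat = a_hat
        self.sxx = 0.0
        self.sxy = 0.0

    def learn(self, x, u, x_next):
        self.sxx += x * x
        self.sxy += x * (x_next - u)
        if self.sxx > 0.0:
            self.a_hat = self.sxy / self.sxx

    def rollout_cost(self, x0, k, horizon=20, r=0.1):
        """Quadratic cost of the feedback u = -k*x, simulated in the model only."""
        x, cost = x0, 0.0
        for _ in range(horizon):
            u = -k * x
            cost += x * x + r * u * u
            x = self.a_hat * x + u
        return cost

def computational_experiments(model, x0, candidates):
    """'C': evaluate every candidate decision in the virtual system."""
    return min(candidates, key=lambda k: model.rollout_cost(x0, k))

def parallel_execution(real, model, steps=30):
    """'P': act on the real system with the virtually selected decision,
    then feed the observed transition back into the artificial system."""
    candidates = [i / 10 for i in range(21)]  # feedback gains 0.0 .. 2.0
    for _ in range(steps):
        x = real.x
        k = computational_experiments(model, x, candidates)
        u = -k * x
        x_next = real.step(u)
        model.learn(x, u, x_next)
    return model.a_hat, abs(real.x)

if __name__ == "__main__":
    a_hat, final_state = parallel_execution(RealSystem(), ArtificialSystem())
    print(f"estimated a = {a_hat:.3f}, |x| after control = {final_state:.4f}")
```

The real system is never used for trial and error here: candidate decisions are tried only in the model, while each real step serves both as control and as new data for the model, which is the closed virtual-real loop described above.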

In a sense, the ACP approach resolves the "paradox of scientific methods" in "scientific solutions" for complex systems. In most complex systems, conducting experiments is infeasible because of their high complexity. In most cases, one has to rely on the results, i.e., the output of the complex system, to evaluate a solution. However, "scientific solutions" need to satisfy two conditions: they must be triable and repeatable. In a complex system that involves humans and societies, not being triable is a major issue. The reasons are multi-faceted: prohibitive costs, legal restrictions, ethics, and, most importantly, the impossibility of keeping experimental conditions unchanged. This inevitably results in the "paradox of scientific methods" in "scientific solutions" for complex systems.

As a result, ACP pursues the sub-optimal approach of "computational experiments". Computational experiments take the place of simulations of physical systems when such simulations are not feasible. In this way, the process of solving complex system problems becomes controllable, observable and repeatable, and thus the solution also becomes triable and repeatable, which meets the basic requirements of scientific methods.

Using the ACP approach to interpret AlphaGo, we offer the following remarks. AlphaGo uses deep learning on Big Data from existing Go games to construct its software-defined Go games. It uses computational experiments, i.e., self-play games, to explore further the possible situations of the game. Finally, in the match against Lee, AlphaGo searches its strategy space in virtual games and then applies the search result in the real game. After each iteration with the real physical world (one move), the strategy is evaluated in real time to determine the next action.

B. AlphaGo and Parallel Intelligence

Based on the ACP approach, parallel intelligence can be defined as a form of intelligence generated from the interaction and execution between real and virtual systems. Parallel intelligence is characterized by being data-driven, by modeling based on artificial systems, and by analytics and evaluation of system behavior based on computational experiments.

The core philosophy of parallel intelligence is to construct, for a complex system, a parallel system that consists of real physical systems and artificial systems. The final goal of parallel intelligence is to make decisions that drive the real system toward the virtual system. In this way, complex system problems are simplified by means of the virtual artificial system, and management and control of the complex system are achieved.

Fig. 5 shows a framework of a parallel system. In this framework, parallel intelligence can be used in three operation modes: 1) Learning and training: parallel intelligence is used to establish the virtual artificial system. In this mode, the artificial system might be very different from the real physical system, and not much interaction is required; 2) Experiment and evaluation: parallel intelligence is used to generate and conduct computational experiments for testing and evaluating various system scenarios and solutions. In this mode, the artificial system and the real system interact to determine the performance of a proposed policy; 3) Control and management: parallel execution plays the major role in this mode. The virtual artificial system and the real physical system interact with and refer to each other in parallel and in real time, and thus achieve control and management of the complex system.

We would like to point out that a single physical system can interact with multiple virtual artificial systems. For example, to meet the different demands of different applications, one physical system can interact, simultaneously or in a time-sharing manner, with a data visualization artificial system, an ideal artificial system, an experimental artificial system, a contingency artificial system, an optimization artificial system, an evaluation artificial system, a training artificial system, a learning artificial system, etc.

Within the framework of parallel systems, AlphaGo's parallel intelligence lies in the fact that 1) in the learning and training mode, AlphaGo is able to learn effectively from historical Go game data to establish its artificial system; 2) in the experiment and evaluation mode, AlphaGo is able to generate a huge number of self-play games for reinforcement learning; and 3) in the control and management mode, AlphaGo is able to search effectively for decision strategies in real time within its virtual system space, and to interact with the physical system to make the optimal decision.

Ⅶ. CONCLUDING REMARKS

Recently, a Go match between an intelligent computer program, AlphaGo, and a top-tier human player, Lee Sedol, had a tremendous and extensive impact on the field of AI. Because of the prohibitively high complexity of Go and the need for fuzzy interpretation of the overall game situation, computer Go programs were considered incapable of achieving intelligence in this field. However, AlphaGo made the breakthrough by defeating a professional nine dan human player 4:1.

We have discussed the potential technological impact of AlphaGo's victory rather than its technical details. Obviously, the AlphaGo approach can be applied in many areas other than the game of Go, and the further development of related AI approaches may lead to the era of new intelligent technology (New IT). The victory of AlphaGo is also a persuasive argument for shifting the paradigm from scientific causality to data association by computing in decision-making, especially for complex systems.

A historical retrospective has been provided on the important milestones related to the AlphaGo approach. The retrospective shows that the success of AlphaGo is a necessity arising out of fortuity, standing on the achievements of AI. For this reason, considering AlphaGo within the context of AI history gives a better vision for projecting AlphaGo techniques into the future of AI.

Therefore, we propose the conjecture of the AlphaGo Thesis, which states that an AlphaGo-like program is equivalent to a human being with limited resources. Further, we propose the conjecture of the Extended AlphaGo Thesis, which states that a network-like system with a sufficient number of adjustable parameters is equivalent to a human being with limited resources. Such a network-like system, in the form of deep rule-based networks, is described in Section Ⅴ using fuzzy logic rule sets. The DRN represents the design philosophy of "Simple Locally, Complex Remotely" and implies an implementable technical scheme.

Combined with the theoretical frameworks of the ACP approach and parallel systems, the implications of the AlphaGo Thesis are brought to the level of complex system intelligence. The ACP approach, which aims to mitigate the "paradox of scientific methods" in "scientific solutions" for complex systems, provides a triable and repeatable methodology for studying complex systems, especially those involving humans and societies. The ACP approach can be regarded as a generalized form of the AlphaGo approach, and it is described in detail in Section Ⅵ. "Parallel intelligence" is derived directly from applying the ACP approach to a complex system. With the strong evidence provided by AlphaGo's victory, together with theoretical concepts such as software-defined systems, computational experiments and closed-loop feedback between parallel virtual-real systems, we foresee that parallel intelligence will become a low-hanging fruit of AI in the near future.

Where does AlphaGo go? Where does the AlphaGo Thesis lead? What does the concept of parallel intelligence imply? Answering these questions may take decades of effort by AI researchers. However, it is foreseeable and unquestionable that many complex system problems in engineering management, economic management and social management will benefit from the generalized AlphaGo approach, ACP, and the parallel system framework as we work towards the answers.

ACKNOWLEDGMENT

This article is a direct result of discussions between two groups of professors and graduate students in Beijing, China, and Denver, Colorado, USA. We thank the many others who participated in this interesting and stimulating process.

| [1] | Taves M. Google’s AlphaGo isn’t taking over the world, yet [Online], Available: http://www.cnet.com/news/googles-alphago-isnt-taking-overthe-world-yet/, March 13, 2016. |

| [2] | Wang F Y. The Era of New IT, Human still cannot be replaced by machines [Online], Available: http://opinion.huanqiu.com/1152/2016-03/8689740.html, March 11, 2016. |

| [3] | Tromp J. Number of legal Go positions [Online], Available: https://tromp.github.io/go/legal.html, 2016. |

| [4] | Zobrist A L. Feature Extraction and Representation for Pattern Recognition and the Game of Go [Ph. D. dissertation], University of Wisconsin, USA, 1970 |

| [5] | Millen J K. Programming the game of Go. Byte, 1981, 6(4): 102-114 |

| [6] | Green H S. Go and artificial intelligence. Computer Game-Playing: Theory and Practice. New York: Halsted Press, 1983. 141-151 |

| [7] | Webster B. A Go board for the macintosh. Byte, 1984, 9(12): 125-128 |

| [8] | Silver D, Huang A, Maddison C J, Guez A, Sifre L, van den Driessche G, Schrittwieser J, Antonoglou I, Panneershelvam V, Lanctot M, Dieleman S, Grewe D, Nham J, Kalchbrenner N, Sutskever I, Lillicrap T, Leach M, Kavukcuoglu K, Graepel T, Hassabis D. Mastering the game of Go with deep neural networks and tree search. Nature, 2016, 529(7587): 484-489 |

| [9] | Wedd N. Human-Computer Go challenges [Online], Available: http://www.computer-go.info/h-c/index.html, 2011. |

| [10] | Rimmel A, Teytaud O, Lee C S, Yen S J, Wang M H, Tsai S R. Current frontiers in computer Go. IEEE Transactions on Computational Intelligence and AI in Games, 2010, 2(4): 229-238 |

| [11] | Tian Y D, Zhu Y. Better computer Go player with neural network and long-term prediction. arXiv: 1511.06410, 2016. |

| [12] | Coulom R. Efficient selectivity and backup operators in Monte-Carlo tree search. In: Proceedings of the 5th International Conference on Computers and Games. Turin, Italy: Springer, 2007. 72-83 |

| [13] | Minsky M. The Society of Mind. New York: Simon & Schuster, 1988. |

| [14] | Wang F Y, Saridis G N. Suboptimal control for nonlinear stochastic systems. In: Proceedings of the 31st IEEE Conference on Decision and Control. Tucson, AZ: IEEE, 1992. 1856-1861 |

| [15] | Wang F Y, Zhang H G, Liu D R. Adaptive dynamic programming: an introduction. IEEE Computational Intelligence Magazine, 2009, 4(2): 39-47 |

| [16] | Wang F Y. Let’s Go: From AlphaGo to parallel intelligence. Science & Technology Review, 2016, 34(7): 72-74 (in Chinese) |

| [17] | Wang F Y. Complexity and intelligence: From Church-Turing Thesis to AlphaGo Thesis and beyond (1). Journal of Command and Control, 2016, 2(1): 1-4 (in Chinese) |

| [18] | Conway F, Siegelman J. Dark Hero of the Information Age: In Search of Norbert Wiener, the Father of Cybernetics. New York: Basic Books, 2005. |

| [19] | Burks A W, Wright J B. Theory of logical nets. Proceedings of the IRE, 1953, 41(10): 1357-1365 |

| [20] | Shannon C E. Computers and automata. Proceedings of the IRE, 1953, 41(10): 1234-1241 |

| [21] | Burks A W, McNaughton R, Pollmar C H, Warren D W, Wright J B. Complete decoding nets: general theory and minimality. Journal of the Society for Industrial and Applied Mathematics, 1954, 2(4): 201-243 |

| [22] | Burks A W, Wang H. The logic of automata. Journal of the ACM, 1957, 4(3): 279-297 |

| [23] | Rabin M O, Scott D. Finite automata and their decision problems. IBM Journal of Research and Development, 1959, 3(2): 114-125 |

| [24] | Rabin M O. Complexity of computations. ACM Turing Award Lectures. New York, NY, USA: ACM, 2007. |

| [25] | Cook S A. The complexity of theorem-proving procedures. In: Proceedings of the 3rd Annual ACM Symposium on Theory of Computing, 1971, 151-158 |

| [26] | Wang F Y, Saridis G, McNaughton R. On Complexity of Intelligent Machines: an $\epsilon$-complexity Approach. RPI RAL Report, 1988. |

| [27] | Blum A L, Rivest R L. Training a 3-node neural network is NP-complete. Neural Networks, 1992, 5(1): 117-127 |

| [28] | Budinich M. Neural networks for NP-complete problems. Nonlinear Analysis: Theory, Methods & Applications, 1997, 30(3): 1617-1624 |

| [29] | Ghaziri H, Osman I H. A neural network algorithm for the traveling salesman problem with backhauls. Computers and Industrial Engineering, 2003, 44(2): 267-281 |

| [30] | Potvin J Y. The traveling salesman problem: a neural network perspective. ORSA Journal on Computing, 1993, 5(4): 328-348 |

| [31] | Yao X. Finding approximate solutions to NP-hard problems by neural networks is hard. Information Processing Letters, 1992, 41(2): 93-98 |

| [32] | de Chardin P T. The Phenomenon of Man. London: William Collins Sons, 1955. |

| [33] | Wang F Y, Kim H M. Implementing adaptive fuzzy logic controllers with neural networks: a design paradigm. Journal of Intelligent & Fuzzy Systems, 1995, 3(2): 165-180 |

| [34] | Wang F Y. Parallel control: a method for data-driven and computational control. Acta Automatica Sinica, 2013, 39(4): 293-302 (in Chinese) |

| [35] | Wang F Y. Building knowledge structure in neural nets using fuzzy logic. in Robotics and Manufacturing: Recent Trends in Research Education and Applications, edited by M. Jamshidi, New York, NY, ASME (American Society of Mechanical Engineers) Press, 1992. |

| [36] | Wang F Y. Hierarchical Structure and Linguistic Description for Complex Decision Networks: A Evaluation Programming Approach. PARCS Report, University of Arizona, Tucson, Arizona, USA, May 1999. |

| [37] | Wang F Y. Parallel system methods for management and control of complex systems. Control and Decision, 2004, 19(5): 485-489 (in Chinese) |

2016, Vol.3
