I. INTRODUCTION

With the development of society,all kinds of project presenting,establishing,quality checking,completing and other related activities are increasing in China. More and more projects need to be reviewed every year. At present,the selection of project review experts is mainly adopted by artificial means. The artificial selection not only requires that funding organizations are very familiar with project topics and review experts' research areas,but also brings about some disadvantages,such as high manpower cost,unfairness and so on. To avoid the drawbacks,it is very significant and essential to propose a solution that can automatically recommend experts for project review activities.

Currently,recommendation methods and techniques in the field of electronic commerce have had a considerable development and a wide range of application. More and more researches on recommending algorithms and techniques have laid a very good theoretical foundation for review expert recommendation of project activities. Among these recommendation methods,collaborative filtering algorithm has been the most widely used[1]. The method renders its recommendation by analyzing products' historical ratings data made by users. At present,collaborative filtering recommendation algorithms are mainly classified as two types,the method based on nearest neighbor and the other based on model. The collaborative filtering algorithm based on k-nearest neighbor (also known as KNN) makes its recommendation by calculating the similarity between users or products. It includes user-oriented KNN method (UKNN)[2] and item-oriented KNN method (IKNN)[3]. The other collaborative filtering algorithms based on model[4, 5, 6, 7, 8, 9] learn a corresponding model on users' rating data to predict a product rating. These models mainly include clustering model,probabilistic correlation model,singular value decomposition (SVD),latent semantic analysis model (LSA) and so on. Recently,relevant scholars have put forward a matrix factorization technique[10, 11, 12, 13, 14],which learns low-dimensional feature vectors of users and products from a sparse rating matrix and then uses the low-dimensional rating matrix to predict product rating given by users. Their studies have shown that the matrix factorization algorithm is more effective than the nearest-neighbor algorithm. Similarly,in the case of sparse rating data,the recommendation effect of matrix factorization algorithm is better than that of the algorithm based on model.

The traditional collaborative recommendation algorithm mentioned above assumes that users/products are mutually independent and ignores structural relationships between users (products). Thus,some scholars excavated relevant relationships between users (products),and added them into the existing collaborative filtering algorithm to improve the accuracy of recommendation[15, 16, 17, 18, 19]. Among them,Guo et al.[18],on the basis of the rating matrix,added a users' social relationship matrix,which improved the algorithm's recommendation effect greatly. Wu et al.[19] proposed a matrix factorization model based on neighborhood relationships,which calculated similar neighbors by label information,added prior neighbor relationships for users' (products') feature vectors,and achieved a good recommendation effect.

Up to now,there are a lot of historical project review information which were retained in project application' and project evaluation' activities. Every evaluated project contains its content information,its decision (status) information (such as funded after meeting review,unfunded after meeting review,unfunded before meeting review),its review expert information,its rating information from review experts. There is no doubt that the historical project information would play an important supporting role on recommending review experts to new projects. In this study,therefore,we use the historical project information,and employ the collaborative filtering recommendation algorithm to recommend review experts. Using the project status information and the project rating information given by review experts,we build a project-expert rating matrix through some rules. In addition,for each reviewed project,its review experts must have been a few,thus will result in a serious data sparseness problem of the rating matrix; new experts do not have any historical rating records,thus they cannot be recommended by using collaborative filtering technology,known as "cold start" problem about new experts. To solve the above problems effectively,therefore,we combine content features of projects or experts,assume that those projects/experts with similar topics have similar feature vectors,introduce the relationships on topics between projects/experts,and propose a matrix factorization algorithm based on topic relationship for review expert recommendation (in short Topic_MF). The method,firstly,through the topic relationship between projects/experts,finds the neighbor collection which shares the largest similarity with the target project/expert. Then,it integrates the neighbor set into the collaborative filtering recommendation algorithm based on matrix factorization. Finally,experiments are conducted on a real dataset,and the results show that the proposed Topic_MF can predict a review expert rating for a new project more effectively and consequently improve recommendation effect.

II. PROBLEM DEFINITION AND MATRIX FACTORIZATION MODEL

At first,let us explain two important concepts which will be mentioned in the paper. One is project rating and the other is expert rating. Project rating is a score or a rating that an expert gives to a reviewed project and it represents the viewpoint that an expert holds on how good a project is. Expert rating is a score or a rating that a reviewed project feedbacks to its review expert and it represents the expert's working ability.

In this study,the collaborative recommendation algorithm mainly makes corresponding calculation using the project-expert rating matrix to predict the rating of review experts. If there is a set $P$ of $M$ projects,$P=\{p_{1},\ldots,p_{M}\}$,a set $E$ of $N$ experts,$E=\{e_{1},\ldots,e_{N}\}$,and a project-expert rating matrix $R(p,e)_{M\times N}$,its element $r_{ij}$ represents the expert rating that the project $i$ gives the expert $j$. In fact,in the historical information of a reviewed project,there are a final decision (status) and project ratings made by its each expert. And in our accessible project database,the project rating is from 0 to 7. To some extent,the final decision and a project rating made by an expert could reflect the expert working ability which is labeled as expert rating. Therefore,according to the status information of a reviewed project,we turn a project rating from an expert into an expert rating that the project inversely gives the expert through some rules,and set the expert rating value as $r_{ij}$,$r_{ij}\in[0, 7].

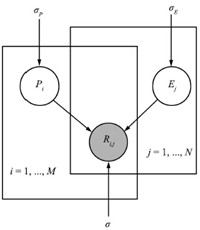

In the collaborative filtering algorithm based on matrix factorization model,it is generally assumed that expert rating is only influenced by a few factors. The projects/experts are mapped to a low-dimensional feature space. In the space,by rebuilding a low-dimensional rating matrix,the algorithm predicts the review expert rating to make an appropriate recommendation. Assume that $P\in {\bf R}^{D\times M}$ represents the feature matrix of projects,$E\in {\bf R}^{D\times N}$ represents the feature matrix of experts,and $D$ expresses the feature vector dimension. The model may learn the feature vectors of projects and experts,then predict the review expert rating based on the feature vectors. The matrix factorization graph model is shown in Fig. 1.

| Download: |

| Fig. 1 The matrix factorization graph model. | |

In the model,the conditional probability of the rating data of the projects and experts is defined as (1):

| \begin{align} p(R|P,E,\sigma^{2})=\prod_{i=1}^{M}\prod_{j=1}^{N}[{{\rm N}}(R_{ij}|P_{i}^{\rm T}E_{j},\sigma^{2})]^{I_{ij}^{R}}. \end{align} | (1) |

| \begin{equation*}I^{R}_{ij}=\begin{cases} 1,& {\rm if~ there~ is~ a~ rating~ to ~expert}\\ 0,& {\rm otherwise} \end{cases}.\end{equation*} | ( ) |

| \begin{align} &p(P|\sigma_{p}^{2})= \prod_{i=1}^{M}{{\rm N}}(P_{i}|0,\sigma_{P}^{2}{\rm I}),\notag\\ &p(E|\sigma_{E}^{2})= \prod_{j=1}^{N}{{\rm N}}(E_{i}|0,\sigma_{E}^{2}{\rm I}). \end{align} | (2) |

| \begin{align} & p(P,E|R,\sigma^{2},\sigma^{2}_{P},\sigma^{2}_{E})\propto p(R|P, E,\sigma^{2})p(P|\sigma_{P}^{2})p(E|\sigma_{E}^{2})\notag\\ &\qquad =\prod_{i=1}^{M}\prod_{j=1}^{N}\left[{{\rm N}}(R_{ij}|P_{i}^{\rm T}E_{j},\sigma^{2})\right]^{I_{ij}^{R}}\times \prod_{i=1}^{M}{{\rm N}}(P_{i}|0,\sigma_{P}^{2}{ I})\notag\\ &\qquad \times \prod_{j=1}^{N}{{\rm N}}(E_{i}|0,\sigma^{2}_{E}{ I}). \end{align} | (3) |

III. REVIEW EXPERT COLLABORATIVE RECOMMENDATION ALGORITHM BASED ON TOPIC RELATIONSHIP

A. Rating Matrix Construction

In the historical information of the reviewed projects,there is an important field,project rating from experts which represents how good a project is. However,when recommending review experts for projects,we need an expert rating which characterizes expert working ability. For project application,funding agencies firstly do a project preliminary examination or carryout a project peer review to decide whether a project can be accepted for a review meeting so as to further discuss it for approval. And for the above selected candidate projects,after discussion in a meeting,they will be given a final decision whether to be funded or not. So every declared project mainly takes on three status "unfunded before meeting review","unfunded after meeting review" and "funded after meeting review". To some extent,project status and project rating from experts could reflect experts' working ability (That is expert rating). For example,a reviewed project's status is "unfunded before meeting review" while a review expert gave a high rating to this project,this indicates that the review expert's evaluation has a deviation or his working capability is low,thus,he should get a low expert rating from the project inversely. If a reviewed project's status is "funded after meeting review" and a review expert gave a high rating to this project,this indicates that the review expert's evaluation is good and rational or his working capability is high,thus,he should get a high expert rating from the project,conversely,he should get a very low rating from this project. Here,we use status information and rating information of the historical projects reviewed to build a project-expert rating matrix $R(p,e)$ by the above rules,that is,according to status information of the reviewed projects,we turn a project rating from an expert into its expert rating from the project. The transformation rules of the expert rating are defined in Table I. The expert rating after being transformed could reflect the experts' working ability effectively.

For those experts who have not participated in the project,in order not to bring additional information,their expert ratings are set as zero. Using the above rules,we construct a rating matrix for projects and experts.

B. Matrix Factorization Model Based on Topic Relationship

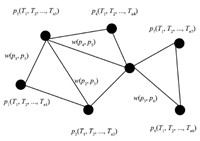

In the matrix factorization model based on topic relationship,one key step is to obtain topic relationship of projects and experts. In order to find potential topic relationship between projects/experts,in this study,using LDA[20],we extract project topics from project application documents. In order to improve extraction accuracy of project topics,it is necessary to preprocess project application documents by removing noises including author introductions and references. Then we use topic similarity to define topic relationship between projects/experts. The topic relationship network of projects is shown in Fig. 2.

| Table I THE TRANSFORMATION RULES OF THE EXPERT RATING |

| Download: |

| Fig. 2 Topic relationship network of projects. | |

The relationship network of project topics in Fig. 2 may be described as $G=\{P,E,W\}$. $P$ is the project set; $E$ is the edge set; $W$ represents the weight of the edge,which is the topic similarity between projects; $(T_{1},T_{2},\ldots,T_{ni})$ is the project $p_{i}$'s topics. According to this relationship network,we define the similarity weight between projects as (4).

| \begin{equation} w(p_{i}, p_{j})=\frac{\sum\limits_{i=1}^{ni}\sum\limits_{j=1}^{nj}Sim(T_{i}, T_{j})}{ni\times nj}. \end{equation} | (4) |

| \begin{equation} Sim(T_{i},T_{j})=\frac{\sum\limits_{k=1}^{n}w_{Ti_{k}}w_{Tj_{k}}}{\sqrt{\sum^{n}\limits_{k=1}w_{Ti_{k}}^{2}}\sqrt{\sum\limits_{k=1}^{n}w_{Tj_{k}}^{2}}}. \end{equation} | (5) |

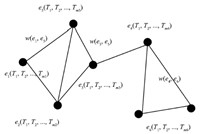

Likewise,using ES-LDA model[21],we extract expert topics from expert evidence documents such as experts' homepages,experts' blog pages,experts' Baidu Encyclopedia pages,experts' Wikipedia pages and experts' papers. In these expert evidence documents,there is some important information,such as page-link,expert-metadata,expert-metadata relationship,expert-metadata co-occurrence and so on. This information has a very good guiding function,and may greatly support expert topic clustering,thus can improve accuracy of expert topic extraction. We integrate this guiding information into LDA model and finally obtain expert topics. For the case of homonymous experts,it is necessary to do name disambiguation to obtain high-quality expert evidence documents. According to the characteristics of Chinese names and expert attributes,we use spectral clustering disambiguation method for Chinese experts by fusing page relationship[22]. The main idea can be concluded as follows: First,we use term frequency-inverse document frequency (TF-IDF) method to calculate feature weight based on word,utilize cosine angle formula to calculate page similarity,and obtain an initial similarity matrix of experts' pages; then,we take expert relationship features as semi-supervised information to correct the initial similarity matrix. The experiments show that the method has a good effect in the related work. Using these expert topics,we build a topic relationship network of experts shown in Fig. 3. Similar to topic relationship network of projects,$(T_{1},T_{2},\ldots,T_{mi})$ is the expert $e_{i}$'s topics. The similarity computation between experts is similar to that between projects,and we define the similarity weight between experts as (6).

| \begin{equation} w(e_{i}, e_{j})=\frac{\sum\limits_{i=1}^{mi}\sum\limits_{j=1}^{mj}Sim(T_{i}, T_{j})}{mi\times mj}. \end{equation} | (6) |

| Download: |

| Fig. 3 Topic relationship network of experts. | |

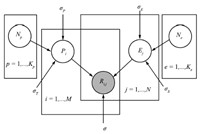

Using topic similarity degree,we look for the neighbor set of the maximum similarity for a target project (expert). In order to avoid that the nearest neighbors with low similarity impact recommendation results,using the dynamic threshold method,we take those projects/experts whose similarity exceeds the threshold as the nearest neighbors of the target project (expert). Assume that the similarity threshold is $\beta$,the set $N_{i}=\{i|w(i,i_{k})\geq \beta,i\neq i_{k}\}$ represents the nearest neighbors of the target project or expert. Using the dynamic method,we find the nearest neighbor set $N_{p}$ of the project and the nearest neighbor set $N_{e}$ of the expert,$p=1,2,\ldots,K_{p},e=1,2,\ldots,K_{e}$. Further,we integrate the neighbor set $N_{p}$ and $N_{e}$ into the collaborative filtering model based on matrix factorization to improve the recommendation accuracy of project review experts. The probabilistic graph model is shown in Fig. 4.

| Download: |

| Fig. 4 The matrix factorization graph model based on topic relationship. | |

In this model,we assume that the feature vector of each project (expert) not only obeys Gaussian distribution with zero mean to prevent over-fitting,but also is similar to the feature vectors of other projects/experts in the nearest neighbor set. The formulas for the same are as follow:

| \begin{equation} P_{i}=\sum_{j\in N_{p}}w(p_{i},p_{j})\times P_{j}+\theta_{P}, ~~\theta_{P}\sim {{\rm N}}(0,\sigma_{P}^{2}{I}), \end{equation} | (7) |

| \begin{equation} E_{i}=\sum_{j\in N_{e}}w(e_{i},e_{j})\times E_{j}+\theta_{E}, ~~\theta_{E}\sim {{\rm N}}(0,\sigma_{E}^{2}{I}). \end{equation} | (8) |

| \[\begin{array}{*{20}{l}} {p(P|{N_p},\sigma _P^2,\sigma _T^2) \propto p(P|\sigma _P^2) \times p(P|{N_p},\sigma _T^2)}\\ {\; = \prod\limits_{i = 1}^M {\rm{N}} ({P_i}|0,\sigma _P^2I) \times \prod\limits_{i = 1}^M {\rm{N}} \left( {{P_i}|\sum\limits_{j \in {N_p}} w ({p_i},{p_j}){P_j},\sigma _T^2I} \right).} \end{array}\] | (9) |

| \[\begin{array}{*{20}{l}} {p(E|{N_e},\sigma _E^2,\sigma _S^2) \propto p(E|\sigma _E^2) \times p(E|{N_e},\sigma _S^2)}\\ {\; = \prod\limits_{i = 1}^N {\rm{N}} ({E_i}|0,\sigma _E^2I) \times \prod\limits_{i = 1}^N {\rm{N}} \left( {{E_i}|\sum\limits_{j \in {N_e}} w ({e_i},{e_j}){E_j},\sigma _S^2I} \right).} \end{array}\] | (10) |

| \begin{align} & p(P,E|R,{N_p},{N_e},{\sigma ^2},\sigma _P^2,\sigma _E^2,\sigma _T^2,\sigma _S^2)\notag\\ &\qquad\propto p(R|P,E,\sigma^{2})p(P|N_{p},\sigma_{P}^{2}, \sigma_{T}^{2})p(E|N_{e},\sigma_{E}^{2},\sigma_{S}^{2})\notag\\ &\qquad =\prod_{i=1}^{M}\prod_{j=1}^{N}[{{\rm N}}(R_{ij}|P_{i}^{\rm T}E_{j},\sigma^{2})]^{I_{ij}^{R}}\notag\\ &\qquad \times \prod_{i=1}^{M}{{\rm N}}(P_{i}|\sum_{j\in N_{p}}w(p_{i},p_{j})P_{j},\sigma_{T}^{2}{I})\notag\\ &\qquad \times \prod_{i=1}^{N}{{\rm N}}({{E_i}|\sum\limits_{j\in N_{e}} {w({e_i},{e_j})} {E_j},\sigma _s^2{I}}) \times \prod\limits_{i = 1}^M {{\rm N}({P_i}|0,\sigma _p^2{I})} \notag \\ &\qquad \times \prod\limits_{i = 1}^N {{\rm N}({E_i}|0,\sigma _E^2{ I})}. \end{align} | (11) |

In order to maximize the posterior probability,we apply logarithm on both sides of (11) and get (12).

| \begin{align} &\ln p(P,E|R,{N_p},{N_e},{\sigma ^2},\sigma _P^2,\sigma _E^2,\sigma _T^2,\sigma _S^2)\notag\\ &\qquad= - \frac{1}{{2{\sigma ^2}}}\sum\limits_{i=1}^M {\sum\limits_{j=1}^N {I_{ij}^R{{({R_{ij}} - P_i^{\rm T}{E_j})}^2} - \frac{1}{{2{\sigma ^2_{P}}}}} } \sum\limits_{i = 1}^M {P_i^{\rm T}{P_i}}\notag\\ &\qquad-\frac{1}{2\sigma_{E}^{2}}\sum_{i=1}^{N}E_{i}^{\rm T}E_{i}-\frac{1}{2\sigma_{T}^{2}} \sum_{i=1}^{M}\bigg(\big(P_{i}\notag\\ &\qquad-\sum_{j\in N_{P}}w(p_{i},p_{j})P_{j}\big)^{\rm T}\big(P_{i}-\sum_{j\in N_{P}}w(p_{i},p_{j})P_{j}\big)\bigg)\notag\\ &\qquad- \frac{1}{2\sigma_{S}^{2}}\sum_{i=1}^{N}\bigg((E_{i}-\sum_{j\in N_{e}}w(e_{i},e_{j})E_{j})^{\rm T}\notag\\ &\qquad\times (E_{i}-\sum_{j\in N_{e}}w(e_{i},e_{j})E_{j})\bigg)-\frac{1}{2}\bigg( {\sum\limits_{i = 1}^M {\sum\limits_{j = 1}^N {I_{ij}^R} } } \bigg)\ln\sigma^2\notag\\ &\qquad-\frac{1}{2}\bigg((M\times D)\ln \sigma_{P}^{2}+(N\times D)\ln \sigma_{E}^{2}\notag\\ &\qquad+(M\times D)\ln \sigma_{T}^{2}+(N\times D)\ln \sigma_{S}^{2}\bigg)+C. \end{align} | (12) |

| \begin{align*} L=&\ \frac{1}{2}\sum_{i=1}^{M}\sum_{j=1}^{N}I_{ij}^{R}(R_{ij}-P_{i}^{\rm T}E_{j})^{2}+\frac{\lambda_{P}}{2}\sum_{i=1}^{M}P_{i}^{\rm T}P_{i}\\ &\ +\frac{\lambda_{E}}{2}\sum_{i=1}^{N}E_{i}^{\rm T}E_{i} +\frac{\lambda_{T}}{2}\sum_{i=1}^{M}\bigg(\big(P_{i}-\sum_{j\in N_{p}}w(p_{i},p_{j})P_{j}\big)^{\rm T}\\ &\ \times \big(P_{i}-\sum_{j\in N_{p}}w(p_{i},p_{j})P_{j}\big)\bigg)\end{align*} \begin{align}&+\frac{\lambda_{S}}{2}\sum_{i=1}^{N}\bigg(\big(E_{i}-\sum_{j\in N_{e}}w(e_{i},e_{j})E_{j}\big)^{\rm T}\notag\\ &\times \big(E_{i}-\sum_{j\in N_{e}}w(e_{i},e_{j})E_{j}\big)\bigg). \end{align} | (13) |

| \[\begin{array}{*{20}{l}} {\frac{{\partial L}}{{\partial {P_i}}} = }&{\;\sum\limits_{j = 1}^N {I_{ij}^R({R_{ij}} - P_i^{\rm{T}}{E_j})( - {E_j}) + {\lambda _P}{P_i}} }\\ {}&{\; + {\lambda _T}({P_i} - \sum\limits_{j \in {N_p}} {w({p_i},{p_j})} {P_j})}\\ {}&{\; - {\lambda _T}\sum\limits_{j \in {N_p}} {w({p_i},{p_j})({P_j} - \sum\limits_{k \in {N_p}} {w({p_k},{p_j}){P_k}} )} .} \end{array}\] | (14) |

| \[\begin{array}{*{20}{l}} {\frac{{\partial L}}{{\partial {E_i}}} = }&{\;\sum\limits_{j = 1}^M {I_{ij}^R({R_{ij}} - P_j^{\rm{T}}{E_i})( - {P_j}) + {\lambda _E}{E_i}} }\\ {}&{\; + {\lambda _s}({E_i} - \sum\limits_{j \in {N_e}} {w({e_i},{e_j})} {E_j})}\\ {}&{\; - {\lambda _s}\sum\limits_{j \in {N_e}} {w({p_i},{p_j})({E_j} - \sum\limits_{k \in {N_e}} {w({e_k},{e_j}){E_k}} )} .} \end{array}\] | (15) |

The initial value of feature vector $P$ and $E$ is randomly obtained by Gaussian distribution with zero mean. Then,in each operation,the value of feature vector $P$ and $E$ is iteratively updated based on the value of the previous generation,until it converges. We get the project feature vector $P$ and expert rating feature vector $E$ by gradient descent algorithm. Finally,the predicted rating of expert is as follows (16):

| \[Rating(p,e) = P_p^{\rm{T}}{E_e}.{\rm{ }}\] | (16) |

In the review expert collaborative recommendation algorithm based on topic relationship,the recommending process will be divided into three steps: The first step is to build the project-expert rating matrix. The second step is to establish the topic relationship network of projects and that of experts. The third step is to make these topic relationships converge to the matrix factorization algorithm to achieve a recommendation. If there are $M$ projects and $N$ experts,the time complexity of building the project-expert rating matrix is ${\rm O}(M\times N)$. For construction of topic relationship networks of projects and experts,the time complexity of obtaining project topics and expert topics is ${\rm O}((M^{2}+ N^{2})\times V)$,in which $V$ indicates vocabulary size; and the time complexity of computing similarity between projects/experts is ${\rm O}((M(M-1)+N(N-1))/2)$. For the third step,according to the analysis of [23],the time complexity of calculating gradient is ${\rm O}(N\bar{p}D+NK^{2}D+M\bar{e}D+MK^{2}D)$,in which $K$ represents the neighbor number of target project (expert),and $\bar{p},\bar{e}$ respectively represent the average rating for each project and that for each expert. From the above analysis,it is concluded that the time complexity of the proposed algorithm is not high. IV. EXPERIMENT AND RESULT ANALYSIS

A. Experimental Data and Analysis

Because there is no open authoritative corpus resource,our historical project data are taken from the Science and Technology Department of Yunnan Province. We sorted out 4 129 historical project data and 8 124 experts' basic information from the project document library. All these data are from five domains,information,metallurgy,chemical industry,biology and medicine. The data contain content information of projects,project rating information from review experts,status information of projects and so on. Rating range is [0, 7]. As expert's basic information cannot well reflect its topic information,in order to ensure the accuracy of obtained expert topic information,we collect these experts' evidence documents manually,including experts' homepages,experts' blog pages,experts' Baidu Encyclopedia pages and experts' Wikipedia pages. Experimental data sets are shown in Table II.

| Table II EXPERIMENTAL DATASETS |

| Table III ANALYSIS OF DATA SPARSITY (%) |

For experimental data in Table II,let us analyze sparsity of the rating matrix. Actually,sparsity of a rating matrix is mainly measured by density of the rating matrix. The density is formulized as (17):

| \begin{equation} Density=\frac{N_{s}}{N_{e}\times N_{p}}. \end{equation} | (17) |

B. Evaluation Standard

Because root mean squared error (RMSE) can intuitively measure recommendation quality and is also easily understandable,it has become a popular measuring method for recommendation quality. We also take RMSE[24] as our evaluation standard. In our experiments,RMSE is used to measure the predicting accuracy by calculating deviation between the predicted expert rating and the actual expert rating. The smaller RMSE value is,the higher recommendation quality is. Assume that the predicted expert rating vector set is $\{r_{e1},r_{e2},\ldots,r_{en}\}$ and the corresponding actual expert rating vector set is $\{r'_{e1},r'_{e2},\ldots,r'_{en}\}$,RMSE can be expressed as formula (18).

| \begin{equation} RMSE=\sqrt{\frac{\sum\limits_{i=1}^{n}\left(r_{ei}-r'_{ei}\right)^{2}}{n}}. \end{equation} | (18) |

In experiments,the history project data in Table II are randomly divided into training dataset and test dataset. We respectively conduct experiments according to differently-sized training data. The training data are obtained by random selection. Then,on them,five-fold cross-validation is used. Finally the experimental results on five different test datasets are taken by taking their average as the RMSE value. In order to reduce the complexity of the algorithm,according to the experimental result in [11],we set $\lambda _{P}=\lambda_{E}=0.001,\lambda_{T}=\lambda_{S}=\lambda$. In order to verify the effectiveness of the proposed algorithm,in this study,we design six groups of comparative experiments: 1) the recommendation effect of the algorithm under different feature dimension numbers; 2) the influence of different values of parameter $\beta$ on the algorithm performance; 3) the influence of different values of parameter $\lambda$ on the algorithm performance; 4) the algorithm's recommendation performance under different rating sparseness; 5) the algorithm's recommendation performance on new experts; 6) the algorithm's recommendation performance on differently-sized training datasets.

Experiment 1. the recommendation effect of the algorithm under different feature dimension numbers

In this experiment,we will compare the recommendation effects of SVD[8],Gaussian pLSI[7],PMF[11] and Topic_MF under different feature dimension numbers. Here,we set feature dimension number $D$ as 5,10,20 and 30 in sequence,and respectively conduct experiments to observe what influence these different feature dimension numbers have on the algorithms. In order to do these comparable experiments under the same parameters,except $D$,the other parameters are set to the corresponding values of the optimal algorithm performance,so we set $\beta=0.8,\lambda=20$. These experiments are made on the whole dataset,of which 80 % are randomly extracted as training data and the others as test data. The experimental results are listed in Table IV.

| Table IV RMSE COMPARISON UNDER DIFFERENT FEATURE VECTOR DIMENSION $D$ |

From Table IV,the following conclusions are drawn: 1) as feature dimension number $D$ increases,the accuracies of the four algorithms have some improvement; but with the dimension $D$ increasing continuously,their speed of improving accuracy slows down while their time complexity goes up. 2) Under the same $D$,PMF's RSME values are smaller than those of SVD and Gaussian pLSI; this further proves that PMF algorithm has better recommendation effect than other model-based collaborative filtering recommendation algorithms in the condition of sparse data. 3) Topic_MF's RSME values are much smaller than those of the former three algorithms; this shows that in the case of rating data sparseness,introducing topic relationship between projects/experts into our algorithm plays an important role on improving recommendation quality.

Experiment 2. the influence of different values of parameter ${\pmb \beta}$ on the algorithm performance

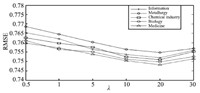

Because the rating matrix has serious data sparseness problem,in order to avoid that the nearest neighbors with low similarity impact the recommendation results,in this study,a dynamic threshold method is used to select the nearest neighbors of target project (expert). Given similarity threshold $\beta$ as 0.9,0.8,0.7,0.6,0.5,0.4 in sequence,the experiments are respectively performed on the five domain datasets to observe the influence of $\beta$ variation on the recommendation results from the proposed algorithm. In order to conduct the comparable experiments under the same parameters and reduce the complexity of the algorithm,we set $\lambda=1$ ,$D=5$. The experimental results are shown in Fig. 5.

| Download: |

| Fig. 5 The impact of parameter $\beta$ on the algorithm performance. | |

From Fig. 5,it is observed that the parameter $\beta$ has a great impact on the algorithm performance. With the parameter $\beta$ increasing,the RMSE value has constantly decreased; consequently,the recommendation quality has unceasingly improved. This also shows that the nearest neighbors with low similarity would affect the recommendation effect. In addition,when $\beta>0.8$,the RMSE begins to rise,which indicates that the accuracy begins to fall. The reason is that when the similarity threshold is too high,the nearest neighbors of target project (expert) decrease greatly,thereupon,the recommendation quality of review experts also falls.

Experiment 3. the influence of different values of parameter ${\pmb \lambda}$ on the algorithm performance

In the proposed algorithm,parameter $\lambda$ is a value to measure the degree that the feature vector of a project (an expert) is influenced by its nearest neighbors. The greater the value is,the greater the influence on the feature vector caused by its nearest neighbors,and the greater the effect on our algorithm caused by topic relationship between projects/experts. Setting $\lambda$ as 0.5,1,5,10,20,30 in sequence,we conduct experiments on the five domain datasets respectively to observe the influence of parameter $\lambda$ on the algorithm performance. In order to reduce the complexity of the algorithm,we set $D=5,\beta=0.8$,in the experiments. The experimental results are shown in Fig. 6.

| Download: |

| Fig. 6 The impact of parameter $\lambda$ on the algorithm performance. | |

According to the experimental results we can see that the parameter $\lambda$ has a great impact on algorithm performance. With parameter $\lambda$ increasing,the accuracy of the algorithm has continuously improved,and this fully proves that topic relationship between projects/experts is very effective on review expert recommendation. When $\lambda>20$,the algorithm accuracy begins to decrease; the reason is that a too large $\lambda$ leads to the algorithm over-fitting and thus affects the recommendation effect.

Experiment 4. the algorithm’s recommendation performance under different rating sparseness

In order to compare the effects of the algorithm under different sparse datasets,in this study,we divide the five domain data into three groups based on the density of rating matrix. The three groups of datasets and the density of their rating matrix are shown in Table V.

| Table V THREE GROUPS OF DATASETS AND THEIR RATING MATRIX SPARSENESS |

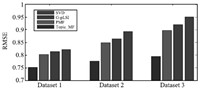

To compare the recommendation effects of SVD,Gaussian pLSI,PMF and Topic_MF under different rating sparsity,we do experiments on the three groups of datasets respectively to observe the impact of different rating sparseness on the algorithm performance. Similarly,given $\beta=0.8,\lambda=20,D=5$,the experimental results are shown in Fig. 7. As can be seen from Fig. 7,with the increasing of rating data sparseness degree,the accuracies of the four algorithms decrease. On any dataset,Topic_MF algorithm is significantly better than the other algorithms,and it can get better recommendation effect. This further proves that introducing topic relationship between projects/experts can significantly improve the performance of recommendation system.

| Download: |

| Fig. 7 RMSE comparison on different sparse dataset. | |

Experiment 5. the algorithm’s recommendation performance on new experts

In order to prove that the Topic_MF algorithm proposed in this paper can solve the recommendation problem of new experts,we randomly pick out 300 experts from the five domain data sets,remove their rating records,and then do experiments to observe the recommendation performance of SVD,G-pLSI,PMF and Topic_MF on the 300 experts. In the experiments,similarly,we set parameters $\beta=0.8,\lambda=20,D=5$ ,and the experimental results are shown in Table VI. From the experimental results we can find that Topic_MF can successfully recommend new experts while the other algorithms cannot achieve this goal.

| Table VI THE ALGORITHM' RECOMMENDATION PERFORMANCE ON NEW EXPERTS |

Experiment 6. the algorithm’s recommendation performance on differently-sized training datasets

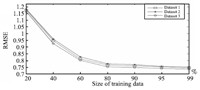

In order to verify whether the expert recommendation effect of the proposed Topic_MF algorithm is obvious related to the size of training dataset,we averagely divide the whole data set into three groups of data (Dataset 1,Dataset 2 and Dataset 3),then change the proportion of training data and test data in each group dataset,and conduct our experiments on the three groups of data respectively to observe the impact of differently-sized training data on the algorithm performance. In order to make comparable experiments under the same condition,we set $\beta=0.8,\lambda=20,D=5$,and the experimental results are shown in Fig. 8. As can be seen from Fig. 8,with size of training data increasing,the recommendation accuracy is continuously improved. When the size of training dataset reaches 80 % of its own data group,the change of RSME will be smaller and smaller. After that,with training data size increasing continually,the corresponding RSME nearly remains unchanged. Thus it can be seen that the proposed Topic_MF algorithm is relatively stable.

| Download: |

| Fig. 8 RMSE comparison on differently-sized training data. | |

V. CONCLUSION

This paper focuses on the problems of rating data sparseness and new experts' "cold start",introduces topic relationship between projects and experts,supposes that projects/experts with similar topics would have similar feature vectors,and puts forward a collaborative filtering recommendation algorithm based on topic relationship. The algorithm uses LDA to extract the topic information of projects/experts,constructs the topic relationship network of projects/experts,finds the nearest neighbor set that has the maximum similarity with target project (expert),and then integrates the neighbors into the collaborative filtering algorithm based on matrix factorization to improve the predicting accuracy of expert rating. The experimental results show that this algorithm can solve the problems of rating data sparseness and new expert recommendation well,can predict the review expert rating more effectively than the mentioned traditional algorithms,and consequently improve recommendation accuracy of review experts. In this paper,we just consider the topic relationship between projects/experts,but we do not consider the influence relationship between them. Our further work will focus on the influence relationship between projects/experts.

| [1] | Adomavicius G, Tuzhilin A. Toward the next generation of recommender systems: a survey of the state-of-the-art and possible extensions. IEEE Transactions on Knowledge and Data Engineering, 2005, 17(6): 734-749 |

| [2] | Herlocker J L, Konstan J A, Borchers A, Riedl J. An algorithmic framework for performing collaborative filtering. In: Proceedings of the 22nd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR'99. New York, NY, USA: ACM, 1999. 230-237 |

| [3] | Sarwar B, Karypis G, Konstan J, Riedl J. Item-based collaborative filtering recommendation algorithms. In: Proceedings of the 10th International Conference on World Wide Web, WWW'01. New York, NY, USA: ACM, 2001. 285-295 |

| [4] | Ungar L H, Foster D P. Clustering methods for collaborative filtering. In: Proceedings of the 1998 Workshop on Recommender Systems. Menlo Park: AAAI, 1998. 114-129 |

| [5] | Getoor L, Sahami M. Using probabilistic relational models for collaborative filtering. In: Proceedings of the 1999 Workshop on Web Usage Analysis and User Profiling. San Diego: Springer-Verlag, 1999. 83-96 |

| [6] | Chien Y H, George E I. A Bayesian model for collaborative filtering. In: Proceedings of the 7th International Workshop on Artificial Intelligence and Statistics. San Francsico, CA: Morgan Kaufmann, 1999. 187-192 |

| [7] | Hofmann T. Collaborative filtering via Gaussian probabilistic latent semantic analysis. In: Proceedings of the 26th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR'03. New York, NY, USA: ACM, 2003. 259-266 |

| [8] | Sarwar B M, Karypis G, Konstan J A, Riedl J. Application of dimensionality reduction in recommender system — a case study. In: Proceedings of the 2000 ACM-SIGKDD Conference on Knowledge Discovery in Databases, Web Mining For E-Commerce Workshop, WEBKDD'2000. Boston, MA, USA: ACM, 2000. 1-12 |

| [9] | Hofmann T. Latent semantic models for collaborative filtering. ACM Transactions on Information Systems, 2004, 22(1): 89-115 |

| [10] | Koren Y, Bell R, Volinsky C. Matrix factorization techniques for recommender systems. IEEE Computer, 2009, 42(8): 30-37 |

| [11] | Salakhutdinov R, Mnih A. Probabilistic matrix factorization. In: Proceedings of the 2007 Conference on Advances in Neural Information Processing Systems 20. Vancouver, BC, CA: Curran Associates Inc., 2007. 1257-1264 |

| [12] | Takács G, Pilászy I, Németh B, Tikk D. Matrix factorization and neighbor based algorithms for the netflix prize problem. In: Proceedings of the 2008 ACM Conference on Recommender Systems. New York, NY, USA: Association for Computing Machinery, 2008. 267-274 |

| [13] | Zhen Y, Li W J, Yeung D Y. Tagicofi: tag informed collaborative filtering. In: Proceedings of the 3rd ACM Conference on Recommender Systems. New York, NY, USA: Association for Computing Machinery, 2009. 69-76 |

| [14] | Zhou T C, Ma H, King I, Lyu M R. Tagrec: leveraging tagging wisdom for recommendation. In: Proceedings of the 12th IEEE International Conference on Computational Science and Engineering, CSE 2009. Vancouver, BC, CA: IEEE Computer Society, 2009. 194-199 |

| [15] | Ma H, Yang H X, Lyu M R, King I. SoRec: social recommendation using probabilistic matrix factorization. In: Proceedings of the 17th ACM Conference on Information and Knowledge Management, CIKM'08. New York, NY, USA: ACM, 2008. 978-991 |

| [16] | Ma H, King I, Lyu M R. Learning to recommend with social trust ensemble. In: Proceedings of the 32nd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR'09. New York, NY, USA: ACM, 2009. 203-210 |

| [17] | Jamali M, Ester M. TrustWalker: a random walk model for combining trust-based and item-based recommendation. In: Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD'09. New York, NY, USA: ACM, 2009. 397-406 |

| [18] | Guo L, Ma J, Chen Z M, Jiang H R. Learning to recommend with social relation ensemble. In: Proceedings of the 21st ACM International Conference on Information and Knowledge Management, CIKM'12. New York, NY, USA: ACM, 2012. 2599-2602 |

| [19] | Wu L, Chen E H, Liu Q, Xu L L, Bao T F, Zhang L. Leveraging tagging for neighborhood-aware probabilistic matrix factorization. In: Proceedings of the 21st ACM International Conference on Information and Knowledge Management, CIKM'12. New York, NY, USA: ACM, 2012. 1854-1858 |

| [20] | Blei D M, Ng A, Jordan M. Latent Dirichlet allocation. Journal of Machine Learning Research, 2003, 3(1): 993-1022 |

| [21] | Zhang Y M. Research on Expert-level Network Relationships Construction Integrating Explicit and Implicit Relationships [Master dissertation], Kunming University of Science and Technology, China, 2013. |

| [22] | Tian W, Shen T, Yu Z T, Guo J Y, Xian Y T. A Chinese expert name disambiguation approach based on spectral clustering with the expert page-associated relationships. In: Proceedings of the 2013 Chinese Intelligent Automation Conference. Yangzhou, JS, China: Springer, 2013. 245-253 |

| [23] | Jamali M, Ester M. A matrix factorization technique with trust propagation for recommendation in social networks. In: Proceedings of the 4th ACM Conference on Recommender Systems. New York, NY, USA: ACM, 2010. 135-142 |

| [24] | Koren Y. Factorization meets the neighborhood: a multifaceted collaborative filtering model. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD'08. New York, NY, USA: ACM, 2008. 426-434 |

2015, Vol.2

2015, Vol.2