2. School of Computer Science and Electronic Engineering, University of Essex, Colchester CO4 3SQ, UK;

3. School of Information Science and Electrical Engineering, Ludong University, Yantai 264025, China

In applications of cyber-physical systems (CPS),the dataset classification is the essential fundament of computational intelligence. The dataset in general can be achieved from sensors,images,videos and audio files. The clustering is anun supervised learning process for dataset classification,which makes a cluster including more similar data than that of other clusters. The unsupervised learning as a main algorithm in the classification can be applied to image segmentation,pattern recognition,data mining and bioinformatics.

For the dataset classification,fuzzy clustering methods aredivided into hard c-means (HCA)[1] and fuzzy c-means(FCM)[2] in the unsupervised learning. In contrast to theHCA,the FCM has better performance on the significant data presentation and clustering effect. Based on it,Wu et al.proposed a new metric which is called alternative hard c-means(AHCM) and alternative fuzzy c-means (AFCM) clusteringalgorithms[3]. The two algorithms actually modify the distance function,but the clustering result is fuzzy,because the value is less than 1 during iteration. Ahmed et al. presented a bias corrected FCM (BCFCM) algorithm[4]. This method introduces a spatial term of immediate neighborhood which allows a point to be influenced by the labels. Its weakness is high time consumption in the iteration. In addition,Chen et al.investigated a preprocessing image smoothing step based on theBCFCM,but it cannot control the trade-off between smoothing and clustering[5]. Szilagyi et al. presented an enhanced FCM(EnFCM) algorithm using biases to solve the piecewise homogeneouslabeling problem[6]. Although the relevant classification is effective,it fails in the piecewise partition according to the label interval. A semi-supervised FCM (SS-FCM) algorithm was introduced by Li et al.[7],which provided a new iterative procedure to calculate the membership labeled and unlabeledsamples with the drawback of the complex calculation. It is noted that the above mentioned FCM clustering methods fail to computer the non-robust Euclidean distance,and cannot reject noises which are inconvenient in practical applications.

Although FCM has been successfully applied in many fields,it is unable to select the fuzzy weighting exponent and to determine the priori knowledge of the cluster prototype. An example for an approach is hierarchical multi-relational clusteringalgorithm[8],which is adopted to solve one-to-manyrelationship of dataset classification. The order production scheduling method has been employed based on the subspace clusteringmixed model (SCMM)[9],and the “kernel method” was a feasible direction for the dataset classification. Kernel fuzzy c-means(KFCM) with automatic variable weighting was investigated,which uses the adaptive distances to learn the weights of the variables during the clustering[10]. Krinidis et al.,according to the window size information,presented a robust fuzzy local informationc-means (FLICM) clustering algorithm[11]. However,the noiseswere amplified due to the window size information. To extend FLICMto the higher dimensions,Krinidis et al. introduced ageneralization clustering algorithm based on the fuzzy localinformation c-means (GFLIM)[12]. On the basis of FLICM,Gong etal. developed a trade-off weighted fuzzy factor for controllingadaptively the local spatial relationship[13]. To detect the moving objects,Chiranjeevi et al. used multi-channel kernel fuzzycorrelogram based on background subtraction[14]. Alipour et al.proposed a fast spatial kernel fuzzy c-means (FKFCM) to realizeautomatic medical image segmentation by connecting it with level set method[15]. This FKFCM summarizes that kernel function attaches more weight to nearer pixels. Lu et al. reported a modified fuzzyc-means algorithm which involves a novel density-induced distancemetric based on the relative density degree to improveclassification[16]. Qiu et al. introduced an enhanced intervaltype-2 fuzzy c-means (EIT2FCM) algorithm with improved initialcenter[17]. In the aspect of robustness estimation[18, 19, 20, 21],the theory and analysis processing were described. Nevertheless,these FCM algorithms based on kernel are difficult to reduce the time consumption and optimize data center resources.

To deal with the above mentioned problems,a new robust dataset classification approach is presented based on neighbor searching and kernel fuzzy c-means (NSKFCM). The approach first adopts the neighbor searching method with the dissimilarity matrix to normalize the dataset,and the number of clusters is determined by controlling clustering shape. To ensure the stability and consistency of classification,the maximum peak method is then used to initialize the membership and the cluster prototype. The adaptive distancekernel function is next employed to get the variable weights,followed by the convergence analysis of the objective function.Because dataset classification is an essential fundament of computational intelligence in CPS,numerical experiments are validated from practical MEMS accelerometer and the image segmentation to reduce the impact of parameters uncertainties with the complex datasets. The experimental results demonstrate the feasibility and robustness of NSKFCM.

The remainder of the paper is organized as follows. Section IIbriefly describes the fundamental kernel FCM algorithms. TheNSKFCM approach and robustness estimation are described in SectionIII. Experimental results and comparative analysis are given inSection IV and the conclusions are summarized in Section V.

II. KERNEL FUZZY C-MEANS ALGORITHMThis section presents the notion of the fundamental kernel fuzzyc-means (KFCM) clustering algorithms[14].

A. Clustering Objective FunctionLet $R = \left\{ {{x_1},{x_2},...,{x_n}} \right\}$ be a dataset,the objective function based on fuzzy clusteringis[2]

| \[O\left( {U,V} \right) = \sum\limits_{k = 1}^n {\sum\limits_{i = 1}^c {{{\left( {{u_{ik}}} \right)}^m}{{\left( {{d_{ik}}} \right)}^2}} } ,\] | (1) |

where the array $U = \left\{ {{u_{ik}}} \right\}$ represents a membership matrix satisfying

| \[\left\{ {\begin{array}{*{20}{l}} {{u_{ik}} \in \left[ {0,1} \right],\forall i,k,} \\ {\sum\limits_{i = 1}^c {{u_{ik}}} = 1,\forall k,} \end{array}} \right.{\text{ }}\] | (2) |

and $V = \left\{ {{v_i}} \right\}_{i = 1}^c$ denotes the vector of the cluster prototype. The parameter $m \in \left( {1,\infty }\right)$ is the weighting exponent,which determines the fuzziness number of classification. ${d_{ik}}$ is the distance measure between ${x_k}$ and ${v_i}$,defined by

| \[{\left( {{d_{ik}}} \right)^2} = {\left\| {{x_k} - {v_i}} \right\|^2}_A = {\left( {{x_k} - {v_i}} \right)^{\text{T}}}A\left( {{x_k} - {v_i}} \right).{\text{ }}\] | (3) |

Here,${\left[A \right]_{n \times n}}$ is a symmetric positivedefinite matrix. If $A$ is a unit matrix,the distance measure denotes the Euclidean distance.

FCM is non-robust because it ignores spatial contextual information and uses Euclidean distance. The feature space is the ninvestigated,which uses a nonlinear mapping $\Phi $ from the input space dataset $R = \left\{ {{x_1},{x_2},... ,{x_n}}\right\}$ to a higher-dimensional space dataset $F$. So that thenonlinear mapping is simply represented by $\Phi : R \to F$. Since the key idea of kernel method is unnecessary to explicitly specify$\Phi $,the objective function of KFCM in feature space (KFCM-F)is expressed by [22]

| \[O\left( {U,V} \right) = \sum\limits_{k = 1}^n {\sum\limits_{i = 1}^c {{{\left( {{u_{ik}}} \right)}^m}{{\left\| {\Phi \left( {{x_k}} \right) - \Phi \left( {{v_i}} \right)} \right\|}^2}} } .\] | (4) |

The kernel function $K$ is symmetric (i.e.,$K\left( {{x_k},{x_i}}\right) = K\left( {{x_i},{x_k}} \right)$),and $K\left({{x_k},{x_i}} \right) = \Phi {\left( {{x_k}} \right)^{\rm T}}\Phi\left( {{x_i}} \right)$. Setting $d\left( {{x_k},{v_i}} \right)\buildrel \Delta \over = \left\| {\Phi \left( {{x_k}} \right) -\Phi \left( {{v_i}} \right)} \right\|$,the objective function ofKFCM-F is equal to

| \[\begin{array}{*{20}{l}} {O\left( {U,V} \right) = \sum\limits_{k = 1}^n {\sum\limits_{i = 1}^c {{{\left( {{u_{ik}}} \right)}^m}{{\left\| {\Phi \left( {{x_k}} \right) - \Phi \left( {{v_i}} \right)} \right\|}^2}} } } \\ { = \sum\limits_{k = 1}^n {\sum\limits_{i = 1}^c {{{\left( {{u_{ik}}} \right)}^m}\left\{ {K\left( {{x_k},{x_k}} \right) - 2K\left( {{x_k},{v_i}} \right) + K\left( {{v_i},{v_i}} \right)} \right\}} } .} \end{array}\] | (5) |

For any $i \in \left\{ {1,2,... ,c} \right\}$,${u_{ik}}$ is the fuzzy membership degree and it satisfies $\sum\nolimits_{i =1}^c {{u_{ik}}} = 1$. Based on the FCM membership[22]${u_{ik}}$,which is given by

| \[{u_{ik}} = {\left( {\sum\limits_{j = 1}^c {{{\left( {\frac{{\left\| {{x_k} - {v_i}} \right\|}}{{\left\| {{x_k} - {v_j}} \right\|}}} \right)}^{\frac{2}{{m - 1}}}}} } \right)^{ - 1}}.\] | (6) |

The KFCM-F membership degree is defined as follow:

| \[\begin{array}{*{20}{l}} {{u_{ik}} = {{\left( {\sum\limits_{j = 1}^c {{{\left( {\frac{{\left\| {\Phi \left( {{x_k}} \right) - \Phi \left( {{v_i}} \right)} \right\|}}{{\left\| {\Phi \left( {{x_k}} \right) - \Phi \left( {{v_j}} \right)} \right\|}}} \right)}^{\frac{2}{{m - 1}}}}} } \right)}^{ - 1}}} \\ { = {{\left( {\sum\limits_{j = 1}^c {{{\left( {\frac{{\tilde K\left( {{x_k},{x_k}} \right) - 2\tilde K\left( {{x_k},{v_i}} \right) + \tilde K\left( {{v_i},{v_i}} \right)}}{{\tilde K\left( {{x_k},{x_k}} \right) - 2\tilde K\left( {{x_k},{v_j}} \right) + \tilde K\left( {{v_j},{v_j}} \right)}}} \right)}^{\frac{1}{{m - 1}}}}} } \right)}^{ - 1}}.} \end{array}\] | (7) |

The cluster prototype ${v_i}$ is obtained as

| \[{v_i} = \frac{{\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} {x_k}\tilde K\left( {{x_k},{v_i}} \right)}}{{\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} \tilde K\left( {{x_k},{v_i}} \right)}},\] | (8) |

where $\tilde K\left( {{x_k},{v_i}} \right)$ expresses different kernel function. The distance measure is rewritten as following by using the Gaussian kernel function

| \[\begin{array}{*{20}{l}} {d{{\left( {{x_k},{v_i}} \right)}^2} = {{\left\| {\Phi \left( {{x_k}} \right) - \Phi \left( {{v_i}} \right)} \right\|}^2}} \\ { = 2\left( {1 - K\left( {{x_k},{v_i}} \right)} \right),} \\ {O\left( {U,V} \right) = \sum\limits_{k = 1}^n {\sum\limits_{i = 1}^c {{{\left( {{u_{ik}}} \right)}^m}{{\left\| {\Phi \left( {{x_k}} \right) - \Phi \left( {{v_i}} \right)} \right\|}^2}} } } \\ { = 2\sum\limits_{k = 1}^n {\sum\limits_{i = 1}^c {{{\left( {{u_{ik}}} \right)}^m}\left( {1 - K\left( {{x_k},{v_i}} \right)} \right)} } .} \end{array}\] | (9) |

Note that the KFCM algorithm based on Gaussian kernel function is sensitive to the noise.

III. THE ROBUST DATA CLASSIFICATION APPROACHSeveral methods based on FCM need to determine the number of clusters,the iterations and the membership initialized randomly,in advance. Although it is difficult to exactly determine the separameters,they have a great effect on the results. Zhanget.al.[23] adopt the weighted and hierarchical affinitypropagation (WAP) to detect the changes of the data stream in realtime,but the robustness does not be verified. Taking into accountthese reasons,a robust dataset classification approach isproposed based on neighbor searching and kernel FCM (NSKFCM).

A. Neighbor SearchingDefinition 1. The dissimilarity matrix (also called distancematrix)[24] describes pairwise distinction between $n$objects. It is a square symmetrical $n \times n$ matrix and can berepresented by ${\left[ {d\left( {i,j} \right)} \right]_{n \times n}}$,where $d\left( {i,j} \right) \ge 0$,$d\left( {i,i} \right)= 0$ and $d\left( {i,j} \right) = d\left( {j,i} \right)$.

If an asymmetrical relation exists between two objects (i.e.${x_i}$ and ${x_j}$),employing a simplicial scalar (such as distance) is insufficient to further analyze the correlation between these two objects. The dissimilarity matrix is thus introduced to the initial data in NSKFCM approach. Given a dataset$R = \left\{ {{x_1},{x_2},... ,{x_n}} \right\}$ consisting of$n$ objects,$\max \left( x \right)$ and $\min \left( x \right)$represent the maximum and minimum of the dataset,respectively.${x_i}'$ represents the normalized object of ${x_i}$,which is defined as ${x_i}^\prime = \frac{{{x_i} - \min \left( x \right)}}{{\max \left( x \right) - \min \left( x \right)}}$. It represents the relative location of ${x_i}$ between the maximum and minimum. Hence the dissimilarity distance $d\left( {i,j}\right)$ is given as $d\left( {i,j} \right) = \sqrt {\alpha{{\left( {{x_i}' - {x_j}'} \right)}^2}} $,where $\alpha $ is the positive weighting coefficient. The dissimilarity matrix $D$ is accordingly structured as

| \[D = \left[ {\begin{array}{*{20}{l}} {d\left( {1,1} \right)d\left( {1,2} \right)...d\left( {1,n} \right)} \\ {d\left( {2,2} \right)...d\left( {2,n} \right)} \\ { \ddots \vdots } \\ {...d\left( {n,n} \right)} \end{array}} \right].\] | (10) |

Equation (10) establishes the relative distance of the distinct objects,but it is difficult to make the subsequent clustering analysis. In order to facilitate the subsequent analysis,the dissimilarity matrix needs to be normalized. Based on the dissimilarity distance,the Jaccard measure[25, 26] is usedfor interpreting the similarity of the distinct objects. The normalization matrix ${\left[G \right]_{n \times n}}$ is the nspecified as

| \[{G_{i,j}} = 1 - \frac{1}{n}\sum\limits_{j = 1}^n {\left| {{x_i}^\prime - {x_j}^\prime } \right|} ,\] | (11) |

here the mean distance of the normalized objects is involved,which can better demonstrate the object's detailed information.The normalization matrix is a symmetric matrix,which takes values from 0 to 1,and it contains the relationship of ${G_{i,j}} ={G_{j,i}}$. With the similarity matrix,the original dataset $R$is normalized by ${\left[G \right]_{n \times n}}$.

Definition 2. For the dataset $R = \left\{ {{x_1},{x_2},... ,{x_n}} \right\}$ and any object ${x_i}$,$2 \le i \le n -1$,objects ${x_{i - 1}}$ and ${x_{i + 1}}$ are called the neighbors of ${x_i}$. If $i = 1$,the neighbor of ${x_1}$ is${x_2}$,and if $i = n$,the neighbor of ${x_n}$ is ${x_{n - 1}}$.

Definition 3. Referring to [27$-$28],for a dataset $R =\left\{ {{x_1},{x_2},... ,{x_n}} \right\}$,setting the object$y$ and the distance $r$,if any object ${x_i}$ satisfies $d\left({{x_i},y} \right) \le r$,then ${x_i}$ is the direct neighbor of$y$. The entire neighbors set of object $y$ are represented by${D_y}$,and $0 \le r \le 1$ adopts the Jaccard coefficient. It is the maximum distance if two objects are neighbors.

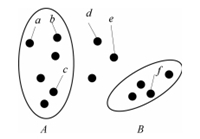

As shown in Fig. 1,the given dataset is divided into two clusters,$A = \left\{ {a,b,c,... } \right\}$ and $B = \left\{{f,... } \right\}$.

|

Download:

|

| Fig. 1. Clustering example. | |

The neighbor searching method is able to traverse the objects. If$d\left( {x,y} \right) \le r$,the object $x$ and $y$ are neighborin the same neighbor set. Supposing $r = 0.65$,$d\left( {a,b}\right) = 0.50$,$d\left( {b,c} \right) = 0.60$,$d\left( {c,d}\right) = 0.63$,$d\left( {d,e} \right) = 0.50$ and $d\left( {e,f}\right) = 0.62$,the objects $b$,$c$,$d$,$e$ and $f$ will be sequentially merged into $A = \left\{ {a,b,c,d,e,f,... }\right\}$. Considering this,the neighbor searching depending only on the distance cannot be properly achieved. The parameter of controlling cluster shape is then presented in Definition 4.

Definition 4. Given a dataset $A = \left\{ {{x_1},{x_2},...,{x_m}} \right\}$ includes $m$ objects,and $y$ is another object,if $d\left( {{x_i},y} \right) \le r$,then ${X_i} = 1$,else ${X_i} = 0$. The other object $y$ is merged into $A$satisfying $\left( {\sum\nolimits_{i = 1}^m {{X_i}} } \right)/m \geqslant \xi $[28]. $\xi$ is named the parameter of controlling clustering shape and $0 \le \xi \le 1$.

Considering the above definitions and Fig. 1,the neighbor sets of$a$,$b$ and $c$ are expressed as ${D_a}$,${D_b}$ and ${D_c}$,respectively. The given three neighbors have $m = 3$. The analysisfor $e$ can be merged into $A$ are shown as follows.

1) If $\xi m = 1$,then $\sum\nolimits_{i = 1}^m {{X_i}} = 1$,i.e.,$d\left( {a,e} \right) \le r$,or $d\left( {b,e} \right) \leqslant r$,or $d\left( {c,e} \right) \le r$. The condition is $e \in\left( {{D_a} \cup {D_b} \cup {D_c}} \right)$.

2) If $\xi m = 2$,then $\sum\nolimits_{i = 1}^m {{X_i}} = 2$,i.e.,$d\left( {a,e} \right) \leqslant r \cap d\left( {b,e} \right) \leqslant r$,or $d\left( {b,e} \right) \leqslant r \cap d\left( {c,e} \right) \leqslant {\text{r}}$,or $d\left( {c,e} \right) \leqslant r \cap d\left( {a,e} \right) \leqslant r$. The condition satisfies $e \in \left( {\left( {{D_a} \cap{D_b}} \right) \cup \left( {{D_b} \cap {D_c}} \right) \cup \left({{D_a} \cap {D_c}} \right)} \right)$.

3) If $\xi m = 3$,then $\sum\nolimits_{i = 1}^m {{X_i}} = 3$,i.e.,$d\left( {a,e} \right) \le r$,$d\left( {b,e} \right) \le r$and $d\left( {c,e} \right) \le r$,satisfying $e \in \left( {{D_a}\cap {D_b} \cap {D_c}} \right)$.

The analysis indicates that if $\xi$ is larger,the clustering region is smaller,and the number of clusters is more. The neighbor searching method is used to ascertain the number of clusters.

B. Initialization of Membership and Cluster PrototypeThe majority of FCM clustering algorithms employ the member shipinitialized randomly,the clustering result is then varied each time. NSKFCM involves the maximum peak method to search the cluster prototype. Given a dataset $R = \left\{ {{x_1},{x_2},... ,{x_n}} \right\}$,the maximum peak method,whose datasetis defined as $P = \left\{ {{p_1},{p_2},... ,{p_k}}\right\}_{k = 2}^{n - 1}$,will be executed in the following steps.

1) Search the maximum peak by $\mathop {p_k} = \arg{\max\nolimits_x} \left\{ {{x_{i - 1}},{x_i},{x_{i + 1}}} \right\}\subseteq P,2 \le i \le n - 1$,here $k$ expresses the number of maximum peak.

2) Calculate the sum of each neighbor set.

| \begin{array}{l}\sum {{p_k}} = \sum\limits_{i = r}^t {{x_i}} ,\\r = \frac{{p_{k - 1}} + {p_k}}{2}+ 1,\\t = \frac{{p_k} + {p_{k + 1}}}{2}.\end{array} | (12) |

3) Remove the minimum peak. $c$ is the number of clusters with $c\in \left[{1,n} \right]$. If $k > c$,the minimum peak is removedfrom $P$. Set as $k = k - 1$ and return to 2).

4) Confirm the initialized cluster prototype. If $k = c$,it is defined as

| \begin{align}{v_i} = \frac{1}{t}\sum\limits_{j = 1}^t {{x_j}} ,\quad i = 1,2,... ,c ,\end{align} | (13) |

where $t$ is the interval of every cluster prototype.

5) Calculate the initialized membership given in (6),wherein${d_{ik}} \buildrel \Delta \over = \left| {{x_{ik}} - {v_i}}\right|$ represents the distance.

The cluster prototype vector and membership matrix have been initialized,consequently,they are the fixed values to ensure the stability of classification.

C. Optimization of Membership and Cluster PrototypeThe traditional kernel clustering methods do not take into a ccountthe relevance weights of the variables[14]. NSKFCM adapts theGaussian kernel function to apply each variable. The kernel function is written as (14).

| \[K\left( {{x_k},{v_i}} \right) = \exp \left( { - \frac{{{{\left\| {{x_k} - {v_i}} \right\|}^2}}}{{2{\sigma ^2}}}} \right).\] | (14) |

The approach investigates an optimized adequacy criterion of objective function,which can be defined as

| \[O = \sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}{\varphi ^2}\left( {{x_k},{v_i}} \right)} } .\] | (15) |

In accordance with the dissimilarity matrix,${\varphi ^2}\left({{x_k},{v_i}} \right)$ is equal to

| \[{\varphi ^2}\left( {{x_k},{v_i}} \right) = \sum\limits_{j = 1}^t {{\lambda _j}{{\left\| {\phi \left( {{x_{kj}}} \right) - \phi \left( {{v_{ij}}} \right)} \right\|}^2}} ,\] | (16) |

where $\lambda = \left\{ {{\lambda _1},{\lambda _2},...,{\lambda _t}} \right\}$ is the variable set,and $\left\{\begin{array}{l}{\lambda _j} > 0,\forall j,\\\prod\limits_{j = 1}^t {{\lambda _j}} = 1\end{array} \right. .$

In the adaptive case,the objective function depending on the kernel function and variable set is rewritten as

| \[\begin{array}{*{20}{l}} {O\left( {U,V,\lambda } \right) = \sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}{\varphi ^2}\left( {{x_k},{v_i}} \right)} } } \\ { = \sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\sum\limits_{j = 1}^t {{\lambda _j}{{\left\| {\phi \left( {{x_{kj}}} \right) - \phi \left( {{v_{ij}}} \right)} \right\|}^2}} } } } \\ { = \sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\sum\limits_{j = 1}^t {{\lambda _j}\left( {2\left( {1 - K\left( {{x_{kj}},{v_{ij}}} \right)} \right)} \right)} } } } \\ { = \sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\sum\limits_{j = 1}^t {{\lambda _j}} } } } \\ {\left( {2\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} \right).} \end{array}\] | (17) |

Proposition 1. For the distance function (16),and if$K\left( { \cdot ,\cdot } \right)$ is the Gaussian kernel,then with fixed $V$ and $\lambda $,the fuzzy membership degree${u_{ik}}\left( {k = 1,2,...,n,i = 1,2,... ,c} \right)$,which minimizes the objective function $O\left( {U,V,\lambda }\right)$ given in (17),under ${u_{ik}} \in \left[{0,1}\right],\forall i,k$ and $\sum\nolimits_{i = 1}^c {{u_{ik}}} = 1,\forall k$,satisfies the local optimum expressed by

| \[{u_{ik}} = \sum\limits_{h = 1}^c {{{\left( {\frac{{\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{hj}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}{{\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}} \right)}^{\frac{1}{{m - 1}}}}} .\] | (18) |

The proof is given in Appendix A.

Proposition 2. For the distance function (16),and $K\left({ \cdot ,\cdot } \right)$ as the Gaussian kernel,and with fixed$U$ and $\lambda $,then the cluster prototype ${v_{ij}}\left( {i= 1,2,... ,c,j = 1,2,... ,t} \right)$,which minimizes the objective function $O\left( {U,V,\lambda } \right)$ given in (17),is optimized by the following expression:

| \[{v_{ij}} = \frac{{\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} \sum\limits_{j = 1}^t {{\lambda _j}\left( {\exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} {x_{kj}}}}{{\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} \sum\limits_{j = 1}^t {{\lambda _j}\left( {\exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}.\] | (19) |

The proof is given in Appendix B.

NSKFCM based on adaptive distances is used for determining the weights of the variables. Having fixed the fuzzy membership matrix$U$ and cluster prototype vector $V$,minimizing the objective function $O\left( {U,V,\lambda } \right)$ is constrained by the weights of the variables.

Proposition 3. If the adaptive distance function is given by(16),the vector of weight $\lambda = \left( {{\lambda_1},{\lambda _2},... ,{\lambda _t}} \right)$,which minimizes the objective function (17) under ${\lambda _j} > 0,\forall j$ and$\prod\nolimits_{j = 1}^t {{\lambda _j}} = 1$,${\lambda_j}\left( {j = 1,2,... ,t} \right)$ is updated according to the following expression:

| \[\begin{array}{*{20}{l}} {{\lambda _j} = } \\ {\frac{{{{\left( {\prod\limits_{h = 1}^t {\left( {\sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {u_{ik}^m\left( {1 - \exp \left( { - \frac{{{x_{kj}} - {v_{hj}}{^2}}}{{2{\sigma ^2}}}} \right)} \right)} } } \right)} } \right)}^{\frac{1}{t}}}}}{{\sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {u_{ik}^m\left( {1 - \exp \left( { - \frac{{{x_{kj}} - {v_{ij}}{^2}}}{{2{\sigma ^2}}}} \right)} \right)} } }}.} \end{array}\] | (20) |

The proof is achieved in Appendix C.

Remark 1. It is important to note that NSKFCM converges to alocal minimum or a saddle point.

Considering the convergence theory for fuzzy c-means[29],the relationship of the objective function (17) between neighboring objects,under Propositions 1,2,and 3 given in (18),(19),and(20),is presented as the following form:

| \[\begin{gathered} O\left( {{U^q},{V^q},{\lambda ^q}} \right) \geqslant O\left( {{U^{q + 1}},{V^q},{\lambda ^q}} \right) \hfill \\ \quad \geqslant O\left( {{U^{q + 1}},{V^{q + 1}},{\lambda ^q}} \right) \geqslant O\left( {{U^{q + 1}},{V^{q + 1}},{\lambda ^{q + 1}}} \right). \hfill \\ \end{gathered} \] | (21) |

This conclusion holds that the bounded objective function $O\left({U,V,\lambda } \right)$ is a decreasing function during iteration.NSKFCM will eventually converge to a local minimum or a saddle point.

D. Robustness EstimationAn excellent clustering algorithm should be robust to reject theubiquitous exogenous noises with the uncertainoutliers[18, 19]. Since the objective functions of NSKFCMprobably have several local optimums,it is necessary to establishrobust estimator for evaluating the impacts of the critical parameters. The considered fundamental robust estimator isM-estimator,which can facilitate an optimizer for the objective function[30].

Given a random dataset as $R = \left\{ {{x_i},i = 1,2,... ,n}\right\}$,with the aid of M-estimator,the estimation parameter$\theta $ is designed as

| \[\hat \theta = \frac{{\sum\limits_{i = 1}^n {w\left( {{d_i}} \right){x_i}} }}{{\sum\limits_{i = 1}^n {w\left( {{d_i}} \right)} }},\] | (22) |

where ${d_i} = d\left( {{x_i},\theta } \right)$,w$\left( {{d_i}} \right)$= $F\left( \theta \right)/\theta $and $F\left( \theta \right) = {\frac{{\rm d}\rho( \theta)}{{\rm d}\theta }}$.

The robust estimator can be constructed by designing a suitable$\rho \left( \theta \right)$,which could be engaged to evaluatethe impact of parameters uncertainties. The M-estimator hasdemonstrated the influence function (IF) of the local optimization[3] with

| \[IF\left( {x,F,\theta } \right) = \frac{{\varphi \left( {x - \theta } \right)}}{{\int {\varphi '\left( {x - \theta } \right)d{F_X}\left( x \right)} }},\] | (23) |

where ${F_X}\left( x \right)$ represents the distribution functionof $X$. The influence function is the important measure of robustness,and if the estimator is bounded,the parameters uncertainties are robust.

Based on (18) $-$ (20),it is concluded that the unified form(i.e.,$\left( {{x_{kj}} - {v_{ij}}} \right)$) can be involved in the Gaussian kernel. Therefore,according to the NSKFCM approach,a narbitrary uncertain function $F\left( \theta \right)$ is considered as

| \[F\left( \theta \right) = \sum\limits_{i = 1}^n {\rho \left( {{x_i} - \theta } \right)} .\] | (24) |

With the M-estimator[31],the differential of $F\left( \theta\right)$ over $\theta $ is obtained as

| \[\frac{{\partial F\left( \theta \right)}}{{\partial \theta }} = \sum\limits_{i = 1}^n {\frac{\partial }{{\partial \theta }}\rho \left( {{x_i} - \theta } \right)} = \sum\limits_{i = 1}^n {\varphi \left( {{x_i} - \theta } \right)} .\] | (25) |

Due to the Gaussian kernel includes the unified form given in(24),design $\rho \left( {x - \theta } \right) = \lambda \left({1 - K\left( {x,\theta } \right)} \right) = \lambda \left( {1 -K\left( {x - \theta } \right)} \right)$ and have the M-estimator as

| \[\begin{gathered} \varphi \left( {x - \theta } \right) = \frac{\partial }{{\partial \theta }}\lambda \left( {1 - K\left( {x,\theta } \right)} \right) \hfill \\ \quad = \frac{\partial }{{\partial \theta }}\lambda \left( {1 - \exp \left( { - \frac{{{{\left\| {x - \theta } \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right) \hfill \\ \quad = - \frac{{\lambda \left( {x - \theta } \right)}}{{{\sigma ^2}}}\exp \left( { - \frac{{{{\left\| {x - \theta } \right\|}^2}}}{{2{\sigma ^2}}}} \right). \hfill \\ \end{gathered} \] | (26) |

Applying the L'Hopital's rule,the bounded value is obtained as

| \[\mathop {\lim }\limits_{x \to \infty } \varphi \left( {x - \theta } \right) = \mathop {\lim }\limits_{x \to - \infty } \varphi \left( {x - \theta } \right) = 0.\] | (27) |

The function $\varphi \left( {x - \theta } \right)$ with (26) is bounded and continuous. It is proved that the involved M-estimatordesigns a bounded and continuous influence function,and the impacts of parameters uncertainties are robust.

To qualitatively evaluate the robustness against the parameters uncertainties,the impact of $\rho \left( {x - \theta } \right)$is analyzed by the following numerical examples.

The calculated results of prototype function and M-estimator are illustrated in Figs. 2 (a) and 2 (b),respectively. Fig. 2(a) shows that the maximum of $\rho \left( {x - \theta } \right)$ isexisted and bounded. Fig. 2 (b) demonstrates that the parameter$\theta $ fluctuates smoothly due to $\mathop {\lim }\nolimits_{x\to \infty } \varphi \left( {x - \theta } \right) = 0$ and the evolution curve converges to the equivalent point 0. From(18) $-$ (20),it indicates that the proposed method reflects the good robustness against the parameters uncertainties of ${u_{ij}}$,$v{}_{ij}$ and ${\lambda _j}$.

|

Download:

|

| Fig. 2. Convergence effect analysis. | |

The proposed NSKFCM approach is executed as the following steps.

Algorithm 1. NSKFCM algorithm.

Input. The distance $r$ and the factor for controlling cluster shape $\xi $;

The kernel method parameter $\sigma $;

The stop threshold $\varepsilon $ and fuzzy weighting exponent$m$;

Output.

The fuzzy membership matrix $U$,the cluster prototype vector $V$ and the objective value $O\left( {U,V,\lambda} \right)$;

1) Determine the number of clusters by setting $r$ and $\xi $adopting to the neighbor searching method.

2) Initialize $U$ and $V$ in the $i$-th cluster dataset,under(6),(13) and the number of clusters.

3) Set the bandwidth parameter $\sigma $ and the fuzzy weighting exponent $m$,with $0 < \varepsilon \ll 1$.

4) Update $U$ in accordance with (18).

5) Update $V$ according to (19).

6) Update $\lambda $ depending on (20).

7) If the stopping criterion satisfies $\left\| {{O^{iter + 1}} -{O^{iter}}} \right\| \leq \varepsilon $,then stop the iteration,and output the fuzzy membership matrix $U$ and cluster prototypevector $V$. Otherwise,set as $iter = iter + 1$ and return to 4).

8) return $U$,$V$ and $O\left( {U,V,\lambda } \right)$.}

IV. EXPERIMENT VERIFICATIONThe experiment section will describe the dataset classification approach which is achieved to the dynamic behavior of MEMSaccelerometer and the landscape images segmentation.

A. Experiment 1:MEMS Accelerometer DatasetMEMS accelerometer is a kind of sensor. The top view of the accelerometer is shown as Fig. 3 The accelerometer is an ARMCortex-M3 micro controller.

|

Download:

|

| Fig. 3. The detectable direction. | |

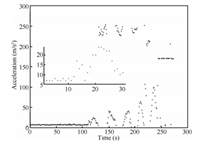

The datasets achieved from the MEMS accelerometer are three matrices on the $X/Y/Z$ axes directions. The dynamics in the horizontal and vertical orientations are shown in Fig. 4,which represents the movement time and acceleration,respectively. Froma large number of experiments,the movement directions including horizontal,vertical,up and down,are obtained,whereas the runtime of the accelerometer is 5 minutes.

|

Download:

|

| Fig. 4. The dynamic behavior on $Y$axis. | |

NSKFCM is able to solve how to classify the dataset from noise sand error values. A group of experiments are performed to compareNSKFCM with other methods,including AFCM[3],EnFCM[6],KFCM[14],FKFCM[15],FLICM[11].

The parameters are set in accordance with a large number of verifications,in general,given the weighting exponent $m = 2$,thestop threshold $\varepsilon = 1 \times {10^{ - 5}}$,the bandwidth parameter $\sigma = 15$,the maximum of iteration $iter = 200$,andthe number of clusters $cluster\_num = 4$. In addition,for theNSKFCM,when the critical parameter $r$ and $\xi $ are set as different values,the number of clusters is different. The numerical experiments are shown as Figs. 5 (a)-(h).

|

Download:

|

| Fig. 5.The dataset classification of eachalgorithm. | |

Comparing the experimental results of the six methods,the classification effects are reflected by the following aspects.

1) AFCM employs a new metric distance whose value is less than 1during iteration. The dataset is classified to one class,consequently,the algorithm cannot accomplish the dataset classification for the accelerometer dynamic behavior.

2) EnFCM is efficient,which involves the biased solution toward piecewise homogeneous labeling. Since the result is piecewise partition according to the label interval,the algorithm does notdistinguish the dynamic behavior of acceleration dataset.

3) The distance metric of KFCM adopts the space data kernelfunction. This factor depends on the spatial symmetry data,sothat the classification is influenced by more noises and outliers.

4) The major characteristic of the FKFCM is the kernel function which has given greater weight value to the neighbor data.However,the number of clusters needs to be judged based on experience.

5) More local context information are used to reduce the iterations for FLICM algorithm. However,the local coefficient of variation is unreasonable to ignore the influence of spatial constraints.

6) NSKFCM sets three groups of parameters. Given different $r$ and$\xi $,the dataset are divided into different classes. The clustering results are accurate. MEMS accelerometer dataset is able to be automatically judged by the number of clusters and accurately classified the dynamic behavior of acceleration dataseton different times.

The optimal effectiveness indicators (OEI) are proposed to analyze the robustness. It expresses the average of the correctly classified data,OEI is greater as well as the classification is more accurate.

| \[OEI = \frac{1}{n}\sum\limits_{k = 1}^n {\sum\limits_{i = 1}^c {{{\left( {{u_{ik}}} \right)}^2}} } .\] | (28) |

Table I shows the quantitative comparison indicators of the experiments on the MEMS accelerometer dataset for the accuracy of the dataset classification.

|

|

Table I NUMERICAL RESULTS ON THE PRACTICAL MEMS DATASET |

From the measures of Table I,it indicates that NSKFCM is superior to the other compared algorithms on the aspects of OEI,the time consumption and the number of iterations. The results illustrate that the proposed NSKFCM approach possesses the optimal performance index for dataset classification.

B. Experiment 2: Landscape Images SegmentationThe dataset classification is applied to CPS. In the computervision,the 2D dataset from $X/Y$ axis of accelerometer is similar to the 2D image. Therefore,these data are treated as classification for image segmentation.

In the coming experiments,four methods are applied to threelandscape images. The first natural image contains mountain and cloud,with 304 $\times$ 208 pixels.

The number of clusters is 4 as shown as Figs. 6 (b)-(d),which are the segmentation results obtained by EnFCM,KFCM and FLICM,respectively. Figs. 6 (e)-(f) display the NSKFCM segmentation results. The numbers of clustering are 2 and 4,when $r = 0.5$,$\xi= 0.4$ and $r = 0.5$,$\xi = 0.7$. The image segmentation results illustrate that the proposed approach is robust and maintains the clear image edges and the more details.

|

Download:

|

| Fig. 6. The segmentation result of original landscape contains mountain and cloud. | |

Fig. 7 (a) shows the original landscape image containing cloud,plain and forest,with 308 $\times$ 200 pixels.Figs. 7 (b)-(d) demonstrate the segmentation results,the number of clusters is 4. For NSKFCM,when $r = 0.6$,$\xi = 0.3$ and $r =0.6$,$\xi = 0.7$,the numbers of clustering are 3 and 7. From Figs. 7 (e)-(f),the experiment results show that NSKFCM is able to reject noises while preserving significant image details and obtain superior performance. In particular,it is relatively independent of the type of textures.

|

Download:

|

| Fig. 7. The segmentation result of original landscape contains cloud,plain and forest. | |

Finally,Figs. 8 (a)-(f) validate the segmentation results of original landscape image including cloud,mountain,plain and tree,with 308 $\times$ 207 pixels. Figs. 8 (b)$-$(d) are the segmentation results obtained by EnFCM,KFCM and FLICM. Shown in Figs. 8 (e)-(f),the factors are modified as $r = 0.6$,$\xi =0.5$ and $r = 0.6$,$\xi = 0.9$,the segmentation results are 4classes and 7 classes,respectively. The clear image edges are obtained and the landscape types are segmented.

|

Download:

|

| Fig. 8. The segmentation result of original landscape contains cloud,mountain,plain,and tree. | |

In order to evaluate the performance of these four methods andanalyze the robustness,some optimal indicators are introduced.The reconstruction rate (RR) defines the image reconstructedindicator as

| \[\begin{gathered} RR\left( I \right) = \frac{1}{{size}} \times \sum\limits_{i = 1}^{row} {\sum\limits_j^{column} {{{\left( {\frac{{\sum\limits_{k = 1}^c {{{\left( {{u_{ijk}}} \right)}^m} \times {v_k}} }}{{\sum\limits_{k = 1}^c {{{\left( {{u_{ijk}}} \right)}^m}} }} - {I_{ij}}} \right)}^2}} } , \hfill \\ i = 1,...,row,j = 1,...,column, \hfill \\ size = row \times column. \hfill \\ \end{gathered} \] | (29) |

Average max membership (AMM) is expressed as

| \[AMM\left( I \right) = \frac{1}{{size}}\max \left( {{u_k}} \right).\] | (30) |

The quality (Q) of the image segmentation is described as

| \[Q\left( I \right) = \frac{1}{{size}}\sum\limits_{i = 1}^{row} {\sum\limits_{j = 1}^{column} {\sqrt {\frac{k}{{count\left( {{I_k}} \right)}}} {{\left( {{v_k} - {I_{ij}}} \right)}^2}} } .\] | (31) |

The average of membership variance (AMV) is represented as

| \[AMV\left( I \right) = \frac{1}{{size}}\sum\limits_{k = 1}^c {\sum\limits_{i = 1}^{row} {\sum\limits_{j = 1}^{column} {{{\left( {{u_{ijk}}} \right)}^2}} } } .\] | (32) |

The average of the membership difference between maximum value and current value (AMD) is shown

| \[AMD\left( I \right) = \frac{1}{{size}}\sum\limits_{k = 1}^c {\sum\limits_{i = 1}^{row} {\sum\limits_{j = 1}^{column} {\left( {\max \left( {{u_k}} \right) - {u_{ijk}}} \right)} } } .\] | (33) |

Table II compares the quantitative comparison indicators of the experiments on three natural images for the accuracy of imagesegmentation. From the performance parameters shown in Table II,NSKFCM adopts the neighbor searching method with the dissimilarity matrix,and the adaptive distance kernel function is employed to evolve the variable weights. It does not require a preset the number of clusters,which is determined by $r$ and $\xi $.Furthermore,kernel function performs a nonlinear dissimilarity matrix into high dimensional feature space,it increases the probability of the linear segmentation within the transformedspace. To ensure the stability and consistency of the classification,the maximum peak method is used for initializing the membership degree and cluster prototype. The segmentation results of NSKFCM possesses more excellent performance,lessiterations number,lower time consumption and higher efficiency than the other methods.

|

|

Table II NUMERICAL RESULTS OF THE FOUR METHODS ON THREE LANDSCAPE IMAGES |

With the aid of neighbor searching and kernel fuzzy c-means methods,the corresponding dataset classification application forCPS is discussed in this work. A novel robust dataset classification approach is investigated for accurately classifying the complex dataset of CPS. Compared with the existingliteratures,the proposed method shows better adaptiveness androbustness in complex dataset classification. As a theoretical result,the dynamic behavior of acceleration dataset is improved and the impact of parameters uncertainties is reduced. In numerical validations,several images-induced datasets are employed,and the results show that the desired image edges are clarified and texture details are effectively obtained. Based on this paper,our future work will concentrate on further improving the accuracy of the accelerometer,the high-dimension dataset classification,and the 3D segmentation for spatial points and images.

APPENDIX A PROOF OF PROPOSITION 1For the distance function (16),and if $K\left( { \cdot ,\cdot }\right)$ is the Gaussian kernel,then with fixed $V$ and $\lambda$,the fuzzy membership degree ${u_{ik}}\left( {k = 1,2,...,n,i = 1,2,... ,c} \right)$,which minimizes the objective function $O\left( {U,V,\lambda } \right)$ given in (17),under${u_{ik}} \in \left[ {0,1} \right],\forall a,k$ and$\sum\nolimits_{i = 1}^c {{u_{ik}}} = 1,\forall k$,satisfies the local optimum expressed by

${u_{ik}} = \sum\limits_{h = 1}^c {{{\left( {\frac{{\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{hj}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}{{\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}} \right)}^{\frac{1}{{m - 1}}}}} .$

Proof. If $K\left( { \cdot ,\cdot } \right)$ is theGaussian kernel,then ${\left\| {\phi \left( {{x_{kj}}} \right) - \phi \left( {{v_{ij}}} \right)} \right\|^2} = 2\left( {1 - K\left( {{x_{kj}},{v_{ij}}} \right)} \right)$. Referring to [32],the Lagrange equation corresponding with the condition $\sum\nolimits_{i = 1}^c {{u_{ik}}} = 1$ is accordingly constructed by

| \[L\left( {U,\zeta } \right) = {O_i}\left( {U,V,\lambda } \right) + \zeta \left( {\sum\limits_{i = 1}^c {{u_{ik}}} - 1} \right).\] | (A.1) |

The necessary condition,according to the Gaussian kernel and the localoptimum,$\frac{{\partial L}}{{\partial {u_{ik}}}} = 0$ must be set up. So,

| \[\begin{array}{*{20}{l}} {\frac{{\partial L}}{{\partial {u_{ik}}}} = \frac{\partial }{{\partial {u_{ik}}}}\left( {{O_i}\left( {U,V,\lambda } \right) + \zeta \left( {\sum\limits_{i = 1}^c {{u_{ik}}} - 1} \right)} \right)} \\ { = \frac{\partial }{{\partial {u_{ik}}}}\left( {\sum\limits_{i = 1}^c {{{\left( {{u_{ik}}} \right)}^m}\sum\limits_{j = 1}^t {{\lambda _j}} } } \right.} \\ { \times \left( {2\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} \right)} \\ { + \left. {\zeta \left( {\sum\limits_{i = 1}^c {{u_{ik}}} - 1} \right)} \right) = m{{\left( {{u_{ik}}} \right)}^{m - 1}}\sum\limits_{j = 1}^t {{\lambda _j}} } \\ { \times \left( {2\left( {1 - \exp \left( { - {\text{ }}\frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} \right)} \\ { + \zeta = 0.} \end{array}\] | (A.2) |

Then,

| \[{u_{ik}} = {\left( { - \frac{\zeta }{{2m\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}} \right)^{\frac{1}{{m - 1}}}}.\] | (A.3) |

Similarly,using ${u_{hk}},h = 1,2,... ,c$ replaces${u_{ik}}$,and the restricted condition is satisfied by

| \[\sum\limits_{h = 1}^c {{u_{hk}}} = 1 = \sum\limits_{h = 1}^c {{{\left( { - \frac{\zeta }{{2m\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - {\text{ }}\frac{{{{\left\| {{x_{kj}} - {v_{hj}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}} \right)}^{\frac{1}{{m - 1}}}}} .\] | (A.4) |

Therefore,

| \[{\left( { - \zeta } \right)^{\frac{1}{{m - 1}}}} = \sum\limits_{h = 1}^c {{{\left( {\frac{1}{{2m\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - {\text{ }}\frac{{{{\left\| {{x_{kj}} - {v_{hj}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}} \right)}^{\frac{{ - 1}}{{m - 1}}}}} .\] | (A.5) |

Next,the membership can be obtained according to (A.3) and (A.5)

| \[{u_{ik}} = \sum\limits_{h = 1}^c {{{\left( {\frac{{\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{hj}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}{{\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}} \right)}^{\frac{1}{{m - 1}}}}} .\] | (A.6) |

Finally,the sufficient condition is proved using the Hessian matrix. Set as $\varphi \left( U \right) = O\left( {U,V,\lambda } \right)$,considering (A.1),(A.2),(A.6),the Hessian matrix $H\left( {\varphi \left( U \right)} \right)$ is shown by (A.7)

| \[\begin{array}{*{20}{l}} {H\left( {\varphi \left( U \right)} \right) = {h_{jq,ik}} = \frac{\partial }{{\partial {u_{jq}}}}\left[ {\frac{{\partial \varphi \left( U \right)}}{{\partial {u_{ik}}}}} \right]} \\ { = \frac{\partial }{{\partial {u_{jq}}}}[m{{\left( {{u_{ik}}} \right)}^{m - 1}}} \\ { \times \sum\limits_{j = 1}^t {{\lambda _j}\left( {2\left( {1 - \exp \left( { - {\text{ }}\frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} \right)} + \zeta ]} \\ { = \left\{ {\begin{array}{*{20}{l}} {2m\left( {m - 1} \right){{\left( {{u_{ik}}} \right)}^{m - 2}}} \\ { \times \sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} ,} \\ {{\text{if}}j = i,q = k} \\ {0,{\text{ otherwise}}.} \end{array}} \right.} \end{array}\] | (A.7) |

Based on the matrix theory,the Hessian matrix $H\left( {\varphi\left( U \right)} \right)$ is a diagonal matrix. Due to $m > 1$and $0 \le {u_{ik}} \le 1$,$H\left( {\varphi \left( U \right)}\right)$ is a symmetric positive definite matrix.

APPENDIX B PROOF OF PROPOSITION 2For the distance function (16),and $K\left( { \cdot ,\cdot }\right)$ as the Gaussian kernel,and with fixed $U$ and $\lambda$,then the cluster prototype ${v_{ij}}\left( {i = 1,2,...,c,j = 1,2 ... ,t} \right)$,which minimizes the objective function $O\left( {U,V,\lambda } \right)$ given in (17),is optimized by the following expression:

${v_{ij}} = \frac{{\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} \sum\limits_{j = 1}^t {{\lambda _j}\left( {\exp \left( { - {\text{ }}\frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} {x_{kj}}}}{{\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} \sum\limits_{j = 1}^t {{\lambda _j}\left( {\exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}.$

Proof. The Lagrange equation is constructed depending on the objective function

| \[L\left( {V,\zeta } \right) = O\left( {U,V,\lambda } \right) + \zeta \left( {\sum\limits_{k = 1}^c {{u_{ik}}} - 1} \right).\] | (B.1) |

First,set up the local optimum.

| \[\begin{array}{*{20}{l}} {\frac{{\partial L}}{{\partial {v_{ij}}}} = \frac{\partial }{{\partial {v_{ij}}}}\left( {O\left( {U,V,\lambda } \right) + \zeta \left( {\sum\limits_{k = 1}^c {{u_{ik}}} - 1} \right)} \right)} \\ { = \frac{\partial }{{\partial {v_{ij}}}}\left( {\sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\sum\limits_{j = 1}^t {{\lambda _j}} } } } \right.} \\ { \times \left( {2\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} \right)} \\ {\left. { + \zeta \left( {\sum\limits_{k = 1}^c {{u_{ik}}} - 1} \right)} \right) = 2\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} \sum\limits_{j = 1}^t {{\lambda _j}\frac{\partial }{{\partial {v_{ij}}}}} } \\ { \times \left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right) = 2\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} } \\ { \times \sum\limits_{j = 1}^t {{\lambda _j}\left( {\frac{{\left( {{x_{kj}} - {v_{ij}}} \right)}}{{{\sigma ^2}}}\exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} = 0.} \end{array}\] | (B.2) |

The cluster prototype ${v_{ij}}$ is then evolved as

| \[{v_{ij}} = \frac{{\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} \sum\limits_{j = 1}^t {{\lambda _j}\left( {\exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} {x_{kj}}}}{{\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} \sum\limits_{j = 1}^t {{\lambda _j}\left( {\exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}.\] | (B.3) |

In order to prove the sufficient condition,the Hessian matrix is involved. Setting $\varphi \left( V \right) = O\left( {U,V,\lambda} \right)$,the Hessian matrix $H\left( {\varphi \left( V \right)}\right)$ corresponding to $\varphi \left( V \right)$ is shown referring to (B.1),(B.2) and (B.3)

| \[\begin{array}{*{20}{l}} {H\left( {\varphi \left( V \right)} \right) = {h_{jq,ik}} = \frac{\partial }{{\partial {v_{jq}}}}\left[ {\frac{{\partial \varphi \left( V \right)}}{{\partial {v_{ik}}}}} \right]} \\ { = \frac{\partial }{{\partial {v_{jq}}}}\left[ { - 2\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} } \right.} \\ { \times \left. {\sum\limits_{j = 1}^t {{\lambda _j}\left( {\frac{{\left( {{x_{kj}} - {v_{ij}}} \right)}}{{{\sigma ^2}}}\exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} } \right]} \\ { = \left\{ {\begin{array}{*{20}{l}} {\frac{2}{{{\sigma ^4}}}\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}} \sum\limits_{j = 1}^t {{\lambda _j}\left( {\left( {{x_{kj}} - {v_{ij}}} \right)\left( {{v_{ij}} - 1} \right) + {\sigma ^2}} \right)} } \\ { \times \left( {\exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right),{\text{if}}j = i,q = k} \\ {0,{\text{ otherwise}}.} \end{array}} \right.} \end{array}\] | (B.4) |

It is obtained that $H\left( {\varphi \left( V \right)} \right)$is a diagonal matrix,from (B.4),and also a symmetric positivedefinite matrix.

APPENDIX C PROOF OF PROPOSITION 3.If the adaptive distance function is given by (16),the vector of weight $\lambda = \left( {{\lambda _1},{\lambda _2},...,{\lambda _t}} \right)$,which minimizes the objective function(17) under ${\lambda _j} > 0,\forall j$ and $\prod\nolimits_{j = 1}^t {{\lambda _j}} = 1,{\lambda _j}\left( {j = 1,2,...,t} \right)$ is updated according to the following expression:

$\begin{array}{*{20}{l}} {{\lambda _j}} \\ {\quad = \frac{{{{\left( {\prod\limits_{h = 1}^t {\left( {\sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{hj}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} } } \right)} } \right)}^{\frac{1}{t}}}}}{{\sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} } }}.} \end{array}$

Proof. The minimizing objective function with respect to$\prod\nolimits_{j = 1}^t {{\lambda _j}} = 1$ is rewritten as

| \[\begin{array}{*{20}{l}} {O\left( {U,V,\lambda } \right) = O\left( {{\lambda _1},{\lambda _2},...,{\lambda _c}} \right) = \sum\limits_{i = 1}^c {{O_i}\left( {{\lambda _i}} \right)} } \\ { = 2\sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\sum\limits_{j = 1}^t {{\lambda _j}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} } } ,} \\ {{\lambda _i} = \left( {{\lambda _{i1}},{\lambda _{i2}},...,{\lambda _{ij}},...,{\lambda _{it}}} \right),\quad j = 1,2,...,t.} \end{array}\] | (C.1) |

Let ${f_i}\left( {{\lambda _{i1}},{\lambda _{i2}},... ,{\lambda_{it}}} \right) = \prod\nolimits_{j = 1}^t {{\lambda _{ij}}} - 1 ={\lambda _{i1}} \times {\lambda _{i2}} \times ... \times({\lambda _{it}}) - 1$. The extremum of ${O_i}\left( {{\lambda _i}}\right)$ with the restriction condition ${f_i}\left( {{\lambda_{i1}},{\lambda _{i2}},... ,{\lambda _{it}}} \right) = 0$applies and the Largrange multipliers to be solved as

| \[\nabla {O_i}\left( {{\lambda _{i1}},{\lambda _{i2}},...,{\lambda _{it}}} \right) = \nabla {f_i}\left( {{\lambda _{i1}},{\lambda _{i2}},...,{\lambda _{it}}} \right).\] | (C.2) |

Due to $\nabla {O_i}\left( {{\lambda _{i1}},{\lambda _{i2}},... ,{\lambda _{it}}} \right) = \left( {{O_{i1}},{O_{i2}},... ,{O_{it}}} \right)$ and $\nabla {f_i}\left( {{\lambda_{i1}},{\lambda _{i2}},... ,{\lambda _{it}}} \right) = \left({\frac{1}{{{\lambda _{i1}}}},\frac{1} {{{\lambda _{i2}}}},...,\frac{1}{{{\lambda _{it}}}}} \right)$,(C.2) is then equal to

| \[\begin{array}{*{20}{l}} {\left( {{O_{i1}},{O_{i2}},...,{O_{it}}} \right) = \mu \left( {\frac{1}{{{\lambda _{i1}}}},\frac{1}{{{\lambda _{i2}}}},...,\frac{1}{{{\lambda _{it}}}}} \right),} \\ {{O_{ij}} = \mu \frac{1}{{{\lambda _{ij}}}},{\lambda _{ij}} = \frac{\mu }{{{O_{ij}}}}.} \end{array}\] | (C.3) |

Considering the condition $\prod\nolimits_{j = 1}^t {{\lambda _j}}= 1$,there are the equations given by (C.4)

| \[\begin{array}{*{20}{l}} {\prod\limits_{h = 1}^t {{\lambda _{ih}}} = \prod\limits_{h = 1}^t {\frac{\mu }{{{O_{ih}}}}} = 1,} \\ {\frac{{{\mu ^t}}}{{\prod\limits_{h = 1}^t {{O_{ih}}} }} = 1,} \\ {\mu = {{\left( {\prod\limits_{h = 1}^t {{O_{ih}}} } \right)}^{\frac{1}{t}}}.} \end{array}\] | (C.4) |

Next,an extremum of ${O_j}$ is solved when

| \[{\lambda _{ij}} = \frac{{{{\left( {\prod\limits_{h = 1}^t {{O_{ih}}} } \right)}^{\frac{1}{t}}}}}{{{O_{ij}}}} = \frac{{{{\left( {\prod\limits_{h = 1}^t {\left( {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{hj}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} } \right)} } \right)}^{\frac{1}{t}}}}}{{\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} }}.\] | (C.5) |

The extremum of ${O_i}$ is

| \[\begin{array}{*{20}{l}} {{O_i}\left( {{\lambda _{i1}},{\lambda _{i2}},...,{\lambda _{it}}} \right) = \sum\limits_{j = 1}^t {{\lambda _{ij}}} {O_{ij}}} \\ { = t{{\left( {{O_{i1}} \times {O_{i2}} \times \cdots \times {O_{it}}} \right)}^{\frac{1}{t}}}.} \end{array}\] | (C.6) |

if $\max \left( {{\lambda _{i1}},{\lambda _{i2}},... ,{\lambda_{it}}} \right) = \left( {1,1,... ,1} \right)$,(C.6) isreplaced by (C.7)

| \[\begin{array}{*{20}{l}} {{O_i}\left( {{\lambda _{i1}},{\lambda _{i2}},...,{\lambda _{it}}} \right) < \sum\limits_{j = 1}^t {{O_{ij}}} ,} \\ {t{{\left( {{O_{i1}} \times {O_{i2}} \times \cdots \times {O_{it}}} \right)}^{\frac{1}{t}}} < {O_{i1}} + {O_{i2}} + \cdots + {O_{it}}.} \end{array}\] | (C.7) |

Therefore,the extremum is the minimizing objective function. The vector of weight ${\lambda _j}$ is updated by the following expression

| \[{\lambda _j} = \frac{{{{\left( {\prod\limits_{h = 1}^t {\left( {\sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{hj}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} } } \right)} } \right)}^{\frac{1}{t}}}}}{{\sum\limits_{i = 1}^c {\sum\limits_{k = 1}^n {{{\left( {{u_{ik}}} \right)}^m}\left( {1 - \exp \left( { - \frac{{{{\left\| {{x_{kj}} - {v_{ij}}} \right\|}^2}}}{{2{\sigma ^2}}}} \right)} \right)} } }}.\] | (C.8) |

The authors would like to thank SENODIA Technologies In corporationfor providing the MEMS accelerometer sensor.

| [1] | Dunn J. A graph theoretic analysis of pattern classification via Tamura's fuzzy relation. IEEE Transactions on Systems, Man, and Cybernetics, 1974, SMC-4(3):310-313 |

| [2] | Bezdek J C. Pattern Recognition with Fuzzy Objective Function Algorithms. New York:Springer, 1981. |

| [3] | Wu K L, Yang M S. Alternative c-means clustering algorithms. Pattern Recognition, 2002, 35(10):2267-2278 |

| [4] | Ahmed M N, Yamany S M, Mohamed N, Farag A A, Moriarty T. A modified fuzzy c-means algorithm for bias field estimation and segmentation of MRI data. IEEE Transactions on Medical Imaging, 2002, 21(3):193-199 |

| [5] | Chen S C, Zhang D Q. Robust image segmentation using FCM with spatial constraints based on new kernel-induced distance measure. IEEE Transactions on Systems, Man, and Cybernetics, Part B:Cybernetics, 2004, 34(4):1907-1916 |

| [6] | Szilagyi L, Benyo Z, Szilagyi S M, Adam H S. MR brain image segmentation using an enhanced fuzzy c-means algorithm. In:Proceedings of the 25th Annual International Conference on Engineering in Medicine and Biology Society. Cancun, Mexico:IEEE, 2003. 724-726 |

| [7] | Li C F, Liu L Z, Jiang W L. Objective function of semi-supervised fuzzy c-means clustering algorithm. In:Proceedings of the 6th IEEE International Conference on Industrial Informatics. Daejeon, Korea:IEEE, 2008. 737-742 |

| [8] | Huang S B, Cheng Y, Wan Q S, Liu G F, Shen L S. A hierarchical multi-relational clustering algorithm based on IDEF1x. Acta Automatica Sinica, 2014, 40(8):1740-1753(in Chinese) |

| [9] | Wang L, Gao X W, Wang W, Wang Q. Order production scheduling method based on subspace clustering mixed model and time-section ant colony algorithm. Acta Automatica Sinica, 2014, 40(9):1991-1997(in Chinese) |

| [10] | Ferreira M R, De Carvalho F D A T. Kernel fuzzy c-means with automatic variable weighting. Fuzzy Sets and Systems, 2014, 237:1-46 |

| [11] | Krinidis S, Chatzis V. A robust fuzzy local information c-means clustering algorithm. IEEE Transactions on Image Processing, 2010, 19(5):1328-1337 |

| [12] | Krinidis S, Krinidis M. Generalised fuzzy local information c-means clustering algorithm. Electronics Letters, 2012, 48(23):1468-1470 |

| [13] | Gong M G, Liang Y, Shi J, Ma W P, Ma J J. Fuzzy c-means clustering with local information and kernel metric for image segmentation. IEEE Transactions on Image Processing, 2013, 22(2):573-584 |

| [14] | Chiranjeevi P, Sengupta S. Detection of moving objects using multichannel kernel fuzzy correlogram based background subtraction. IEEE Transactions on Cybernetics, 2014, 44(6):870-881 |

| [15] | Alipour S, Shanbehzadeh J. Fast automatic medical image segmentation based on spatial kernel fuzzy c-means on level set method. Machine Vision and Applications, 2014, 25(6):1469-1488 |

| [16] | Lu C H, Xiao S Q, Gu X F. Improving fuzzy c-means clustering algorithm based on a density-induced distance measure. The Journal of Engineering, 2014, 1(1):1-3 |

| [17] | Qiu C Y, Xiao J, Han L, Naveed Iqbal M. Enhanced interval type-2 fuzzy c-means algorithm with improved initial center. Pattern Recognition Letters, 2014, 38:86-92 |

| [18] | Candés E J, Li X D, Ma Y, Wright J. Robust principal component analysis. Journal of the ACM (JACM), 2011, 58(3):Article No. 11 |

| [19] | Yang M S, Lai C Y, Lin C Y. A robust EM clustering algorithm for Gaussian mixture models. Pattern Recognition, 2012, 45(11):3950-3961 |

| [20] | Elhamifar E, Vidal R. Robust classification using structured sparse representation. In:Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Providence, RI, USA:IEEE, 2011. 1873-1879 |

| [21] | Xie X M, Wang C M, Zhang A J, Meng X F. A robust level set method based on local statistical information for noisy image segmentation. Optik-International Journal for Light and Electron Optics, 2014, 125(9):2199-2204 |

| [22] | Filippone M, Camastra F, Masulli F, Rovetta S. A survey of kernel and spectral methods for clustering. Pattern Recognition, 2008, 41(1):176-190 |

| [23] | Zhang J P, Chen F C, Li S M, Liu L X. Data stream clustering algorithm based on density and affinity propagation techniques. Acta Automatica Sinica, 2014, 40(2):277-288(in Chinese) |

| [24] | Kantardzic M. Data Mining:Concepts, Models, Methods, and Algorithms. Second Edition. New York:John Wiley & Sons, 2011. 249-259 |

| [25] | Anderson M J, Ellingsen K E, McArdle B H. Multivariate dispersion as a measure of beta diversity. Ecology Letters, 2006, 9(6):683-693 |

| [26] | Anderson M J, Santana-Garcon J. Measures of precision for dissimilarity-based multivariate analysis of ecological communities. Ecology Letters, 2015, 18(1):66-73 |

| [27] | Cormen T H, Leiserson C E, Rivest R L, Stein C. Introduction to Algorithms. Cambridge:MIT Press, 2001. 1-7 |

| [28] | Qian J B, Dong Y S. A clustering algorithm based on broad first searching neighbors. Journal of Southeast University (Natural Science Edition), 2004, 34(1):109-112(in Chinese) |

| [29] | Bezdek J C, Hathaway R J, Sabin M J, Tucker W T. Convergence theory for fuzzy c-means:counterexamples and repairs. IEEE Transactions on Systems, Man, and Cybernetics, 1987, 17(5):873-877 |

| [30] | Shahriari H, Ahmadi O. Robust estimation of the mean vector for high-dimensional data set using robust clustering. Journal of Applied Statistics, 2015, 42(6):1183-1205 |

| [31] | Kinoshita N, Endo Y. EM-based clustering algorithm for uncertain data. Knowledge and Systems Engineering, 2014, 245:69-81 |

| [32] | Gao J, Wang S T. Fuzzy clustering algorithm with ranking features and identifying noise simultaneously. Acta Automatica Sinica, 2009, 35(2):145-153(in Chinese) |

2015, Vol.2

2015, Vol.2