2. Department of Electronic Engineering, Tsinghua University, Beijing 100084, China;

3. College of Computer Science and Technology, Beijing University of Technology, Beijing 100124, China

POOR visibility is a major problem for most outdoor vision applications. Bad weather, such as fog and haze, can significantly degrade the visibility of a scene, and the resulting low visibility inevitably hampers visual recognition and comprehension. The goal of defogging methods is to remove the effects of fog and recover the details and colors of the scene from a foggy image.

Most traditional image enhancement methods, such as histogram equalization, Retinex and the wavelet transform, usually cannot obtain satisfactory defogging results. These methods mainly focus on enhancing low-brightness and low-contrast features in digital images; they are simple and efficient, and can be applied to most real-time scenes. However, they do not consider why the image is degraded by fog and therefore cannot compensate for the degradation effectively. As a result, these methods are limited in the defogging task and may even introduce halo artifacts or distort colors.

Alternatively, methods that improve image color and contrast by modeling the physics of image degradation [1] have become a research hotspot in computer vision and image processing. The atmospheric scattering model is usually used to describe the formation of a foggy or hazy image. Methods based on this physical model are reliable and highly targeted, and they can produce higher-quality defogging results. Some of them require multiple images of the same scene taken under different atmospheric conditions [2, 3], or a near-infrared image of the scene. Unfortunately, such special conditions are usually difficult to satisfy in practice, so these methods are restricted when not enough additional information is available.

Recently, several fog removal methods based on a single image have made significant progress. Tan's work [4] assumes that clear-day images have higher contrast than the input foggy image, and removes the haze by maximizing the local contrast of the restored image. This method generates compelling images with enhanced contrast, but it may also result in physically invalid, excessive haze removal. Fattal [5] assumes that the image albedo is a constant vector in a local region and that the transmission and surface shading are locally statistically uncorrelated. Fattal's approach can produce impressive results, but it cannot handle heavily hazy images well and may fail when the assumption is not satisfied. He et al. [6] propose the dark channel prior to estimate the transmission map and use soft matting to refine it. The results are visually compelling, but both the time and space complexity of this method can be quite high. They further improve their method by refining the transmission map with the guided filter [7]. Tarel et al. [8] use median filtering to estimate the atmospheric veil. This method is faster than the previous approaches, but its detail restoration is not ideal. Yu et al. [9] use the bilateral filter to estimate the atmospheric veil, improving the defogging performance in some cases. An approach based on a factorial MRF is proposed in [10] to handle the two fields of unknown variables, scene albedo and depth, but it has high complexity. Based on a planar road constraint, [11] introduces another efficient MRF approach that improves the restoration of the road area, assuming an approximately flat road.

In this paper, we introduce a novel method based on local extrema to remove fog from a single image. We use the local extrema method [12] to estimate the atmospheric veil and adopt a multi-scale tone manipulation algorithm to regulate the expression of details. By fusing multi-scale information, we improve the visual quality of the restored image. The proposed method is physically valid and can significantly increase the visibility of the scene.

The article is structured as follows. Section Ⅱ describes the atmospheric scattering model and the atmospheric veil. Section Ⅲ presents a detailed description of the proposed method. In Section Ⅳ, the proposed method is compared with current defogging methods to illustrate its advantages. Finally, concluding remarks are made in Section Ⅴ.

Ⅱ. BACKGROUND

The attenuation of luminance through the atmosphere was studied by Nayar and Narasimhan [1], who derived a foggy image degradation model called the atmospheric scattering model. This model relates the apparent luminance $I(x,y)$ of an object located at distance $d(x,y)$ to the luminance $R(x,y)$ measured close to this object:

| $\begin{align} I\left( {x,y} \right) = R\left( {x,y} \right){{\rm e}^{ - \beta d\left( {x,y} \right)}} + A\left( {1 - {{\rm e}^{ - \beta d\left( {x,y} \right)}}} \right), \end{align}$ | (1) |

where $A$ is the skylight and $\beta$ is the atmospheric scattering coefficient. In the atmospheric scattering model, calculating the scene depth and the atmospheric scattering coefficient generally requires additional information, such as the vanishing points of the infinite plane. The vanishing points are located either by subjective judgment or by image processing algorithms (e.g., the Hough transform or the curvelet transform). In many cases, vanishing points are difficult to estimate accurately, which may lead to poor visibility restoration. We therefore introduce the atmospheric veil to avoid solving for $d$ and $\beta$. Writing the transmission as $t(x,y) = {\rm e}^{-\beta d(x,y)}$, the atmospheric veil can be expressed as

| $\begin{align} V(x,y) = A(1 - t(x,y)), \end{align}$ | (2) |
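To make the roles of (1) and (2) concrete, the following Python/NumPy sketch synthesizes a foggy image from a clear image and a depth map, using the transmission $t = {\rm e}^{-\beta d}$ (the exponential term in (1)), and then inverts the model when the veil is known. The function names and test data are illustrative assumptions, not part of the proposed method.

```python
import numpy as np


def synthesize_fog(R, d, A, beta):
    """Apply the atmospheric scattering model (1) to a clear image R.

    R: clear-scene luminance, (H, W) or (H, W, 3), values in [0, 1]
    d: scene depth, (H, W); A: skylight (scalar or length-3); beta: scattering coefficient
    Returns the foggy image I and the atmospheric veil V of Eq. (2).
    """
    t = np.exp(-beta * d)            # transmission t(x, y) = exp(-beta * d(x, y))
    if R.ndim == 3:
        t = t[..., None]             # broadcast over the color channels
    V = A * (1.0 - t)                # atmospheric veil, Eq. (2)
    I = R * t + V                    # Eq. (1) written as R * t + V
    return I, V


def invert_with_veil(I, V, A):
    """Recover R from I once V is known, using t = 1 - V / A."""
    return (I - V) / (1.0 - V / A)
```

Since $t = 1 - V/A$, knowing the veil (and the skylight) is enough to undo the fog, which is why the method concentrates on estimating $V$.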

In this paper, we introduce a novel defogging technique based on the local extrema method to remove fog or haze from a single image. Our method can be decomposed into three steps: 1) estimating the skylight and correcting the color through white balance; 2) estimating the atmospheric veil based on the local extrema method and recovering the image visibility by inverting the atmospheric scattering model; 3) controlling the visibility with a multi-scale tone manipulation algorithm.

A. Skylight Estimation and White Balance

Skylight $A$ is typically assumed to be a global constant. We estimate $A$ with the method based on the dark channel prior [6]. The dark channel of the input image can be defined as

| $\begin{align} {I_{dark}}(x,y) = \mathop {\min }\limits_{(x',y') \in \psi (x,y)} \left(\mathop {\min }\limits_{c \in \{ R,G,B\} } {I_c}(x',y')\right), \end{align}$ | (3) |

where $\psi(x,y)$ is a local patch centered at $(x,y)$.
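A minimal NumPy sketch of the dark channel (3) and of a per-channel skylight estimate is given below. The 15 × 15 patch follows the choice reported by He et al. [6]; the rule for selecting skylight pixels (averaging the input colors over the brightest 0.1% of dark-channel pixels, which yields a per-channel value $A^c_{\rm mean}$) is an assumption, since the text does not spell it out.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(I, patch=15):
    """Eq. (3): minimum over color channels, then minimum over a patch x patch
    window psi centred on each pixel (borders handled by edge replication)."""
    if patch % 2 == 0:
        raise ValueError("patch size must be odd")
    min_rgb = I.min(axis=2)
    r = patch // 2
    padded = np.pad(min_rgb, r, mode="edge")
    windows = sliding_window_view(padded, (patch, patch))   # (H, W, patch, patch)
    return windows.min(axis=(-2, -1))


def estimate_skylight(I, patch=15, top_fraction=0.001):
    """Assumed selection rule: average the input colors over the brightest
    top_fraction of dark-channel pixels, giving one value per channel."""
    dark = dark_channel(I, patch)
    n = max(1, int(round(top_fraction * dark.size)))
    idx = np.argsort(dark.ravel())[-n:]             # brightest dark-channel pixels
    return I.reshape(-1, 3)[idx].mean(axis=0)       # shape (3,)
```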

The color of a foggy image sometimes suffers from illumination variations. For example, foggy days are often accompanied by cloudy weather, which tends to give the scene a color cast. Therefore, to improve the skylight estimation, we correct the fog color to pure white. The calculation can be performed as

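| $\begin{align} A^c = \frac{A_{\rm mean}^c}{\mathop {\max }\limits_{c' \in \{R,G,B\}} A_{\rm mean}^{c'}}, \quad c \in \{R,G,B\}, \end{align}$ | (4) |

| $\begin{align} I'_c(x,y) = \frac {I_c(x,y)}{A^c}, \end{align}$ | (5) |

where $A_{\rm mean}^c$ is the skylight estimate of channel $c$ obtained from the dark channel and $I'$ is the white-balanced image.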
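The white-balance step (4)-(5) reduces to a per-channel division. In this sketch the result is clipped to [0, 1], which is our assumption rather than a step stated in the text.

```python
import numpy as np


def white_balance(I, A_mean):
    """Eqs. (4)-(5): scale the per-channel skylight so its largest component is 1,
    then divide each channel of I by it, so that the fog color becomes neutral."""
    A_mean = np.asarray(A_mean, dtype=float)
    A = A_mean / A_mean.max()            # Eq. (4): one value per channel
    I_wb = I / A                         # Eq. (5): broadcast over H x W
    return np.clip(I_wb, 0.0, 1.0), A    # clipping to [0, 1] is an added assumption
```

A pixel whose color equals the skylight estimate leaves this step with equal R, G and B values, i.e., the fog becomes achromatic.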

From (1) and (2), we can see that estimating the atmospheric veil is the key step in calculating the restored image $R$. Owing to its physical properties, the atmospheric veil is subject to two constraints: i) $0 \le V\left( {x,y} \right) \le 1$, and ii) $V(x,y)$ is not higher than the minimal component of $I'(x,y)$. We thus take the minimum over the three color channels and acquire a rough estimate of the atmospheric veil:

| $\begin{align} \tilde V\left( {x,y} \right) = \mathop {\min }\limits_{c \in \{ R,G,B\} } (I{'_c}(x,y)), \end{align}$ | (6) |
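Eq. (6) is a per-pixel minimum over the three channels, which satisfies constraint ii) by construction; clipping to [0, 1] makes constraint i) explicit for white-balanced values that may exceed 1. A short sketch:

```python
import numpy as np


def rough_veil(I_wb):
    """Eq. (6): per-pixel minimum over the R, G, B components of the
    white-balanced image, clipped so that 0 <= V <= 1 (constraint i)."""
    return np.clip(I_wb.min(axis=2), 0.0, 1.0)
```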

The atmospheric veil depends solely on the depth of the objects and is independent of the scene albedo [10]. The scene depth changes smoothly across small neighboring areas except at edges. Therefore, the refinement of the atmospheric veil can be treated as a smoothing problem. In this paper, to generate an accurate estimate of the atmospheric veil, we apply an edge-preserving smoothing approach based on local extrema for the refinement.

Our local-extrema-based method is inspired by the technique of Subr et al. [12], which uses edge-aware interpolation to compute envelopes; a smoothed mean layer is then obtained by averaging the envelopes. This technique can extract fine-scale detail regardless of its contrast [13]. However, a single mean layer is not sufficient to approximate the atmospheric veil well, and the decomposition is computed iteratively, which is time-consuming.

Our non-iterative method consists of three steps: 1) identification of the local extrema of $\tilde V$; 2) inference of the extremal envelopes; 3) visibility enhancement of the result with a multi-scale tone manipulation algorithm.

First, we locate the extrema in $\tilde V$. Pixel $p$ is considered a local maximum if at most $k-1$ elements in the $k \times k$ neighborhood around $p$ are greater than the value at pixel $p$. Local minima are located in the same way, counting the elements smaller than the value at $p$. Image details whose extrema are detected with a $k \times k$ kernel have wavelengths of at least $k/2$ pixels. We choose $k=5$ as the size of the extrema-location kernel in this paper.
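The extremum test above can be sketched in NumPy as follows. Edge replication at the image border is an assumption; note also that under this rule pixels in flat regions count as both maxima and minima.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_extrema(V, k=5):
    """Return boolean masks (maxima, minima) for the 2-D array V.

    p is a local maximum if at most k - 1 of the k*k neighbourhood values are
    greater than V(p), and a local minimum if at most k - 1 are smaller.
    """
    r = k // 2
    padded = np.pad(V, r, mode="edge")              # border handling: assumption
    win = sliding_window_view(padded, (k, k))       # shape (H, W, k, k)
    center = V[..., None, None]
    n_greater = (win > center).sum(axis=(-2, -1))
    n_smaller = (win < center).sum(axis=(-2, -1))
    return n_greater <= k - 1, n_smaller <= k - 1
```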

Next, we interpolate the local minima and maxima to compute the minimal and maximal extremal envelopes, respectively. Let $S$ be the set of local extremum pixels; we compute an extremal envelope $E$ using the interpolation technique proposed by Levin et al. [12, 13, 14] for image colorization. It assumes that $E$ is locally a linear function of $\tilde V$, and seeks an interpolant $E$ such that neighboring pixels $E(r)$ and $E(s)$ have similar values if $\tilde V(r)$ and $\tilde V(s)$ are similar. The extremal envelope is computed by minimizing the following energy function subject to a constraint on the extrema:

| $\begin{align} {\sum\limits_r {\left( {E(r) - \sum\limits_{s \in N(r)} {{w_{rs}}E(s)} } \right)} ^2}, \end{align}$ | (7) |

| $\begin{align} \forall p \in S,\qquad E(p) = \tilde V(p). \end{align}$ | (8) |

Equation (7) minimizes the difference between $E(r)$ at pixel $r$ and the weighted average of $E(s)$ over the neighbors $N(r)$ of $r$, while (8) keeps $E$ equal to $\tilde V$ at the extrema. The weights are

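| $\begin{align} {w_{rs}} \propto \exp \left( - \frac{(\tilde V(r) - \tilde V(s))^2}{2\sigma _r^2} \right), \end{align}$ | (9) |

where $\sigma_r$ controls how strongly intensity differences reduce the weights. Following Levin et al. [14], $\sigma_r^2$ can be taken as the variance of $\tilde V$ in a window around $r$, and the weights are normalized so that $\sum_{s \in N(r)} w_{rs} = 1$.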
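The sketch below assembles and solves the sparse linear system for one envelope with SciPy, in the style of Levin et al.'s colorization solver: each unconstrained pixel is required to equal the weighted average of its 3 × 3 neighbors, and each extremum is fixed by (8). The 3 × 3 neighborhood, the variance-based $\sigma_r^2$ and the small floor `eps` are assumptions; the Python loops favor clarity over speed.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve


def extremal_envelope(V, S, eps=1e-6):
    """Interpolate an envelope E of the 2-D array V from its values on the
    boolean extrema mask S (Eqs. (7)-(9)).

    Rows of the linear system:
      p in S      ->  E(p) = V(p)                  (constraint (8))
      r not in S  ->  E(r) - sum_s w_rs E(s) = 0   (zero residual in (7))
    """
    if not S.any():
        raise ValueError("S must contain at least one extremum")
    H, W = V.shape
    idx = np.arange(H * W).reshape(H, W)
    rows, cols, vals = [], [], []
    for y in range(H):
        for x in range(W):
            r = idx[y, x]
            rows.append(r)
            cols.append(r)
            vals.append(1.0)
            if S[y, x]:
                continue                            # fixed pixel: identity row
            y0, y1 = max(0, y - 1), min(H, y + 2)
            x0, x1 = max(0, x - 1), min(W, x + 2)
            sigma2 = max(V[y0:y1, x0:x1].var(), eps)  # local variance (assumption)
            nbrs = [(yy, xx) for yy in range(y0, y1) for xx in range(x0, x1)
                    if (yy, xx) != (y, x)]
            w = np.array([np.exp(-(V[y, x] - V[yy, xx]) ** 2 / (2.0 * sigma2))
                          for yy, xx in nbrs])      # Eq. (9)
            w /= w.sum()                            # weights sum to one
            for (yy, xx), wi in zip(nbrs, w):
                rows.append(r)
                cols.append(idx[yy, xx])
                vals.append(-wi)
    A = sp.csr_matrix((vals, (rows, cols)), shape=(H * W, H * W))
    b = np.where(S.ravel(), V.ravel(), 0.0)
    return spsolve(A, b).reshape(H, W)
```

Calling it once with the maxima mask and once with the minima mask gives the maximal and minimal envelopes. Because every unconstrained value is a convex combination of its neighbors, the envelope stays within the range of the constrained values.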

After obtaining the minimal envelope, the maximal envelope and the mean layer computed from these two envelopes, we obtain the three-scale atmospheric veil $V_i$, $i=1,2,3$. With the skylight $A$ calculated in Section Ⅲ-A, we can directly recover the scene under ideal weather conditions:

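| $\begin{align} {R_i}(x,y) = \frac{I'(x,y) - q{V_i}(x,y)}{1 - {V_i}(x,y)}\times A, \quad i = 1,2,3, \end{align}$ | (10) |

where the factor $q$ scales the atmospheric veil and thus controls the strength of fog removal.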
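A sketch of the three veils and of the recovery (10), assuming the input is the white-balanced image $I'$ from (5) (on whose normalized scale $V$ is estimated) and that the skylight is given per channel. The default $q$ and the cap `v_max` on the veil (to avoid dividing by values near zero in dense fog) are illustrative assumptions.

```python
import numpy as np


def three_scale_veils(E_min, E_max):
    """V_1: minimal envelope, V_2: maximal envelope, V_3: mean layer."""
    return E_min, E_max, 0.5 * (E_min + E_max)


def recover(I_wb, V, A, q=0.95, v_max=0.95):
    """Eq. (10) on the white-balanced image I' (H, W, 3) with veil V (H, W)
    and per-channel skylight A; q and v_max defaults are assumptions."""
    V = np.minimum(V, v_max)[..., None]      # cap the veil, broadcast over channels
    R = (I_wb - q * V) / (1.0 - V)
    return np.clip(R * np.asarray(A, dtype=float), 0.0, 1.0)
```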

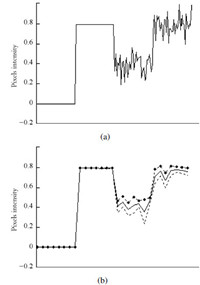

Through observation and experimentation, we find that not all three scales are equally suitable for refining the atmospheric veil. Fig. 1 shows that the smoothing operations at all three scales are effective in preserving high-gradient edges, so the smoothed results contain few halo artifacts along depth discontinuities. The scales differ in how they preserve detail. The maximal envelope extracts detail more accurately in bright regions than in dark regions. The minimal envelope can effectively smooth detail even with large amplitude variations; in particular, it preserves the significant edges in dark regions better than the other two scales. The mean layer is a compromise between the two envelopes: it strictly preserves edges without blurring detail. The atmospheric veil is locally smooth except at edges and has higher intensity in regions with denser haze. Therefore, the minimal envelope is the most suitable for atmospheric veil refinement, while the maximal envelope and the mean layer can be used to highlight the details of the input image.


Fig. 1 Image smoothing effect at three scales. (a) The input image signal. (b) The smoothed results given by the minimal envelope (dashed line), the maximal envelope (dot-dashed line) and the mean layer (continuous curve) computed from these two envelopes.

Given the three-scale restored results, we adopt the multi-scale tone manipulation algorithm of Farbman et al. [15] to improve the visual effect. Following the above analysis, we manipulate the tone and contrast of details at three scales, using the restored images ${R_1}$, ${R_2}$ and ${R_3}$ obtained from the minimal envelope, the maximal envelope and the mean layer, respectively. We first construct a three-level decomposition of the CIELAB lightness channel, consisting of a coarse base level $b$ taken from ${R_1}$ and two detail levels ${d^1} = {R_1} - {R_2}$ and ${d^2} = {R_2} - {R_3}$. The result of the manipulation $R$ at each pixel $p$ is then given by

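| $\begin{align} {R_p} = \mu + C({\delta _0},\eta {b_p} - \mu ) + C({\delta _1},d_p^1) + C({\delta _2},d_p^2), \end{align}$ | (11) |

where $C(\delta, x)$ is a sigmoid curve, $\delta_0$, $\delta_1$ and $\delta_2$ control the contrast at the base and detail levels, $\eta$ adjusts the exposure of the base level, and $\mu$ is the mean of the lightness range [15].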
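Eq. (11) can be sketched as below on lightness channels scaled to [0, 1] (for example, the L channel of `skimage.color.rgb2lab` divided by 100). The text does not give the exact shift and normalization of the sigmoid $C$; the centered form $2/(1+{\rm e}^{-\delta x})-1$ and the final clipping are assumptions.

```python
import numpy as np


def tone_curve(delta, x):
    """Sigmoid curve C(delta, x) of Eq. (11), centred so that C(delta, 0) = 0.
    The form 2 / (1 + exp(-delta * x)) - 1 is an assumed normalisation."""
    return 2.0 / (1.0 + np.exp(-delta * x)) - 1.0


def tone_manipulate(L1, L2, L3, mu=0.5, eta=1.0, deltas=(1.0, 1.0, 1.0)):
    """Eq. (11) on the lightness of R_1, R_2, R_3 (arrays scaled to [0, 1])."""
    b = L1                    # coarse base level from R_1
    d1 = L1 - L2              # detail level d^1 = R_1 - R_2
    d2 = L2 - L3              # detail level d^2 = R_2 - R_3
    delta0, delta1, delta2 = deltas
    out = (mu + tone_curve(delta0, eta * b - mu)
           + tone_curve(delta1, d1) + tone_curve(delta2, d2))
    return np.clip(out, 0.0, 1.0)       # clipping to the valid range is an assumption
```

Raising $\delta_1$ or $\delta_2$ steepens the curve applied to the corresponding detail level and therefore boosts detail at that scale, while $\eta$ shifts the base level's exposure.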

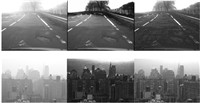

Fig. 2 shows a tone adjustment example obtained with the parameters ${\delta _0}={\delta _1}={\delta _2}=1$ and $\mu =1$. As can be seen, with the multi-scale tone manipulation algorithm, our method corrects brightness and enhances image details effectively. Further experiments show that three levels are sufficient for detail display; using more scales enables finer control but consumes more time.


Fig. 2 Tone adjustment. (a) The input foggy image; (b)-(d) the three-scale defogging results; (e) our result.

To verify the effectiveness of the defogging method, we evaluate the proposed method on real scenes and virtual scenes. The experiments are conducted on the MATLAB platform and mainly use low-resolution images for better detail comparison. First, for restoring real-world foggy images, our method is compared with two classical methods: Tarel's no-black-pixel constraint (NBPC) [8] and He's method based on the guided filter [7]. Tarel et al. introduced a complete inference of the atmospheric veil in [8]. He et al. first proposed the dark channel prior to initialize the transmission estimate in [6]. However, the matting Laplacian regularization might lead to an overall reduction of contrast in distant regions and had high time and space complexity; [7] is their improved method that is free from these problems. Both methods are known for their robustness and can produce visually pleasing restored results. The time consumption of [7, 8] is mainly associated with the estimation of the transmission map or the atmospheric veil, and both computations have linear complexity O(N) in the number of pixels N. From top to bottom, the dimensions of the images in Fig. 3 are 315 $\times$ 315, 460 $\times$ 380, 600 $\times$ 400 and 600 $\times$ 450, and the four groups of experimental images are denoted Fig. 3(a)-(d).


Fig. 3 Fog removal results on real-world images. From left to right: the input foggy images, Tarel's results, He's results, and our results.

As seen from Fig. 3, all three methods have strong defogging ability. Some objects that were barely visible in the foggy images appear clearly in the enhanced images. However, Tarel's results have lower contrast and are prone to saturation; He's method removes fog well, but its detail enhancement ability is limited. Compared with these two methods, our method restores the images better and achieves much greater color consistency. The experimental results also show that the proposed method has an obvious advantage in preserving detail and can avoid the halo effect.

To assess these methods objectively, we use the assessment method dedicated to visibility restoration proposed in [16]. This method computes two indicators, $e$ and $\bar r$, to assess the performance of a contrast restoration method by comparing two gray-level images: the input image and the restored image. The value of $e$ evaluates the ability of the method to restore edges that were not visible in the input image but are visible in the restored image. The value of $\bar r$ expresses the average visibility enhancement obtained by the restoration method.

Furthermore, a good image restoration method should make the restored image look natural and realistic; therefore, the degree of color retention, denoted $h$, is an important index for evaluating defogging methods. We evaluate the color retention ability by histogram comparison: the more similar the histogram shapes, the stronger the color retention ability. We use the color histogram intersection method to calculate the histogram similarity between the original image and the restored image, expressed as a percentage.
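One plausible implementation of $h$ with NumPy: the normalized 256-bin histograms of each RGB channel of two 8-bit images are intersected, and the three intersections are averaged and expressed as a percentage. The per-channel averaging and the bin count are assumptions.

```python
import numpy as np


def histogram_intersection(img_a, img_b, bins=256):
    """Color-retention index h in percent for two 8-bit RGB images:
    intersection of normalised per-channel histograms, averaged over R, G, B."""
    scores = []
    for c in range(3):
        ha, _ = np.histogram(img_a[..., c], bins=bins, range=(0, 256))
        hb, _ = np.histogram(img_b[..., c], bins=bins, range=(0, 256))
        ha = ha / ha.sum()                    # normalise to unit mass
        hb = hb / hb.sum()
        scores.append(np.minimum(ha, hb).sum())
    return 100.0 * float(np.mean(scores))
```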

We use the edge preserved index (EPI) $s$ to measure the edge-preserving ability; it is obtained as the ratio of the gradient sums over the pixels of the original image and the restored image.
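A possible sketch of $s$ for grayscale images. The gradient operator (`np.gradient` magnitudes) and the ratio direction (restored over original, so that $s = 1$ when the gradient content is unchanged) are assumptions, since the text does not fix them.

```python
import numpy as np


def edge_preserved_index(original, restored):
    """EPI s: sum of gradient magnitudes of the restored image divided by
    that of the original image (both 2-D grayscale arrays)."""
    def grad_sum(img):
        gy, gx = np.gradient(np.asarray(img, dtype=float))
        return np.hypot(gx, gy).sum()
    return grad_sum(restored) / grad_sum(original)
```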

These indicators $e$, $\bar r$, $h$ and $s$ are evaluated in the comparison experiments on the four images of Fig. 3; see Tables Ⅰ-Ⅳ.

Table Ⅰ COMPARISON OF THE $e$ VALUES

Table Ⅱ COMPARISON OF THE $\bar r$ VALUES

Table Ⅲ COMPARISON OF THE $h$ VALUES

Table Ⅳ COMPARISON OF THE $s$ VALUES

As can be observed from Tables Ⅰ and Ⅱ, our method achieves fog removal while restoring more visible edges. Table Ⅲ shows that the histograms of the images restored by our method are very close to those of the original images, which means that our method accurately retains the colors of the objects. From Table Ⅳ we can deduce that our method preserves edge characteristics effectively. In conclusion, our method outperforms the other methods on these four indicators: the color is more homogeneous and small details are better restored.

In the next experiments, we use the FRIDA2 database [17] to evaluate the proposed method under ideal conditions. FRIDA2 is available for research purposes, in particular to allow other researchers to rate their own visibility enhancement methods. This database provides 66 images of the same virtual scene with and without fog. The proposed method is compared with five other methods [17]: multi-scale retinex (MSR), contrast-limited adaptive histogram equalization (CLAHE), free space segmentation (FSS), the no-black-pixel constraint (NBPC) [8] and the dark channel prior (DCP) [6].

To assess these methods quantitatively, we compute the average absolute error ${E_{avg}}$ between the fog-free image and the enhanced image. Table Ⅴ shows the comparative results of the six defogging methods on the 66 virtual images with uniform fog, and the subjective comparison results for one typical example are presented in Fig. 4. Table Ⅴ shows that our result is the second closest to the clear-day image, inferior only to FSS. From the subjective observation of Fig. 4, we find that MSR and CLAHE are not suitable for foggy images, that vertical objects appear too dark with FSS, and that distant objects remain foggier with DCP or NBPC. By comparison, the details in our result appear much cleaner, and the visibility of distant objects is clearly improved. The experimental results also demonstrate that our method maintains the dominant color styles of the original image in the restored image.
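The average absolute error is a plain mean of pixel differences; a sketch, assuming both images are arrays on the same intensity scale:

```python
import numpy as np


def average_absolute_error(clear, enhanced):
    """E_avg: mean absolute difference between the fog-free image and the
    enhanced image over all pixels (and channels, if present)."""
    clear = np.asarray(clear, dtype=float)
    enhanced = np.asarray(enhanced, dtype=float)
    return float(np.mean(np.abs(clear - enhanced)))
```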


Fig. 4 Fog removal results on a virtual image.

Table Ⅴ COMPARISON OF THE $E_{avg}$ VALUES

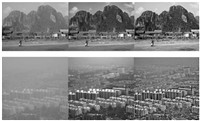

Finally, we compare our proposed method with two state-of-the-art methods by Caraffa et al. [11] and Li et al. [18]. As shown in Fig. 5, Caraffa's method removes fog robustly and its restored results show higher contrast in remote areas; however, many latent image details are lost in Caraffa's results. From Fig. 6, we can see that Li's method improves visibility and achieves a more natural recovery. Compared with these two methods, our method significantly improves image contrast and clarity; its main advantage is more accurate and effective detail restoration.


Fig. 5 Comparison with Caraffa's work. From left to right: the input foggy images, Caraffa's results, and our results.


Fig. 6 Comparison with Li's work. From left to right: the input foggy images, Li's results, and our results.

In this paper, we propose a novel defogging method based on local extrema, which relies only on the assumption that the depth map tends to be smooth everywhere except at edges. Inspired by techniques in empirical data analysis and morphological image analysis, we use a local-extrema-based method to estimate the atmospheric veil. After obtaining multi-scale restoration results, we improve the visual effect with the multi-scale tone manipulation algorithm, which is very effective for controlling the amount of local contrast at different scales. Compared with other defogging methods, the experimental results demonstrate that our method achieves better defogging results without requiring any additional information (e.g., depth information) or user interaction. The method can be applied efficiently in practical foggy scenes.

On the other hand, our work shares a common limitation of most image defogging methods: it does not always obtain equally good results on heavily hazy scenes. Our method may perform poorly when it fails to identify the local minima and local maxima accurately; therefore, the parameter settings under different weather conditions are very important.

In future work, we will investigate better schemes for determining the parameters of the multi-scale tone manipulation algorithm and for calculating the extremal envelopes, in order to further improve the visual effect and the computational efficiency.

| [1] | Nayar S K, Narasimhan S G. Vision in bad weather. In: Proceedings of the 7th International Conference on Computer Vision. Kerkyra, Greece: IEEE Computer Society, 1999. 820-827 |

| [2] | Narasimhan S G, Nayar S K. Contrast restoration of weather degraded images. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2003, 25(6):713-724 |

| [3] | Shwartz S, Namer E, Schechner Y Y. Blind haze separation. In:Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. New York, USA:IEEE Press, 2006. 1984-1991 |

| [4] | Tan R T. Visibility in bad weather from a single image. In:Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition. Anchorage:IEEE Computer Society, 2008. 1-8 |

| [5] | Fattal R. Single image dehazing. ACM Transactions on Graphics (TOG), 2008, 27(3):1-9 |

| [6] | He K M, Sun J, Tang X O. Single image haze removal using dark channel prior. In:Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition. Miami, USA:IEEE Press, 2009. 1956-1963 |

| [7] | He K M, Sun J, Tang X O. Guided image filtering. In:Proceedings of the 2010 European Conference on Computer Vision (ECCV). Berlin, Germany:Springer-Verlag, 2010. 1-14 |

| [8] | Tarel J P, Hautière N. Fast visibility restoration from a single color or gray level image. In: Proceedings of the 12th International Conference on Computer Vision. Kyoto, Japan: IEEE, 2009. 2201-2208 |

| [9] | Yu J, Li D P, Liao Q M. Physics-based fast single image fog removal. Acta Automatica Sinica, 2011, 37(2):143-149 |

| [10] | Nishino K, Kratz L, Lombardi S. Bayesian defogging. International Journal of Computer Vision, 2012, 98(3):263-278 |

| [11] | Caraffa L, Tarel J P. Markov random field model for single image defogging. In:Proceedings of the 2013 Intelligent Vehicles Symposium. Gold Coast, QLD:IEEE, 2013. 994-999 |

| [12] | Subr K, Soler C, Durand F. Edge-preserving multiscale image decomposition based on local extrema. ACM Transactions on Graphics, 2009, 28(5):Article No. 147 |

| [13] | Xu L, Lu C W, Xu Y, Jia J Y. Image smoothing via L0 gradient Minimization. ACM Transactions on Graphics, 2011, 30(5):Article No. 174 |

| [14] | Levin A, Lischinski D, Weiss Y. Colorization using optimization. ACM Transactions on Graphics, 2004, 23(3):1-6 |

| [15] | Farbman Z, Fattal R, Lischinski D, Szeliski R. Edge-preserving decompositions for multi-scale tone and detail manipulation. ACM Transactions on Graphics, 2008, 27(3), Article No. 67. |

| [16] | Hautiere N, Tarel J P, Aubert D, Dumont É. Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Analysis and Stereology, 2008, 27(2):87-95 |

| [17] | Hautière N, Tarel J P, Halmaoui H, Brémond R, Aubert D. Enhanced fog detection and free space segmentation for car navigation. Machine Vision and Applications, 2014, 25(3):667-679 |

| [18] | Li P, Xiao C B, Yu J. Single image fog removal based on dark channel prior and local extrema. American Journal of Engineering and Technology Research, 2012, 12(2):96-104 |

2015, Vol.2