2. College of Computer Science and Technology, Harbin Engineering University, Harbin 150001, China, and also with Wuhan Second Ship Design and Research Institute, Wuhan 430064, China;

3. National Key Laboratory of Science and Technology on Autonomous Underwater Vehicle, Harbin Engineering University, Harbin 150001, China;

4. College of Computer Science and Technology, Harbin Engineering University, Harbin 150001, China

UNMANNED surface vehicles (USVs) are autonomous marine robots that have the advantages of small size,good concealment,high mobility,and low cost. At present,USVs are widely used in hydrographic survey,channel detection,environment monitoring,multi-AUV (autonomous underwater vehicle) communication and coordination,general robotics,etc[1, 2, 3, 4, 5]. USVs have also been successfully applied to military missions, such as searching,rescuing,and battlefield surveillance[6]. As we know,a safe autonomous navigation system is the base of robots systems[7]. Thus,designing a safe and reliable obstacle avoidance algorithm is an important research topic.

The existing algorithms for the local obstacle avoidance problem are mainly divided into two categories,i.e.,path searching-based algorithms[8, 9, 10, 11] and behavior-based algorithms[12, 13, 14, 15, 16, 17]. Path searching-based algorithms are usually applied to static obstacle avoidance for low-speed USVs[8]. These algorithms are poor in flexibility and cannot quickly cope with the obstacle avoidance problem. Behavior-based algorithms can quickly respond and are widely used in the local obstacle avoidance of USVs. However,all these algorithms only focus on the objects of obstacles and USVs,and ignore the other disturbance factors from complicated external marine environments.

In actual marine environment,USVs are affected by sea wind, currents,waves,and other external interference factors [18, 19, 20]. In the voyage,the motion of USVs is disturbed by sea wind and currents[21]. Thus,the above-mentioned obstacle avoidance algorithms are not valid in real marine environment.

Against the interference from sea wind and currents in complicated marine environments,the adaptive obstacle avoidance algorithm based on Sarsa on-policy reinforcement learning (AABSRL) is proposed in this study to enhance the performance of USVs. The "divide and conquer" strategy-based architecture is adopted in the design of AABSRL. The newly developed AABSRL is composed of two modules,behavior-based obstacle avoidance module and Sarsa on-policy reinforcement learning algorithm-based adaptive learning module (ALM). The local avoidance module focuses on obstacle avoidance in the environment and ignores external disturbance factors,while the disturbances from sea wind and currents are dealt with in the ALM. The performance of AABSRL is demonstrated by simulations and real boat sea trials of USVs in three real sea-state marine environments.

Ⅱ. ADAPTIVE OBSTACLE AVOIDANCE ARCHITECTURE BASED ON THE "DIVIDE AND CONQUER" STRATEGYThe existing studies have shown that the disturbance regularities from sea wind and currents to USV cannot be obtained from the data and that the threats from obstacles can be obtained according to the distribution of obstacles. Meanwhile,the mechanisms of impact from obstacles and outer disturbance factors are different. Therefore,the "divide and conquer" strategy-based architecture is proposed for constructing AABSRL. AABSRL is organized by the local obstacle avoidance module (LOAM) and the ALM. The diagram of the architecture is shown in Fig. 1.

|

Download:

|

| Fig. 1. Architecture of AABSRL. | |

LOAM is the base of AABSRL. Its responsibility is to generate the avoidance behavior (guidance angle and guidance translational velocity are used to guide USVs to realize the obstacle avoidance) according to the distribution data of obstacles and the state data of USVs. However,USVs are disturbed by sea wind and currents in complicated marine environments. The real course angle of USVs deviates from the guidance angle. Thus,the avoidance behavior from LOAM cannot be directly input into the basic motion control module (BMCM). Therefore,ALM is introduced into AABSRL to deal with the disturbances from sea wind and currents for USV.

The responsibility of ALM is to search for a course compensation angle to offset the course deviation angle that is generated by external disturbance factors. The course deviation angle is expressed by the deviation between the guidance angle and real course angle of USV. The core of ALM is the Sarsa on-policy reinforcement learning-based adaptive learning algorithm,which is used to explore the course compensation angle of USV. The input data of ALM include the state data of USV,the state data of sea wind and currents,and the guidance angle from LOAM. The trained course compensation strategy converges through a certain period of learning. The trained course compensation angle from ALM is combined into the navigation compensation module,and the combined avoidance behavior is output into BMCM.

Meanwhile,the "divide and conquer" adaptive obstacle avoidance architecture can enhance the security of USVs. If LOAM and ALM are designed together,the course compensation strategy must be trained in the disturbance obstacle environment. The course angle is selected by random exploration to search for the optimal course strategy. The random course angle inevitably leads USVs into collision situations. However,the "divide and conquer" architecture allows USVs to realize the disturbance regularity learning in non-obstacle environment. In addition,the trained course compensation angle can be used to directly offset the disturbances from sea wind and currents in disturbance obstacle environment.

Ⅲ. BEHAVIOR-BASED LOCAL OBSTACLE AVOIDANCE ALGORITHMThe dynamic characteristics of high-speed USVs are different from those of normal land wheel robots in that the basic motion control system is highly nonlinear. According to the current intelligent control methods[22],the course angle and translational velocity are set as input parameters of BMCM of high-speed USV, and the basic motion is realized by direction and speed control[23, 24, 25]. In this study,a typical behavior-based local obstacle avoidance algorithm based on heading window (OAABHW)[14] is chosen as the LOAM of AABSRL to deal with the issues in local obstacle avoidance. The rotational velocity and translational velocity-based input parameters of BMCM are improved in this research to fit the need of AABSRL and the basic motion characteristics of high-speed USV. However,the basic obstacle avoidance mechanisms of OAABHW are not changed after the improvement. Only the guidance parameters from OAABHW are replaced by course angle and translational velocity. Meanwhile,the original translational velocity model of OAABHW is constructed on the rotational velocity. Thus,it also needs to be improved. The improved OAABHW algorithm is denoted as TOAABHW.

Definition 1[14]. The span of translational velocity around the current translational velocity $v_c$ that can be reached by translational acceleration $a$ in the time-window $\Delta t$ is defined as translational velocity window

| $ \begin{align}\label{eq:1} {V}_{\mathrm{LineVelocity}}=\{v|v \in[v_c - a\Delta t,v_c + a \Delta t]\}. \end{align} $ | (1) |

Definition 2[14]. The space of heading angle around the current heading angle $\theta_{\mathrm{USV}}$ that can be reached within the time-window $\Delta t$ is defined as heading-window

| $ \begin{align}\label{eq:2} &{V}_{\mathrm{Heading}}=\big \{\theta | \theta \in\notag\\ &\quad[\theta_{\mathrm{USV}} + \omega_c \Delta t - \frac{1}{2}\dot{\omega}\Delta t^2,\theta_{\mathrm{USV}} + \omega_c \Delta t + \frac{1}{2}\dot{\omega}\Delta t^2]\big \}, \end{align} $ | (2) |

where $\theta_{\mathrm{USV}}$ is the current heading angle of USV in the absolute coordinate system,$\omega_c$ is the current rotational velocity of USV,and $\dot{\omega}$ is the rotational acceleration.

Definition 3. At time step $t$,the value difference between guidance angle $\theta_{\mathrm{Guidance}}^t$ and course angle $\theta_{\mathrm{Sailing}}^t$ is defined as the guidance deviation angle denoted as

| $ \begin{align}\label{eq:3} \theta_{\mathrm{delta}}^t = \theta_{\mathrm{Guidance}}^t - \theta_{\mathrm{Sailing}}^t, \end{align} $ | (3) |

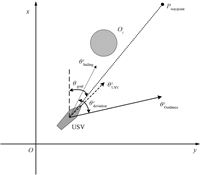

where $\theta_{\mathrm{delta}}^t \in [-\pi,\pi]$, $\theta_{\mathrm{Guidance}}^t$ is the output from TOAABHW and guides the USV to realize the safe navigation, $\theta_{\mathrm{Sailing}}^t$ is the course angle which is the moving direction of USV in the absolute coordinates, $\theta_{\mathrm{USV}}^t$ is the heading angle of USV. The diagram of these angles are shown in Fig. 2,where $O_i$ represents the obstacles in the environment.

|

Download:

|

| Fig. 2. Diagram of angles of USV in the voyage. | |

In TOAABHW,the course angle is set as the input parameter of BMCM,and the optimal course angle $\theta_{\mathrm{AvoidBest}}$ is used as the guidance angle directly. $\theta_{\mathrm{AvoidBest}}$ is obtained by calculating the heading deviation angle-based constraint optimization problem (details are provided in [14]).

| $ \begin{equation}\label{eq:4} \theta_{\mathrm{Guidance}}^t = \theta_{\mathrm{AvoidBest}}^t \end{equation} $ | (4) |

The translational velocity model of TOAABHW is constructed according to the guidance deviation angle $\theta_{\delta}^t$,and the model is shown as follows.

1) The translational velocity model in non-obstacle navigation is

| $ \begin{equation}\label{eq:5} v_{\mathrm{Guidance}}^t = v_{\mathrm{max}} \cdot \eta \cdot \left ( 1 - |\theta_{\mathrm{delta}}^t| / \frac{\pi}{2}\right). \end{equation} $ | (5) |

2) The translational velocity model in obstacle avoidance navigation is

| $ \begin{equation}\label{eq:6} v_{\mathrm{Guidance}}^t = v_{\mathrm{max}} \cdot \eta \cdot \frac{d_{\mathrm{obs}}^{\mathrm{min}}}{d_{\mathrm{safe}}}\cdot \left ( 1 - |\theta_{\mathrm{delta}}^t| / \frac{\pi}{2}\right), \end{equation} $ | (6) |

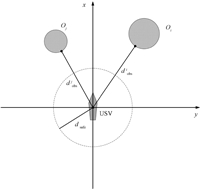

where $v_{\mathrm{Guidance}}^t$ is the guidance translational velocity,$\eta$ is a positive parameter, $\theta_{\mathrm{delta}}^t$ is the guidance deviation angle, $v_{\mathrm{max}}$ is the maximum translational velocity of USV in ${V}_{\mathrm{LineVelocity}}$,$d_{\mathrm{safe}}$ is the radius of safe region in the navigation, $d_{\mathrm{obs}}^{\mathrm{min}}$ is the distance from the USV to the nearest obstacle,and $v_{\mathrm{max}}$ and $d_{\mathrm{obs}}^{\mathrm{min}}$ are

| $ \begin{equation}\label{eq:7} v_{\mathrm{max}} = \max \{{V}_{\mathrm{LineVelocity}}\}, \end{equation} $ | (7) |

| $ \begin{equation}\label{eq:8} d_{\mathrm{obs}}^{\mathrm{min}} = \min \{d_{\mathrm{obs}}^1,d_{\mathrm{obs}}^2,\cdots,d_{\mathrm{obs}}^N\}, \end{equation} $ | (8) |

where $d_{\mathrm{obs}}^i$ is the distance from the USV to the $i$th obstacle around the USV. The diagram of $d_{\mathrm{safe}}$ and $d_{\mathrm{obs}}^i$ is shown in Fig. 3.

|

Download:

|

| Fig. 3. Diagram of $d_{\mathrm{safe}}$ and $d_{\mathrm{obs}}^i$. | |

The algorithm flow of TOAABHW is shown in Algorithm 1. Details are provided in [14].

Ⅳ. SARSA ON-POLICY STRATEGY REINFORCEMENT LEARNING-BASED ALMALM is the module of AABSRL for dealing with the external disturbance factors from sea wind and currents in complicated marine environment. In this section,we focus on the design of ALM based on the Sarsa on-policy strategy reinforcement learning algorithm.

A. Sarsa On-policy Reinforcement Learning AlgorithmReinforcement learning algorithms are mainly divided into two types,on-policy and off-policy algorithms[26, 27, 28]. The purpose of on-policy strategy reinforcement learning algorithms is to establish the relationship between exploration strategies and optimal behavior. At present,research has proved that on-policy algorithms have better performance than off-policy algorithms[27, 29]. The Sarsa on-policy strategy reinforcement learning algorithm (Sarsa algorithm) is a typical on-policy strategy reinforcement learning[29]. In learning,the value function of the Sarsa algorithm is updated according to the actual observation data based on Markov decision process (MDP),and the iteration of value function is determined by exploring the strategy.

The Sarsa algorithm is the core of ALM,and its responsibility is to search for the optimal compensation strategy. The Sarsa algorithm is founded on the theory of MDP,which includes state space,action space,state transition probability,payoff function,and action selection policy[30, 31]. Therefore,the Sarsa algorithm-based ALM of AABSRL comprises four parts,i.e., the state space of complicated marine environment,the space of course compensation angle action,the payoff function,and the selection policy.

The iteration formula of the state behavior value function of the Sarsa algorithm is denoted as

| $ \begin{align}\label{eq:9} Q(s_t,a_t)= &(1 - \alpha_t) Q(s_t,a_t) + \notag\\ &\alpha_t[r(s_t,a_t) + \gamma Q (s_{t+1},a_{t+1})]. \end{align} $ | (9) |

Here,

| $ \begin{align}\label{eq:10} \begin{cases} \alpha_{t+1} = \xi \alpha_t,\\ 0 \leq \alpha_t \leq 1,\\ \sum\limits_{t=0}^\infty \alpha_t = \infty,\\ \sum\limits_{t=0}^\infty \alpha_t^2 < \infty, \end{cases} \end{align} $ | (10) |

where $s_t$ is the state at time step $t$,$a_t$ is the action at time step $t$,$Q(s_t,a_t)$ is the state behavior value function,$\alpha_t$ is the learning factor,$\xi~(0 \leq \xi \leq 1)$ is the updating factor of $\alpha_t$,$\gamma~(0 < \gamma < 1)$ is the discount factor,and $r(s_t,a_t)$ is the payoff value function.

Algorithm 1. The Flow of TOAABHW

1. $while$ (USV is not reaching the destination)

2. $Calculate$ : ${V}_{\mathrm{LineVelocity}}$ ,${V}_{\mathrm{Heading}}$ ,${A}_{\mathrm{Earth}}^{\mathrm{ObsTan}}$;

3. ${V}_{\mathrm{FHead}} = {V}_{\mathrm{Heading}} - {A}_{\mathrm{Earth}}^{\mathrm{ObsTan}}$;

/* ${A}_{\mathrm{Earth}}^{\mathrm{ObsTan}}$is the infeasible direction set which caused by obstacles around the USV*/

4. $if$ ${V}_{\mathrm{Heading}} \neq \varnothing$

5. $\theta_{\mathrm{Guidance}}^t = \theta_{\mathrm{AvoidBest}}^t$;

6. $else$

7. if $|\theta_{\mathrm{goal}} - \theta_{\mathrm{max}}| \leq |\theta_{\mathrm{goal}} - \theta_{\mathrm{min}}|$

8. $\theta_{\mathrm{AvoidBest}}^t = \theta_{\mathrm{max}}$;

9. else

10. $\theta_{\mathrm{AvoidBest}}^t = \theta_{\mathrm{min}}$ ;

/* $\theta_{\mathrm{max}} = \max\{{V}_{\mathrm{Heading}}\}$, $\theta_{\mathrm{min}} = \min\{{V}_{\mathrm{Heading}}\}$, $\theta_{\mathrm{goal}}$ is the angle from the position of USV to the waypoint $P_{\mathrm{waypoint}}$. The diagram is shown in Fig. 2*./

11. $end if$

12. $end if $

13. $if $ $d_{\mathrm{obs}}^{\mathrm{min}} > d_{\mathrm{safe}}$ /* USV is in the safe situation*/

14. $v_{\mathrm{Guidance}}^t = v_{\mathrm{max}} \cdot \eta \cdot \left ( 1 - |\theta_{\mathrm{delta}}^t| / \frac{\pi}{2}\right )$;

15. $else $ /* USV is in the dangerous situation*/

16. $v_{\mathrm{Guidance}}^t = v_{\mathrm{max}} \cdot \eta \cdot \frac{d_{\mathrm{obs}}^{\mathrm{min}}}{d_{\mathrm{safe}}}\cdot \left ( 1 - |\theta_{\mathrm{delta}}^t| / \frac{\pi}{2}\right )$;

17. $end if$

18. $Output$: $\theta_{\mathrm{Guidance}}^t$ and $v_{\mathrm{Guidance}}^t$;

19. $end while$

B. Analysis of State Space of Complicated Marine EnvironmentThe motion of USVs is mainly disturbed by sea wind and currents in complicated marine environment. Thus,the state space of ALM of AABSRL is the state space of sea wind and currents. The disturbances from sea wind and currents to the hull are independent; thus,the states of sea wind and currents can be analyzed separately.

When USV sails at a high speed,the force suffered from sea wind is denoted as[21]

| $ \begin{equation}\label{eq:11} R_A = 0.5 \cdot \rho_a \cdot C_D \cdot A \cdot (v_{\mathrm{wind}}^2), \end{equation} $ | (11) |

where $\rho_a$ is the density of air,$A$ is the projected area of the hull above the water,$C_D$ is the drag coefficient of hull, and $v_{\mathrm{wind}}$ is the velocity of sea wind in the absolute coordinates.

The hull of USV is a nonuniform rigid body,and the mass of bow is relatively small. Under the side force from sea wind,the heading and course angles of USV are easily changed. The diagram of side force from sea wind is shown in Fig. 4,and the side force is denoted as

| $ \begin{align} R_{\mathrm{side}} = & f_{\mathrm{sign}}(\theta_{\mathrm{wind}}) \cdot 0.5 \cdot \rho_a \cdot \notag\\ &C_D \cdot A_{\mathrm{side}} \cdot (v_{\mathrm{wind}} \times \sin(\theta_{\mathrm{wind}}) )^2, \end{align} $ | (12) |

| $ \begin{equation}\label{eq:13} f_{\mathrm{sign}}(\theta_{\mathrm{wind}}) = \begin{cases} 1,\qquad & \theta_{\mathrm{wind}} \geq 0,\\ -1,& \theta_{\mathrm{wind}} < 0, \end{cases} \end{equation} $ | (13) |

where $\theta_{\mathrm{wind}}$ is the direction of sea wind in the relative coordinates of USV.

|

Download:

|

| Fig. 4. Diagram of the side force from sea wind. | |

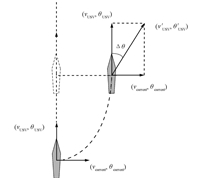

Meanwhile,USVs are disturbed by currents in complicated marine environment. Under the interference of currents,the heading angle is constant,whereas the course angle of the hull is changed. The affection is reflected by sub-velocity $v_{\mathrm{current}}$,which has the same direction as the currents. The deviation of USV under the action of currents is shown in Fig. 5.

|

Download:

|

| Fig. 5. Diagram of the deviation of USV under the action of currents. | |

The translational velocity and course angle of USV under the action of currents are respectively denoted as $v'_{\mathrm{USV}}$ and $\theta'_{\mathrm{USV}}$:

| $ \begin{align} v'_{\mathrm{USV}}=&[(v_{\mathrm{USV}}\cos (\theta_{\mathrm{USV}}) + v_{\mathrm{current}}\cos(\theta_{\mathrm{current}}))^2+\notag\\ &(v_{\mathrm{USV}}\sin (\theta_{\mathrm{USV}}) + v_{\mathrm{current}}\sin(\theta_{\mathrm{current}}))^2]^{1/2}, \end{align} $ | (14) |

| $ \begin{equation}\label{eq:15} \theta'_{\mathrm{USV}} = \mathrm{atan} \left ( \frac{v_{\mathrm{USV}}\cos (\theta_{\mathrm{USV}}) + v_{\mathrm{current}}\cos(\theta_{\mathrm{current}})}{v_{\mathrm{USV}}\sin (\theta_{\mathrm{USV}}) + v_{\mathrm{current}}\sin(\theta_{\mathrm{current}})} \right ), \end{equation} $ | (15) |

| $ \begin{equation}\label{eq:16} \Delta \theta = |\theta_{\mathrm{USV}} - \theta'_{\mathrm{USV}}|, \end{equation} $ | (16) |

where $\Delta \theta$ is the deviation angle between heading angle and course angle under the disturbance of currents, $v_{\mathrm{current}}$ is the velocity of currents,and $\theta_{\mathrm{current}}$ is the direction of currents.

According to the analysis above,the disturbance principles from sea wind and currents are completely different. These disturbance factors should be processed separately. Functions (12),(14) and (15) are continuous and differentiable. Thus,the states of sea wind and currents can be discretized. The discretized direction and velocity space of sea wind are $\theta_W$ and $V_W$,and the discredited direction and velocity space of currents are $\theta_C$ and $V_C$,i.e.,

| $ \begin{equation}\label{eq:17} \begin{cases} \theta_W = \theta_{W_1} \cup \theta_{W_2} \cup \cdots \cup \theta_{W_m},\\ V_W = V_{W_1} \cup V_{W_2} \cup \cdots \cup V_{W_n},\\ \theta_W \subset [-\pi,\pi],\\ V_W \subset [0,v_{\mathrm{wind}}^{\mathrm{max}}], \end{cases} \end{equation} $ | (17) |

| $ \begin{equation}\label{eq:18} \begin{cases} \theta_C = \theta_{C_1} \cup \theta_{C_2} \cup \cdots \cup \theta_{C_t},\\ V_C = V_{C_1} \cup V_{C_2} \cup \cdots \cup V_{C_p},\\ \theta_C \subset [-\pi,\pi],\\ V_C \subset [0,v_{\mathrm{current}}^{\mathrm{max}}], \end{cases} \end{equation} $ | (18) |

where $v_{\mathrm{wind}}^{\mathrm{max}}$ is the max velocity of sea wind and $v_{\mathrm{current}}^{\mathrm{max}}$ is the max velocity of currents.

C. Space of Course Angle Compensation ActionUnder the disturbances of sea wind and currents,the course angle deviates from the guidance angle. To keep the safe navigation of USV,the ALM of AABSRL needs to search for a certain course compensation angle $\Delta \theta_\mathrm{H}$ to offset the course deviation,and the action $\Delta \theta_\mathrm{H}$ needs to satisfy the following functions:

| $ \begin{align}\label{eq:19} \!\!\!\!\!\!\!\!\left\{\begin{array}{*{20}llll} \!\!\!\!0.5 \rho_a C_D A_{\mathrm{side}} f_{\mathrm{sign}} (\theta_{\mathrm{wind}} - \Delta \theta_\mathrm{H})\times \\ \qquad (v_{\mathrm{wind}} \sin (\theta_{\mathrm{wind}} - \Delta \theta_\mathrm{H}))^2 + \xi \sin (\Delta \theta_\mathrm{H}) F_p\!=\!0,\\ \!\!\!\! \sin (\Delta \theta_\mathrm{H}) v_{\mathrm{USV}} + v_{\mathrm{current}} \sin (\theta_{\mathrm{current}} -\theta_\mathrm{USV}) = 0, \end{array}\right. \end{align} $ | (19) |

| $ \begin{equation}\label{eq:20} f_{\mathrm{sign}}(\theta) = \begin{cases} 1,\qquad & {\rm if} \quad \theta \geq 0,\\ -1,& {\rm otherwise}, \end{cases} \end{equation} $ | (20) |

where $v_{\mathrm{wind}}$ and $v_{\mathrm{current}}$ are the velocities of sea wind and currents in the absolute coordinates, respectively,$\rho_{a}$ is the density of air,$A$ is the projected area of the hull above the water,$C_D$ is the drag coefficient of hull,$\theta_{\mathrm{wind}}$ and $\theta_{\mathrm{current}}$ are the directions of sea wind and currents in the relative coordinates,respectively,$F_p$ is the thrust force generated by the propeller of USV,and $\xi~(\xi > 0)$ is a proportion factor.

Building mathematical models for winds and currents is impossible. Therefore,the solution to $\Delta \theta_\mathrm{H}$ of (19) cannot be obtained through analytical methods. Therefore,the responsibility of the Sarsa algorithm-based ALM is used to search for an optimal solution for (19). The space of the course compensation action is defined as $A_\mathrm{H}$,i.e.,

| $ \begin{equation}\label{eq:21} \begin{cases} A_\mathrm{H} = \{0,\pm \varphi,\pm 2 \varphi,\cdots,\pm n \varphi\},\\ n \varphi \in [0,\pi],\\ A_\mathrm{H} \subset {V}_{\mathrm{Heading}}, \end{cases} \end{equation} $ | (21) |

where $\varphi$ is the resolution of course compensation angle and ${V}_{\mathrm{Heading}}$ is the heading window used to make the constraint on $\Delta \theta_\mathrm{H}$.

D. Selection Strategy of Course Compensation ActionThe Boltzmann distribution-based selection strategy is used to select the action of course compensation angle in the learning process,because it is typically greedy in the limit and infinite exploration (GLIE). Under the action of GLIE,the Sarsa algorithm can converge to the optimal action strategy with probability 1[27]. The probability of Boltzmann distribution is

| $ \begin{equation}\label{eq:22} p(s_t,a_t) = \frac{{\rm e}^{Q(s_t,a_t)/T}}{\sum\limits_{a \in A_\mathrm{H}}{\rm e}^{Q(s_t,a)/T}}, \end{equation} $ | (22) |

| $ \begin{equation}\label{eq:23} T = T_{\max} - \tau(T_{\max} - T_{\min}), \end{equation} $ | (23) |

where $p(s_t,a_t)$ is the probability of USV in the selection of course compensation action $a_t$ at state $s_t$,$Q(s_t,a_t)$ is the state behavior value function,$T~(T>0)$ is the distribution control factor,$T_{\max}$ and $T_{\min}$ are the maximum and minimum values of $T$,respectively,$\tau$ is the discount factor of $T$,and $A_\mathrm{H}$ is the space of course compensation action.

E. Payoff Value FunctionIn the trial-critics learning process,the implementation results of course compensation action $\Delta \theta_\mathrm{H}^t$ at $t$ are evaluated at $t+1$,and the action $\Delta \theta_\mathrm{H}^t$ is rewarded or penalized by payoff value function $r(s_t,a_t)$ at $t+1$. The guidance angle $\theta_{\mathrm{Guidance}}^t$ is set as the standard to evaluate the actual course angle $\theta_{\mathrm{Sailing}}^{t+1}$ of USV at $t+1$.

Definition 4. The absolute difference between $\theta_{\mathrm{Sailing}}^{t+1}$ and $\theta_{\mathrm{Guidance}}^t$ is defined as the course deviation angle of USV at $t+1$ and denoted as

| $ \begin{equation}\label{eq:24} \theta_{\mathrm{deviation}}^{t+1} = |\theta_{\mathrm{Sailing}}^{t+1} - \theta_{\mathrm{Guidance}}^t|. \end{equation} $ | (24) |

Definition 5. The difference value between $\theta_{\mathrm{deviation}}^{t+1}$ and $\theta_{\mathrm{deviation}}^{t}$ is defined as the tendency of course deviation angle of USV at $t+1$ and denoted as

| $ \begin{equation}\label{eq:25} \Delta \theta_{\mathrm{deviation}}^{t+1} = \theta_{\mathrm{deviation}}^{t+1} - \theta_{\mathrm{deviation}}^{t}. \end{equation} $ | (25) |

In the ALM of AABSRL,the course deviation angle $\theta_{\mathrm{deviation}}^{t+1}$ and its tendency $\Delta \theta_{\mathrm{deviation}}^{t+1}$ at $t+1$ are used to build the payoff value function as

| $ \begin{align}\label{eq:26} & r(s_t,a_t) =\notag\\ &\quad \begin{cases} 0.5,\qquad & {\rm if} \Delta \theta_{\mathrm{deviation}}^{t+1} \leq 0 \wedge \Delta \theta_{\mathrm{deviation}}^{t+1} \geq \theta_{\mathrm{gate}},\\ 1,& {\rm if} \Delta \theta_{\mathrm{deviation}}^{t+1} \leq 0 \wedge \Delta \theta_{\mathrm{deviation}}^{t+1} < \theta_{\mathrm{gate}},\\ -0.5,& {\rm if} \Delta \theta_{\mathrm{deviation}}^{t+1} > 0 \wedge \Delta \theta_{\mathrm{deviation}}^{t+1} < \theta_{\mathrm{gate}},\\ -1,& {\rm if} \Delta \theta_{\mathrm{deviation}}^{t+1} > 0 \wedge \Delta \theta_{\mathrm{deviation}}^{t+1} \geq \theta_{\mathrm{gate}},\\ \end{cases} \end{align} $ | (26) |

where $\theta_{\mathrm{gate}}$ is the threshold value to evaluate the course deviation angle $\Delta \theta_{\mathrm{deviation}}^{t+1}( t \in N)$.

Ⅴ. FLOW OF AABSRLAABSRL is composed of TOAABHW-based LOAM and Sarsa algorithm-based ALM. The flow of AABSRL is shown in Algorithm 2.

Algorithm 2. The flow of AABSRL1. $Calculate$: ${\theta}_W$ ,${V}_W$ ,${\theta}_c$,${V}_C$ according to (17),(18);

3. $Design$: $A_\mathrm{H}$ according to (21);

4. $Initialize$: $t = 0$,$Q(s_0,a_0)$,$\alpha_0$;

5. Select $a_0$,using (22);

6. $while $ (USV is not reaching the destination)

7. Calculate $\theta_{\mathrm{Guidance}}^t$ and $v_{\mathrm{Guidance}}^t$ using TOAABHW Algorithm 2;

8. $\theta_{\mathrm{out}}^t = \theta_{\mathrm{Guidance}}^t + a_t$;

9. $v_{\mathrm{out}}^t = v_{\mathrm{Guidance}}^t$;

/*The course compensation angle is only used to offset the disturbances from sea wind and currents.*/

10. Output $\theta_{\mathrm{out}}^t$ into BMCM;

11. Observe $s_{t+1}$ and determine $a_{t+1}$ based on (22);

12. Calculate $r(s_t,a_t)$ based on (26);

13. Update $Q(s_t,a_t)$ based on (9);

14. Update the learning factor $\alpha_t$ based on (10);

15. Update the distribution control factor $T$ based on (23);

16. $t=t+1$ ;

15. $end while$

Ⅵ. EXPERIMENT ANALYSISAt present,various obstacle avoidance algorithms have been proposed for USV. OAABHW is a typical behavior-based obstacle avoidance algorithm for USV,and its performance has been verified in simulation obstacle environments[12]. OAABHW is improved and denoted as TOAABHW to fit the basic motion control characteristics of high-speed USVs. The only difference between OAABHW and TOAABHW is the output parameters; their core mechanisms of obstacle avoidance are the same.

In this section,TOAABHW is set as the comparison standard to evaluate the basic performance of AABSRL,because TOAABHW can stand for mainstream algorithms for USV. A series of comparative experiments of TOAABHW and AABSRL has been performed using high-speed USVs in simulation environments and real marine environments. The basic performance of AABSRL is mainly evaluated by three aspects of USV,i.e.,track,course angle,and course deviation angle.

A. Simulations1)Simulation platform and relative parameter settings: The hardware of the simulation system is composed of three computers,which are host PC,graphic workstation,and PC/104-embedded computer. All sensor data,including obstacle distribution,disturbances of sea wind and currents,and state data of USV,are simulated by Vega visual simulation program running on the graphic workstation. In the PC/104,the operation system is vxWorks OS. The core obstacle avoidance program is developed and built as vxWorks image in host PC and downloaded to PC/104 by FTP. The simulated sensor data and avoidance behavior are transferred between workstation and PC/104 by UDP communications.

In the simulation environment,the random disturbances of sea wind and currents are designed according to the state parameters of three real sea-state marine environments. The direction of sea wind $\widetilde{\theta}_{\mathrm{wind}} $ in the absolute coordinates is set in the interval of $[7\pi /18,2\pi/3]$,and the velocity of sea wind $v_{\mathrm{wind}}$ is set between 2.1 and 8.4 m/s. The direction of current $\tilde{\theta}_{\mathrm{current}}$ in the absolute coordinates is set in the interval of $[\pi /3,2\pi/3]$ , and the velocity is set between 1.5 and 4 m/s. The cruising speed of USV in the non-obstacle situation is 24 knots.

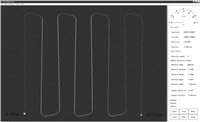

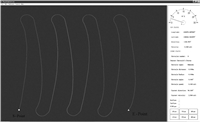

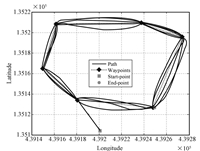

2)Experimental analysis in non-obstacle environments: In this section,the performance of AABSRL is evaluated through the comparative experiments in the non-obstacle environment. The track of USV under the guidance of TOAABHW in the non-obstacle and non-disturbance environments is shown in Fig. 6. The track is the ideal result,set as the standard of comparative analysis for the following experiments. When the interferences of random sea winds and currents in Section VI-A-1 are added into the non-obstacle environment,the result of TOAABHW is shown in Fig. 7. Compared with the result in Algorithm 1,the track of USV is obviously deviated from the ideal track.

|

Download:

|

| Fig. 6. Track of USV under the guidance of TOAABHW in the non-disturbance environment. | |

|

Download:

|

| Fig. 7. Track of USV under the guidance of TOAABHW in the disturbance environment. | |

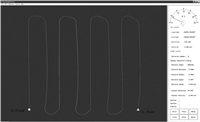

However,when USV is guided by AABSRL in the non-obstacle environment,the state of USV initially enters the exploration learning phase. After repeating the trial-critics based learning for 1 000 seconds,the course compensation strategy of sea wind and currents is formed and the state of AABSRL enters the exploitation phase. The result of AABSRL is shown in Fig. 8. The quality of the track in Fig. 8 is not as good as that in Fig. 6 but is much better than that in Fig. 7. The relative data of tracks in the comparative experiments are shown in Table Ⅰ.

|

Download:

|

| Fig. 8. Track of USV under the guidance of AABSRL in the disturbance environment. | |

|

|

Table Ⅰ RELATIVE DATA OF TRACKS IN DIFFERENT SITUATIONS |

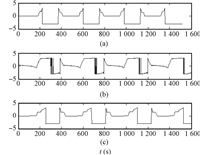

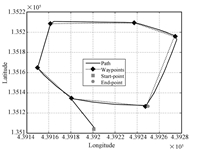

According to the comparative results of USV tracks in the disturbance situations,the performance of AABSRL is considerably better than that of TOAABHW. However,the superiority of AABSRL cannot be entirely expressed by the track of USV. Further comparisons of course angle and course deviation angle are conducted. The distribution of course angle corresponding to the experiments of Figs. 6 $\sim$ 8 is shown in Figs. 9 (a) $\sim$ (c). Compared with Fig. 9 (b),the distribution of course angle in Fig. 9 (c) is more stable and the change tendency is much closer to the distribution in Fig. 9 (a). The comparative results of course angle show that AABSRL can enhance the stability of USV in the disturbance environment.

|

Download:

|

| Fig. 9. The distribution of course angle of USV ((a) Under the guidance of TOAABHW in the non-disturbance environment; (b) Under the guidance of TOAABHW in the disturbance environment; (c) Under the guidance of AABSRL in the disturbance environment). | |

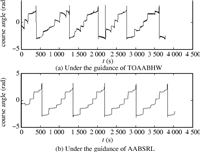

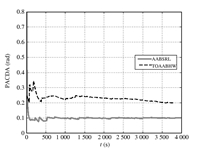

The course deviation angle is the degree of real course angle $\theta_{\mathrm{Sailing}}^{t+1}$ of USV that deviates from the guidance angle $\theta_{\mathrm{Guidance}}^{t}$. It is an important indicator in evaluating the performance of obstacle avoidance algorithms in the guidance of USV. The progressive average course deviation angle (PACDA) of USV is introduced to analyze the performance of AABSRL. PACDA is denoted as

| $ \begin{equation}\label{eq:27} \tilde{\theta}_{\mathrm{deviation}} = \frac{1}{N} \sum_{t=1}^{N} \theta_{\mathrm{deviation}}^{t+1}. \end{equation} $ | (27) |

In the non-disturbance environment,angle $\tilde{\theta}_{\mathrm{deviation}}$ of TOAABHW is shown by the thin line in Fig. 10,and the distribution is in the vicinity of 0.1 rad. In the disturbance environment,angle $\tilde{\theta}_{\mathrm{deviation}}$ of TOAABHW is shown by the dashed line in Fig. 10. Furthermore,the result of AABSRL in the disturbance environment is shown by the bold line in Fig. 10. The comparative results show that the ALM of AABSRL can reduce the deviation of USV in the disturbance situation and that the navigation capability of USV in the disturbance environments is enhanced effectively.

|

Download:

|

| Fig. 10. Distribution of PACDA of USV in different situations. | |

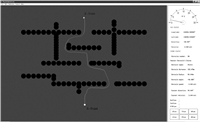

3) Experimental analysis in obstacle environments: A typical closed obstacle environment is designed to verify the performance of AABSRL in the disturbance situation of sea wind and currents. The distribution of obstacles is shown in Fig. 11.

|

Download:

|

| Fig. 11. Experiment scenario of closed obstacle environment | |

In the non-disturbance environment,the result of USV under the guidance of TOAABHW is shown in Fig. 12. The result shows that the typical obstacle avoidance algorithm can guide USVs to realize the safe navigation in the obstacle environment. In addition,the result of TOAABHW is set as the standard to evaluate the performance of AABSRL in the obstacle disturbance environment.

|

Download:

|

| Fig. 12. Result of TOAABHW in the closed obstacle region without disturbance. | |

In the obstacle disturbance environment (the disturbance datum of sea wind and currents are set as in Section VI-A-1),the result of USV under the guidance of TOAABHW is shown in Fig. 13. Compared with the results in Fig. 12,the traditional avoidance algorithms are not effective in the disturbance environments.

|

Download:

|

| Fig. 13. Result of TOAABHW in the disturbance closed obstacle region. | |

In the former comparative experiments (Section VI-A-2),AABSRL is trained in the non-obstacle disturbance environment. In the disturbance situation,the result of USV under the guidance of trained AABSRL in the closed obstacle environment is shown in Fig. 14. The course angle compensation strategy against the disturbance is obtained by the Sarsa algorithm-based ALM because of effective learning. The result shows that the disturbances from sea wind and currents are effectively dealt with by course compensation angle.

|

Download:

|

| Fig. 14. Result of AABSRL in closed obstacle region with disturbance. | |

In addition,the results verify that the proposed "divide and conquer" strategy-based architecture of AABSRL is valid. The course angle compensation strategy of the algorithm is trained in the non-obstacle disturbance environments,and the accumulated compensation course angle can be successfully used in obstacle disturbance environments. Due to the advantages of AABSRL,the danger of USV from learning phase in the obstacle environments is avoided effectively.

B. Real Boat Sea TrialsAlthough the performance of AABSRL has been verified through comparative experiments in simulation environments,the results cannot sufficiently illustrate the capacity of AABSRL yet. A real boat sea trial of USV is performed in the nearby sea of Weihai in Shandong province. The experiments are conducted in three sea-state marine environments. The real boat platform of USV in the sea trial is shown in Fig. 15. The physical design details of the real platform of the high-speed USV have been described in [24, 25]. The sensors for detecting the obstacles on the platform include radar,binocular vision,stereo vision,monocular vision, infrared cameras,and laser range finders.

|

Download:

|

| Fig. 15. Real-boat platform of USV in the sea trial. | |

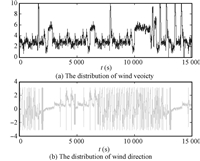

In the experiment,the states of sea wind and currents are shown in Figs. 16 and 17,and the velocity and direction are randomly distributed.

|

Download:

|

| Fig. 16. Distribution of sea wind in the sea trial. | |

|

Download:

|

| Fig. 17. Distribution of currents in the sea trial. | |

In the sea trial,the autonomous cruising speed of USV is 30 knots,and the entire distance of the navigation was approximately 46 km. The result of USV under the guidance of TOAABHW is shown in Fig. 18. For the disturbances from sea wind and currents, because TOAABHW is lack of adaptive learning mechanisms,the track of USV deviates from the ideal trajectory. When AABSRL is used to guide USV,the disturbance regularity of sea wind and currents is initially learned by ALM. Approximately 2 000 seconds later,the trained compensation behavior plays the role. The result of USV under the guidance of AABSRL is shown in Fig. 19. Compared with the trajectory of USV in Fig. 18,the navigation quality of USV is significantly improved.

|

Download:

|

| Fig. 18. The trajectory of USV under the guidance of TOAABHW. | |

|

Download:

|

| Fig. 19. The trajectory of USV under the guidance of AABSRL. | |

The course angle of USV under the guidance of TOAABHW is shown in Fig. 20 (a). The stability of the course angle of USV is not good in the navigation,because no function against the disturbances of sea wind and currents is available in TOAABHW. By contrast,the distribution of course angle of AABSRL is much better. The distribution of course angle is shown in Fig. 20 (b).

|

Download:

|

| Fig. 20. Distribution of course angle of USV in the sea trial. | |

The distributions of PACDA of USV under the guidance of the two algorithms in the sea trial are shown in Fig. 21. The comparative result shows that the disturbances from sea wind and currents can be dealt with by AABSRL and that the deviation of USV in real marine environment is effectively reduced. Meanwhile,under the guidance of AABSRL,USV can achieve a better navigation performance in real marine environment.

|

Download:

|

| Fig. 21. Distribution of PACDA of USV in the sea trial. | |

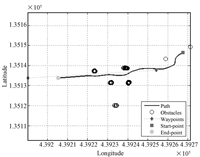

After learning in the non-obstacle environment,the ALM of AABSRL is trained sufficiently. The result of USV under the guidance of AABSRL in real marine obstacle environment is shown in Fig. 22. USV has successfully passed across the multi-obstacle region with the velocity of 20 knots. In the sea trial,the high-speed USV can achieve a good result under the guidance of AABSRL. However,the performance of the high-speed USV needs to be compared with the sea-trial results of existing USVs. The simple reactive obstacle avoidance algorithm (SROAA) has been proposed for USVs[16]. In the design process of SROAA,the external disturbance factors are ignored. The capacities of SROAA are verified by kayaks USV (the maximum velocity is 5 knots) in Singapore Harbor. The sea-trial experiment of SROAA cannot reappear; thus,the performance of SROAA and AABSRL is compared by existing results. Compared with the results of SROAA in [16],AABSRL can guide USV to achieve safe navigation with a higher velocity in a more complicated obstacle environment. The comparative results of USV under the guidance of SROAA and AABSRL are shown in Table Ⅱ.

|

Download:

|

| Fig. 22. The trajectory of USV under the guidance of AABSRL in real marine obstacle environment. | |

|

|

Table Ⅱ COMPARATIVE DATA OF SROAA AND AABSRL |

In this paper,the Sarsa algorithm-based adaptive obstacle avoidance algorithm AABSRL is proposed for USVs in complicated marine environments. Using the "divide and conquer" strategy-based architecture,we propose the ALM of AABSRL for dealing with the external disturbances from sea wind and currents. The results of comparative experiments in simulation environments and three sea-state marine environments demonstrate that the disturbances of sea wind and currents can be effectively dealt with by AABSRL. Furthermore,the performance of AABSRL is compared with that of SROAA. The comparation results show that AABSRL can guide USV to realize a higher velocity (20 knots $>$ 5 knots) navigation in more complex real marine environment than SROAA. In summary,the proposed AABSRL can enhance the navigation performance of USV and achieve safe navigation in real obstacle marine environment.

| [1] | Steimle E T, Hall M L. Unmanned surface vehicles as environmentalmonitoring and assessment tools. In: Proceedings of Oceans 2006.Massachusetts, Boston, USA: IEEE, 2006. 1-5 |

| [2] | Bibuli M, Bruzzone G, Caccia M, Indiveri G, Zizzari A A. Linefollowing guidance control: application to the Charlie unmanned surfacevehicle. In: Proceedings of IEEE/RSJ International Conference onIntelligent Robots and Systems, IROS 2008. Nice, France: IEEE, 2008.3641-3646 |

| [3] | Aditya S G, Christian S, Shu D, Daniel J S, Craig W. Guidance andcontrol of an unmanned surface vehicle exhibiting sternward motion. In:Proceedings of the Oceans. Hampton Roads, VA: IEEE, 2012. 1-9 |

| [4] | Raboin E, Svec P, Nau D, Gupta S K. Model-predictive target defense byteam of unmanned surface vehicles operating in uncertain environments.In: Proceedings of the 2013 IEEE International Conference on Roboticsand Automation (ICRA). Karlsruhe, Germany: IEEE, 2013. 3517-3522 |

| [5] | Wang J H, Chen C F, Huang P P, Gu W, Chu J X. Modeling, simulatingand experiment of an autonomous surface vehicle. Energy Procedia,2011, 11(2): 314-318 |

| [6] | Motwani A. Survey of unmanned surface vehicles, Technical ReportMIDAS.SMSE.2012.TR.001, Plymouth University, UK, 2012. |

| [7] | Tan Min, Wang Shuo. Research progress on robotics. Acta AutomaticaSinica, 2013, 39(7): 963-972 (in Chinese) |

| [8] | Naeem W, Irwin G W, Yang A. COLREGS-based collision avoidancestrategies for unmanned surface vehicles. Mechatronics, 2012, 22(6):669-678 |

| [9] | Szymak P, Praczyk T. Using neural-evolutionary-fuzzy algorithm foranti-collision system of unmanned surface vehicle. In: Proceedings of the17th International Conference on Methods and Models in Automationand Robotics (MMAR). Miedzyzdro, Poland: IEEE, 2012. 286-290 |

| [10] | Zhuang Jia-Yuan, Su Yu-Min, Liao Yu-Lei, Sun Han-Bing. Unmannedsurface vehicle local path planning based on marine radar. Journal ofShanghai Jiao Tong University, 2012, 46(9): 1371-1375 (in Chinese) |

| [11] | Yang A L, Niu Q, ZhaoWQ, Li K, Irwin GW. An efficient algorithm forgrid-based robotic path planning based on priority sorting of directionvectors. In: Proceedings of the 2010 International Conference on LifeSystem Modeling and Intelligent Computing. Berlin, Germany: Springer,2010. 456-466 |

| [12] | Huntsberger T, Aghazarian H, Howard A, Trotz D C. Stereo vision-basednavigation for autonomous surface vessels. Journal of Field Robotics,2011, 28(1): 3-18 |

| [13] | Larson J, Bruch M, Halterman R, Rogers J, Webster R. Advancesin autonomous obstacle avoidance for unmanned surface vehicles. In:Proceedings of the AUVSI Unmanned Systems North America 2007.Washington, DC, USA: AUVSI, 2007. 1-15 |

| [14] | Tang P P, Zhang R B, Liu D L, Zou Q J, Shi C T. Research on nearfieldobstacle avoidance for unmanned surface vehicle based on headingwindow. In: Proceedings of the Control and Decision Conference(CCDC). Taiyuan, China: IEEE, 2012. 1262-1267 |

| [15] | Krishnamurthy P, Khorrami F, Ng T L. Obstacle avoidance for unmannedsea surface vehicles: a hierarchical approach. In: Proceedings of the17th World Congress, the International Federation of Automatic Control.Seoul, Korea: IFAC, 2008. 6798-6803 |

| [16] | Bandyophadyay T, Sarcione L, Hover F S. A simple reactive obstacleavoidance algorithm and its application in Singapore harbor. Field andService Robotics, 2010, 62: 455-465 |

| [17] | Kuwata Y, Wolf M T, Zarzhitsky D, Huntsberger T L. Safe maritimeautonomous navigation with COLREGS, using velocity obstacles. IEEEJournal of Oceanic Engineering, 2014, 39(1): 110-119 |

| [18] | Campbell S, NaeemW, Irwin GW. A review on improving the autonomyof unmanned surface vehicles through intelligent collision avoidancemanoeuvres. Annual Reviews in Control, 2012, 36(2): 267-283 |

| [19] | Thakur A, Svec P, Gupta S K. GPU based generation of state transitionmodels using simulations for unmanned surface vehicle trajectoryplanning. Robotics and Autonomous Systems, 2012, 60(12): 1457-1471 |

| [20] | Stelzer R, Prollb T. Autonomous sailboat navigation for short courseracing. Robotics and Autonomous Systems, 2008, 56(7): 604-614 |

| [21] | Faltinsen O M. Hydrodynamics of High-Speed Marine Vehicles. Cambridge,UK: Cambridge University Press, 2005. |

| [22] | Xin Bin, Chen Jie, Peng Zhi-Hong. Intelligent optimized control:overview and prospect. Acta Automatica Sinica, 2013, 39(11):1831-1848 (in Chinese) |

| [23] | Yu Z Y, Bao X P, Nonami K. Course keeping control of an autonomousboat using low cost sensors. Journal of System Design and Dynamics,2008, 2(1): 389-400 |

| [24] | Wu G X, Sun H B, Zou J, Wan L. The basic motion control strategyfor the water-jet-propelled USV. In: Proceedings of the 2009 IEEEInternational Conference on Mechatronics and Automation. Changchun,China: IEEE, 2009.611-616 |

| [25] | Wu G X, Zou J, Wan L, Qin Z B. Design of the intelligence motioncontrol system for the high-speed USV. In: Proceedings of the 2ndInternational Conference on Intelligent Computation Technology andAutomation. Changsha, China: IEEE, 2009. 50-53 |

| [26] | Sutton R S, Barto A G. Reinforcement Learning: An Introduction.Cambridge, MA: MIT Press, 1998. |

| [27] | Singh S P, Jaakkola T, Littman M L, Szepesvari C. Convergence resultsfor single-step on-policy reinforcement-learning algorithms. MachineLearning, 2000, 38(3): 287-308 |

| [28] | Watkins C. Learning from Delayed Rewards [Ph. D. dissertation], KingsCollege, University of Cambridge, UK, 1989. |

| [29] | Rummery G A, Niranjan M. On-line Q-learning Using ConnectionistSystems. Cambridge, UK: Department of Engineering, University ofCambridge, 1994. |

| [30] | Feinberg E A, Shwartz A. Handbook of Markov Decision Processes:Methods and Applications. Boston, MA: Kluwer Academic Publishers,2002. |

| [31] | Xu Xin, Shen Dong, Gao Yan-Qing, Wang Kai. Learning controlof dynamical systems based on Markov decision processes: researchfrontiers and outlooks. Acta Automatica Sinica, 2012, 38(5): 673-687(in Chinese) |

2014, Vol.1

2014, Vol.1