For repeatable control tasks, iterative learning control (ILC) is one of the most suitable and effective control schemes. ILC can be classified into two categories, i.e., traditional ILC [1, 2, 3, 4, 5, 6] and adaptive ILC (AILC) [7, 8, 9, 10, 11, 12, 13, 14, 15]. In traditional ILC, the control input is updated directly by a learning mechanism that uses the error and input information from the previous iteration. However, traditional ILC requires the global Lipschitz continuity condition, which makes it difficult to apply to certain systems. AILC, which combines the advantages of adaptive control and ILC, is an alternative way to overcome this problem. In AILC, the control parameters are tuned during the learning process, and the so-called composite energy function (CEF) [10] is usually constructed for the stability analysis. In the past decade, several AILC schemes for uncertain nonlinear systems have been developed [11, 12, 13, 14, 15].

In practice, systems with time delays are frequently encountered. Time delays make the control task more complicated and challenging, especially when the delays are not completely known. The stabilization problem of control systems with time delays has drawn much attention [16, 17, 18]. Although many approaches have been developed in the field of AILC, only a few results are available for nonlinear systems with time delays [19, 20, 21]. In [20], an AILC strategy is developed for a class of simple first-order systems with unknown time-varying parameters and unknown time-varying delays. In [21], an adaptive learning control scheme is designed for a class of first-order nonlinearly parameterized systems with unknown periodically time-varying delays, and is then extended to a class of high-order systems with both time-varying and time-invariant parameters. However, these works all require the uncertainties to satisfy the local Lipschitz condition and the nonlinear parameterization condition, so that adaptive learning laws can be designed to estimate the unknown time-varying parameters, and the delay-independent terms produced by the derivative of the Lyapunov-Krasovskii functional can be compensated for directly by the controller. To the best of our knowledge, no results have yet been reported for nonlinear systems that are neither in parametric form nor locally Lipschitz.

Non-smooth nonlinear characteristics such as dead-zone, hysteresis, saturation and backlash are common in actuators and sensors. Dead-zone is one of the most important non-smooth nonlinearities in many industrial motion control systems. It can severely degrade system performance and complicates controller design. Therefore, the effect of dead-zone has long received attention in the control community [22, 23, 24, 25, 26, 27]. To overcome the problem of unknown dead-zone in control system design, a direct method is to construct an adaptive dead-zone inverse. This approach was pioneered by Tao and Kokotovic [22]. Continuous and discrete adaptive dead-zone inverses were built for linear systems with unmeasurable dead-zone outputs [23, 24]. Under the assumption that the dead-zone slopes in the positive and negative regions are equal, a robust adaptive control method that does not use the dead-zone inverse was presented for a class of special nonlinear systems [25]. In the work of Zhang and Ge [26, 27], the dead-zone was reconstructed as a linear system with a static time-varying gain and a bounded disturbance by introducing a characteristic function. In [28], input dead-zone was taken into account, and it was proved that the simplest ILC scheme retains its ability to achieve satisfactory tracking performance. As far as we know, few works in the current AILC literature deal with nonlinear systems with dead-zone nonlinearity.

In this paper, we present a neural network (NN) based AILC scheme for a class of nonlinear time-varying systems with unknown time-varying delays and unknown input dead-zone. The main design difficulty lies in dealing with the dead-zone nonlinearity and with the delay-dependent uncertainty, which is neither parameterized nor locally Lipschitz. In our work, the dead-zone output is represented as a novel and simple nonlinear system with a time-varying gain, which is more general than the linear form in [22]. This approach removes the assumption that the dead-zone is linear outside the dead-band, and it does not require constructing a dead-zone inverse. An appropriate Lyapunov-Krasovskii functional and Young's inequality are combined to eliminate the unknown time-varying delays, which makes NN parameterization with known inputs possible. Radial basis function neural networks (RBF NNs) are used as approximators to compensate for the unknown nonlinear system uncertainties. Since the optimal NN weights are usually unavailable, adaptive algorithms are designed to search for suitable parameter values during the iteration process. Furthermore, the possible singularity caused by the reciprocal of the tracking error is avoided by employing the hyperbolic tangent function. By constructing a Lyapunov-like CEF and exploiting the properties of the hyperbolic tangent function, the stability conclusion is obtained in two cases via a rigorous analysis. In addition, a boundary layer function is introduced to remove the identical initial condition required by the majority of ILC schemes.

The rest of this paper is organized as follows. The problem formulation and preliminaries are given in Section II. The AILC design is developed in Section III. The CEF-based stability analysis is presented in Section IV. A simulation example verifying the validity of the proposed scheme is presented in Section V, followed by the conclusions in Section VI.

Ⅱ. PROBLEM FORMULATION AND PRELIMINARIES

A. Problem Formulation

In this paper, we consider a class of nonlinear time-varying systems with unknown time-varying delays and dead-zone, running over a finite time interval $[0,T]$ repeatedly, given by

| \begin{align} \begin{cases} {{{\dot{x}}}_{i,k}}\left( t \right)={{x}_{i+1,k}}\left( t \right),\quad i=1,2,\cdots ,n-1,\\ {{{\dot{x}}}_{n,k}}\left( t \right)=f\left( {{\pmb X}_{k}}\left( t \right),t \right)+h\left( {{\pmb X}_{\tau ,k}}\left( t \right),t \right)+\\ \quad \quad \quad \quad \quad g\left( {{\pmb X}_{k}}\left( t \right),t \right){{u}_{k}}\left( t \right)+d\left( t \right),\\ {{y}_{k}}\left( t \right)={{x}_{1,k}}\left( t \right),{{u}_{k}}\left( t \right)=D\left( {{v}_{k}}\left( t \right) \right),\quad t\in \left[0,T \right],\\ {{x}_{i,k}}\left( t \right)={{\varpi }_{i}}\left( t \right),\quad t\in \left[-{{\tau }_{\max }},0 \right),\quad i=1,2,\cdots ,n,\\ \end{cases} \end{align} | (1) |

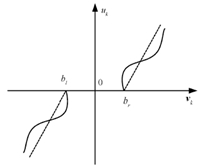

where $t$ is time, $ k\in {\bf N} $ denotes the iteration index, ${{y}_{k}}\left( t \right)\in \bf R $ and ${{x}_{i,k}}\left( t \right)\in \bf R $ $(i=1,2,\cdots ,n)$ are the system output and states, respectively, ${{\pmb X}_{k}}\left( t \right):= {{\left[{{x}_{1,k}}\left( t \right),{{x}_{2,k}}\left( t \right),\cdots ,{{x}_{n,k}}\left( t \right) \right]}^{\rm T}}$ is the state vector, ${{\tau }_{i}}\left( t \right)$ are the unknown time-varying state delays, ${{x}_{{{\tau }_{i}},k}}\left( t \right):= {{x}_{i,k}}\left( t-{{\tau }_{i}}\left( t \right) \right)$ $(i=1,2,\cdots ,n)$, ${{\pmb X}_{\tau ,k}}\left( t \right)={{\left[ {{x}_{{{\tau }_{1}},k}}\left( t \right),{{x}_{{{\tau }_{2}},k}}\left( t \right),\cdots ,{{x}_{{{\tau }_{n}},k}}\left( t \right) \right]}^{\rm T}}$, $f\left( \cdot ,\cdot \right)$ and $g\left( \cdot ,\cdot \right)$ are unknown smooth functions, $h\left( \cdot ,\cdot \right)$ is an unknown smooth function of the delayed states whose bound is given in Assumption 3, $d\left( t \right)$ is an unknown bounded external disturbance, ${{\varpi }_{i}}\left( t \right)$ $(i=1,2,\cdots ,n)$ are the initial functions of the delayed states, ${{v}_{k}}\left( t \right)\in \bf R$ is the control input, and the actuator nonlinearity $D\left( {{v}_{k}}\left( t \right) \right)$ is a dead-zone characteristic described by

| \begin{align} {u_k}\left( t \right) = D\left( {{v_k}\left( t \right)} \right) = \left\{ {\begin{array}{*{20}{l}} {m\left( t \right)\left( {{v_k}\left( t \right) - {b_r}} \right),}&{{\rm{for }}{v_k}\left( t \right) \ge {b_r},}\\ {0,}&{{\rm{for }}{b_l} < {v_k}\left( t \right) < {b_r},}\\ {m\left( t \right)\left( {{v_k}\left( t \right) - {b_l}} \right),}&{{\rm{for }}{v_k}\left( t \right) \le {b_l},} \end{array}} \right. \end{align} | (2) |


Fig. 1. Dead-zone model.

The dead-zone output ${{u}_{k}}\left( t \right)$ is not available for measurement. The assumption on the dead-zone parameters is as follows.

Assumption 1. The dead-zone parameters ${{b}_{r}}$, ${{b}_{l}}$ and $m\left( t \right)$ are bounded, i.e., there exist unknown constants ${{b}_{r\min }}$, ${{b}_{r\max }}$, ${{b}_{l\min }}$, ${{b}_{l\max }}$, ${{m}_{\min }}$ and ${{m}_{\max }}$ such that ${{b}_{r\min }}\le {{b}_{r}}\le {{b}_{r\max }}$, ${{b}_{l\min }}\le {{b}_{l}}\le {{b}_{l\max }}$ and ${{m}_{\min }}\le m\left( t \right)\le {{m}_{\max }}$.

From a practical point of view, the dead-zone nonlinearity (2) can be rewritten as

| \begin{align} {{u}_{k}}\left( t \right)=D\left( {{v}_{k}} \right)=m\left( t \right){{v}_{k}}\left( t \right)-{{d}_{1}}\left( {{v}_{k}}\left( t \right) \right) \end{align} | (3) |

with

| \begin{align} {{d}_{1}}\left( {{v}_{k}}\left( t \right) \right)= \begin{cases} m\left( t \right){{b}_{r}},\text{ for }{{v}_{k}}\left( t \right)\ge {{b}_{r}},\\ m\left( t \right){{v}_{k}}\left( t \right),\text{ for }{{b}_{l}}<{{v}_{k}}\left( t \right)<{{b}_{r}},\\ m\left( t \right){{b}_{l}},\text{ for }{{v}_{k}}\left( t \right)\le {{b}_{l}}. \\ \end{cases} \end{align} | (4) |

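Under Assumption 1, ${{d}_{1}}\left( {{v}_{k}}\left( t \right) \right)$ is bounded: outside the dead-band it equals $m\left( t \right){{b}_{r}}$ or $m\left( t \right){{b}_{l}}$, and inside the dead-band $\left| {{v}_{k}}\left( t \right) \right|\le \max \left\{ \left| {{b}_{l}} \right|,\left| {{b}_{r}} \right| \right\}$, so that $\left| {{d}_{1}}\left( {{v}_{k}}\left( t \right) \right) \right|\le {{m}_{\max }}\max \left\{ \left| {{b}_{l}} \right|,\left| {{b}_{r}} \right| \right\}$.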
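As a quick sanity check of the decomposition (3)-(4), the following Python sketch evaluates the dead-zone (2) and verifies numerically that $D(v)=m v-d_1(v)$ and that $|d_1(v)|\le m\max\{|b_l|,|b_r|\}$. It assumes NumPy, a constant slope $m$, and hypothetical parameter values ($m=1.5$, $b_r=0.4$, $b_l=-0.3$) that are not taken from this paper.

```python
import numpy as np


def dead_zone(v, m, b_r, b_l):
    """Dead-zone output u = D(v) as in (2); assumes b_l < b_r and slope m > 0."""
    v = np.asarray(v, dtype=float)
    return np.where(v >= b_r, m * (v - b_r),
                    np.where(v <= b_l, m * (v - b_l), 0.0))


def d1(v, m, b_r, b_l):
    """Bounded residual d1(v) as in (4), so that D(v) = m * v - d1(v)."""
    v = np.asarray(v, dtype=float)
    return np.where(v >= b_r, m * b_r,
                    np.where(v <= b_l, m * b_l, m * v))


if __name__ == "__main__":
    m, b_r, b_l = 1.5, 0.4, -0.3          # hypothetical dead-zone parameters
    v = np.linspace(-2.0, 2.0, 401)
    gap = np.max(np.abs(dead_zone(v, m, b_r, b_l) - (m * v - d1(v, m, b_r, b_l))))
    print("max |D(v) - (m v - d1(v))| =", gap)
    print("max |d1(v)| =", np.max(np.abs(d1(v, m, b_r, b_l))),
          "bound =", m * max(abs(b_r), abs(b_l)))
```

The nested `np.where` mirrors the three branches of (2) and (4) directly, so each branch can be compared line by line with the equations.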

In this paper, the desired trajectory for the state vector ${{\pmb X}_{k}}\left( t \right)$ to follow is ${{\pmb X}_{d}}\left( t \right)={{\left[{{y}_{d}}\left( t \right),{{{\dot{y}}}_{d}}\left( t \right),\cdots ,y_{d}^{\left( n-1 \right)}\left( t \right) \right]}^{\rm T}}$. Define the state tracking error as ${{\pmb e}_{k}}\left( t \right)={{\left[{{e}_{1,k}},{{e}_{2,k}},\cdots ,{{e}_{n,k}} \right]}^{\rm T}}={{\pmb X}_{k}}\left( t \right)-{{\pmb X}_{d}}\left( t \right)$. The control objective of this paper is as follows: for the desired trajectory ${{\pmb X}_{d}}\left( t \right)$, design an AILC law ${{v}_{k}}\left( t \right)$ such that all the closed-loop signals remain bounded and the tracking error ${{\pmb e}_{k}}\left( t \right)$ converges to a small neighborhood of the origin as $k\to \infty $, i.e., $\lim_{k\to \infty } \int_{0}^{T}{{\left\| {{\pmb e}_{k}}\left( \sigma \right) \right\|}^{2}}\text{d}\sigma \le {{\varepsilon }_{esk}}$, where ${{\varepsilon }_{esk}}$ is a small positive error tolerance and $\left\| \cdot \right\|$ denotes the Euclidean norm. Define the filtered tracking error as ${{e}_{sk}}\left( t \right)=\left[{{\pmb \Lambda }^{\rm T}}\ \ 1 \right]{{\pmb e}_{k}}\left( t \right)$, where ${\pmb \Lambda} ={{\left[{{\lambda }_{1}},{{\lambda }_{2}},\cdots,{{\lambda }_{n-1}} \right]}^{\rm T}}$ and ${{\lambda }_{1}},{{\lambda }_{2}},\cdots,{{\lambda }_{n-1}}$ are the coefficients of the Hurwitz polynomial $H\left( s \right)={{s}^{n-1}}+{{\lambda }_{n-1}}{{s}^{n-2}}+\cdots +{{\lambda }_{1}}$. Since $H\left( s \right)$ is Hurwitz, if ${{e}_{sk}}\left( t \right)$ approaches zero as $k\to \infty $, then $\left\| {{\pmb e}_{k}}\left( t \right) \right\|$ converges to the origin asymptotically.
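The filtered error and the Hurwitz requirement on $H(s)$ can be illustrated with a short Python sketch. It assumes NumPy; the helper names `filtered_error` and `is_hurwitz` and the example coefficients are hypothetical. For $n=3$ and $\pmb\Lambda=[2,3]^{\rm T}$, $H(s)=s^2+3s+2=(s+1)(s+2)$ is Hurwitz.

```python
import numpy as np


def filtered_error(e, lam):
    """e_s = [Lambda^T 1] e at one time instant.

    e   : tracking errors [e_1, ..., e_n]
    lam : Hurwitz coefficients [lambda_1, ..., lambda_{n-1}]
    """
    e = np.asarray(e, dtype=float)
    lam = np.asarray(lam, dtype=float)
    return float(lam @ e[:-1] + e[-1])


def is_hurwitz(lam):
    """True if H(s) = s^{n-1} + lambda_{n-1} s^{n-2} + ... + lambda_1 has all roots in Re(s) < 0."""
    # np.roots expects coefficients ordered from the highest power down.
    coeffs = np.concatenate(([1.0], np.asarray(lam, dtype=float)[::-1]))
    return bool(np.all(np.roots(coeffs).real < 0.0))


if __name__ == "__main__":
    lam = [2.0, 3.0]                       # hypothetical: H(s) = s^2 + 3s + 2
    print(is_hurwitz(lam))                 # roots are -1 and -2
    print(filtered_error([0.1, -0.2, 0.05], lam))
```

Reversing `lam` is needed because $\lambda_1$ is the constant term of $H(s)$ while `np.roots` lists coefficients from the leading power downward.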

To facilitate the control system design, the following reasonable assumptions are made.

Assumption 2. The unknown time-varying state delays ${{\tau }_{i}}\left( t \right) $ satisfy $0\le {{\tau }_{i}}\left( t \right)\le {{\tau }_{\max }}$ and ${{\dot{\tau }}_{i}}\left( t \right)\le \kappa <1$, $i=1,2,\cdots ,n$, where ${{\tau }_{\max }}$ and $\kappa $ are unknown positive scalars.

Assumption 3. The unknown smooth function $h\left( \cdot ,\cdot \right)$ satisfies

| \begin{align} \left| h\left( {{\pmb X}_{\tau ,k}},t \right) \right|\le \theta \left( t \right)\sum\limits_{j=1}^{n}{{{\rho }_{j}}\left( {{x}_{{{\tau }_{j}},k}}\left( t \right) \right)} \end{align} | (5) |

where $\theta \left( t \right)$ is an unknown time-varying parameter and ${{\rho }_{j}}\left( \cdot \right)$ are known positive smooth functions.

Assumption 4. The sign of $g\left( \cdot ,\cdot \right)$ is known, and there exist constants $0<{{g}_{\min }}\le {{g}_{\max }}$ such that ${{g}_{\min }}\le \left| g\left( \cdot ,\cdot \right) \right|\le {{g}_{\max }}$. Without loss of generality, we assume $g\left( \cdot ,\cdot \right)>0$.

Assumption 5[14]. The initial state errors ${{e}_{i,k}}\left( 0 \right)$ at each iteration are not necessarily zero, small or fixed, but are assumed to be bounded.

Assumption 6. The desired state trajectory ${{\pmb X}_{d}}\left( t \right)$ is continuous, bounded and available.

Assumption 7. The unknown external disturbance $d\left( t \right)$ is bounded, i.e., there exists an unknown constant $d_{\rm max}$ satisfying $\left| d\left( t \right) \right|\le {{d}_{\max }}$.

Remark 1. Assumption 2 is necessary for the control of time-varying delay systems, as it ensures that the delay terms can be eliminated by a Lyapunov-Krasovskii functional. Moreover, Assumption 2 is milder than those in [19, 20, 21], which require the true values of ${{\tau }_{\max }}$ and $\kappa $ to be known.

Remark 2. Assumption 3 imposes only a mild requirement on $h\left( \cdot ,\cdot \right)$. Since $h\left( \cdot ,\cdot \right)$ is continuous on $\left[0,T \right]$, it is obviously bounded. Compared with the local Lipschitz condition with known upper bound functions assumed in [20, 21], this assumption is considerably relaxed and easily satisfied. In fact, the functions ${{\rho }_{j}}\left( \cdot \right)$ need not be known, since they are approximated by NNs as part of the uncertainties, as will be shown later.

Remark 3. The control gain bounds ${{g}_{\min }}$ and ${{g}_{\max }}$ are required only for the analysis; their true values need not be known, since they are not used in the controller design.

B. A Motivating Example

To illustrate the main idea of CEF-based AILC, we first consider a simple scalar system running over the time interval $\left[ 0,T \right]$ with the following dynamic model

| \begin{align} {{\dot{z}}_{k}}\left( t \right)=\theta \left( t \right)\xi \left( {{z}_{k}},t \right)+u_{k}^{z}\left( t \right), \end{align} | (6) |

where ${{z}_{k}}\left( t \right)$ is the system state in the ${k}$-th iteration, $u_{k}^{z}\left( t \right)$ is the control input, $\theta \left( t \right)$ is an unknown time-varying parameter, and $\xi \left( {{z}_{k}},t \right)$ is a known time-varying function. The desired trajectory for ${{z}_{k}}\left( t \right)$ is ${{z}_{r}}\left( t \right)$, $t\in \left[0,T \right]$. Define the tracking error as $e_{k}^{z}\left( t \right)={{z}_{k}}\left( t \right)-{{z}_{r}}\left( t \right)$ and design the control law for the ${k}$-th iteration as

| \begin{align} u_{k}^{z}\left( t \right)=-{{k}_{1}}e_{k}^{z}\left( t \right)+{{\dot{z}}_{r}}\left( t \right)-{{\hat{\theta }}_{k}}\left( t \right)\xi \left( {{z}_{k}},t \right), \end{align} | (7) |

where ${{k}_{1}}>0$ is a design parameter and ${{\hat{\theta }}_{k}}\left( t \right)$ is the estimate of $\theta \left( t \right)$ in the $k$-th iteration. The adaptive learning law for the unknown time-varying parameter is designed as

| \begin{align} \begin{cases} {{{\hat{\theta }}}_{k}}\left( t \right)={{{\hat{\theta }}}_{k-1}}\left( t \right)+q\xi \left( {{z}_{k}},t \right)e_{k}^{z}\left( t \right),\\ {{{\hat{\theta }}}_{0}}\left( t \right)=0, \end{cases}\end{align} | (8) |

for $t\in \left[0,T \right]$, where $q>0$ is the learning gain.

Define the estimation error as ${{\tilde{\theta }}_{k}}\left( t \right)={{\hat{\theta }}_{k}}\left( t \right)-\theta \left( t \right)$, and choose a Lyapunov-like CEF as follows:

| \begin{align} E_{k}^{z}\left( t \right)=\frac{1}{2}{\left( e_{k}^{z}\left( t \right) \right )}^{2}+\frac{1}{2q}\int_{0}^{t}{{{{\tilde{\theta }}}_{k}^{2}}\left( \sigma \right)\text{d}\sigma }. \end{align} | (9) |

Throughout this paper, $\sigma $ denotes the integration variable. Assuming the identical initial condition $e_{k}^{z}\left( 0 \right)=0$, it can then be derived that

| \begin{align} \Delta E_{k}^{z}\left( t \right)=E_{k}^{z}\left( t \right)-E_{k-1}^{z}\left( t \right)\le-{{k}_{1}}\int_{0}^{t}{{{\left( e_{k}^{z}\left( \sigma \right) \right)}^{2}}\text{d}\sigma }. \end{align} | (10) |
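The steps behind the bound (10) can be sketched as follows, writing $\xi_k=\xi(z_k,t)$ and assuming the identical initial condition $e_k^z(0)=0$, so that $\frac{1}{2}(e_k^z(t))^2=\int_0^t e_k^z\dot e_k^z\,\text{d}\sigma$. The first line follows from (6) and (7), the second from (8), and the last from combining them with (9); the cross terms $\pm\tilde\theta_k\xi_k e_k^z$ cancel.

```latex
\begin{align}
\dot e_k^z &= \theta\xi_k + u_k^z - \dot z_r = -k_1 e_k^z - \tilde\theta_k\,\xi_k, \\
\tilde\theta_k^2 - \tilde\theta_{k-1}^2 &= \big(\tilde\theta_k - \tilde\theta_{k-1}\big)\big(\tilde\theta_k + \tilde\theta_{k-1}\big)
  = 2q\,\tilde\theta_k\,\xi_k e_k^z - q^2\xi_k^2 \big(e_k^z\big)^2, \\
\Delta E_k^z(t) &= \int_0^t \Big( e_k^z \dot e_k^z + \frac{1}{2q}\big(\tilde\theta_k^2 - \tilde\theta_{k-1}^2\big) \Big)\text{d}\sigma - \frac{1}{2}\big(e_{k-1}^z(t)\big)^2 \\
&= -\int_0^t \Big( k_1 + \frac{q}{2}\xi_k^2 \Big)\big(e_k^z\big)^2\,\text{d}\sigma - \frac{1}{2}\big(e_{k-1}^z(t)\big)^2
  \le -k_1 \int_0^t \big(e_k^z(\sigma)\big)^2\,\text{d}\sigma .
\end{align}
```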

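Since $E_{k}^{z}\left( T \right)\ge 0$, summing (10) over the iterations shows that $\sum_{k}\int_{0}^{T}{{\left( e_{k}^{z}\left( \sigma \right) \right)}^{2}}\text{d}\sigma$ is finite, and therefore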

| \begin{align} \underset{k\to \infty }{\mathop{\lim }} \int_{0}^{T}{{{\left( e_{k}^{z}\left( \sigma \right) \right)}^{2}}\text{d}\sigma }=0. \end{align} | (11) |

Thus, the system state ${{z}_{k}}\left( t \right)$ converges to the desired trajectory ${{z}_{r}}\left( t \right)$ on $\left[0,T \right]$ in the sense of (11) as $k\to \infty $.
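To make the iteration-domain behaviour of (6)-(8) concrete, the following Python sketch simulates the example with forward-Euler integration. All specific choices are hypothetical and made only for illustration: $T=1$, $\theta(t)=2+\cos(2\pi t)$, $\xi(z,t)=1+\sin z$, $z_r(t)=\sin(2\pi t)$, $k_1=2$, $q=5$, and the identical initial condition $z_k(0)=z_r(0)$. Note that (8) is applied pointwise in time: $\hat\theta_k(t)$ uses the stored $\hat\theta_{k-1}(t)$ and the current error $e_k^z(t)$, so the update is causal within each iteration.

```python
import numpy as np


def run_motivating_example(iterations=30, T=1.0, dt=1e-3, k1=2.0, q=5.0):
    """Iterate plant (6) under control law (7) and learning law (8).

    Hypothetical choices: theta(t) = 2 + cos(2*pi*t), xi(z, t) = 1 + sin(z),
    z_r(t) = sin(2*pi*t), identical initial condition z_k(0) = z_r(0).
    Returns J_k = int_0^T (e_k^z)^2 dt for each iteration k = 1, 2, ...
    """
    t = np.arange(0.0, T, dt)
    theta = 2.0 + np.cos(2 * np.pi * t)              # unknown to the controller
    z_r = np.sin(2 * np.pi * t)
    z_r_dot = 2 * np.pi * np.cos(2 * np.pi * t)
    theta_hat_prev = np.zeros_like(t)                # theta_hat_0(t) = 0
    costs = []
    for _ in range(iterations):
        z = z_r[0]                                   # identical initial condition
        theta_hat = np.empty_like(t)
        cost = 0.0
        for i in range(t.size):
            e = z - z_r[i]
            xi = 1.0 + np.sin(z)                     # known regressor xi(z, t)
            theta_hat[i] = theta_hat_prev[i] + q * xi * e      # learning law (8)
            u = -k1 * e + z_r_dot[i] - theta_hat[i] * xi       # control law (7)
            cost += e * e * dt
            z += dt * (theta[i] * xi + u)            # forward-Euler step of (6)
        costs.append(cost)
        theta_hat_prev = theta_hat                   # stored for the next iteration
    return costs


if __name__ == "__main__":
    costs = run_motivating_example()
    print("J_1 =", costs[0], " J_last =", costs[-1])
```

The list of $\int_0^T (e_k^z)^2\,\text{d}t$ values is expected to shrink over the iterations, in line with (11); because of the time discretization, this is only an approximation of the continuous-time result.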

Ⅲ. AILC DESIGN

We now explain the design procedure. According to Assumption 5, there exist known constants ${{\varepsilon }_{i}}$ such that $\left| {{e}_{i,k}}(0) \right|\le {{\varepsilon }_{i}}$ $(i=1,2,\cdots,n)$ for any $k\in \bf N$. To overcome the uncertainty arising from the initial state errors, we introduce the boundary layer function [14, 15, 16] and define the error function as follows:

| \begin{align} &{{s}_{k}}(t)={{e}_{sk}}(t)-\eta (t)\text{sat}\left( \frac{{{e}_{sk}}(t)}{\eta (t)} \right), \end{align} | (12) |

| \begin{align} \eta (t)=\varepsilon {{\rm e}^{-Kt}},\quad K>0, \end{align} | (13) |

where $\varepsilon =\left[{{\pmb \Lambda}^{\rm T}}\ \ 1 \right]{{\left[{{\varepsilon }_{1}},{{\varepsilon }_{2}},\cdots ,{{\varepsilon }_{n}} \right]}^{\rm T}}$, $K$ is a design parameter, and the saturation function $\text{sat}\left( \cdot \right)$ is defined as

| \begin{align} \text{sat}\left( \cdot \right)=\text{sgn} \left( \cdot \right)\cdot \min \left\{ \left| \cdot \right|,1 \right\}, \end{align} | (14) |

where $\text{sgn}\left( \cdot \right)$ is the sign function, i.e., $\text{sgn}\left( p \right)=1$ if $p>0$, $\text{sgn}\left( p \right)=0$ if $p=0$, and $\text{sgn}\left( p \right)=-1$ if $p<0$.

Remark 4. $\eta (t)$ is a time-varying boundary layer function. As pointed out in [14], $\eta (t)$ decreases along the time axis from the initial value $\eta (0)=\varepsilon $, with $0<\eta \left( T \right)\le \eta \left( t \right)\le \varepsilon $, $\forall t\in \left[0,T \right]$, and if ${{s}_{k}}(t)$ can be driven to zero $\forall t\in \left[0,T \right]$, then the states will asymptotically converge to the desired trajectories for all $t\in \left[0,T \right]$. It can be easily shown that

| \begin{align} \left| {{e}_{sk}}\left( 0 \right) \right| & =\left| {{\lambda }_{1}}{{e}_{1,k}}\left( 0 \right)+{{\lambda }_{2}}{{e}_{2,k}}\left( 0 \right)+\cdots +{{e}_{n,k}}\left( 0 \right) \right| \le \nonumber\\ & {{\lambda }_{1}}\left| {{e}_{1,k}}\left( 0 \right) \right|+{{\lambda }_{2}}\left| {{e}_{2,k}}\left( 0 \right) \right|+\cdots +\left| {{e}_{n,k}}\left( 0 \right) \right| \le \nonumber\\ & {{\lambda }_{1}}{{\varepsilon }_{1}}+{{\lambda }_{2}}{{\varepsilon }_{2}}+\cdots +{{\varepsilon }_{n}}=\eta \left( 0 \right), \end{align} | (15) |

which implies that ${{s}_{k}}\left( 0 \right)={{e}_{sk}}\left( 0 \right)-\eta \left( 0 \right)\frac{{{e}_{sk}}\left( 0 \right)}{\eta \left( 0 \right)}=0$ holds for all $k\in \bf N$. Moreover, note that

| \begin{align} &{{s}_{k}}\left( t \right)\text{sat}\left( \frac{{{e}_{sk}}(t)}{\eta (t)} \right)= \nonumber\\ &\qquad \begin{cases} 0,\quad {\rm if} \ \left| \frac{{{e}_{sk}}(t)}{\eta (t)} \right|\le 1 \\ {{s}_{k}}\left( t \right)\text{sgn} \left( {{e}_{sk}}\left( t \right) \right), \quad {\rm if} \ \left| \frac{{{e}_{sk}}(t)}{\eta (t)} \right|>1 \end{cases} = \nonumber\\ &\qquad {{s}_{k}}\left( t \right)\text{sgn} \left( {{s}_{k}}\left( t \right) \right) =\left| {{s}_{k}}\left( t \right) \right|. \end{align} | (16) |
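The boundary-layer construction (12)-(14) and the identity (16) can be checked with a small Python sketch. It assumes NumPy; the design values $\varepsilon=0.5$ and $K=2$ in the usage part are hypothetical. NumPy's `np.sign` returns 0 at 0, which matches the sign function used here.

```python
import numpy as np


def sat(x):
    """sat(x) = sgn(x) * min(|x|, 1), as in (14); np.sign(0) = 0."""
    return np.sign(x) * np.minimum(np.abs(x), 1.0)


def eta(t, eps, K):
    """Boundary layer eta(t) = eps * exp(-K t), as in (13)."""
    return eps * np.exp(-K * t)


def s_error(e_s, eta_t):
    """Error function s = e_s - eta * sat(e_s / eta), as in (12)."""
    return e_s - eta_t * sat(e_s / eta_t)


if __name__ == "__main__":
    eps, K = 0.5, 2.0                      # hypothetical design values
    t = np.linspace(0.0, 1.0, 101)
    e_s = np.linspace(-2.0, 2.0, 101)      # arbitrary filtered-error samples
    et = eta(t, eps, K)
    s = s_error(e_s, et)
    # Identity (16): s * sat(e_s / eta) = |s|.
    print(np.allclose(s * sat(e_s / et), np.abs(s)))
    # s vanishes (up to rounding) inside the boundary layer |e_s| <= eta.
    print(np.max(np.abs(s[np.abs(e_s) <= et])))
```

Inside the layer, `s_error` returns zero up to floating-point rounding, which is why the identity is checked with `np.allclose` rather than exact equality.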

To prepare for the controller design, we first substitute the dead-zone representation (3) into (1) and give the time derivative of ${{e}_{n,k}}\left( t \right)$ as follows:

| \begin{align} {{{\dot{e}}}_{n,k}}\left( t \right)=f\left( {{\pmb X}_{k}}\left( t \right),t \right)+h\left( {{\pmb X}_{\tau ,k}}\left( t \right),t \right)+{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right){{v}_{k}}\left( t \right)+{{d}_{2}}\left( {{\pmb X}_{k}}\left( t \right),t \right)-y_{d}^{\left( n \right)}\left( t \right), \end{align} | (17) |

where ${{d}_{2}}\left( {{\pmb X}_{k}}\left( t \right),t \right)=-g\left( {{\pmb X}_{k}}\left( t \right),t \right){{d}_{1}}\left( {{v}_{k}}\left( t \right) \right)+d\left( t \right)$. From Assumptions 1, 4 and 7, ${{d}_{2}}\left( {{\pmb X}_{k}},t \right)$ is bounded, i.e., there exists an unknown smooth positive function $\bar{d}\left( {{\pmb X}_{k}} \right)$ such that $\left| {{d}_{2}}\left( {{\pmb X}_{k}},t \right) \right|\le \bar{d}\left( {{\pmb X}_{k}} \right)$. For brevity, we denote ${{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right):= g\left( {{\pmb X}_{k}}\left( t \right),t \right)m\left( t \right)$. Clearly, $\underline{g}_{m}={{m}_{\min}}{{g}_{\min}}\le{{g}_{m}} \left({{\pmb X}_{k}},t\right)\le{{m}_{\max}}{{g}_{\max}}={{\bar{g}}_{m}}$.

Define a smooth scalar function as

| \begin{align} {{V}_{{{s}_{k}}}}\left( t \right)=\frac{1}{2}s_{k}^{2}\left( t \right). \end{align} | (18) |

The time derivative of ${{V}_{{{s}_{k}}}}\left( t \right)$ along (17) can be expressed as

| \begin{align} &{{\dot V}_{{s_k}}}\left( t \right) = {s_k}\left( t \right){{\dot s}_k}\left( t \right) = \nonumber\\ &\qquad \begin{cases} {s_k}\left( t \right)\left( {{\dot e}_{sk}}\left( t \right) - \dot \eta \left( t \right) \right),\quad {\rm if}\ {e_{sk}}\left( t \right) > \eta \left( t \right) \\ 0,\quad {\rm if}\ \left| {e_{sk}}\left( t \right) \right| \le \eta \left( t \right) \\ {s_k}\left( t \right)\left( {{\dot e}_{sk}}\left( t \right) + \dot \eta \left( t \right) \right),\quad {\rm if}\ {e_{sk}}\left( t \right) < - \eta \left( t \right) \end{cases} = \nonumber\\ &\qquad {s_k}\left( t \right)\left( {{\dot e}_{sk}}\left( t \right) - \dot \eta \left( t \right){\rm sgn}\left( {s_k}\left( t \right) \right) \right) = \nonumber\\ &\qquad {s_k}\left( t \right)\Big( \sum\limits_{j = 1}^{n - 1} {\lambda _j}{e_{j + 1,k}}\left( t \right) - \dot \eta \left( t \right){\rm sgn}\left( {s_k}\left( t \right) \right) + f\left( {{\pmb X}_k}\left( t \right),t \right) + h\left( {{\pmb X}_{\tau ,k}}\left( t \right),t \right) + \nonumber\\ &\qquad {g_m}\left( {{\pmb X}_k}\left( t \right),t \right){v_k}\left( t \right) + {d_2}\left( {{\pmb X}_k}\left( t \right),t \right) - y_d^{\left( n \right)}\left( t \right) \Big) = \nonumber\\ &\qquad {s_k}\left( t \right)\Big( \sum\limits_{j = 1}^{n - 1} {\lambda _j}{e_{j + 1,k}}\left( t \right) + K{e_{sk}}\left( t \right) - K{e_{sk}}\left( t \right) + K\eta \left( t \right){\rm sgn}\left( {s_k}\left( t \right) \right) + \nonumber\\ &\qquad f\left( {{\pmb X}_k}\left( t \right),t \right) + h\left( {{\pmb X}_{\tau ,k}}\left( t \right),t \right) + {g_m}\left( {{\pmb X}_k}\left( t \right),t \right){v_k}\left( t \right) + {d_2}\left( {{\pmb X}_k}\left( t \right),t \right) - y_d^{\left( n \right)}\left( t \right) \Big) = \nonumber\\ &\qquad {s_k}\left( t \right)\Big( f\left( {{\pmb X}_k}\left( t \right),t \right) + h\left( {{\pmb X}_{\tau ,k}}\left( t \right),t \right) + {g_m}\left( {{\pmb X}_k}\left( t \right),t \right){v_k}\left( t \right) + {\mu _k}\left( t \right) + {d_2}\left( {{\pmb X}_k}\left( t \right),t \right) \Big) - Ks_k^2\left( t \right), \end{align} | (19) |

where ${{\mu }_{k}}\left( t \right)=\sum\limits_{j=1}^{n-1}{{{\lambda }_{j}}{{e}_{j+1,k}}\left( t \right)}+K{{e}_{sk}}\left( t \right)-y_{d}^{\left( n \right)}\left( t \right)$, and where $\dot{\eta }\left( t \right)=-K\eta \left( t \right)$ and the following equality have been used:

| \begin{align} & {{s}_{k}}\left( t \right)\left(-K{{e}_{sk}}\left( t \right)+K\eta \left( t \right)\text{sgn} \left( {{s}_{k}}\left( t \right) \right) \right)= \nonumber\\ &\qquad {{s}_{k}}\left( t \right)\left(-K{{s}_{k}}\left( t \right)-K\eta \left( t \right)\text{sat}\left( \frac{{{e}_{sk}}\left( t \right)}{\eta \left( t \right)} \right)+\right. \nonumber\\ & \qquad \left. K\eta \left( t \right)\text{sgn} \left( {{s}_{k}}\left( t \right) \right) \right)= \nonumber\\ &\qquad-Ks_{k}^{2}\left( t \right)-K\eta \left( t \right)\left| {{s}_{k}}\left( t \right) \right|+K\eta \left( t \right)\left| {{s}_{k}}\left( t \right) \right|= \nonumber\\ &\qquad-Ks_{k}^{2}\left( t \right). \end{align} | (20) |

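Using Assumption 3 and Young's inequality (in (21), $\left| {{s}_{k}} \right|\theta {{\rho }_{j}}\le \frac{1}{2}s_{k}^{2}{{\theta }^{2}}+\frac{1}{2}\rho _{j}^{2}$ is applied to each of the $n$ terms), we obtain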

| \begin{align} {{s}_{k}}\left( t \right)h\left( {{\pmb X}_{\tau ,k}}\left( t \right),t \right) \le \left| {{s}_{k}}\left( t \right) \right|\theta \left( t \right)\sum\limits_{j=1}^{n}{{{\rho }_{j}}\left( {{x}_{{{\tau }_{j}},k}}\left( t \right) \right)}\le \nonumber\\ \frac{n}{2}s_{k}^{2}\left( t \right){{\theta }^{2}}\left( t \right)+ \frac{1}{2}\sum\limits_{j=1}^{n}{\rho _{j}^{2}\left( {{x}_{{{\tau }_{j}},k}}\left( t \right) \right)}, \end{align} | (21) |

| \begin{align} {{s}_{k}}\left( t \right){{d}_{2}}\left( {{\pmb X}_{k}}\left( t \right),t \right)\le \frac{s_{k}^{2}\left( t \right){{{\bar{d}}}{ }^{^{2}}}\left( {{\pmb X}_{k}}\left( t \right) \right)}{2a_{1}^{2}}+\frac{a_{1}^{2}}{2}, \end{align} | (22) |

where ${{a}_{1}}$ is an arbitrary positive constant.

Substituting (21) and (22) into (19) leads to

| \begin{align} & {{{\dot{V}}}_{{{s}_{k}}}}\left( t \right) \le {{s}_{k}}\left( t \right)\left( f\left( {{\pmb X}_{k}}\left( t \right),t \right)+{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right){{v}_{k}}\left( t \right)+\right. \nonumber\\ &\ \ \ \ \ \ \ \ \ \ \left.{{\mu }_{k}}\left( t \right)+\frac{n}{2}{{s}_{k}}\left( t \right){{\theta }^{2}}\left( t \right)+\frac{{{s}_{k}}\left( t \right){{{\bar{d}}}{ }^{^{2}}}\left( {{\pmb X}_{k}}\left( t \right) \right)}{2a_{1}^{2}} \right)- \nonumber\\ &\ \ \ \ \ \ \ \ \ \ Ks_{k}^{2}\left( t \right)+\frac{1}{2}\sum\limits_{j=1}^{n}{\rho _{j}^{2}\left( {{x}_{{{\tau }_{j}},k}}\left( t \right) \right)}+\frac{a_{1}^{2}}{2}. \end{align} | (23) |

To overcome the design difficulty arising from the unknown time-varying delay terms $\rho _{j}^{2}\left( {{x}_{{{\tau }_{j}},k}}\left( t \right) \right)$, consider the following Lyapunov-Krasovskii functional:

| \begin{align} {{V}_{{{U}_{k}}}}\left( t \right)=\frac{1}{2\left( 1-\kappa \right)}\sum\limits_{j=1}^{n}{\int_{t-{{\tau }_{j}}\left( t \right)}^{t}{\rho _{j}^{2}\left( {{x}_{j,k}}\left( \sigma \right) \right)}}\text{d}\sigma. \end{align} | (24) |

Taking the time derivative of ${{V}_{{{U}_{k}}}}\left( t \right)$ and using Assumption 2 leads to

| \begin{align} &{{{\dot{V}}}_{{{U}_{k}}}}\left( t \right)= \nonumber\\ &\qquad \frac{1}{2\left( 1-\kappa \right)}\sum\limits_{j=1}^{n}{\rho _{j}^{2}\left( {{x}_{j,k}} \right)}-\frac{1}{2}\sum\limits_{j=1}^{n}{\frac{1-{{{\dot{\tau }}}_{j}}\left( t \right)}{\left( 1-\kappa \right)}\rho _{j}^{2}\left( {{x}_{{{\tau }_{j}},k}} \right)} \le \nonumber\\ &\qquad \frac{1}{2\left( 1-\kappa \right)}\sum\limits_{j=1}^{n}{\rho _{j}^{2}\left( {{x}_{j,k}} \right)}- \frac{1}{2}\sum\limits_{j=1}^{n}{\rho _{j}^{2}\left( {{x}_{{{\tau }_{j}},k}} \right)}. \end{align} | (25) |
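The bound (25) can be checked numerically in a scalar case ($n=1$). The sketch below assumes NumPy and uses hypothetical choices that are not taken from this paper: $x(t)=\sin t$, $\rho(x)=1+x^2$ and $\tau(t)=0.5+0.3\sin t$, so that $\dot\tau\le\kappa=0.3$. It evaluates (24) with the trapezoidal rule, differentiates it by central differences, and returns the gap between $\dot V_{U_k}$ and the right-hand side of (25), which should be non-positive up to discretization error.

```python
import numpy as np

KAPPA = 0.3                                  # hypothetical kappa in Assumption 2


def x(t):                                    # hypothetical state trajectory
    return np.sin(t)


def rho(v):                                  # hypothetical positive smooth rho
    return 1.0 + v ** 2


def tau(t):                                  # 0.2 <= tau <= 0.8, tau_dot <= 0.3
    return 0.5 + 0.3 * np.sin(t)


def trapezoid(fun, a, b, m=4001):
    """Composite trapezoidal rule for int_a^b fun(s) ds."""
    s = np.linspace(a, b, m)
    y = fun(s)
    return float(np.sum((y[1:] + y[:-1]) * np.diff(s)) / 2.0)


def V_U(t):
    """Scalar (n = 1) version of the Lyapunov-Krasovskii functional (24)."""
    return trapezoid(lambda s: rho(x(s)) ** 2, t - tau(t), t) / (2.0 * (1.0 - KAPPA))


def bound_gap(t, h=1e-4):
    """Central-difference dV_U/dt minus the right-hand side of (25); expected <= 0."""
    dV = (V_U(t + h) - V_U(t - h)) / (2.0 * h)
    rhs = rho(x(t)) ** 2 / (2.0 * (1.0 - KAPPA)) - 0.5 * rho(x(t - tau(t))) ** 2
    return dV - rhs


if __name__ == "__main__":
    gaps = [bound_gap(t) for t in np.linspace(0.5, 10.0, 40)]
    print("largest gap:", max(gaps), " smallest gap:", min(gaps))
```

Analytically, the gap equals $\frac{\dot\tau(t)-\kappa}{2(1-\kappa)}\rho^2(x(t-\tau(t)))\le 0$, so it vanishes only when $\dot\tau(t)=\kappa$.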

Define the Lyapunov functional ${{V}_{k}}\left( t \right)={{V}_{{{s}_{k}}}}\left( t \right)+{{V}_{{{U}_{k}}}}\left( t \right)$. Taking its time derivative and recalling (23) and (25), in which the delayed terms $\frac{1}{2}\sum\limits_{j=1}^{n}{\rho _{j}^{2}\left( {{x}_{{{\tau }_{j}},k}}\left( t \right) \right)}$ cancel, we obtain

| \begin{align} {{{\dot{V}}}_{k}}\left( t \right)& \le {{s}_{k}}\left( t \right)\left( f\left( {{\pmb X}_{k}}\left( t \right),t \right) +{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right){{v}_{k}}\left( t \right)+{{\mu }_{k}}\left( t \right)+\right. \nonumber\\ &\ \ \ \ \left.\frac{n}{2}{{s}_{k}}\left( t \right){{\theta }^{2}}\left( t \right)+\frac{{{s}_{k}}\left( t \right){{{\bar{d}}}{ }^{^{2}}}\left( {{\pmb X}_{k}}\left( t \right) \right)}{2a_{1}^{2}} \right)-Ks_{k}^{2}\left( t \right)+ \nonumber\\ &\ \ \ \ \frac{a_{1}^{2}}{2}+\frac{1}{2\left( 1-\kappa \right)}\sum\limits_{j=1}^{n}{\rho _{j}^{2}\left( {{x}_{j,k}}\left( t \right) \right)}. \end{align} | (26) |

For brevity, denote $\xi \left( {{\pmb X}_{k}}\left( t \right) \right):= \frac{a_{1}^{2}}{2}+\frac{1}{2\left( 1-\kappa \right)}\sum\limits_{j=1}^{n}{\rho _{j}^{2}\left( {{x}_{j,k}}\left( t \right) \right)}$, so that, for ${{s}_{k}}\left( t \right)\ne 0$, (26) can be written as

| \begin{align} & {{{\dot{V}}}_{k}}\left( t \right)\le {{s}_{k}}\left( t \right)\Big( f\left( {{\pmb X}_{k}}\left( t \right),t \right)+ {{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right){{v}_{k}}\left( t \right)+{{\mu }_{k}}\left( t \right)+ \nonumber\\ &\quad\frac{n}{2}{{s}_{k}}\left( t \right){{\theta }^{2}}\left( t \right) +\frac{{{s}_{k}}\left( t \right){{{\bar{d}}}{ }^{^{2}}}\left( {{\pmb X}_{k}}\left( t \right) \right)}{2a_{1}^{2}}+ \frac{\xi \left( {{\pmb X}_{k}}\left( t \right) \right)}{{{s}_{k}}\left( t \right)} \Big)-Ks_{k}^{2} \left( t \right). \end{align} | (27) |

The controller is next designed based on (27). However, (27) contains a possible singularity: the term $\frac{\xi \left( {{\pmb X}_{k}}\left( t \right) \right)}{{{s}_{k}}\left( t \right)}$ approaches $\infty $ as ${{s}_{k}}\left( t \right)$ approaches zero. To deal with this problem, we exploit the following property of the hyperbolic tangent function.

Lemma 1[29]. For any constant $\eta >0$ and any variable $p\in {\bf R}$,

| \begin{align} \underset{p\to 0}{\mathop{\lim }} \frac{{{\tanh }^{2}}\left( p/\eta \right)}{p}=0 . \end{align} | (28) |
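Lemma 1 can be illustrated numerically. Since $|\tanh(x)|\le|x|$, the term $\frac{b}{s}\tanh^2(s/\eta)\xi$ is bounded in magnitude by $b\xi|s|/\eta^2$ and therefore tends to zero with $s$, whereas $\xi/s$ blows up. The Python sketch below assumes NumPy; the values of $b$, $\eta$ and $\xi$ are hypothetical placeholders (the constant $b$ is the one appearing in (29), taken positive here), and `tanh_term` is an illustrative helper name.

```python
import numpy as np


def tanh_term(s, eta_t, xi_val, b=1.0):
    """(b / s) * tanh^2(s / eta) * xi, extended by its limit 0 at s = 0 (Lemma 1)."""
    s = np.asarray(s, dtype=float)
    safe = np.where(s == 0.0, 1.0, s)              # avoid dividing by zero
    val = b * np.tanh(s / eta_t) ** 2 / safe * xi_val
    return np.where(s == 0.0, 0.0, val)


if __name__ == "__main__":
    eta_t, xi_val = 0.2, 3.0                       # hypothetical values
    for s in [1e-1, 1e-3, 1e-5]:
        print(f"s={s:.0e}  xi/s={xi_val / s:.3e}  tanh term={float(tanh_term(s, eta_t, xi_val)):.3e}")
```

The explicit branch at $s=0$ implements the continuous extension used in the text; away from zero the formula is evaluated directly.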

Employing the hyperbolic tangent function, (27) can be rewritten as

| \begin{align} & {{{\dot{V}}}_{k}}\left( t \right) \le \nonumber\\ &\quad {{s}_{k}}\left( t \right)\left( f\left( {{\pmb X}_{k}}\left( t \right),t \right)+{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right){{v}_{k}}\left( t \right)+{{\mu }_{k}}\left( t \right)+\right. \nonumber\\ &\quad \left. \frac{n}{2}{{s}_{k}}\left( t \right){{\theta }^{2}}\left( t \right)+ \frac{{{s}_{k}} \left( t \right){{{\bar{d}}}{ }^{^{2}}}\left( {{\pmb X}_{k}}\left( t \right) \right)}{2a_{1}^{2}} \right) -Ks_{k}^{2}\left( t \right)+ \nonumber\\ &\quad \xi \left( {{\pmb X}_{k}}\left( t \right) \right)-b{{\tanh }^{2}}\left( \frac{{{s}_{k}} \left( t \right)}{\eta \left( t \right)} \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right)+ \nonumber\\ &\quad b{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right)= \nonumber\\ &\quad {{s}_{k}}\left( t \right)\left( f\left( {{\pmb X}_{k}}\left( t \right),t \right)+ {{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right){{v}_{k}}\left( t \right)+{{\mu }_{k}}\left( t \right)+\right. \nonumber\\ &\quad \frac{n}{2}{{s}_{k}}\left( t \right){{\theta }^{2}}\left( t \right)+\frac{{{s}_{k}} \left( t \right){{{\bar{d}}}{ }^{^{2}}}\left( {{\pmb X}_{k}}\left( t \right) \right)}{2a_{1}^{2}}+ \nonumber\\ &\quad \left. \frac{b}{{{s}_{k}}\left( t \right)}{{\tanh }^{2}}\left( \frac{{{s}_{k}} \left( t \right)}{\eta \left( t \right)} \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right) \right)- \nonumber\\ &\quad Ks_{k}^{2}\left( t \right)+\left( 1-b{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)} {\eta \left( t \right)} \right) \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right). \end{align} | (29) |

From Lemma 1, we know that $\lim_{{{s}_{k}}\left( t \right)\to 0} \frac{b}{{{s}_{k}}\left( t \right)}{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right)=0$. Hence, by assigning it this limit value at ${{s}_{k}}\left( t \right)=0$, the term $\frac{b}{{{s}_{k}}\left( t \right)}{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right)$ is well defined at ${{s}_{k}}\left( t \right)=0$. The possible singularity is therefore avoided, which allows us to use NNs to approximate the uncertainties containing this term. Multiplying (29) by $\frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}$ gives
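The short numerical check below compares the singular term $\xi/s_k$ with the tanh-compensated term $\frac{b}{s_k}\tanh^2(s_k/\eta)\xi$ as $s_k$ shrinks. The compensated term is extended by its Lemma 1 limit $0$ at $s_k=0$. The values of `b`, `eta` and `xi` are illustrative assumptions, and `xi` stands for $\xi(\pmb X_k(t))$ frozen at a single state.

```python
import math


def tanh_compensation(s: float, b: float, eta: float, xi: float) -> float:
    """Return (b / s) * tanh(s / eta)**2 * xi, extended by its limit 0 at s = 0."""
    if s == 0.0:
        # Removable singularity: by Lemma 1, tanh(s/eta)**2 / s -> 0 as s -> 0.
        return 0.0
    return b / s * math.tanh(s / eta) ** 2 * xi


# Hypothetical values; xi plays the role of xi(X_k(t)) at one fixed state.
b, eta, xi = 2.0, 0.1, 5.0
for s in (1e-1, 1e-3, 1e-6, 0.0):
    naive = xi / s if s != 0.0 else math.inf  # the singular term xi / s_k
    print(f"s = {s:.0e}: xi/s = {naive:.3e}, compensated = {tanh_compensation(s, b, eta, xi):.3e}")
```

Near zero, $\tanh(s/\eta)\approx s/\eta$, so the compensated term behaves like $b\,\xi\,s/\eta^2$. It vanishes linearly as $s_k\to 0$, while $\xi/s_k$ blows up.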

| \begin{align} &\frac{{{{\dot{V}}}_{k}}\left( t \right)}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}\le \nonumber \\ &\quad {{s}_{k}}\left( t \right)\left( \frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}\left( f\left( {{\pmb X}_{k}}\left( t \right),t \right)+\frac{n}{2}{{s}_{k}}\left( t \right){{\theta }^{2}}\left( t \right)+\right.\right. \nonumber\\ &\quad \left. \frac{{{s}_{k}}\left( t \right){{{\bar{d}}}{ }^{^{2}}}\left( {{\pmb X}_{k}}\left( t \right) \right)}{2a_{1}^{2}} +\frac{b}{{{s}_{k}}\left( t \right)}{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right) \right)+ \nonumber\\ &\quad \left. {{v}_{k}}\left( t \right)+\frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}{{\mu }_{k}}\left( t \right) \right)-\frac{K}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}s_{k}^{2}\left( t \right)+ \nonumber\\ &\quad \frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}\left( 1-b{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right) \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right)= \nonumber\\ &\quad {{s}_{k}}\left( t \right)\left( \Xi \left( {{\pmb X}_{k}},t \right)+\Psi \left( {{\pmb X}_{k}},t \right){{\mu }_{k}}\left( t \right)+{{v}_{k}}\left( t \right) \right)- \nonumber\\ &\quad \frac{K}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}s_{k}^{2}\left( t \right)+ \nonumber\\ &\quad \frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}\left( 1-b{{\tanh }^{2}} \left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right) \right)\xi \left( {{\pmb X}_{k}} \left( t \right) \right), \end{align} | (30) |

where $\Xi \left( {{\pmb X}_{k}},t \right)=\frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}\left( f\left( {{\pmb X}_{k}}\left( t \right),t \right)+\frac{n}{2}{{s}_{k}}\left( t \right){{\theta }^{2}}\left( t \right)+\frac{{{s}_{k}}\left( t \right){{{\bar{d}}}^{2}}\left( {{\pmb X}_{k}}\left( t \right) \right)}{2a_{1}^{2}}+\frac{b}{{{s}_{k}}\left( t \right)}{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right) \right)$ and $\Psi \left( {{\pmb X}_{k}},t \right)=\frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}$. To handle these uncertainties in the controller design, we use RBF NNs to approximate the unknown nonlinear functions $\Xi \left( {{\pmb X}_{k}},t \right)$ and $\Psi \left( {{\pmb X}_{k}},t \right)$,

| \begin{align} \Xi \left( {{\pmb X}_{k}},t \right)={\pmb W}_{\Xi }^{* \rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right)+{{\delta }_{\Xi }}\left( {\pmb X}_{k}^{\Xi },t \right), \end{align} | (31) |

| \begin{align} \Psi \left( {{\pmb X}_{k}},t \right)={\pmb W}_{\Psi }^{* \rm T}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right)+{{\delta }_{\Psi }}\left( {\pmb X}_{k}^{\Psi },t \right), \end{align} | (32) |

where ${\pmb X}_{k}^{\Xi }={{\left[{\pmb X}_{k}^{\rm T},{\pmb X}_{d}^{\rm T} \right]}^{\rm T}}\in {{\Omega }_{\Xi }}\subset {{\bf R}^{2n}}$ and ${\pmb X}_{k}^{\Psi }={{\pmb X}_{k}}\in {{\Omega }_{\Psi }}\subset {{\bf R}^{n}}$ are the NN input vectors, and ${{\Omega }_{\Xi }}$ and ${{\Omega }_{\Psi }}$ are compact sets. ${{\delta }_{\Xi }}\left( {\pmb X}_{k}^{\Xi },t \right)$ and ${{\delta }_{\Psi }}\left( {\pmb X}_{k}^{\Psi },t \right)$ are the inherent NN approximation errors. They can be made arbitrarily small by increasing the number of NN nodes, and they satisfy $\left| {{\delta }_{\Xi }}\left( {\pmb X}_{k}^{\Xi },t \right) \right|\le {{\varepsilon }_{\Xi }}$ and $\left| {{\delta }_{\Psi }}\left( {\pmb X}_{k}^{\Psi },t \right) \right|\le {{\varepsilon }_{\Psi }}$, $\forall t\in \left[0,T \right]$, with unknown constants ${{\varepsilon }_{\Xi }},{{\varepsilon }_{\Psi }}>0$. Define $\beta $ as the larger of the two NN approximation error bounds, i.e., $\beta =\max \left\{ {{\varepsilon }_{\Xi }},{{\varepsilon }_{\Psi }} \right\}$. ${{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right)$ and ${{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right)$ are Gaussian basis function vectors defined as

| \begin{align} {{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right)={{\left[ {{\varphi }_{1}}\left( {\pmb X}_{k}^{\Xi } \right),{{\varphi }_{2}}\left( {\pmb X}_{k}^{\Xi } \right),\cdots ,{{\varphi }_{{{l}_{\Xi }}}}\left( {\pmb X}_{k}^{\Xi } \right) \right]}^{\rm T}}:{{\bf R}^{2n}}\mapsto {{\bf R}^{{{l}_{\Xi }}}}, \end{align} | (33) |

| \begin{align} {{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right)={{\left[ {{\varphi }_{1}}\left( {\pmb X}_{k}^{\Psi } \right),{{\varphi }_{2}}\left( {\pmb X}_{k}^{\Psi } \right),\cdots ,{{\varphi }_{{{l}_{\Psi }}}}\left( {\pmb X}_{k}^{\Psi } \right) \right]}^{\rm T}}:{{\bf R}^{n}}\mapsto {{\bf R}^{{{l}_{\Psi }}}}, \end{align} | (34) |

with ${{\varphi }_{i}}\left( {\pmb Z} \right)=\exp \left( -\frac{{{\left\| {\pmb Z}-{{\pmb c}_{i}} \right\|}^{2}}}{\sigma _{i}^{2}} \right)$, where ${\pmb Z}$ stands for the NN input (${\pmb X}_{k}^{\Xi }$ or ${\pmb X}_{k}^{\Psi }$), ${{\Omega }_{Z}}$ is the corresponding compact set, and ${{\pmb c}_{i}}\in {{\Omega }_{Z}}$ and ${{\sigma }_{i}}>0$ are the center and width of the $i$-th NN node, respectively. ${\pmb W}_{\Xi }^{*}\left( t \right)\in {{\bf R}^{{{l}_{\Xi }}}}$ and ${\pmb W}_{\Psi }^{*}\left( t \right)\in {{\bf R}^{{{l}_{\Psi }}}}$ are the ideal time-varying NN weights, defined as follows:
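The Gaussian basis vectors (33) and (34), together with the linear-in-weights output ${\pmb W}^{\rm T}{\pmb \Phi}$, can be sketched as follows. The paper does not specify how the centers ${\pmb c}_i$ and widths $\sigma_i$ are chosen. The evenly spaced centers, the common width, and the helper names here are illustrative assumptions.

```python
import math
from typing import List, Sequence


def gaussian_rbf_vector(z: Sequence[float],
                        centers: Sequence[Sequence[float]],
                        widths: Sequence[float]) -> List[float]:
    """[phi_1(z), ..., phi_l(z)] with phi_i(z) = exp(-||z - c_i||^2 / sigma_i^2)."""
    if len(centers) != len(widths):
        raise ValueError("need one width per center")
    phi = []
    for c, sigma in zip(centers, widths):
        if len(c) != len(z):
            raise ValueError("center dimension must match input dimension")
        sq_dist = sum((zj - cj) ** 2 for zj, cj in zip(z, c))
        phi.append(math.exp(-sq_dist / sigma ** 2))
    return phi


def nn_output(weights: Sequence[float], phi: Sequence[float]) -> float:
    """Linear-in-weights NN output W^T Phi(z)."""
    return sum(w * p for w, p in zip(weights, phi))


# Hypothetical example: n = 1, so X_k^Xi = [x_k, x_d] lies in R^2; 5 nodes on the diagonal.
centers = [[-1.0 + 0.5 * i, -1.0 + 0.5 * i] for i in range(5)]
widths = [0.8] * 5
phi = gaussian_rbf_vector([0.0, 0.0], centers, widths)
print(phi)
```

Each basis value lies in $(0,1]$ and equals $1$ exactly when the input sits on that node's center.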

| \begin{align} {\pmb W}_{\Xi }^{*}\left( t \right)=\arg \underset{{{\pmb W}_{\Xi }}\left( t \right)\in {{\bf R}^{{{l}_{\Xi }}}}}{\mathop{\min }}\left\{ \underset{{\pmb X}_{k}^{\Xi }\in {{\Omega }_{\Xi }}}{\mathop{\sup }}\left| \Xi \left( {{\pmb X}_{k}},t \right)-{\pmb W}_{\Xi }^{\rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right) \right| \right\}, \end{align} | (35) |

| \begin{align} {\pmb W}_{\Psi }^{*}\left( t \right)=\arg \underset{{{\pmb W}_{\Psi }}\left( t \right)\in {{\bf R}^{{{l}_{\Psi }}}}}{\mathop{\min }}\left\{ \underset{{\pmb X}_{k}^{\Psi }\in {{\Omega }_{\Psi }}}{\mathop{\sup }}\left| \Psi \left( {{\pmb X}_{k}},t \right)-{\pmb W}_{\Psi }^{\rm T}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right) \right| \right\}. \end{align} | (36) |

Accordingly, we make the following assumption.

Assumption 8. The optimal weight vectors ${\pmb W}_{\Xi }^{*}\left( t \right) $ and ${\pmb W}_{\Psi }^{*}\left( t \right) $ are bounded, i.e.,

| \begin{align} \left\| {\pmb W}_{\Xi }^{*}\left( t \right) \right\|\le {{\varepsilon }_{{\pmb W}_{\Xi }^{*}}},\quad \left\| {\pmb W}_{\Psi }^{*}\left( t \right) \right\|\le {{\varepsilon }_{{\pmb W}_{\Psi }^{*}}}, \end{align} | (37) |

where ${{\varepsilon }_{{\pmb W}_{\Xi }^{*}}}$ and ${{\varepsilon }_{{\pmb W}_{\Psi }^{*}}}$ are unknown positive constants.

Based on the NN approximations (31) and (32), we design the adaptive iterative learning controller for the class of repeatable nonlinear systems (1) as follows:

| \begin{align} {{v}_{k}}\left( t \right)& =-\hat{\pmb W}_{\Xi ,k}^{\rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right)-\hat{\pmb W}_{\Psi ,k}^{\rm T}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right){{\mu }_{k}}\left( t \right)- \nonumber\\ &\ \ \ \text{sat}\left( \frac{{{e}_{sk}}}{\eta \left( t \right)} \right){{\hat{\beta }}_{k}}\left( t \right)\left( 1+\left| {{\mu }_{k}}\left( t \right) \right| \right), \end{align} | (38) |

where $\hat{\pmb W}_{\Xi ,k}\left( t \right)$, $\hat{\pmb W}_{\Psi ,k}\left( t \right)$ and ${{\hat{\beta }}_{k}}\left( t \right)$ are the estimates of ${\pmb W}_{\Xi }^{*}\left( t \right)$, ${\pmb W}_{\Psi }^{*}\left( t \right)$ and $\beta $, respectively. The adaptive learning laws for the unknown parameters are given as follows:

| \begin{align} &\left\{\begin{array}{*{20}l} \hat{\pmb W}_{\Xi ,k}\left( t \right)=\hat{\pmb W}_{\Xi ,k-1}\left( t \right)+{{q}_{1}}{{s}_{k}}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right),\\ \hat{\pmb W}_{\Xi ,0}\left( t \right)=0,\quad t\in [0,T], \end{array}\right. \end{align} | (39a) |

| \begin{align} \left\{\begin{array}{*{20}l} \hat{\pmb W}_{\Psi ,k}\left( t \right)=\hat{\pmb W}_{\Psi ,k-1}\left( t \right)+{{q}_{2}}{{s}_{k}}\left( t \right){{\mu }_{k}}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right), \\ \hat{\pmb W}_{\Psi ,0}\left( t \right)=0,\quad t\in [0,T], \end{array}\right. \end{align} | (39b) |

| \begin{align} \left\{\begin{array}{*{20}l} \left( 1-\gamma \right){{{\dot{\hat{\beta }}}}_{k}}\left( t \right)=-\gamma {{{\hat{\beta }}}_{k}}\left( t \right)+\gamma {{{\hat{\beta }}}_{k-1}}\left( t \right)+\\ \quad \quad \quad \quad \quad \quad \quad {{q}_{3}}\left| {{s}_{k}}\left( t \right) \right|\left( 1+\left| {{\mu }_{k}}\left( t \right) \right| \right), \\ {{{\hat{\beta }}}_{k}}\left( 0 \right)={{{\hat{\beta }}}_{k-1}}\left( T \right),\quad {{{\hat{\beta }}}_{0}}\left( t \right)=0,\quad t\in \left[ 0,T \right], \\ \end{array}\right. \end{align} | (39c) |

where ${{q}_{1}},{{q}_{2}},{{q}_{3}}>0$ and $0<\gamma <1$ are design parameters. To show later how controller (38) guarantees stability and the convergence of the tracking errors, we define the estimation errors $\tilde{\pmb W}_{\Xi ,k}\left( t \right)=\hat{\pmb W}_{\Xi ,k}\left( t \right)-{\pmb W}_{\Xi }^{*}\left( t \right)$, $\tilde{\pmb W}_{\Psi ,k}\left( t \right)=\hat{\pmb W}_{\Psi ,k}\left( t \right)-{\pmb W}_{\Psi }^{*}\left( t \right)$ and ${{\tilde{\beta }}_{k}}\left( t \right)={{\hat{\beta }}_{k}}\left( t \right)-\beta $.
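Below is a minimal sampled-time sketch of how control law (38) and learning laws (39a)~(39c) might be evaluated at one time sample of iteration $k$. It rests on several assumptions that are not from the paper:

- the time axis is sampled uniformly with step `dt`;
- the $\hat\beta_k$ equation (39c) is integrated by forward Euler;
- `sat` is taken to be the standard unit saturation (its definition precedes this section);
- $s_k$, $\mu_k$, $e_{sk}$ and the basis vectors are supplied by the closed loop at each sample.

The helper name `ailc_sample` is hypothetical.

```python
from typing import List, Sequence, Tuple


def sat(x: float) -> float:
    """Unit saturation, ASSUMED: x if |x| <= 1, sign(x) otherwise."""
    return max(-1.0, min(1.0, x))


def dot(x: Sequence[float], y: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(x, y))


def ailc_sample(W_xi_prev: Sequence[float], W_psi_prev: Sequence[float],
                beta_prev_iter: float, beta_now: float,
                s: float, mu: float, e_s: float, eta: float,
                phi_xi: Sequence[float], phi_psi: Sequence[float],
                q1: float, q2: float, q3: float, gamma: float, dt: float
                ) -> Tuple[List[float], List[float], float, float]:
    """Hypothetical helper: one time sample t_j of iteration k.

    W_xi_prev, W_psi_prev: estimates stored from iteration k-1 at the same t_j.
    beta_prev_iter:        beta_hat_{k-1}(t_j).
    beta_now:              beta_hat_k(t_j), the current state of (39c).
    Returns W_xi_k(t_j), W_psi_k(t_j), v_k(t_j) and beta_hat_k(t_{j+1}).
    """
    # (39a), (39b): pointwise-in-time update along the iteration axis.
    W_xi = [w + q1 * s * p for w, p in zip(W_xi_prev, phi_xi)]
    W_psi = [w + q2 * s * mu * p for w, p in zip(W_psi_prev, phi_psi)]
    # (38): control law built from the current estimates.
    v = (-dot(W_xi, phi_xi)
         - dot(W_psi, phi_psi) * mu
         - sat(e_s / eta) * beta_now * (1.0 + abs(mu)))
    # (39c): (1 - gamma) d(beta_hat_k)/dt = -gamma*beta_hat_k + gamma*beta_hat_{k-1}
    #        + q3*|s|*(1 + |mu|), advanced by one forward-Euler step.
    dbeta = (-gamma * beta_now + gamma * beta_prev_iter
             + q3 * abs(s) * (1.0 + abs(mu))) / (1.0 - gamma)
    return W_xi, W_psi, v, beta_now + dt * dbeta
```

In use, $\hat{\pmb W}_{\Xi,k}$ and $\hat{\pmb W}_{\Psi,k}$ would be stored for every sample so that iteration $k+1$ can read them back. To respect $\hat\beta_k(0)=\hat\beta_{k-1}(T)$, `beta_now` at the first sample of iteration $k$ is initialized with the last value from iteration $k-1$.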

Then, substituting (31), (32) and controller (38) into (30) yields

| \begin{align} & \frac{{{{\dot{V}}}_{k}}\left( t \right)}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}\le \nonumber\\[1.5mm] &\quad {{s}_{k}}\left( t \right)\left( {\pmb W}_{\Xi }^{* \rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right)+{{\delta }_{\Xi }}\left( {\pmb X}_{k}^{\Xi },t \right)+\right. \nonumber\\[1.5mm] &\quad \left( {\pmb W}_{\Psi }^{* \rm T}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right)+{{\delta }_{\Psi }}\left( {\pmb X}_{k}^{\Psi },t \right) \right){{\mu }_{k}}\left( t \right)- \nonumber\\[1.5mm] &\quad \hat{\pmb W}_{\Xi ,k}^{\rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right)-\hat{\pmb W}_{\Psi ,k}^{\rm T}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right){{\mu }_{k}}\left( t \right)- \nonumber\\[1.5mm] &\quad \left. \text{sat}\left( \frac{{{e}_{sk}}\left( t \right)}{\eta \left( t \right)} \right){{{\hat{\beta }}}_{k}}\left( t \right)\left( 1+\left| {{\mu }_{k}}\left( t \right) \right| \right) \right)-\frac{K}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}s_{k}^{2}\left( t \right)+ \nonumber\\[1.5mm] &\quad \frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}\left( 1-b{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right) \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right)\le \nonumber\\[1.5mm] &\quad-{{s}_{k}}\left( t \right)\tilde{\pmb W}_{\Xi ,k}^{\rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right)-{{s}_{k}}\left( t \right)\tilde{\pmb W}_{\Psi ,k}^{\rm T}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right)\times \nonumber\\[1.5mm] &\quad {{\mu }_{k}}\left( t \right)-\left| {{s}_{k}}\left( t \right) \right|{{{\tilde{\beta }}}_{k}}\left( t \right)\left( 1+\left| {{\mu }_{k}}\left( t \right) \right| \right)-\frac{K}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}s_{k}^{2}\left( t \right)+ \nonumber\\[1.5mm] &\quad \frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}\left( 1-b{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right) \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right), \end{align} | (40) |

which can be rewritten as

| \begin{align} & {{s}_{k}}\left( t \right)\tilde{\pmb W}_{\Xi ,k}^{\rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right)+{{s}_{k}}\left( t \right)\tilde{\pmb W}_{\Psi ,k}^{\rm T}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right){{\mu }_{k}}\left( t \right)+ \nonumber\\ &\quad \left| {{s}_{k}}\left( t \right) \right|{{{\tilde{\beta }}}_{k}}\left( 1+\left| {{\mu }_{k}}\left( t \right) \right| \right)\le \nonumber\\ &\quad-\frac{{{{\dot{V}}}_{k}}\left( t \right)}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}-\frac{K}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}s_{k}^{2}\left( t \right)+ \nonumber\\ &\quad \frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( t \right),t \right)}\left( 1-b{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right) \right)\xi \left( {{\pmb X}_{k}}\left( t \right) \right). \end{align} | (41) |

In this section, we analyze the stability of the closed-loop system and the convergence of the tracking errors.

The stability of the proposed AILC scheme is summarized as follows.

Theorem 1. Consider the closed-loop system consisting of system (1), control law (38) and adaptive updating laws (39a) $\sim$ (39c). If Assumptions 1 $\sim$ 8 hold, the following properties are guaranteed.

1) All the signals of the closed-loop system are bounded.

2) The tracking error ${{e}_{sk}}\left( t \right)$ converges, in the sense of the integral squared error, to a small neighborhood of zero as $k\to \infty $, i.e., $\underset{k\to \infty }{\mathop{\lim }} \int_{0}^{T}{{\left( {{e}_{sk}}\left( \sigma \right) \right)}^{2}}\text{d}\sigma \le {{\varepsilon }_{esk}}$ with ${{\varepsilon }_{esk}}=\frac{1}{2K}{{\left( 1+m \right)}^{2}}{{\varepsilon }^{2}}$.

3) $\lim_{k\to \infty } \left\| {{\pmb e}_{k}}\left( t \right) \right\|\le \left( 1+\left\| {\pmb \Lambda} \right\| \right)\left({{k}_{0}}\sum\nolimits_{i=1}^{n-1}{{{\varepsilon }_{i}}}+\frac{1}{{{\lambda }_{0}}-K}\left( 1+m \right)\varepsilon {{k}_{0}} \right)+\left( 1+m \right)\eta \left( t \right)$, where ${{\lambda }_{0}}$ and ${{k}_{0}}$ are positive constants to be given later.

Proof. We examine the stability of the proposed controller through a CEF-based analysis. First, define a Lyapunov-Krasovskii-like CEF as

| \begin{align} & {{E}_{k}}\left( t \right)=\frac{1}{2{{q}_{1}}}\int_{0}^{t}{\tilde{\pmb W}_{\Xi ,k}^{\rm T}\left( \sigma \right){{{\tilde{\pmb W}}}_{\Xi ,k}}\left( \sigma \right)\text{d}\sigma }+\frac{1}{2{{q}_{2}}}\int_{0}^{t}{\tilde{\pmb W}_{\Psi ,k}^{\rm T}\left( \sigma \right)}\cdot \nonumber\\ &\ \ \ \ {{{\tilde{\pmb W}}}_{\Psi ,k}}\left( \sigma \right)\text{d}\sigma +\frac{\gamma }{2{{q}_{3}}}\int_{0}^{t}{\tilde{\beta }_{k}^{2}\left( \sigma \right)\text{d}\sigma }+\frac{(1-\gamma )}{2{{q}_{3}}}\tilde{\beta }_{k}^{2}\left( t \right). \end{align} | (42) |

The difference $\Delta {{E}_{k}}\left( t \right)={{E}_{k}}\left( t \right)-{{E}_{k-1}}\left( t \right)$ is

| \begin{align} \Delta {{E}_{k}}\left( t \right)& =\frac{1}{2{{q}_{1}}}\int_{0}^{t}{\left( \tilde{\pmb W}_{\Xi ,k}^{\rm T}\left( \sigma \right){{{\tilde{\pmb W}}}_{\Xi ,k}}\left( \sigma \right)-\tilde{\pmb W}_{\Xi ,k-1}^{\rm T}\left( \sigma \right)\times \right.} \nonumber\\ &\quad \left. {{{\tilde{\pmb W}}}_{\Xi ,k-1}}\left( \sigma \right) \right)\text{d}\sigma +\frac{1}{2{{q}_{2}}}\int_{0}^{t}{\left( \tilde{\pmb W}_{\Psi ,k}^{\rm T}\left( \sigma \right){{{\tilde{\pmb W}}}_{\Psi ,k}}\left( \sigma \right)-\right.} \nonumber\\ &\quad \left. \tilde{\pmb W}_{\Psi ,k-1}^{\rm T}\left( \sigma \right){{{\tilde{\pmb W}}}_{\Psi ,k-1}}\left( \sigma \right) \right)\text{d}\sigma+ \nonumber\\ &\quad \frac{\gamma }{2{{q}_{3}}}\int_{0}^{t}{\left( \tilde{\beta }_{k}^{2}\left( \sigma \right)-\tilde{\beta }_{k-1}^{2}\left( \sigma \right) \right)\text{d}\sigma }+ \nonumber\\ &\quad \frac{(1-\gamma )}{2{{q}_{3}}}\left( \tilde{\beta }_{k}^{2}\left( t \right)-\tilde{\beta }_{k-1}^{2}\left( t \right) \right). \end{align} | (43) |

Using the algebraic identity ${{\left( {\pmb a}-{\pmb b} \right)}^{\text{T}}}\left( {\pmb a}-{\pmb b} \right)-{{\left( {\pmb a}-{\pmb c} \right)}^{\text{T}}}\left( {\pmb a}-{\pmb c} \right)={{\left( {\pmb c}-{\pmb b} \right)}^{\text{T}}}\left[ 2\left( {\pmb a}-{\pmb b} \right)+\left( {\pmb b}-{\pmb c} \right) \right]$ together with the adaptive learning laws (39a) and (39b), we obtain the following two equalities:

| \begin{align} & \frac{1}{2{{q}_{1}}}\int_{0}^{t}\left( \tilde{\pmb W}_{\Xi ,k}^{\rm T}\left( \sigma \right)\tilde{\pmb W}_{\Xi ,k} \left( \sigma \right)-\tilde{\pmb W}_{\Xi ,k-1}^{\rm T}\left( \sigma \right)\tilde{\pmb W}_{\Xi ,k-1}\left( \sigma \right) \right)\text{d}\sigma = \nonumber\\ &\qquad \int_{0}^{t}{{{s}_{k}}\left( \sigma \right)\tilde{\pmb W}_{\Xi ,k}^{\rm T}\left( \sigma \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right)}\text{d}\sigma-\frac{{{q}_{1}}}{2}\int_{0}^{t}{s_{k}^{2}\left( \sigma \right){{\left\| {{\pmb \Phi}_{\Xi }}\left( {\pmb X}_{k}^{\Xi } \right) \right\|}^{2}}}\text{d}\sigma, \end{align} | (44) |

| \begin{align} & \frac{1}{2{{q}_{2}}}\int_{0}^{t}\left( \tilde{\pmb W}_{\Psi ,k}^{\rm T}\left( \sigma \right)\tilde{\pmb W}_{\Psi ,k}\left( \sigma \right)-\tilde{\pmb W}_{\Psi ,k-1}^{\rm T}\left( \sigma \right)\tilde{\pmb W}_{\Psi ,k-1}\left( \sigma \right) \right)\text{d}\sigma= \nonumber\\ &\qquad \int_{0}^{t}{{{s}_{k}}\left( \sigma \right)\tilde{\pmb W}_{\Psi ,k}^{\rm T}\left( \sigma \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right){{\mu }_{k}}\left( \sigma \right)}\text{d}\sigma-\frac{{{q}_{2}}}{2}\int_{0}^{t}{s_{k}^{2}\left( \sigma \right)\mu _{k}^{2}\left( \sigma \right){{\left\| {{\pmb \Phi}_{\Psi }}\left( {\pmb X}_{k}^{\Psi } \right) \right\|}^{2}}}\text{d}\sigma . \end{align} | (45) |
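Equality (44) holds pointwise in $\sigma$ before integration, so it can be spot-checked numerically. The sketch below draws random vectors and does two things. First, it checks the algebraic identity. Second, it takes one step $\hat{\pmb W}_{\Xi,k}=\hat{\pmb W}_{\Xi,k-1}+q_1 s_k{\pmb \Phi}_\Xi$ of (39a) and compares both integrands of (44). Equality (45) is the same computation with $s_k$ replaced by $s_k\mu_k$ and $q_1$ by $q_2$. The random data, dimensions and helper names are arbitrary choices made for illustration.

```python
import random
from typing import List, Sequence, Tuple


def dot(x: Sequence[float], y: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(x, y))


def sub(x: Sequence[float], y: Sequence[float]) -> List[float]:
    return [a - b for a, b in zip(x, y)]


def identity_sides(a, b, c) -> Tuple[float, float]:
    """Both sides of (a-b)^T(a-b) - (a-c)^T(a-c) = (c-b)^T[2(a-b) + (b-c)]."""
    lhs = dot(sub(a, b), sub(a, b)) - dot(sub(a, c), sub(a, c))
    rhs = dot(sub(c, b), [2.0 * u + w for u, w in zip(sub(a, b), sub(b, c))])
    return lhs, rhs


def eq44_integrand_sides(W_star, W_hat_prev, s, phi, q1) -> Tuple[float, float]:
    """Integrands of (44) after one step W_hat_k = W_hat_{k-1} + q1*s*phi."""
    W_hat = [w + q1 * s * p for w, p in zip(W_hat_prev, phi)]
    Wt_k, Wt_prev = sub(W_hat, W_star), sub(W_hat_prev, W_star)  # tilde W_k, tilde W_{k-1}
    lhs = (dot(Wt_k, Wt_k) - dot(Wt_prev, Wt_prev)) / (2.0 * q1)
    rhs = s * dot(Wt_k, phi) - 0.5 * q1 * s ** 2 * dot(phi, phi)
    return lhs, rhs


rng = random.Random(0)


def rand_vec(n: int = 4) -> List[float]:
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


print(identity_sides(rand_vec(), rand_vec(), rand_vec()))
print(eq44_integrand_sides(rand_vec(), rand_vec(), 0.7, rand_vec(), q1=2.5))
```

Both printed pairs should agree up to floating-point rounding.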

Using adaptive learning law (39c), the last two terms of (43) can be rewritten as

| \begin{align} & \frac{\gamma }{2{{q}_{3}}}\int_{0}^{t}{\left( \tilde{\beta }_{k}^{2}\left( \sigma \right)-\tilde{\beta }_{k-1}^{2}\left( \sigma \right) \right)\text{d}\sigma } +\frac{(1-\gamma )}{2{{q}_{3}}}\left( \tilde{\beta }_{k}^{2}\left( t \right)-\tilde{\beta }_{k-1}^{2}\left( t \right) \right)= \nonumber \\ &\qquad \frac{\gamma }{2{{q}_{3}}}\int_{0}^{t}{\left( \tilde{\beta }_{k}^{2}\left( \sigma \right)-\tilde{\beta }_{k-1}^{2}\left( \sigma \right) \right)\text{d}\sigma }+\frac{(1-\gamma )}{{{q}_{3}}}\int_{0}^{t}{{{{\tilde{\beta }}}_{k}}\left( \sigma \right){{{\dot{\tilde{\beta }}}}_{k}}\left( \sigma \right)\text{d}\sigma }+\frac{(1-\gamma )}{2{{q}_{3}}}\left[\tilde{\beta }_{k}^{2}(0)-\tilde{\beta }_{k-1}^{2}(t) \right]= \nonumber\\ & \qquad \int_{0}^{t}{\left| {{s}_{k}}\left( \sigma \right) \right|{{{\tilde{\beta }}}_{k}}\left( \sigma \right)\left( 1+\left| {{\mu }_{k}}\left( \sigma \right) \right| \right)\text{d}\sigma }-\frac{\gamma }{{{q}_{3}}}\int_{0}^{t}{{{{\tilde{\beta }}}_{k}}\left( \sigma \right)\left( {{{\hat{\beta }}}_{k}}\left( \sigma \right)-{{{\hat{\beta }}}_{k-1}}\left( \sigma \right) \right)\text{d}\sigma }+ \nonumber\\ & \qquad \frac{\gamma }{2{{q}_{3}}}\int_{0}^{t}{\left( \tilde{\beta }_{k}^{2}\left( \sigma \right)-\tilde{\beta }_{k-1}^{2}\left( \sigma \right) \right)\text{d}\sigma }+\frac{(1-\gamma )}{2{{q}_{3}}}\left[\tilde{\beta }_{k}^{2}(0)-\tilde{\beta }_{k-1}^{2}(t) \right]= \nonumber\\ &\qquad \int_{0}^{t}{\left| {{s}_{k}}\left( \sigma \right) \right|{{{\tilde{\beta }}}_{k}}\left( \sigma \right)\left( 1+ \left| {{\mu }_{k}}\left( \sigma \right) \right| \right)\text{d}\sigma }-\frac{\gamma }{{{q}_{3}}}\int_{0}^{t}{{{{\tilde{\beta }}}_{k}}\left( \sigma \right)\left( {{{\tilde{\beta }}}_{k}}\left( \sigma \right)-{{{\tilde{\beta }}}_{k-1}}\left( \sigma \right) \right)\text{d}\sigma }+ \nonumber \\ & \qquad \frac{\gamma }{2{{q}_{3}}}\int_{0}^{t}{\left( \tilde{\beta }_{k}^{2}\left( \sigma \right)-\tilde{\beta }_{k-1}^{2}\left( \sigma \right) \right)\text{d}\sigma }+\frac{(1-\gamma )}{2{{q}_{3}}}\left[\tilde{\beta }_{k}^{2}(0)-\tilde{\beta }_{k-1}^{2}(t) \right]= \nonumber \\ &\qquad \int_{0}^{t}{\left| {{s}_{k}}\left( \sigma \right) \right|{{{\tilde{\beta }}}_{k}}\left( \sigma \right)\left( 1+\left| {{\mu }_{k}}\left( \sigma \right) \right| \right)\text{d}\sigma }+\frac{(1-\gamma )}{2{{q}_{3}}}\left[\tilde{\beta }_{k}^{2}(0)-\tilde{\beta }_{k-1}^{2}(t) \right]-\frac{\gamma }{2{{q}_{3}}}\int_{0}^{t}{{{\left( {{{\tilde{\beta }}}_{k}}(\sigma )-{{{\tilde{\beta }}}_{k-1}}(\sigma ) \right)}^{2}}}\text{d}\sigma . \end{align} | (46) |

Substituting (44) $\sim$ (46) into (43), using (41), and dropping the nonpositive quadratic terms, it follows that

| \begin{align} & \Delta {{E}_{k}}\left( t \right)\le \nonumber\\ &\quad -\int_{0}^{t}{\frac{{{{\dot{V}}}_{k}}\left( \sigma \right)}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( \sigma \right),\sigma \right)}\text{d}\sigma }+\int_{0}^{t}{\frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( \sigma \right),\sigma \right)}}\times \nonumber\\ &\quad \left( 1-b{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( \sigma \right)}{\eta \left( \sigma \right)} \right) \right)\xi \left( {{\pmb X}_{k}}\left( \sigma \right) \right)\text{d}\sigma- \nonumber\\ &\quad \int_{0}^{t}{\frac{K}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( \sigma \right),\sigma \right)}s_{k}^{2}\left( \sigma \right)}\text{d}\sigma +\nonumber\\ &\quad \frac{(1-\gamma )}{2{{q}_{3}}}\left[ \tilde{\beta }_{k}^{2}(0)-\tilde{\beta }_{k-1}^{2}(t) \right]\le-\frac{1}{{{{\bar{g}}}_{m}}}{{V}_{k}}\left( t \right)+ \nonumber\\ &\quad \int_{0}^{t}\frac{1}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( \sigma \right),\sigma \right)} \left( 1-b{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( \sigma \right)}{\eta \left( \sigma \right)} \right) \right)\times \nonumber\\ &\quad \xi \left( {{\pmb X}_{k}}\left( \sigma \right) \right)\text{d}\sigma -\int_{0}^{t}{\frac{K}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( \sigma \right),\sigma \right)}s_{k}^{2}\left( \sigma \right)}\text{d}\sigma+ \nonumber\\ &\quad \frac{(1-\gamma )}{2{{q}_{3}}}\left[ \tilde{\beta }_{k}^{2}(0)-\tilde{\beta }_{k-1}^{2}(t) \right]. \end{align} | (47) |

To continue the analysis, we exploit the following property of the hyperbolic tangent function.

Lemma 2. Consider the set ${{\Omega }_{{{s}_{k}}}}:=\left\{ {{s}_{k}}\left( t \right):\left| {{s}_{k}}\left( t \right) \right|\le m\eta \left( t \right) \right\}$. Then, for any ${{s}_{k}}\left( t \right)\notin {{\Omega }_{{{s}_{k}}}}$, the following inequality holds:

| \begin{align} 1-b{{\tanh }^{2}}\left( \frac{{{s}_{k}}\left( t \right)}{\eta \left( t \right)} \right)<0, \end{align} | (48) |

where $b>1$,$m=\ln \left( \sqrt{{b}/{\left( b-1 \right)}\;}+\sqrt{{1}/{\left( b-1 \right)}\;} \right)$.
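The origin of $m$ can be seen as follows. $1-b\tanh^2(x)<0$ holds exactly when $|\tanh x|>1/\sqrt{b}$, i.e. $|x|>\operatorname{artanh}(1/\sqrt{b})=\tfrac12\ln\frac{\sqrt b+1}{\sqrt b-1}$. This expression simplifies to $\ln\left(\sqrt{b/(b-1)}+\sqrt{1/(b-1)}\right)=m$. The sketch below checks this numerically for hypothetical values of $b$ and $\eta$. It also evaluates the factor $1-b\tanh^2(s/\eta)$ just inside and just outside $\Omega_{s_k}$.

```python
import math


def lemma2_m(b: float) -> float:
    """m = ln(sqrt(b/(b-1)) + sqrt(1/(b-1))) from Lemma 2 (requires b > 1)."""
    if b <= 1.0:
        raise ValueError("Lemma 2 requires b > 1")
    return math.log(math.sqrt(b / (b - 1.0)) + math.sqrt(1.0 / (b - 1.0)))


def lemma2_factor(s: float, eta: float, b: float) -> float:
    """The factor 1 - b*tanh^2(s/eta) multiplying xi(X_k) in (29)-(47)."""
    return 1.0 - b * math.tanh(s / eta) ** 2


# Hypothetical design values.
b, eta = 2.0, 0.05
m = lemma2_m(b)
print(m, math.atanh(1.0 / math.sqrt(b)))  # the two expressions agree
for scale in (0.5, 0.99, 1.01, 3.0):
    s = scale * m * eta
    print(f"|s| = {scale:4.2f}*m*eta -> 1 - b*tanh^2(s/eta) = {lemma2_factor(s, eta, b):+.4f}")
```

The factor is positive inside $\Omega_{s_k}$, zero on its boundary $|s_k|=m\eta$, and negative outside it. This sign change is what Case 2 below relies on.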

Proof. See the Appendix.

For the stability analysis, we consider the following two cases.

Case 1. ${{s}_{k}}\left( t \right)\in {{\Omega }_{{{s}_{k}}}}$.

In this case, $\left| {{s}_{k}}\left( t \right) \right|\le m\eta \left( t \right)$. If ${{s}_{k}}\left( t \right)=0$, then ${{e}_{sk}}\left( t \right)$ is bounded by $\eta \left( t \right)$, i.e., $\left| {{e}_{sk}}\left( t \right) \right|\le \eta \left( t \right)$. If ${{s}_{k}}\left( t \right)>0$, we have ${{s}_{k}}\left( t \right)={{e}_{sk}}\left( t \right)-\eta \left( t \right)$. Combined with $\left| {{s}_{k}}\left( t \right) \right|\le m\eta \left( t \right)$, this gives ${{e}_{sk}}\left( t \right)-\eta \left( t \right)\le m\eta \left( t \right)$, which implies $0<{{e}_{sk}}\left( t \right)\le \left( 1+m \right)\eta \left( t \right)$. Similarly, if ${{s}_{k}}\left( t \right)<0$, we have ${{s}_{k}}\left( t \right)={{e}_{sk}}\left( t \right)+\eta \left( t \right)\ge-m\eta \left( t \right)$, which implies $0>{{e}_{sk}}\left( t \right)\ge-\left( 1+m \right)\eta \left( t \right)$. Combining the three sub-cases, $\left| {{e}_{sk}}\left( t \right) \right|\le \left( 1+m \right)\eta \left( t \right)$ holds. Since ${{e}_{sk}}\left( t \right)$ and ${{\pmb X}_{d}}\left( t \right)$ are bounded, the states ${{x}_{i,k}}\left( t \right)$ are bounded as well. From the updating laws (39a) $\sim$ (39c), $\hat{\pmb W}_{\Xi ,k}\left( t \right)$, $\hat{\pmb W}_{\Psi ,k}\left( t \right)$ and ${{\hat{\beta }}_{k}}\left( t \right)$ are also bounded, and hence so is ${{v}_{k}}\left( t \right)$ by (38). Thus, all closed-loop signals are bounded.
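The three sub-cases above match a filtered error of the form $s_k=e_{sk}-\eta\,\mathrm{sat}(e_{sk}/\eta)$. That definition appears earlier in the paper and is assumed here, together with the standard unit saturation. Under these assumptions, the sketch below sweeps $e_{sk}$ over a grid and keeps the points where $s_k\in\Omega_{s_k}$. It then confirms that none of them exceeds the Case 1 bound $(1+m)\eta$. The values of $b$ and $\eta$ are hypothetical.

```python
import math


def sat(x: float) -> float:
    """Unit saturation (assumed definition)."""
    return max(-1.0, min(1.0, x))


def filtered_error(e_s: float, eta: float) -> float:
    """ASSUMED form of s_k, consistent with Case 1: s = e_s - eta * sat(e_s / eta)."""
    return e_s - eta * sat(e_s / eta)


b, eta = 2.0, 0.05  # hypothetical values
m = math.log(math.sqrt(b / (b - 1.0)) + math.sqrt(1.0 / (b - 1.0)))

# Sweep e_s over [-0.3, 0.3] and keep the samples for which s_k lies in Omega_{s_k}.
samples = [i * 1e-3 for i in range(-300, 301)]
inside = [e for e in samples if abs(filtered_error(e, eta)) <= m * eta]
worst = max(abs(e) for e in inside)
print(f"max |e_s| with |s| <= m*eta: {worst:.4f}; bound (1+m)*eta = {(1 + m) * eta:.4f}")
```

The largest admissible $|e_{sk}|$ on the grid sits just below $(1+m)\eta$, which indicates that the bound is tight.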

Case 2. ${{s}_{k}}\left( t \right)\notin {{\Omega }_{{{s}_{k}}}}$.

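In this case, Lemma 2 gives $1-b{{\tanh }^{2}}\left( {{s}_{k}}\left( t \right)/\eta \left( t \right) \right)<0$. Hence the integral term in (47) involving $\left( 1-b{{\tanh }^{2}}\left( \cdot \right) \right)\xi \left( {{\pmb X}_{k}} \right)$ is nonpositive and can be dropped from the analysis. Therefore, (47) simplifies to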

| \begin{align} \Delta {{E}_{k}}\left( t \right)& <-\frac{1}{{{{\bar{g}}}_{m}}}{{V}_{k}}\left( t \right)-\int_{0}^{t}{\frac{K}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( \sigma \right),\sigma \right)}s_{k}^{2}\left( \sigma \right)}\text{d}\sigma+ \nonumber\\ &\frac{(1-\gamma )}{2{{q}_{3}}}\left[\tilde{\beta }_{k}^{2}(0)-\tilde{\beta }_{k-1}^{2}(t) \right]. \end{align} | (49) |

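Setting $t=T$ and using ${{\hat{\beta }}_{k}}\left( 0 \right)={{\hat{\beta }}_{k-1}}\left( T \right)$ (with ${{\hat{\beta }}_{1}}\left( 0 \right)=0$), which gives ${{\tilde{\beta }}_{k}}\left( 0 \right)={{\tilde{\beta }}_{k-1}}\left( T \right)$, the last term of (49) vanishes and we have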

| \begin{align} \Delta {{E}_{k}}\left( T \right)& <-\frac{1}{{{{\bar{g}}}_{m}}}{{V}_{k}}\left( T \right)- \nonumber\\ & \int_{0}^{T}{\frac{K}{{{g}_{m}}\left( {{\pmb X}_{k}}\left( \sigma \right),\sigma \right)}s_{k}^{2}\left( \sigma \right)}\text{d}\sigma \le 0. \end{align} | (50) |

Inequality (50) shows that ${{E}_{k}}\left( T \right)$ is nonincreasing along the iteration axis. Thus, ${{E}_{k}}\left( T \right)$ is bounded provided that ${{E}_{1}}\left( T \right)$ is finite.

From definition (42), we have

| \begin{align} & {{E}_{1}}\left( t \right)=\frac{1}{2{{q}_{1}}}\int_{0}^{t}{\tilde{\pmb W}_{\Xi ,1}^{\rm T}\left( \sigma \right){{{\tilde{\pmb W}}}_{\Xi ,1}}\left( \sigma \right)\text{d}\sigma }+\frac{1}{2{{q}_{2}}}\int_{0}^{t}{\tilde{\pmb W}_{\Psi ,1}^{\rm T}\left( \sigma \right)}\times \nonumber\\ &\ \ \ \ \ {{{\tilde{\pmb W}}}_{\Psi ,1}}\left( \sigma \right)\text{d}\sigma +\frac{\gamma }{2{{q}_{3}}}\int_{0}^{t}{\tilde{\beta }_{1}^{2}\left( \sigma \right)\text{d}\sigma }+\frac{(1-\gamma )}{2{{q}_{3}}}\tilde{\beta }_{1}^{2}. \end{align} | (51) |

Taking the derivative of ${{E}_{1}}\left( t \right)$ yields

| \begin{align} {{{\dot{E}}}_{1}}\left( t \right)&=\frac{1}{2{{q}_{1}}}\tilde{\pmb W}_{\Xi ,1}^{\rm T}\left( t \right)\tilde{\pmb W}_{\Xi ,1}\left( t \right)+\frac{1}{2{{q}_{2}}}\tilde{\pmb W}_{\Psi ,1}^{\rm T}\left( t \right)\tilde{\pmb W}_{\Psi ,1}\left( t \right)+ \nonumber\\ & \ \ \ \frac{\gamma }{2{{q}_{3}}}\tilde{\beta }_{1}^{2}\left( t \right)+\frac{(1-\gamma )}{{{q}_{3}}}{{{\tilde{\beta }}}_{1}}{{{\dot{\tilde{\beta }}}}_{1}}. \end{align} | (52) |

Recalling the adaptive laws (39a) $\sim$ (39c) with zero initial estimates for $k=0$, we have $\hat{\pmb W}_{\Xi ,1}\left( t \right)={{q}_{1}}{{s}_{1}}\left( t \right){{\pmb \Phi}_{\Xi }}\left( {\pmb X}_{1}^{\Xi } \right)$, $\hat{\pmb W}_{\Psi ,1}\left( t \right)={{q}_{2}}{{s}_{1}}\left( t \right){{\mu }_{1}}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{1}^{\Psi } \right)$ and $\left( 1-\gamma \right){{\dot{\hat{\beta }}}_{1}}\left( t \right)=-\gamma {{\hat{\beta }}_{1}}\left( t \right)+{{q}_{3}}\left| {{s}_{1}}\left( t \right) \right|\left( 1+\left| {{\mu }_{1}}\left( t \right) \right| \right)$. Then we obtain

| \begin{align} & \frac{1}{2{{q}_{1}}}\tilde{\pmb W}_{\Xi ,1}^{\rm T}\left( t \right)\tilde{\pmb W}_{\Xi ,1}\left( t \right)= \nonumber\\ &\quad \frac{1}{2{{q}_{1}}}\left( \tilde{\pmb W}_{\Xi ,1}^{\rm T}\left( t \right)\tilde{\pmb W}_{\Xi ,1}\left( t \right)-2\tilde{\pmb W}_{\Xi ,1}^{\rm T}\left( t \right)\hat{\pmb W}_{\Xi ,1}\left( t \right) \right)+ \nonumber\\ &\quad \frac{1}{{{q}_{1}}}\tilde{\pmb W}_{\Xi ,1}^{\rm T}\left( t \right)\hat{\pmb W}_{\Xi ,1}\left( t \right)= \nonumber\\ &\quad \frac{1}{2{{q}_{1}}}\left( {{\left( \hat{\pmb W}_{\Xi ,1}\left( t \right)-{\pmb W}_{\Xi }^{*}\left( t \right) \right)}^{\rm T}}\left( \hat{\pmb W}_{\Xi ,1}\left( t \right)-{\pmb W}_{\Xi }^{*}\left( t \right) \right)-\right. \nonumber\\ &\quad \left. 2{{\left( \hat{\pmb W}_{\Xi ,1}\left( t \right)-{\pmb W}_{\Xi }^{*}\left( t \right) \right)}^{\rm T}}\hat{\pmb W}_{\Xi ,1}^{{}}\left( t \right) \right)+ \nonumber\\ &\quad {{s}_{1}}\left( t \right)\tilde{\pmb W}_{\Xi ,1}^{\rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{1}^{\Xi } \right)= \nonumber\\ &\quad \frac{1}{2{{q}_{1}}}\left(-\hat{\pmb W}_{\Xi ,1}^{\rm T}\left( t \right)\hat{\pmb W}_{\Xi ,1}^{{}} \left( t \right)+{\pmb W}_{\Xi }^{* \rm T}\left( t \right){\pmb W}_{\Xi }^{*}\left( t \right) \right)+ \nonumber\\ &\quad {{s}_{1}}\left( t \right)\tilde{\pmb W}_{\Xi ,1}^{\rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{1}^{\Xi } \right). \end{align} | (53) |

| \begin{align} & \frac{1}{2{{q}_{2}}}\tilde{\pmb W}_{\Psi ,1}^{\rm T}\left( t \right)\tilde{\pmb W}_{\Psi ,1}^{{}}\left( t \right) = \nonumber\\ &\quad \frac{1}{2{{q}_{2}}}\left(-\hat{\pmb W}_{\Psi ,1}^{\rm T}\left( t \right)\hat{\pmb W}_{\Psi ,1}^{{}}\left( t \right)+{\pmb W}_{\Psi }^{* \rm T}\left( t \right){\pmb W}_{\Psi }^{*}\left( t \right) \right)+ \nonumber\\ &\quad {{s}_{1}}\left( t \right)\tilde{\pmb W}_{\Psi ,1}^{\rm T}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{1}^{\Psi } \right){{\mu }_{1}}\left( t \right), \end{align} | (54) |

| \begin{align} & \frac{\gamma }{2{{q}_{3}}}\tilde{\beta }_{1}^{2}\left( t \right)+\frac{(1-\gamma )}{{{q}_{3}}}{{{\tilde{\beta }}}_{1}}\left( t \right){{{\dot{\tilde{\beta }}}}_{1}}\left( t \right)= \nonumber\\ &\quad \frac{\gamma }{2{{q}_{3}}}\tilde{\beta }_{1}^{2}\left( t \right)-\frac{\gamma }{{{q}_{3}}}{{{\tilde{\beta }}}_{1}}\left( t \right){{{\hat{\beta }}}_{1}}\left( t \right)+\left| {{s}_{1}}\left( t \right) \right|{{{\tilde{\beta }}}_{1}}\left( t \right)\left( 1+\left| {{\mu }_{1}}\left( t \right) \right| \right)= \nonumber\\ &\quad \frac{\gamma }{2{{q}_{3}}}\left( \hat{\beta }_{1}^{2}\left( t \right)-2{{{\tilde{\beta }}}_{1}}\left( t \right){{{\hat{\beta }}}_{1}}\left( t \right)+\tilde{\beta }_{1}^{2}\left( t \right) \right)- \nonumber\\ &\quad \frac{\gamma }{2{{q}_{3}}}\hat{\beta }_{1}^{2}\left( t \right)+\left| {{s}_{1}}\left( t \right) \right|{{{\tilde{\beta }}}_{1}}\left( t \right)\left( 1+\left| {{\mu }_{1}}\left( t \right) \right| \right)\le \nonumber\\ &\quad \frac{\gamma }{2{{q}_{3}}}{{\left( \hat{\beta }_{1}^{{}}\left( t \right)-\tilde{\beta }_{1}^{{}}\left( t \right) \right)}^{2}}+\left| {{s}_{1}}\left( t \right) \right|{{{\tilde{\beta }}}_{1}}\left( t \right)\left( 1+\left| {{\mu }_{1}}\left( t \right) \right| \right) = \nonumber\\ &\quad \frac{\gamma }{2{{q}_{3}}}{{\beta }^{2}}+\left| {{s}_{1}}\left( t \right) \right|{{{\tilde{\beta }}}_{1}}\left( t \right)\left( 1+\left| {{\mu }_{1}}\left( t \right) \right| \right). \end{align} | (55) |
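Once the first-iteration estimates are substituted, relations (53) to (55) are pure algebra, so they can be spot-checked numerically. The sketch below does this under two conventions:

- For (53) and (54), it uses $\hat{\pmb W}=q\,s_1\mu_1{\pmb \Phi}$, with $\mu_1=1$ for the $\Xi$ part. Because $\hat{\pmb W}_{\Psi,1}$ carries the factor $\mu_1(t)$, the cross term of (54) is $s_1\mu_1\tilde{\pmb W}_{\Psi,1}^{\rm T}{\pmb \Phi}_\Psi$.
- For (55), it uses $\tilde\beta_1=\hat\beta_1-\beta$ with constant $\beta$.

All numbers are random or hypothetical, and the helper names are illustrative.

```python
import random
from typing import Sequence, Tuple


def dot(x: Sequence[float], y: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(x, y))


def first_iteration_sides(W_star, phi, s, gain, mu=1.0) -> Tuple[float, float]:
    """(53)/(54) with W_hat = gain*s*mu*phi and W_tilde = W_hat - W_star:
    W_tilde^T W_tilde/(2 gain) = (W*^T W* - W_hat^T W_hat)/(2 gain) + s*mu*W_tilde^T phi."""
    W_hat = [gain * s * mu * p for p in phi]
    W_tilde = [h - w for h, w in zip(W_hat, W_star)]
    lhs = dot(W_tilde, W_tilde) / (2.0 * gain)
    rhs = ((dot(W_star, W_star) - dot(W_hat, W_hat)) / (2.0 * gain)
           + s * mu * dot(W_tilde, phi))
    return lhs, rhs


def beta_sides(beta, beta_hat, gamma, q3) -> Tuple[float, float]:
    """(55): gamma/(2q3)*bt^2 - (gamma/q3)*bt*beta_hat <= gamma/(2q3)*beta^2, bt = beta_hat - beta."""
    bt = beta_hat - beta
    lhs = gamma / (2.0 * q3) * bt ** 2 - gamma / q3 * bt * beta_hat
    return lhs, gamma / (2.0 * q3) * beta ** 2


rng = random.Random(1)
W_star = [rng.uniform(-1.0, 1.0) for _ in range(3)]
phi = [rng.uniform(0.0, 1.0) for _ in range(3)]
print(first_iteration_sides(W_star, phi, s=0.4, gain=2.0))           # (53)
print(first_iteration_sides(W_star, phi, s=0.4, gain=3.0, mu=-1.3))  # (54)
print(beta_sides(beta=0.8, beta_hat=2.1, gamma=0.3, q3=1.5))         # (55)
```

In (55), the left-hand side equals $\frac{\gamma}{2q_3}(\beta^2-\hat\beta_1^2)$, so dropping $-\hat\beta_1^2$ yields the bound $\frac{\gamma}{2q_3}\beta^2$.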

Substituting (53) $\sim$ (55) into (52), and then using (41) with $k=1$ (whose $\xi$-term is nonpositive in Case 2), yields

| \begin{align} & {{{\dot{E}}}_{1}}\left( t \right)\le \nonumber \\ &\quad {{s}_{1}}\left( t \right)\tilde{\pmb W}_{\Xi ,1}^{\rm T}\left( t \right){{\pmb \Phi }_{\Xi }}\left( {\pmb X}_{1}^{\Xi } \right)+{{s}_{1}}\left( t \right)\tilde{\pmb W}_{\Psi ,1}^{\rm T}\left( t \right){{\pmb \Phi }_{\Psi }}\left( {\pmb X}_{1}^{\Psi } \right){{\mu }_{1}}\left( t \right)+ \nonumber \\ &\quad \left| {{s}_{1}}\left( t \right) \right|{{{\tilde{\beta }}}_{1}}\left( t \right)\left( 1+\left| {{\mu }_{1}}\left( t \right) \right| \right)+\frac{1}{2{{q}_{1}}}{\pmb W}_{\Xi }^{* \rm T}\left( t \right) {\pmb W}_{\Xi }^{*}\left( t \right)+ \nonumber \\ &\quad \frac{1}{2{{q}_{2}}}{\pmb W}_{\Psi }^{* \rm T}\left( t \right){\pmb W}_{\Psi }^{*}\left( t \right)+\frac{\gamma }{2{{q}_{3}}}{{\beta }^{2}}\le \nonumber \\ &\quad-\frac{{{{\dot{V}}}_{1}}\left( t \right)}{{{g}_{m}}\left( {{\pmb X}_{1}},t \right)}- \frac{K}{{{g}_{m}}\left( {{\pmb X}_{1}},t \right)}s_{1}^{2}\left( t \right)+\frac{1}{2{{q}_{1}}}{\pmb W}_{\Xi }^{* \rm T} \left( t \right){\pmb W}_{\Xi }^{*}\left( t \right)+ \nonumber \\ &\quad \frac{1}{2{{q}_{2}}}{\pmb W}_{\Psi }^{* \rm T}\left( t \right){\pmb W}_{\Psi }^{*}\left( t \right)+\frac{\gamma }{2{{q}_{3}}}{{\beta }^{2}}. \end{align} | (56) |

Let ${{c}_{\max }}=\max_{t\in \left[0,T \right]} \left\{ \frac{1}{2{{q}_{1}}} {\pmb W}_{\Xi }^{* \rm T}\left( t \right){\pmb W}_{\Xi }^{*}\left( t \right)+\frac{1}{2{{q}_{2}}}{\pmb W}_{\Psi }^{* \rm T}\left( t \right){\pmb W}_{\Psi }^{*}\left( t \right)+\frac{\gamma }{2{{q}_{3}}}{{\beta }^{2}}\left( t \right) \right\}$. Integrating the above inequality over $\left[0,t \right]$ leads to

| \begin{align} & {{E}_{1}}\left( t \right)-{{E}_{1}}\left( 0 \right)\le \nonumber\\ &-\frac{1}{{{{\bar{g}}}_{m}}}{{V}_{1}}\left( t \right)-\int_{0}^{t}{\frac{K}{{{g}_{m}}\left( {{\pmb X}_{1}}\left( \sigma \right),\sigma \right)}s_{1}^{2}\left( \sigma \right)}\text{d}\sigma +t\cdot {{c}_{\max }}. \end{align} | (57) |

Since ${{\hat{\beta }}_{1}}\left( 0 \right)=0$, we get

| \begin{align} {{E}_{1}}\left( 0 \right)=\frac{(1-\gamma )}{2{{q}_{3}}}\tilde{\beta }_{1}^{2}\left( 0 \right)=\frac{(1-\gamma )}{2{{q}_{3}}}\beta _{{}}^{2}\left( 0 \right). \end{align} | (58) |

Substituting (58) into (57) and dropping the nonpositive terms results in

| \begin{align} {{E}_{1}}\left( t \right)\le t\cdot {{c}_{\max }}+\frac{(1-\gamma )}{2{{q}_{3}}}\beta _{{}}^{2}\left( 0 \right)<\infty , \end{align} | (59) |

which implies the boundedness of ${{E}_{1}}\left( t \right)$; hence, ${{E}_{k}}\left( t \right)$ is finite for any $k\in {\bf N}$. Applying (50) repeatedly, we have

| \begin{align} & {{E}_{k}}\left( T \right)={{E}_{1}}\left( T \right)+\sum\limits_{l=2}^{k}{\Delta {{E}_{l}}\left( T \right)}<{{E}_{1}}\left( T \right)- \nonumber\\ &\quad \frac{1}{{{{\bar{g}}}_{m}}}\sum\limits_{l=2}^{k}{{{V}_{l}}\left( T \right)}-\sum\limits_{l=2}^{k}{\int_{\text{0}}^{T}{\frac{K}{{{g}_{m}}\left( {{\pmb X}_{l}}\left( \sigma \right),\sigma \right)}s_{l}^{2}\left( \sigma \right)}\text{d}\sigma }\le \nonumber\\ &\quad {{E}_{1}}\left( T \right)-\sum\limits_{l=2}^{k}{\int_{\text{0}}^{T}{\frac{K}{{{g}_{m}}\left( {{\pmb X}_{l}}\left( \sigma \right),\sigma \right)}s_{l}^{2}\left( \sigma \right)}\text{d}\sigma }. \end{align} | (60) |

Rearranging (60) and using ${{g}_{m}}\left( \cdot \right)\le {{\bar{g}}_{m}}$, we obtain

| \begin{align} & \frac{K}{{{{\bar{g}}}_{m}}}\sum\limits_{l=2}^{k}{\int_{\text{0}}^{T} {s_{l}^{2}\left(\sigma\right)}\text{d}\sigma}\le \nonumber\\ & \qquad \sum\limits_{l=2}^{k}{\int_{\text{0}}^{T}{\frac{K}{{{g}_{m}}\left( {{\pmb X}_{l}}\left( \sigma \right),\sigma \right)}s_{l}^{2}\left( \sigma \right)}\text{d}\sigma }\le \nonumber\\ & \qquad{{{E}_{1}}\left(T\right)-{{E}_{k}}\left(T\right)} \le{{E}_{1}}\left(T\right). \end{align} | (61) |

Taking the limit of (61) as $k\to \infty$, it follows that

| \begin{align} &\underset{k\to \infty }{\mathop{\lim }} \sum\limits_{l=2}^{k}{\int_{\text{0}}^{T}{s_{l}^{2}\left( \sigma \right)}\text{d}\sigma }\le \frac{{{{\bar{g}}}_{m}}}{K}{{E}_{1}} \left(T\right). \end{align} | (62) |

Since ${{E}_{1}}\left( T \right)$ is bounded, (62) shows that the series $\sum_{l=2}^{\infty }\int_{0}^{T}{s_{l}^{2}\left( \sigma \right)}\text{d}\sigma$, whose terms are nonnegative, has bounded partial sums and therefore converges. By the convergence theorem for series, its general term tends to zero, i.e., $\lim_{k\to \infty } \int_{\text{0}}^{T}{s_{k}^{2}\left( \sigma \right)}\text{d}\sigma =0$, which implies that $\lim_{k\to \infty } s_{k}\left( t \right)={{s}_{\infty }}\left( t \right)=0$, $\forall t\in \left[0,T \right]$. Moreover, from definition (12), we know that $\lim_{k\to \infty } \int_{0}^{T}{{{\left( {{e}_{sk}}\left( \sigma \right) \right)}^{2}}\text{d}\sigma }\le {{\varepsilon }_{e}}$, where ${{\varepsilon }_{e}}=\int_{0}^{T}{{{\left( \left( 1+m \right){{\eta }_{1}}\left( \sigma \right) \right)}^{2}}\text{d}\sigma }=\frac{1}{2K}{{\left( 1+m \right)}^{2}}{{\varepsilon }^{2}}\left( 1-{{\rm e}^{-2KT}} \right)\le \frac{1}{2K}{{\left( 1+m \right)}^{2}}{{\varepsilon }^{2}}={{\varepsilon }_{esk}}$. Furthermore, the limit ${{e}_{s\infty }}\left( t \right)$ satisfies $\lim_{k\to \infty } \left| {{e}_{sk}}\left( t \right) \right|=\left| {{e}_{s\infty }}\left( t \right) \right|\le \left( 1+m \right)\varepsilon {{\rm e}^{-Kt}}$, $\forall t\in \left[0,T \right]$.
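The closed form of $\varepsilon_e$ follows from $\int_0^T {\rm e}^{-2K\sigma}\,\text{d}\sigma=(1-{\rm e}^{-2KT})/(2K)$. The minimal Python sketch below assumes $\eta_1(\sigma)=\varepsilon{\rm e}^{-K\sigma}$, which is the profile implied by the stated result. It compares composite Simpson quadrature against the closed form and against the $T$-independent bound $\varepsilon_{esk}$. The parameter values and function names are hypothetical.

```python
import math


def eps_e_simpson(K: float, m: float, eps: float, T: float, n: int = 2000) -> float:
    """Composite Simpson's rule for int_0^T ((1 + m) * eta(s))^2 ds.

    Assumes eta(s) = eps * exp(-K * s).
    """
    if n % 2:
        n += 1  # Simpson's rule needs an even number of subintervals

    def f(s: float) -> float:
        return ((1 + m) * eps * math.exp(-K * s)) ** 2

    h = T / n
    total = f(0.0) + f(T)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(i * h)
    return total * h / 3


def eps_e_closed_form(K: float, m: float, eps: float, T: float) -> float:
    """(1 + m)^2 eps^2 (1 - exp(-2 K T)) / (2 K)."""
    return (1 + m) ** 2 * eps**2 * (1 - math.exp(-2 * K * T)) / (2 * K)


def eps_esk(K: float, m: float, eps: float) -> float:
    """T-independent bound (1 + m)^2 eps^2 / (2 K)."""
    return (1 + m) ** 2 * eps**2 / (2 * K)


# Illustrative values only; the horizon matches the [0, 4*pi] used in Section V.
K, m, eps, T = 2.0, 0.5, 0.1, 4 * math.pi
print(eps_e_simpson(K, m, eps, T), eps_e_closed_form(K, m, eps, T), eps_esk(K, m, eps))
```

Because $1-{\rm e}^{-2KT}<1$, the bound $\varepsilon_{esk}$ holds for every trial length $T$.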

Next, we prove the boundedness of the closed-loop signals by induction. First, we separate ${{E}_{k}}\left( t \right)$ into two parts as follows:

| \begin{align} &E_{n,k}^{1}\left( t \right)=\frac{1}{2{{q}_{1}}}\int_{0}^{t}{\tilde{\pmb W}_{\Xi ,k}^{\rm T}\left( \sigma \right)\tilde{\pmb W}_{\Xi ,k}^{{}}\left( \sigma \right)\text{d}\sigma }+\notag\\ &\qquad \qquad \frac{1}{2{{q}_{2}}}\int_{0}^{t}{\tilde{\pmb W}_{\Psi ,k}^{\rm T}\left( \sigma \right)} \tilde{\pmb W}_{\Psi ,k}\left( \sigma \right)\text{d}\sigma +\notag\\ &\qquad \qquad \frac{\gamma }{2{{q}_{3}}}\int_{0}^{t}{\tilde{\beta }_{k}^{2}\left( \sigma \right)\text{d}\sigma }, \end{align} | (63) |

| \begin{align} & E_{n,k}^{2}\left( t \right)=\frac{(1-\gamma )}{2{{q}_{3}}}\tilde{\beta }_{k}^{2}\left( t \right). \end{align} | (64) |

By (59) and (60), ${{E}_{k}}\left( T \right)$ is bounded for all iterations, so its two nonnegative parts $E_{n,k}^{1}\left( T \right)$ and $E_{n,k}^{2}\left( T \right)$ are bounded as well. Consequently, $\forall k\in {\bf N}$, there exist finite constants ${{M}_{1}}$ and ${{M}_{2}}$ satisfying

| \begin{align} &E_{n,k}^{1}\left( t \right)\le E_{n,k}^{1}\left( T \right)\le {{M}_{1}}<\infty, \end{align} | (65) |

| \begin{align} &E_{n,k}^{2}\left( T \right)\le {{M}_{2}}. \end{align} | (66) |

Then, we have

| \begin{align} {{E}_{n,k}}\left( t \right)=E_{n,k}^{1}\left( t \right)+E_{n,k}^{2}\left( t \right)\le E_{n,k}^{2}\left( t \right)+{{M}_{1}}. \end{align} | (67) |

On the other hand, from (49) and $E_{n,k+1}^{2}\left( 0 \right)=E_{n,k}^{2}\left( T \right)$, we obtain

| \begin{align} \Delta {{E}_{k+1}}\left( t \right)& <\frac{(1-\gamma )}{2{{q}_{3}}}\left[\tilde{\beta }_{k+1}^{2}(0)-\tilde{\beta }_{k}^{2}(t) \right]\le \nonumber\\ & {{M}_{2}}-E_{n,k}^{2}\left( t \right). \end{align} | (68) |

Combining (67) and (68) results in

| \begin{align} {{E}_{k+1}}\left( t \right)={{E}_{k}}\left( t \right)+\Delta {{E}_{k+1}}\left( t \right)\le {{M}_{1}}+{{M}_{2}}. \end{align} | (69) |

Since ${{E}_{1}}\left( t \right)$ has been shown to be bounded, (69) implies that ${{E}_{k}}\left( t \right)$ is bounded for all $k\in {\bf N}$. Furthermore, we obtain the boundedness of ${{\hat{\pmb W}}_{\Xi ,k}}\left( t \right)$, ${{\hat{\pmb W}}_{\Psi ,k}}\left( t \right)$ and ${{\hat{\beta }}_{k}}\left( t \right)$. From $\int_{0}^{t}{s_{k}^{2}\left( \sigma \right)\text{d}\sigma }\le \int_{0}^{T}{s_{k}^{2}\left( \sigma \right)\text{d}\sigma }$, we get the boundedness of ${{s}_{k}}\left( t \right)$, which further implies that ${{x}_{i,k}}\left( t \right)$ ($i=1,2,\cdots,n$) are bounded. As a result, ${{v}_{k}}\left( t \right)$ is also bounded.

It is clear that, in both cases, the proposed control algorithm guarantees that all closed-loop signals are bounded and that $\lim_{k\to \infty } \left| {{e}_{sk}}\left( t \right) \right|\le \left( 1+m \right)\eta \left( t \right)$. Therefore, the control objective is achieved, i.e., $\lim_{k\to \infty } \int_{0}^{T}{{{\left( {{e}_{sk}}\left( \sigma \right) \right)}^{2}}\text{d}\sigma }\le {{\varepsilon }_{esk}}$ with ${{\varepsilon }_{esk}}=\frac{1}{2K}{{\left( 1+m \right)}^{2}}{{\varepsilon }^{2}}$.

Define ${{\pmb \zeta }_{k}}\left( t \right)={{\left[ {{e}_{1,k}}\left( t \right),{{e}_{2,k}}\left( t \right),\cdots ,{{e}_{n-1,k}}\left( t \right) \right]}^{\rm T}}$. Then, with ${{e}_{sk}}\left( t \right)=\left[{{\pmb \Lambda }^{\rm T}}\ \ 1 \right]{{\pmb e}_{k}}\left( t \right)$, the dynamics of ${{\pmb \zeta }_{k}}\left( t \right)$ driven by ${{e}_{sk}}\left( t \right)$ can be expressed in state-space form as

| \begin{align} {{\dot{\pmb \zeta }}_{k}}\left( t \right)={{A}_{s}}{{\pmb \zeta }_{k}}\left( t \right)+{{\pmb b}_{s}}{{e}_{sk}}\left( t \right), \end{align} | (70) |

where

| \begin{align} & {{A}_{s}}=\left[\begin{matrix} 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \\ -{{\lambda }_{1}} & -{{\lambda }_{2}} & \cdots & -{{\lambda }_{n-1}} \end{matrix} \right]\in {{\bf R}^{\left( n-1 \right)\times \left( n-1 \right)}}, \nonumber\\ & {{\pmb b}_{s}}={{\left[\begin{matrix} 0 & \cdots & 0 & 1\end{matrix} \right]}^{\rm T}}\in {{\bf R}^{n-1}}. \end{align} | (71) |

Here, ${{A}_{s}}$ is a stable (Hurwitz) matrix. In addition, there exist two constants[30] ${{k}_{0}}>0$ and ${{\lambda }_{0}}>0$ such that $\left\| {{\rm e}^{{{A}_{s}}t}} \right\|\le {{k}_{0}}{{\rm e}^{-{{\lambda }_{0}}t}}$. The solution of (70) is

| \begin{align} {{\pmb \zeta}_{k}}\left( t \right)={{\rm e}^{{{A}_{s}}t}}{{\pmb \zeta }_{k}}\left( 0 \right)+\int_{0}^{t}{{{\rm e}^{{{A}_{s}}\left( t-\sigma \right)}}{{\pmb b}_{s}}{{e}_{sk}}\left( \sigma \right)\text{d}\sigma }. \end{align} | (72) |

Consequently, since $\left\| {{\pmb b}_{s}} \right\|=1$, it follows that

| \begin{align} \left\| {{\pmb \zeta }_{k}}\left( t \right) \right\|\le {{k}_{0}}\left\| {{\pmb \zeta }_{k}}\left( 0 \right) \right\|{{\rm e}^{-{{\lambda }_{0}}t}}+{{k}_{0}}\int_{0}^{t}{{{\rm e}^{-{{\lambda }_{0}}\left( t-\sigma \right)}}\left| {{e}_{sk}}\left( \sigma \right) \right|\text{d}\sigma }. \end{align} | (73) |
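The bound (73) relies on $A_s$ being Hurwitz. Because $A_s$ in (71) is a companion matrix, its characteristic polynomial is $s^{n-1}+\lambda_{n-1}s^{n-2}+\cdots+\lambda_1$. It is therefore stable exactly when this polynomial has all its roots in the open left half-plane. For such an $A_s$, any $\lambda_0$ strictly smaller than the smallest magnitude of the roots' real parts admits a finite $k_0$.

The Python sketch below is a hypothetical illustration. The pole locations are arbitrary and are not taken from the paper. It computes $\lambda_1,\dots,\lambda_{n-1}$ from chosen poles, builds $A_s$, and verifies that each pole is an eigenvalue by using the Vandermonde eigenvector of companion matrices.

```python
def lambdas_from_poles(poles):
    """Coefficients [lambda_1, ..., lambda_{n-1}] such that
    s^(n-1) + lambda_{n-1} s^(n-2) + ... + lambda_1 = prod_i (s - p_i)."""
    coeffs = [1.0]  # polynomial coefficients, highest degree first
    for p in poles:
        # Multiply the current polynomial by (s - p).
        new = coeffs + [0.0]
        for i, c in enumerate(coeffs):
            new[i + 1] -= p * c
        coeffs = new
    # coeffs = [1, c_{n-2}, ..., c_0]; lambda_j multiplies s^(j-1).
    return list(reversed(coeffs[1:]))


def companion(lams):
    """A_s as in (71): ones on the superdiagonal, last row -lambda_1 ... -lambda_{n-1}."""
    d = len(lams)
    A = [[0.0] * d for _ in range(d)]
    for i in range(d - 1):
        A[i][i + 1] = 1.0
    A[d - 1] = [-lam for lam in lams]
    return A


def is_eigenvalue(A, p, tol=1e-9):
    """Check A v = p v for v = [1, p, ..., p^(d-1)], which is an eigenvector
    of a companion matrix for every root p of its characteristic polynomial."""
    d = len(A)
    v = [p**i for i in range(d)]
    Av = [sum(a * x for a, x in zip(row, v)) for row in A]
    return all(abs(y - p * x) <= tol * (1 + abs(p * x)) for y, x in zip(Av, v))


poles = [-1.0, -2.0, -3.0]  # hypothetical design choice with n - 1 = 3
lams = lambdas_from_poles(poles)
A_s = companion(lams)
print(lams, all(is_eigenvalue(A_s, p) for p in poles))
```

With these poles, any $\lambda_0<1$ is an admissible decay rate in (73).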

Choosing suitable parameters such that ${{\lambda }_{0}}>K$ and using $\lim_{k\to \infty } \left| {{e}_{sk}}\left( t \right) \right|\le \left( 1+m \right)\eta \left( t \right)$, we have

| \begin{align} &\left\| {{\pmb \zeta }_{\infty }}\left( t \right) \right\|\le \nonumber\\ &\quad{{k}_{0}}\left\| {{\pmb \zeta }_{\infty }}\left( 0 \right) \right\|{{\rm e}^{-{{\lambda }_{0}}t}}+ {{k}_{0}}\int_{0}^{t}{{{\rm e}^{-{{\lambda }_{0}}\left( t-\sigma \right)}}\left| {{e}_{s\infty }} \left( \sigma \right) \right|\text{d}\sigma }\le \nonumber \\ &\quad {{k}_{0}}\left\| {{\pmb \zeta }_{\infty }}\left( 0 \right) \right\|+\left( 1+m \right)\varepsilon {{k}_{0}} \int_{0}^{t}{{{\rm e}^{-{{\lambda }_{0}}\left( t-\sigma \right)}}{{\rm e}^{-K\sigma }}\text{d}\sigma }= \nonumber \\ &\quad{{k}_{0}}\left\| {{\pmb \zeta }_{\infty }}\left( 0 \right) \right\|+\left( 1+m \right)\varepsilon {{k}_{0}} \frac{1}{{{\lambda }_{0}}-K}\left( {{\rm e}^{-Kt}}-{{\rm e}^{-{{\lambda }_{0}}t}} \right)\le \nonumber \\ &\quad {{k}_{0}}\left\| {{\pmb \zeta }_{\infty }}\left( 0 \right) \right\|+\frac{1}{{{\lambda }_{0}}-K}\left( 1+m \right)\varepsilon {{k}_{0}}. \end{align} | (74) |
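The key computation in (74) is the convolution $\int_0^t{\rm e}^{-\lambda_0(t-\sigma)}{\rm e}^{-K\sigma}\,\text{d}\sigma=\frac{{\rm e}^{-Kt}-{\rm e}^{-\lambda_0 t}}{\lambda_0-K}$, which stays below $1/(\lambda_0-K)$ when $\lambda_0>K$. The Python sketch below checks this numerically with Simpson quadrature and compares the time-varying bound with the uniform one on the last line of (74). The values of $k_0$, $\|\pmb\zeta_\infty(0)\|$, $\lambda_0$, $K$, $m$ and $\varepsilon$ are hypothetical.

```python
import math


def conv_simpson(lam0: float, K: float, t: float, n: int = 2000) -> float:
    """Composite Simpson's rule for int_0^t exp(-lam0 (t - s)) exp(-K s) ds."""
    if n % 2:
        n += 1

    def f(s: float) -> float:
        return math.exp(-lam0 * (t - s)) * math.exp(-K * s)

    h = t / n
    total = f(0.0) + f(t)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(i * h)
    return total * h / 3


def conv_closed_form(lam0: float, K: float, t: float) -> float:
    """(exp(-K t) - exp(-lam0 t)) / (lam0 - K), valid for lam0 != K."""
    return (math.exp(-K * t) - math.exp(-lam0 * t)) / (lam0 - K)


def zeta_bound(t, k0, zeta0_norm, lam0, K, m, eps):
    """First line of (74) after inserting |e_s_inf(s)| <= (1 + m) eps exp(-K s)."""
    return (k0 * zeta0_norm * math.exp(-lam0 * t)
            + (1 + m) * eps * k0 * conv_closed_form(lam0, K, t))


def zeta_uniform_bound(k0, zeta0_norm, lam0, K, m, eps):
    """Time-independent bound on the last line of (74); requires lam0 > K."""
    return k0 * zeta0_norm + (1 + m) * eps * k0 / (lam0 - K)


for t in (0.5, 2.0, 4 * math.pi):
    print(t, conv_simpson(3.0, 1.0, t), conv_closed_form(3.0, 1.0, t))
```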

Noting that ${{e}_{sk}}\left( t \right)=\left[{{\pmb \Lambda }^{\rm T}}\ \ 1 \right]{{\pmb e}_{k}}\left( t \right)$ and ${{\pmb e}_{k}}\left( t \right)={{\left[{\pmb \zeta} _{k}^{\rm T}\left( t \right)\ \ {{e}_{n,k}}\left( t \right) \right]}^{\rm T}}$, we have

| \begin{align} \left\| {{\pmb e}_{k}}\left( t \right) \right\|& \le \left\| {{\pmb \zeta }_{k}}\left( t \right) \right\|+\left| {{e}_{n,k}}\left( t \right) \right|= \nonumber\\ &\left\| {{\pmb \zeta }_{k}}\left( t \right) \right\|+\left| {{e}_{sk}}\left( t \right)-{{\pmb \Lambda }^{\rm T}}{{\pmb \zeta }_{k}}\left( t \right) \right|\le \nonumber\\ & \left( 1+\left\| {\pmb \Lambda} \right\| \right)\left\| {{\pmb \zeta }_{k}}\left( t \right) \right\|+\left| {{e}_{sk}}\left( t \right) \right|. \end{align} | (75) |

Combining (74) and (75), we obtain

| \begin{align} & \left\| {{\pmb e}_{\infty }}\left( t \right) \right\| \le \left( 1+\left\| {\pmb \Lambda} \right\| \right) \left\| {{\pmb \zeta }_{\infty }}\left( t \right) \right\|+\left| {{e}_{s\infty }}\left( t \right) \right|\le \nonumber\\ &\qquad \left( 1+\left\| {\pmb \Lambda} \right\| \right)\left( {{k}_{0}}\left\| {{\pmb \zeta }_{\infty }} \left( 0 \right) \right\|+\frac{1}{{{\lambda }_{0}}-K}\left( 1+m \right)\varepsilon {{k}_{0}} \right)+ \nonumber\\ &\qquad \left( 1+m \right)\eta \left( t \right)\le \nonumber\\ & \qquad \left( 1+\left\| {\pmb \Lambda} \right\| \right)\left( {{k}_{0}}\sum\limits_{i=1}^{n-1} {{{\varepsilon }_{i}}}+\frac{1}{{{\lambda }_{0}}-K}\left( 1+m \right)\varepsilon {{k}_{0}} \right)+ \nonumber\\ &\qquad \left( 1+m \right)\eta \left( t \right). \end{align} | (76) |
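Inequality (75) uses only the triangle and Cauchy-Schwarz inequalities, and (76) then gives a tracking-error bound that is uniform in $t$. The Python sketch below uses hypothetical names and randomly sampled or made-up values, not the paper's data. It checks (75) on random instances and evaluates the right-hand side of (76), assuming $\eta(t)=\varepsilon{\rm e}^{-Kt}$ as in (74).

```python
import math
import random


def norm(v):
    """Euclidean norm of a list of floats."""
    return math.sqrt(sum(x * x for x in v))


def check_75(d: int = 3, samples: int = 10_000, seed: int = 1) -> bool:
    """Sample Lambda, zeta_k(t) and e_sk(t), then test inequality (75)."""
    rng = random.Random(seed)
    for _ in range(samples):
        lam = [rng.uniform(-5, 5) for _ in range(d)]          # Lambda
        zeta = [rng.uniform(-5, 5) for _ in range(d)]         # zeta_k(t)
        e_s = rng.uniform(-5, 5)                              # e_sk(t)
        e_n = e_s - sum(lj * zj for lj, zj in zip(lam, zeta))  # e_{n,k} = e_sk - Lambda^T zeta
        e = zeta + [e_n]                                      # e_k = [zeta^T, e_{n,k}]^T
        rhs = (1 + norm(lam)) * norm(zeta) + abs(e_s)
        if norm(e) > rhs * (1 + 1e-12):
            return False
    return True


def bound_76(t, lam_norm, k0, eps_i, lam0, K, m, eps):
    """Right-hand side of (76), assuming eta(t) = eps * exp(-K t)."""
    if lam0 <= K:
        raise ValueError("(74) requires lambda_0 > K")
    zeta_part = k0 * sum(eps_i) + (1 + m) * eps * k0 / (lam0 - K)
    return (1 + lam_norm) * zeta_part + (1 + m) * eps * math.exp(-K * t)


print(check_75())
print(bound_76(0.0, 2.0, 1.5, [0.1, 0.2], 3.0, 1.0, 0.5, 0.1))
```

The only time-dependent term in (76) is $(1+m)\eta(t)$. The bound therefore decreases monotonically toward $(1+\|\pmb\Lambda\|)\big(k_0\sum_i\varepsilon_i+\frac{(1+m)\varepsilon k_0}{\lambda_0-K}\big)$.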

This establishes the third property of Theorem 1. Moreover, since ${{\pmb X}_{k}}\left( t \right)$ and ${{\pmb X}_{d}}\left( t \right)$ are bounded, there exists a compact set $\Omega =\sup_{k\in {\bf N}} \left\{ {{\pmb X}_{k}}\left( t \right),{{\pmb X}_{d}}\left( t \right) \right\}$ on which the NNs approximate the uncertainties.

This concludes the proof.

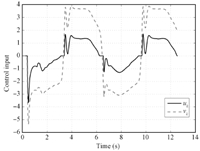

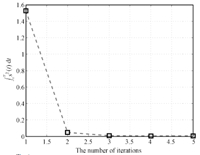

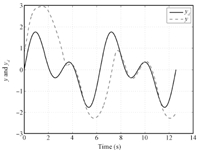

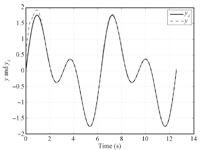

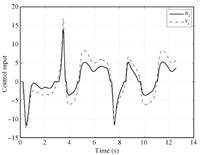

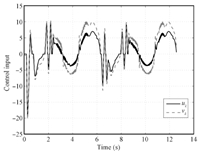

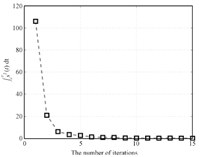

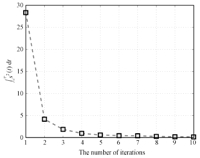

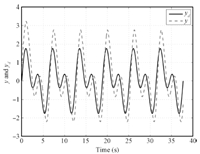

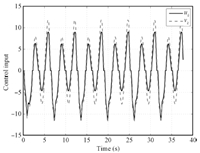

Ⅴ. SIMULATION STUDY

In this section, a simulation study is presented to verify the effectiveness of the proposed AILC scheme. Consider the following second-order nonlinear system with unknown time-varying delays and an unknown dead zone, running repeatedly over $\left[0,4\pi \right]$: