2. School of Computer Science of Technology, School of Software, Nanjing University of Posts and Telecommunications, China

In recent decades,there has been an increasing research interest in reinforcement learning (RL). In the field of machine learning,RL is an important category that specializes in a large class of learning problems[1]. RL methodology (partly) originates from the biological observation that,the intention of performing some action under some circumstance can be reinforced or restrained by external stimulating signals. Well-supported by dynamic programming techniques,many RL algorithms were developed in the early 1990s,among which Q-learning,Sarsa,etc. are most popular[1].

Although it is very successful in various learning tasks,nowadays RL suffers from the low speed of learning in certain tasks. Such tasks typically have relatively large problem space,and as a consequence the conventional RL updating strategies become less efficient or even infeasible. Despite of those efforts in developing more advanced RL algorithms (such as function approximation techniques,hierarchical RL,etc.),the methodology of transfer learning has recently attracted much attention.

Without any specific context,transfer learning refers to the general idea that past learning experience may be and should be used to aid future learning. When applied to RL,transfer learning becomes concrete problems such as how to reuse previously learnt results (for example,policies,i.e.,the way of behaving under various states) or intermediate knowledge (for example $V$-values,which are quality indicators of states). From a broader point of view,such questions include also,for example,among many previous tasks how to extract the specific knowledge from the most appropriate task to help current learning. Such questions have been open since the late 1990s. During the past decade,lots of methods for inter-task transfer learning in RL have been proposed to provide different answers to the questions above[2, 3, 4, 5, 6, 7]. We will summarize these works in Section II.

In this paper,we provide our solution to the above-mentioned problems. From a systematic point of view,our method aims at maintaining a library of those reusable units collected from past learning tasks. Then,upon learning a new task,we attempt to use the library whenever it is possible and beneficial to do so. In order to search the library for a piece of knowledge to transfer,we define a similarity measure between options,of which the formal definition will be introduced in Section II-B. Our transfer learning method contains four stages. Firstly,in the subgoal discovery stage,we extract subgoals from learning tasks. Briefly speaking,a subgoal is some important state during the process of accomplishing the learning task. Secondly,in the option construction stage,we construct options from the subgoals. An option can be considered as the guideline from one subgoal to another. Hence several options together may guide the learner to fulfill the learning task quickly. Thirdly,in the similarity search stage,for a given target task,we search the library for the best option. To this end,we propose a novel similarity measure which is built upon the intuition that similar options have similar state-action probability. Finally,in the option reusing stage,the best option found in the similarity search stage is reused to speed up the current learning task. Comparing to those existing methods,our method has the following advantages.

1) Our proposed method imposes limited constraint on the tasks,thus it is widely applicable;

2) The basic reusable unit in our method is small,thus one target task can benefit from multiple reusable units;

3) Our proposed method is an automatic method that does not require any human intervention. It works as a system that automatically maintains the reusable source library.

The rest of the paper is organized as follows. After a brief introduction of necessary background knowledge in Section II. Section III introduces our proposed transfer learning method,as well as the similarity measure between options. In Section IV,we examine our proposed method by extensive experiments. Then,Section V summarizes existing methods that are closely related to our work. Finally,Section VI concludes the paper and points out possible future directions.

II. BACKGROUND A. RL and Markov Decision Process (MDP)RL is a class of learning techniques characterized by a class of learning tasks[1]. Such learning tasks can be well modeled as Markov decision processes (MDPs). A finite MDP $M$ is a four-tuple,$M = {\rm{ }} < S,A,T,R > $ where $S$ is the finite set of states; $A$ is the finite set of actions; $T\!: S\times A\times S\rightarrow[0,1]$ is the transition probability; and $R\!: S\times A\times S\rightarrow{\bf R}$ is the reward function. For example,when making an agent learn how to escape from a maze,the reward is often zero until it escapes. A learning agent can actively surf within the state space. Assume that at time $t$ the agent is in state $s_t$. It may take one of the available actions in $s_t$,say,$a_t\in A$,and it will be transported into another state $s_{t+1}\in S$ with probability $T\left(s_t,a_t,s_{t+1}\right)$. After the transportation,the agent receives a reward of $r_t=R\left(s_t,a_t,s_{t+1}\right)$.

Within such an environment,a reinforcement learner aims at finding an optimal way of behaving,i.e.,an optimal policy $\pi: S\rightarrow\wp(A)$ where $\wp(A)$ is the probability distribution over the action space $A$,such that with $\pi$ the following expected return will be maximized

$$\begin{align*} R_t = \sum_{\tau=0}^\infty\gamma^\tau r_{t+\tau+1}, \end{align*}$$where $\gamma$ is the discounting factor. $r$ takes values from the interval $(0,1)$ and in our experiments the immediate rewards $r_{t+\tau+1}\in\{0,1\}$ so that the convergence of $R_t$ can be guaranteed.

Q-learning algorithm. In practice,one of the most famous RL algorithms is Q-learning[1, 8]. Q-learning maintains and updates the expected discounted return $Q(s,a)$ for all $s\in S$ and $a\in A$. Roughly speaking,the value $Q(s,a)$ reflects how wise it is to take action $a$ in state $s$. After action $a_t$ being taken in state $s_t$,Q-learning updates the Q-matrix using the following rule:

| $\begin{array}{*{20}{l}} {Q\left( {{s_t},{a_t}} \right) \leftarrow }&{\left( {1 - \alpha } \right) \cdot Q\left( {{s_t},{a_t}} \right) + }\\ {}&{\alpha \cdot \left( {{r_{t + 1}} + \gamma {{\max }_a}Q\left( {{s_{t + 1}},a} \right)} \right).} \end{array}$ | (1) |

Algorithm 1 presents the Q-learning algorithm,in which a learning episode refers a complete process that,starting from the initial state,the learning agent manages to achieve the terminal state.

Algorithm 1. Q-learning

$Q = Q - Learning(M,N,\epsilon )$

Input.$M = < S,A,T,R > $ is a learning task,$N$ is the number of learning episodes; $\epsilon\in(0,1)$ is a small positive threshold

Output. $Q(s,a)$ values for all $s\in S$ and $a\in A$

1) Initialize $Q(s,a)$ arbitrarily

2) for $N$ learning episodes do

3) $t\leftarrow 0$ $ \triangleright {{\rm{s}}_{\rm{0}}}$ is the initial state

4) while $s_t$ is not terminal do

5) Take action $a_t$ using the $\epsilon$-greedy policy

6) Observe the reward $r_{t+1}$ and the next state $s_{t+1}$

7) Update $Q(s_t,a_t)$ using (1)

8) $t\leftarrow t+1$

9) return $Q$

The $\epsilon$-greedy action selection policy at Line 5) is to select the"best" action with probability $1-\epsilon$ and select a random action with probability $\epsilon$. That is

$$\begin{align*} a_t\leftarrow\left\{\begin{array}{r@{,\qquad}l}\arg\max_{a}Q(s_t,a) & w\mbox{/prob.}~1-\epsilon,\\ \mbox{random action}~a\in A & w\mbox{/prob.}~\epsilon.\end{array}\right. \end{align*}$$Q-learning is proven to converge to the optimal policy under standard stochastic approximation assumptions[8].

Algorithm Sarsa. Another widely-used RL algorithm is Sarsa[1, 9]. Sarsa is similar to Q-learning. The difference is that,instead of using the maximum Q-values,Sarsa directly uses the Q-values of those $\left(s_{t+1},a_{t+1}\right)$ pairs. Precisely,the update rule of Sarsa is

| $\begin{align} Q\left(s_t,a_t\right)\leftarrow&~~\left(1-\alpha\right)\cdot Q\left(s_t,a_t\right)\nonumber+\\ &\alpha\cdot\left(r_{t+1}+\gamma\cdot Q\left(s_{t+1},a_{t+1}\right)\right). \end{align}$ | (2) |

Algorithm 2 presents this Sarsa learning method. Q-learning and Sarsa will later be used in our experiments.

Algorithm 2. Sarsa

$Q=\mbox{Sarsa}(M,N,\epsilon)$

Input and output are the same as in Algorithm 1

1) Initialize $Q(s,a)$ arbitrarily

2) for $N$ learning episodes do

3) $t\leftarrow 0$ $ \triangleright {{\rm{s}}_{\rm{0}}}$ is the initial state

4) Select action $a_t$ using the $\epsilon$-greedy policy

5) while $s_t$ is not terminal do

6) Take action $a_t$; observe $r_{t+1}$ and $s_{t+1}$

7) Select action $a_{t+1}$ using the $\epsilon$-greedy policy

8) Update $Q(s_t,a_t)$ using Formula 2

9) $t\leftarrow t+1$

10) return $Q$

B. Options and Semi-Markov Decision Process SMDPQ-learning in its original form works on the finest granularity of the state-action space,i.e.,it focuses on every individual state-action pair. However practical problems often exhibit hierarchical structures and taking advantage of such hierarchies can often yield a significant gain in efficiency. Such an idea has been extensively studied under the topic of options and semi-Markov decision processes (SMDPs)[10]. The concept of option is an extension to that of primitive action. Formally,an option in MDP $M = < S,A,T,R > $ is defined as a triplet $o = {\rm{ }} < I,\pi ,\beta > $,where $I\subseteq S$ is the set of states in which the option can be initiated,$\pi: S\rightarrow\wp(A)$ is the policy during the execution of the option,and $\beta: S\rightarrow[0,1]$ is the termination condition defining the probability with which the option terminates in a given state $s$. Roughly speaking,an option can be viewed as a stored procedure that,when triggered,leads the agent into certain termination state ($s$).

SMDP is the extension of MDP with options. We omit the formal definition of SMDP here because it would be a distraction from our main focus in this paper. Nonetheless,the updating rule for learning SMDP is useful in our proposed method and thus is presented below

| $\begin{align} Q\left(s_t,a_t\right)\leftarrow&~~\left(1-\alpha\right)\cdot Q\left(s_t,o_t\right)+\alpha\cdot\sum_{i=1}^k\gamma^{i-1}r_{t+i-1}\nonumber+\\ &\alpha\cdot\gamma^k\max_{a\in O}Q\left(s_{t+k},a\right), \end{align}$ | (3) |

here $k$ is the time duration of option $o_t$. It is clear that (3) extends (1) in a natural way.

C. Transfer Learning and Our ProblemTransfer learning in general refers to any technique that uses past learning experience to speed up future learning. In this paper,we let this term refer to the technique that reuses learnt MDPs to speed up the learning in a new MDP. In this sense,an MDP is also a learning task. We will use these two terms interchangeably throughout the paper. Now we can formally describe the problem studied in this paper as follows.

Problem definition. Given a library $\mathcal{L}$ of source tasks,$M_1,M_2,\cdots,M_n$,and a target task $M$,our goal is to accelerate the reinforcement learning on $M$ by reusing knowledge extracted from $\mathcal{L}$. Especially,in this paper we require in addition that all source tasks and the target task share the same action set.

It should be noted that requiring all tasks to have the same action set does not compromise the generality of the problem very much. In fact,the constraint we impose on the problem naturally emerges from many practical learning tasks. For example,in a 2D maze game,the actions of an agent are always (typically) up,down,left,and right,regardless of the detailed maze structure (i.e.,the state space).

III. OUR METHODIn this section,we present our four-stage method for the learning task. We first manage to extract a set of local subgoals (i.e.,key states) from a source task (Section III-A). Then,we transform these local subgoals into reusable options (Section III-B). After that,on learning the target task,we manage to identify a set of states (or state-action pairs) for which some of those reusable options may be applied (Section III-C). Finally,we show how to use the reusable options to accelerate the learning (Section III-D).

Comparing to existing methods,our method has the following advantages:

1) Our proposed method only requires that the action sets be the same,and impose no limitation on the state sets,transition probabilities,or reward functions,and thus the method is widely applicable in various transfer learning scenarios.

2) The basic reusable units in our proposed method are options, which are,briefly speaking,fractional policies extracted from the source tasks. Therefore the target task can reuse multiple options and gain a significant acceleration.

3) Our proposed method is an automatic method that does not require any human intervention. Especially,it automatically discovers subgoals in the task library as well as the best matching between the target task and the reusable options.

A. Discovery of SubgoalsThe subgoal discovery phase in our proposed method aims at extracting a set of subgoals from the source library. Currently there are two classes of strategies that serve for this purpose. One is to use the frequency of states[11],and the other is to use the graph model[12]. Although they may achieve good performance in accuracy,methods based on graph models are typically much more time-consuming,and thus are not suitable for large MDPs. Hence in this paper we consider the state frequency as the indicator of subgoals. The rationale comes from the intuition that if a state is visited frequently then it is important and vice versa. (For example,imagine if there is a single state bridging two disjoint parts of the state space.)

However it is reported that frequency-based methods are sensitive to noise[13],which eventually harms the accuracy. Also,existing frequency-based methods merely take the global perspective of a state,i.e.,they only consider those states with globally highest frequencies. In this paper,we argue that an effective method should take advantage of both global and local perspectives. The idea is that if a state has a significantly higher visiting frequency than its neighbors then it should be regarded as important within its own neighborhood. We call such kind of states local subgoals. One may think of such local subgoals as the "entries" of traffic crosses in an MDP or "bridges" that connect different state subspaces.

To find all the local subgoals,we adopt a connection-based method[14]. Specifically,given an MDP $M = < S,A,T,R > $ and states $s,s'\in S$,we define

| $\begin{align} \delta\left(s,s'\right) = \max\left\{C(s)-C(s'),0\right\}, \end{align}$ | (4) |

where $C(\cdot)$ provides the visiting frequency. Briefly speaking $\delta\left(s,s'\right)$ measures the "exceeded" visiting frequency from state $s$ to state $s'$. Then,we measure the eligibility of a state $s$ being a local subgoal by summing up all the exceeded visiting frequencies from $s$ to its neighbors,weighted by the transition probability,as shown in (5)

| $\begin{align} \Delta(s) = \sum_{a\in A,s'\in S}\mbox{Pr}\{a|s\}T(s,a,s')\delta\left(s,s'\right). \end{align}$ | (5) |

Then we can give the definition of the relative subgoals.

States with high $\Delta$-values are those local subgoals we look for. Formally,given an MDP $M = < S,A,T,R > $ and an integer threshold $k$,a state $s\in S$ is a local subgoal if it satisfies the following two conditions:

1) $s\in\mbox{top}_k(S)$,where $\mbox{top}_k(S)$ is the set of $k$ states that have the largest $\Delta$-values;

2) $\Delta(s)>\sum_{a\in A,s'\in S}\mbox{Pr}\{a|s\}T(s,a,s')\Delta\left(s'\right)$.

Condition 1 guarantees that a local subgoal is also globally "not bad." Condition 2 says that the quality of a local subgoal should be larger than the quality of its neighbors. In a concrete situation,for example a maze game,this condition in fact says that when two bottleneck states are adjacent,then it is sufficient to take only one of them as a local subgoal,and therefore the set of extracted local subgoals is constrained within a reasonable size.

It remains to be clarified that how to obtain all the frequencies $C(\cdot)$ as well as the state-action probability $\mbox{Pr}\{\cdot|s\}$. In general,when positioned in an unknown environment,an agent will need excessive exploration of the environment,and then it will be able to distinguish bottlenecks and "regular" states. Based on this idea,we use a Monte Carlo sampling process to estimate the frequencies. We let the agent explore the environment (using an RL algorithm such as Q-learning) for $N$ episodes,where $N$ is a parameter related to the complexity of the environment and the expected running time. During the exploration,we collect all the non-circular trajectories from the starting state to the termination state. We then count,for each state $s$,how many times $s$ appears in these trajectories,and use the number of appearance as the estimation of $C(s)$. $\mbox{Pr}\{\cdot|s\}$ can also be estimated by counting the frequency of different actions in state $s$. The reason why we focus on non-circular trajectories is that,comparing to very complex trajectories with cycles,non-circular trajectories correspond to simpler (and thus better) ways to travel from the starting state to the termination state.

Algorithm 3 summarizes the procedure of subgoal discovery. Note that in addition to the set of subgoals,$G$,Algorithm 3 also produces the set $J$ of successful non-circular trajectories in the task MDP $M$. We shall use this set $J$ in the upcoming phase of option construction.

Algorithm 3. Subgoal discovery

$(G,J)=\mbox{SubgoalDiscovery}(M,N,k)$

Input. $M = < S,A,T,R > $ is a learning task; $N$ and $k$ are two integer thresholds

Output. 1) $G$,local subgoals in $M$; 2) $J$,successful non-circular trajectories

1) Use an RL algorithm (e.g.,Q-learning) to learn $M$ for $N$ episodes; record all successful non-circular trajectories in $J$

2) for each $s\in S$ do

3) Estimate the state frequency $C(s)$ by counting the number of appearance of $s$ in $J$

4) Estimate state-action probability $\mbox{Pr}\left\{\cdot\left|s\right.\right\}$

5) for each $s\in S$ do

6) Compute $\Delta(s)$ according to (4) and (5)

7) for each $s\in S$ do

8) if $s\not\in\mbox{top}_k(S)$ then continue

9) if $\Delta(s) > \sum_{a\in A,s'\in S}\mbox{Pr}\left\{a\left|s\right.\right\}T(s,a,s')\Delta(s')$

10) then add $s$ into $G$

11) return $G,J$

B. Construction of OptionsWe now construct reusable options using those discovered local subgoals. Basically,we construct options in such a way that every option can be thought of as a "guiding policy" towards some subgoal,and thus when reused,it may help to accelerate the learning process.

Algorithm4 summarizes the procedure of option construction. The procedure begins with extracting all the sub-trajectories from $J$,such that the starting state and the ending state of each sub-trajectory are both subgoals Lines 1$\sim$4. Note that such sub-trajectories correspond to (probably suboptimal but feasible) policies that guarantee the accomplishment of subgoals. Then,for each sub-trajectory we construct one option (Lines 5$\sim$10). Specifically,the initial set $I$ is the set of all the states along the sub-trajectory except for the termination subgoal (Line 7). The policy $\pi$ of the option is simply the sub-trajectory itself,i.e.,following the sub-trajectory to the terminating subgoal (Line 8). Finally,each option is further learnt in its own MDP $M$ for optimal Q-values of the termination subgoal (Lines 11 and 12). In this way,all the options are now ready to be transferred.

Algorithm 4. Option construction

$O = OptionConstruction(G,J)$Input.

$G$ and $J$ are the sets of subgoals and trajectories,respectively,of some underlying task $M$,they are the outputs of Algorithm 3 with the input $M$.Output. $O$,a set of options

1) $W\leftarrow\emptyset$

2) for each trajectory $\xi\in J$ do

3) Find $W_\xi$,the set of segments of $\xi$ where each $w\in W$ starts with some subgoal $g\in G$ and ends with another subgoal $g'\in G$

4) Add $W_\xi$ into $W$

5) for each segment $w\in W$ do

6) $\triangleright$ {Assume} $w=\left[s_1\xrightarrow{a_1}s_2\xrightarrow{a_2}\cdots\xrightarrow{a_{k-1}}s_k\right]$

7) $I\leftarrow\left\{s_1,s_2,\cdots,s_{k-1}\right\}$

8) for $i=1,2,\cdots,k-1$ do $\pi(s_i)\leftarrow a_i$

9) $\beta\leftarrow\{s_k\}$

10) Add option $o \leftarrow < I,\pi ,\beta > $ into $O$

11) for each option $o = < I,\pi ,\{ s\} > \in O$ do

12) Learn $M$ for optimal Q-values $Q(s,a)$ ($\forall a\in A$)

13) return $O$

C. Option SimilarityAs is explained before,upon learning a new task we want to reuse those options we obtained from source tasks. To do this,our basic idea is that we first let the learning agent explore the environment for a while,identifying a set of subgoals and constructing options,as what we have done for all the source tasks (see Section III-A and III-B). Then,since we have learnt the optimal Q-values for all the options in the source tasks (see Line 12 of Algorithm 4),we can reuse the optimal Q-values of a source option $o$ if it is "similar" to some target option $o'$. Intuitively,such reused Q-values could be a good "initial guess" for further optimization. The key issue here is how to measure the similarity between two different options. Note that according to the way of constructing options (Algorithm 4),an option can be illustrated as a chain of states linking one local subgoal to another. Therefore,the question essentially becomes how to measure the distance between two different chains of states.

We base our solution on the simple intuition that 1) similar chains should have similar lengths and 2) the states on two similar chains should be correspondingly similar. Note that such structural measurement has already been used to describe the homomorphism between MDPs[15]. One problem with homomorphism is that it requires strong conditions (in order to guarantee nice mathematical properties) and thus is not generally applicable[16]. Recall that our goal is to reuse options to accelerate the learning. To this end,we believe that a weaker (i.e.,with less mathematical properties) but generally applicable measurement is more preferable.

Let $M = < S,A,T,R > $ and $M' = < S',A',T',R' > $ be the source and target MDPs respectively. Let $o = < I,\pi ,\beta > $ and $o' = < I',\pi ',\beta ' > $ be two options constructed from the source and target MDPs respectively. Without loss of generality,let us assume that $|I'|\leq|I|$. In addition,assume for the moment that an injective mapping $f$ from $I'$ to $I$ is known,of which the functionality is to correlate similar states. We will explain the construction of $f$ shortly. To measure the distance between two states,$s$ and $s'$,we use the following formula with a positive (and typically small) thresholding parameter $\theta$:

| $\begin{align} d_\theta\left(s,s'\right)=\sum_{a\in A}\rho_\theta\left(\mbox{Pr}\{a|s\},\mbox{Pr}\{a|s'\}\right), \end{align}$ | (6) |

where $\rho_\theta\left(x,y\right)$ returns 0 if $|x-y|\leq\theta$ and 1 otherwise. The intuition behind (6) is that two states are similar if the actions on them are similar. $\rho_\theta$ is in fact used to penalize any dissimilarity.

Based on (6),the overall distance between two options $o$ and $o'$ can thus be defined as

| $\begin{align} D_\theta(o,o') = C_1\sum_{s'\in I'}d_\theta(f(s'), s')+C_2\left(\left|I\right|-\left|I'\right|\right). \end{align}$ | (7) |

The two additive terms in (7) have clear meanings. As the mapping $f$ matches some states into pairs and leaves others (if any) unmatched,the first term sums up the distance between every matched states,while the second term penalizes the degree of non-matching. $C_1$ and $C_2$ are two positive constants controlling the relative importance of the corresponding factors. The distance $D_\theta$ can be easily transformed into a similarity measure as

| $\begin{align} \mbox{sim}_\theta\left(o,o'\right)=\frac{1}{1+D_\theta\left(o,o'\right)}. \label{eq:sim} \end{align}$ | (8) |

We now explain how to obtain the mapping $f$ from $I'$ to $I$. First of all,given two MDPs $M = < S,A,T,R > $ and $M' = < S',A',T',R' > $,there are methods to automatically find the mapping. For example,Taylor et al. proposed a MASTER algorithm[13] to find one mapping between $S$ and $S'$,another between $A$ and $A'$. Their basic idea is to examine all the possible mappings and land on the ones with the minimum error. Secondly,it is also possible to derive the mapping $f$ from a priori domain knowledge. For example,in maze games,it is natural to map a termination state into a termination state since usually the agent receives positives rewards after it accomplishes some goal (or subgoal). The mapping can then propagate reversely along the two option chains. Last but not least,the mapping $f$ can also be determined manually,as is suggested by many researchers[17].

D. Reusing OptionsNow given an option $o$ in the target task $M = < S,A,T,R > $,we can find some similar option $o'$ from some source task $M' = < S',A',T',R' > $,

| $\begin{align} o' = \left[s_1\xrightarrow{a_1}s_2\xrightarrow{a_2}\cdots\xrightarrow{a_{k-1}}s_k\right] \label{eqn:option} \end{align}$ | (9) |

Note that (9) is merely another representation of the option $o' = < I',\pi ',\beta ' > $ where $I'=\left\{s_1,s_2,\cdots,s_{k-1}\right\}$,$\pi'(s_i)=a_i$ for $i=1,2,\cdots,k-1$,and $\beta'=\left\{s_k\right\}$. In addition to the states and actions,there are in fact two more pieces of knowledge about $o'$: (i) $r'_i=R'(s_i,a_i,s_{i+1})$,which can be estimated when taking sample episodes (Line 2 of Algorithm 3),and (ii) $Q'(s_k,a)$ for any valid action $a$ in state $s_k$,which is learnt already (Line 12 of Algorithm 4).

The next step is to inject such knowledge of $o'$ into the learning of $M$. The idea is straightforward,that whenever $o$ is initiated we use those $r'_i$ and $Q'(s_k,a)$ of $o'$ to update $Q(\cdot,o)$ in $M$. To implement the idea,we augment the action set $A$ to include options: an option $o = < I,\pi ,\beta > $ is valid in state $s\in S$ if $s\in I$. After the augmentation, the learning agent chooses actions as usual (using for example the $\epsilon$-greedy policy[1]). If in state $s_t$ the agent chooses a primitive action $a_t$,then $Q(s_t,a_t)$ is updated using (1) (direct learning without transfer). If the chosen action is an option $o_t$,and we can find a similar option $o'$ from the library,then we update $Q(s_t,o_t)$ using the following formula,

| $\begin{align} Q(s_t,o_t)\leftarrow&~(1-\alpha)\cdot Q(s_t,o_t) + \alpha\cdot\sum_{i=1}^k\gamma^{i-1}r'_{t+i-1}\nonumber+\\ &\alpha\cdot\gamma^k\max_{a\in \tilde{A}}Q'(f^{-1}(s_{t+k}),a), \label{eqn:tl-update} \end{align}$ | (10) |

where $f$ is the mapping discussed in Section II-B. Comparing to the rule of SMDP (3),(10) reuses past experience ($r'_{t+i-1}$ and $Q'$) to "skip" the learning of $o_t$.

Algorithm 5 shows the pseudo-code for option transfer. Lines 6$\sim$17 illustrate the transfer learning process. Note that before transfer learning,we preprocess the option set $O$ to exclude those options for which we cannot find any suitable counterpart in the source library (Line 4). Such preprocessing is important due to two reasons. On one hand,it guarantees that during the transfer learning,whenever an option is initiated we can always find a reusable source option (Line 15). On the other hand,it reduces the risk of negative transfer. When a negative transfer happens,the learning agent will not be able to benefit from the transfer. On the contrary,it will take the agent some extra effort to neutralize the effects. Finally,note that after the transfer learning process,a conventional learning method (such as Q-learning) is called to learn the optimal policy (Line 18).

Algorithm 5. Transfer learning

$Q = \mbox{TransferLearning}(\mathcal{L},M,\psi)$

Input. $\mathcal{L}$ is the library of reusable options, $M = < S,A,T,R > $ is the task to learn,$\psi$ is a positive threshold.

Output Q-values of $M$ implying the optimal policy

1) Apply Algorithms 3 and 4 to $M$ to get a set $O$ of options

2) for each option $o\in O$ do

3) Search $\mathcal{L}$ for the option $o'$ that is most similar to $o$

4) if $\mbox{sim}_\theta(o,o')<\psi$ then delete $o$ from $O$

5) Add $O$ to $A$,the action set of $M$

6) for a small number of episodes do

7) $s\leftarrow s_0$ $ \triangleright {s_0}$ is the initial state

8) while $s$ is not the termination state do

9) Select $a\in A$

10) if $a$ is a primitive action then

11) Execute $a$; observe the new state $s'$

12) Update $Q(s,a)$ using (1)

13) $s\leftarrow s'$

14) else

15) Retrieve the option $o$ that is similar to $a$

16) Update $Q(s,a)$ using (10)

17) $s\leftarrow\mbox{termination of~}a$

18) Learn $M$ until $Q$ converges

19) return $Q$

E. Maintenance of the Source LibrarySo far we have explained how to learn a target task $M$ faster with the aid of a source library $\mathcal{L}$. In practice,learning tasks come from time to time. After a target task $M$ is learnt,the learning experience (i.e.,options in our case) may be reused to speed up future tasks. Therefore from a systematic point of view,the library $\mathcal{L}$ is evolving over time and requires some maintenance.

We have two main concerns with respect to the maintenance of $\mathcal{L}$. One is the diversity of options and the other is the size of the library. Intuitively,on one hand,we expect the options in the library to be different from each other,so that an incoming option may have a higher chance to find some match in the library. On the other hand,the library should be controlled within a reasonable size,so that searching the library (Line 3 of Algorithm 5) will not become a bottleneck of the learning process.

Algorithm 6 shows in detail how we maintain the library. As is indicated in Lines 2 and 3,we do not add every option into the library; the option to be included should be sufficiently different from the existing options in the library. This principle leads also to Lines 5 and 6 when the library becomes too large: one of the two most similar options shall be expelled from the library.

Algorithm 6. Maintenance of the library

$\mbox{MaintainLibrary}(\mathcal{L},O,\mu,\kappa)$

Input. $\mathcal{L}$ is the library of source options; $O$ is a set of newly learnt options; $\mu$ is a positive threshold; $\kappa$ is the maximum size of the library.

1) for each option $o\in O$ do

2) if $\max_{o'\in\mathcal{L}}\mbox{sim}_\theta(o,o')<\mu$ then

3) Add option $o$ into $\mathcal{L}$

4) while $\left|\mathcal{L}\right|>\kappa$ do

5) $(o_1,o_2)\leftarrow\arg\max_{o,o'\in\mathcal{L}}\mbox{sim}_\theta\left(o,o'\right)$

6) Delete option $o_1$ from $\mathcal{L}$

IV. EXPERIMENTSIn this section,we experimentally evaluate our proposed method. As a point of reference we also implemented Q-learning,Sarsa,and SDHRL[18] (a subgoal-based learning method; will be briefly introduced in Section IV-B). We first compare our method with the competitors to show the effectiveness of our method (Section IV-C). Then,we study the sensitivity of our method against varying parameters (Section IV-D). All algorithms have been implemented in C programming language.

A. The Grid World and Maze GamesWe use the grid world domain to examine our method. Over decades,such a domain has been extensively used by many researchers as a benchmark to test RL algorithms[14]. Besides the popularity,we choose this domain also because it is easy to illustrate our idea. The subgoals and options we construct (Sections III-A and III-B) shall have clear physical meaning in such a domain,as we will explain shortly.

As is shown in Fig. 1,an instance of the grid world is a maze game where there is a rectangular area consisting of two different types of cells. White cells are accessible to the learning agent,whereas the black cells,which represent barriers,are inaccessible. Typically in each maze game,there is an initial position and a goal position. For example,in Fig. 1,the initial position is at the upper left corner (marked with "S") and the goal is,to the opposite,at the lower right corner (marked with "G").

|

Download:

|

| Fig. 1. Two sample maze games in the grid world domain. The left maze is simple, whereas the right one is more complicated. | |

A learning agent in a maze game should learn to travel from the initial position to the goal position. The travel has a cost which is in general proportional to length of the traveling trajectory. Therefore a maze game can be modeled as an MDP within which a learning agent will be motivated to find an optimal policy. In particular,the state space S is the set of all white cells. The action space A is the set of four possible actions up,down,left,and right. The transition probability T is set to be consistent with our intuition in general but with occasional disturbance. For example,when the agent at position "S" of Maze 1 (Fig. 2) takes the action down,then with probability 0.9 it will enter the cell right below "S",but with the rest probability of 0.1 it will be transported to a random neighboring cell of "S"1. Finally,the reward function R is set to be

|

Download:

|

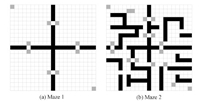

| Fig. 2. Local subgoals discovered in Maze 1 and Maze 2. | |

We fix the size of all the mazes to $21\times 21$,thus there are roughly 400 states in a maze.

B. The Competitor AlgorithmsTo our best knowledge,there is no prior work that solves exactly the same problem as we do in this paper,we compare our proposed method with most commonly-used existing methods. In particular,we compare our method with two non-transfer learning methods,Q-learning and Sarsa,as well as one transfer learning method,SDHRL.

Non-transfer learning competitors. We have introduced Q-learning and Sarsa as a piece of background knowledge in Section II-A. In our experiments,however,instead of the very basic versions introduced in Algorithms 1 and 2,we have actually implemented more advanced versions of these two algorithms,namely Q($\lambda$) and Sarsa($\lambda$). The advanced versions of these algorithms use eligibility traces to speed up the learning2. An eligibility trace is a record of certain events,such as visiting states or taking actions. One example eligibility trace $e(s,a)$ might look like the following.

1 Although it might seem counter intuitive,the uncertainty here is in fact

profound,in the sense that it compensates the somewhat optimistic reduction

of any practical (complex) environment into an MDP model.

2 The parameter , indicates the use of eligibility traces.

At every discrete time step $t$,the above eligibility trace reinforces $(s_t,a_t)$,the state-action pair occurred at $t$,while decaying (through a discount factor $\lambda$) all the other state-action pairs. The idea of using eligibility traces is to reward not only the immediate state-action pair (i.e.,$(s_t,a_t)$) but also the other state-action pairs that ever contributed to the learning episode. To avoid any distraction from our main focus in this paper,we omit the pseudocodes of Q($\lambda$) and Sarsa($\lambda$); they are,nonetheless,very similar to Algorithms 1 and 2,only with slight modifications in their update rules. The parameter $\lambda$ takes values from the range $[0,1]$. When $\lambda=0$,Q($\lambda$) and Sarsa($\lambda$) degenerates to Q-learning and Sarsa,as introduced in Section II-A.

A transfer learning competitor. In addition to the two conventional methods mentioned above,we also compare our algorithm with SDHRL[18],a well-known subgoal-based learning method. SDHRL works well in maze games with bottleneck states and it also discovers subgoals by searching a learned policy model for certain structural properties to accelerate learning in other tasks in which the same subgoals are useful. SDHRL uses Q-learning to learn the maze games for convergence,after the knowledge transfer.

There are several parameters in the above-mentioned learning algorithms. $\alpha$ is the learning rate and $\gamma$ defines the discounting factor,both used in update rules such as (1) and (2). Also,all the algorithms use the $\epsilon$-greedy policy (see Section II-A) to select actions,where $\epsilon$ indicates the exploration probability. Without any specific clarification,in our experiments we set $\alpha=0.05$,$\gamma=0.9$ and $\epsilon=0.1$ following the suggestions of some early studies in RL[1].

C. Quality of Subgoals and OptionsThe effectiveness of our transfer learning method relies greatly on the quality of subgoals and options. Hence we first examine the effectiveness of the subgoal discovery (Algorithm3) and option construction (Algorithm4). We carry out the experiment on Maze 1 of Fig. 2 and Maze 2 of Fig. 2. Parameters are set as follows: $N=20$,$k=100$,and $\epsilon=0.1$.

From Fig. 2,we can observe several interesting facts. Firstly,we can see that our method effectively identifies all the key states in both mazes. All the local subgoals are around some gateways through the barrier. These gateways are indeed important states in the mazes,because in order to travel from one region to another across the barrier,such gateways must be accessed. Secondly,we see that,instead of gateways themselves,our method favors pairs of states which can be interpreted as the entrances and exits of the gateways. We believe that,comparing to individual subgoals being landmarks,such entrance-exit pairs provide better guidance to learning agents. Especially,recall that we construct options exactly based on pairs of local subgoals (Line 3 of Algorithm 4). Last but not least,our method is robust against the variation of the environment. The method effectively identifies local subgoals in both simple and complex environments.

D. Effectiveness of Our Proposed MethodWe now compare our method against Q($\lambda$),Sarsa($\lambda$),and SDHRL. The methodology is as follows. We first randomly construct a series of mazes with increasing complexity (controlled by the number and positions of black cells). Then,we process these mazes using our proposed algorithm,i.e.,we first extract local subgoals,then construct the option library,and then learn these mazes. The parameters used during this off-line preparation are listed in Table I.

|

|

Table I PARAMETER SETTINGS |

After such an offline preparation,we learn (the simplest) Maze 1 and (the most complicated) Maze 2 online. We compare the online performance of our transfer learning algorithm (Algorithm 5) with the two non-transfer competitors,Q($\lambda$) and Sarsa($\lambda$),as well as the transfer learning competitor,SDHRL. The parameter $\psi$ at Line 4 of Algorithm 5 is set to 0.9. That is,an option is transferred only if the similarity is larger than 0.9. We believe that this value prevents negative learning. We run each algorithm in each maze for 5 times. In each run,there are 2000 episodes.

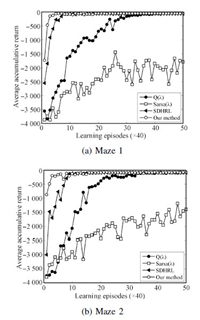

Fig. 3(a) and 3(b) show the comparison results on Maze 1 and Maze 2,respectively. The $x$-axis represents the number of learning episodes (and thus the lapse of time). For a fixed $x$-value,the corresponding $y$-value represents the average cumulative return.

|

Download:

|

| Fig. 3. Learning curves with respect to Maze 1 and Maze 2. | |

We see from the results that our method outperform Q($\lambda$),Sarsa($\lambda$) and SDHRL in both mazes. It converges very quickly in a few episodes and stick to the learnt optimal policy during the rest of the learning process. It is clear that both our method and SDHRL perform better than non-transfer methods Q($\lambda$) and Sarsa($\lambda$). In both mazes,Q($\lambda$) needs more than 1000 episodes to converge whereas this number for Sarsa($\lambda$) is even more than 2000 (i.e.,Sarsa($\lambda$) fails to converge under our experiment setting of 2000 learning episodes). In contrast,transfer learning methods (SDHRL and ours) converge to the optimal policy in merely a few dozens of episodes due to the transferred knowledge. Especially,our method has the fastest convergence speed,which is several times faster than SDHRL and roughly two orders of magnitudes faster than those non-transfer methods.

E. Parameter Sensitivity AnalysisAs has been stated above,all the algorithms studied in our experiments involve three learning parameters: 1) the learning

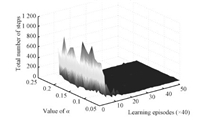

rate $\alpha$,2) the exploitation probability $\epsilon$ of the $\epsilon$-greedy policy,and 3) the eligibility trace decay factor $\lambda$. In all the experiments so far,we have set default $\alpha= 0.05$,$\epsilon = 0.1$,and $\lambda= 0$. Now we use the last set of experiments to show the sensitivity / stability of our method,i.e.,the resistance against any variation of the above-mentioned learning parameters. We carry out a set of three experiments to test the sensitivity; in each experiment we vary one of the three learning parameters and keep the other two fixed at their default values. We use the most complex maze,Maze 2 (Fig. 2),for all these experiments.

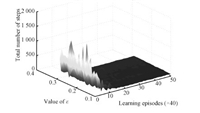

In the first experiment,we vary $\alpha$ from 0.05 to 0.2 with step size 0.01,while keeping $\epsilon$ and $\lambda$ at their default values,0.1 and 0,respectively. Fig. 4 shows the result of this experiment.

|

Download:

|

| Fig. 4. Learning curves of our method with different learning rates. | |

The $x$-axis ("Learning episodes") represents the number of learning episodes and thus the lapse of time. The $y$-axis ("Value of $\alpha$") represents the variation of the value of $\alpha$. The $z$-axis ("Total number of steps") shows the total number of steps the agent takes form the starting state "S" to the goal state "G". For a specific $(x,y,z)$,it means that under the parameter setting $\alpha=y$,the agent takes $z$ steps to arrive at the goal state during the $x$-th learning episode. As we can see,when $\alpha$ changes,there is no significant difference between the curves. After an initial vibration,all the curves rapidly converge to the optimal value.

The next experiment is conducted to test the stability of our method against $\epsilon$,the exploration probability in the $\epsilon$-greedy action selection policy. In this experiment,we fix $\alpha$ and $\lambda$ at 0.05 and 0 respectively,and vary $\epsilon$ from 0.1 to 0.3 with step size 0.01. Fig. 5 shows the result,which is very similar to the result of Fig. 5. In all cases,the learning curves converge very quickly.

|

Download:

|

| Fig. 5. Learning curves of our method with different $\epsilon$. | |

These results are very similar to those of Fig. 3(a) and 3(b). As in Fig. 3(a) and 3(b),the convergence of Sarsa($\lambda$) is slow here for either $\lambda = 0.1$ or $\lambda = 0.3$. It is clear that the relative performance as well as the converging trends in Fig. 6(a) and 6(b) (and also Fig. 3(a) and 3(b)) are basically the same. Note that the decay factor $\lambda$ or the eligibility trace mechanism is designed to improve the performance of basic RL algorithms such as Q-learning and Sarsa,thus the stability of our method against $\lambda$ indicates that our method is constantly better than those methods that rely on the eligibility trace mechanism.

|

Download:

|

| Fig. 6. Learning curves with respect to Maze 1 and Maze 2. | |

McGovern et al.[4] use multiple-instance learning (MIL) to find the subgoals and transfer their policies to target task to speed up the learning. The determination of the subgoals depends on the occurring frequencies of states in learning episodes. In particular,they consider both successful and unsuccessful learning trajectories. The subgoals are those states with the highest frequencies. However,as we have argued in Section III-A,the subgoals we really need are those bottleneck states which can be helpful in finding successful trajectory.

Lazaric et al.[3] select a most similar task from source tasks and transfer the policies to the target task to speed up the learning. By using the similarity between tasks,their algorithm chooses a most similar task from a learnt source tasks library,and then transfers the policy of the selected task to the target task. Our proposed method differs from their method,in that we are able to take advantage of multiple source tasks. We argue that two or more source tasks might be equally useful to a target task,and thus it is reasonable to consider not only the most similar task but also all the source tasks that are similar enough to the target task.

Ferns et al.[19] study the similarity measure between states in one MDP. The measure is built upon the Kantorovich distance (a.k.a earth mover’s distance,EMD) between the two transition probability distributions of two states in the same MDP. However,their distance measure is not desirable in our problem setting because of the following reasons. First of all,their distance measure requires complete knowledge about the environment (so that the distance can be calculated). This is impossible in our problem setting that the learning agent has no a priori knowledge about the incoming tasks. Second,their distance measure is only applicable to two states in the same MDP. This is because,for Kantorovich distance to make sense,the two probability distributions of which the distance is to be calculated must be defined upon the same set of "bins" (i.e.,must be defined upon the same domain). However,in our problem setting,we allow different state spaces in different tasks,therefore there is no common domain for different transition probability distributions in different tasks. Last but not least,it is well-known that computing Kantorovich distance is time-consuming. Sometimes it is even slower than learning from scratch,and therefore it cannot guarantee the acceleration of learning.

Mehta et al.[20] study transfer learning under the assumption that the tasks are identical except for the reward function. In their work,they view a reward function as a linear combination of rewarding features. They use hierarchical RL method to divide a task into subtasks,and then transfer knowledge between subtasks. Although the method is cheap in terms of time cost,in order for it to be applicable the task must have hierarchical structures. Moreover,the division of the hierarchical structure and the selection of the reward features much be known in advance and manually done.

VI. CONCLUSIONIn this paper,we study the problem of reinforcement learning transfer from a library of source tasks (to be precise,a library of reusable units). That being the purpose,we present a novel concept called local subgoals. We identify the local subgoals with two conditions. Using the local subgoals instead of the global ones,we are able to build a library of reusable options,which can aid the learning of new tasks. Upon learning a new task,we first find,within the source library,all the reusable options that are potentially useful for this task,during which process the key is our proposed novel similarity measure between options. We carry out extensive experimental studies,of which the results show that our method significantly outperforms the state-of-the-art methods.

In the future,we shall tackle more challenge tasks. For example,instead of the maze games in the grid world,we would like to study RL and transfer learning in practical domains such as computer video games. We would also like to consider other (and possibly better) similarity measures between options and MDPs. Last but not least,we are interested in maintaining the source task library from the data engineering perspective; we wish to efficiently maintain the library for a large amount of source tasks.

| [1] | Sutton R S, Barto A G. Reinforcement Learning: An Introduction. Cambridge, MA: The MIT Press, 1998. |

| [2] | Fernández F, García J, Veloso M. Probabilistic policy reuse for intertask transfer learning. Robotics and Autonomous Systems, 2010, 58(7): 866-871 |

| [3] | Lazaric A, Restelli M, Bonarini A. Transfer of samples in batch reinforcement learning. In: Proceedings of the 25th Annual International Conference on Machine Learning. . New York, NY: ACM, 2008544-551 |

| [4] | McGovern A, Barto A G. Automatic discovery of subgoals in reinforcement learning using diverse density. In: Proceedings of the 18th International Conference on Machine Learning. San Francisco, CA, USA: Morgan Kaufmann Publishers, 2001. 361-368 |

| [5] | Pan S J, Tsang I W, Kwok J T, Yang Q. Domain adaptation via transfer component analysis. IEEE Transactions on Neural Networks, 2011, 22(2): 199-210 |

| [6] | Taylor M E, Stone P. An introduction to intertask transfer for reinforcement learning. AI Magazine, 2011, 32(1): 15-34 |

| [7] | Zhu Mei-Qiang, Cheng Yu-Hu, Li Ming, Wang Xue-Song, Feng Huan-Ting. A hybrid transfer algorithm for reinforcement learning based on spectral method. Acta Automatica Sinica, 2012, 38(11): 1765-1776 (in Chinese) |

| [8] | Watkins C, Dayan P. Q-learning. Machine Learning, 1992, 8(3): 279-292 |

| [9] | Rummery G A, Niranjan M. On-line Q-learning using Connectionist Systems, Technical Report CUED/F-INFENG/TR 166, Department of Engineering, Cambridge University, Cambridge, UK, 1994. |

| [10] | Sutton R S, Precup D, Singh S. Between MDPs and semi-MDPs: a framework for temporal abstraction in reinforcement learning. Artificial Intelligence, 1999, 112(1): 181-211 |

| [11] | Stolle M, Precup D. Learning options in reinforcement learning. In: Proceedings of the 5th International Symposium on Abstraction, Reformulation and Approximation. Kananaskis, Alberta, Canada: Springer, 2002. 212-223 |

| [12] | Ö zgurü S, Alicia P W, Andrew G B. Identifying useful subgoals in reinforcement learning by local graph partitioning. In: Proceeding of the 22nd International Conference on Machine Learning. Bonn, Germany: ACM, 2005. 816-823 |

| [13] | Taylor M E, Kuhlmann G, Stone P. Autonomous transfer for reinforcement learning. In: Proceedings of the 7th International Joint Conference on Autonomous Agents and Multiagent Systems. Estoril, Portugal: ACM, 2008. 283-290 |

| [14] | Chen F, Gao F, Chen S F, Ma Z D. Connect-based subgoal discovery for options in hierarchical reinforcement learning. In: Proceedings of the 3rd International Conference on Natural Computation (ICNC002). Haikou, China: IEEE, 2007.698-702 |

| [15] | Ravindran B, Barto A G. An Algebraic Approach to Abstraction in Reinforcement Learning [Ph. D. dissertation], University of Massachusetts Amherst, USA, 2004 |

| [16] | Soni V, Singh S. Using homomorphisms to transfer options across continuous reinforcement learning domains. In: Proceedings of the 21st International Conference on Artificial Intelligence (AAAI006). Boston, Massachusetts: AAAI Press, 2006. 494-499 |

| [17] | Wolfe A P, Barto A G, Andrew G. Decision tree methods for finding reusable MDP homomorphism. In: Proceedings of the 21st International Conference on Artificial Intelligence (AAAI006). Boston, Massachusetts: AAAI Press, 2006. 530-535 |

| [18] | Goel S, Huber M. Subgoal discovery for hierachical reinforcement learning using learned policies. In: Proceedings of the 16th International FLAIRS Conference. California, USA: AAAI, 2003. 346-350 |

| [19] | Ferns N, Panangaden P, Precup D. Metrics for finite Markov decision processes. In: Proceeding of the 20th Conference on Uncertainty in Artificial Intelligence. Banff, Canada: AUAI Press, 2004. 162-169 |

| [20] | Mehta N, Natarajan S, Tadepalli P, Fern A. Transfer in variable-reward hierarchical reinforcement learning. Machine Learning, 2008, 73(3): 289-312 |

2014, Vol.1

2014, Vol.1