2. National Center for Mathematics and Interdisciplinary Sciences, Academy of Mathematics and Systems Science, Chinese Academy of Sciences, Beijing 100190, China;

3. Key Laboratory of Systems and Control, Academy of Mathematics and Systems Science, Chinese Academy of Sciences, Beijing 100190, China

Recent years have witnessed increasing research interests concerning the collective dynamics and distributed control in a variety of scenarios,including consensus,flocking and distributed optimization[1, 2, 3, 4, 5, 6, 7, 8]. In fact,distributed multi-agent optimization of a sum of convex functions has become a hot topic with various applications in sensor network,source localization,and robust estimation[9, 10, 11, 12].

The current literature offers a large body of work on designing discrete-time algorithms to find a solution of the distributed optimization problem,including the subgradient-based incremental methods with deterministic or randomized iteration,where each agent is able to compute a local subgradient of its own objective function[11, 12, 13, 14]. In addition,there are many non-subgradient-based methods. For instance,Gossip algorithms were developed to solve unconstrained optimization problems in [15, 16]. Moreover,an augmented Lagrangian method was proposed in [17].

In this study,we consider a multi-agent convex optimization problem,whose goal is to minimize the sum of local objective functions,subject to a global constraint. This problem is motivated by those in distributed source localization,network utility maximization,optimal flow control in power systems and so on[18, 19, 20]. A recent paper [21] studied the problem by proposing two distributed primal-dual subgradient algorithms. These algorithms are based on the characterization of the primal-dual optimal solutions as the saddle points of the Lagrangian (penalty) functions. From the optimization theory,we know that a vector $d$ can be selected as a feasible descent direction of differentiable function $f$ if it satisfies $\langle d,\nabla f\rangle<0$. Clearly,antigradient $-\nabla f$ is one of the feasible descent directions. However,in practical applications,it may be hard to obtain the exact gradient which,however can be computed approximately. Therefore,we propose an approximate gradient algorithm in this paper. The communication graph is assumed to be directed,switching,periodically strongly connected; we prove that the multi-agent system can achieve a global optimal consensus with some approximate gradient accuracy conditions.

This paper is organized as follows. In Section II,we introduce concepts and preliminary results in graph theory and convex analysis along with the formulation of the constrained distributed optimization problem under investigation. Then the approximate gradient algorithm is provided in Section III. The main results and convergence analysis are obtained for the considered algorithm in Section IV. Simulation results are provided in Section V. Finally, concluding remarks are made in Section VI.

II. PRELIMINARYIn this section,we review some useful related concepts in algebraic graph theory[22] and convex analysis[23],and then formulate the constrained distributed optimization problem under investigation.

A. Graph Theory and Convex AnalysisA directed graph is a pair,$\mathcal{G}=(\mathcal{V},\mathcal{E})$, where $\mathcal{V}=\{1$,$2$,$\cdots,N\}$ is the node set and $\mathcal{E}\subseteq \mathcal{V}\times \mathcal{V}$ is the edge set. Node $i$ is a neighbor of $j$ if $(i,j)\in \mathcal{E}$ and we use $\mathcal{N}_i$ to denote the set of neighbors of node $i$. A path of length $r$ from node $i_1$ to node $i_{r+1}$ is a sequence of $r+1$ distinct nodes $i_1,\cdots,i_{r+1}$ such that $(i_q,i_{q+1}) \in \mathcal{E}$ for $q=1,\cdots,r$. If there is a path between any two nodes ($i,j\in \mathcal{V}$),then $\mathcal{G}$ is said to be strongly connected.

A set $Q\subseteq {\bf R}^n$ is convex if $ax+(1-a)y\in Q$ for any $x,y \in Q$ and $0\leq a \leq 1$. For any $x\in {\bf R}^n$,there is a unique element $P_Q[x]$ $\in$ $Q$ satisfying $\|x-P_Q[x]\|= \inf_{y\in Q} \|x-y\|$,which is denoted by $|x|_Q$,where $Q \subseteq {\bf R}^n$ is a closed convex set,$P_Q$ denotes the projection operator onto $Q$ and $\|\cdot\|$ is the 2-norm in the Euclidean space. A function $f(\cdot): {\bf R}^n\rightarrow {\bf R}$ is said to be convex if $f(ax+(1-a)y)\leq af(x)+(1-a)f(y)$ for any $x,y \in {\bf R}^n$ and $0\leq a \leq 1$. Function $f$ is concave if $-f$ is convex. Vector $\xi \in {\bf R}^n$ is said to be a subgradient of convex function $f$ at $y\in {\bf R}^n$ if the first-order condition of convexity holds: \begin{align*} f(x)-f(y)\geq \langle\xi,x-y\rangle,\ \forall x\in {\bf R}^n, \end{align*} where $\langle \cdot,\cdot\rangle$ denotes the Euclidean inner product. The set of all subgradients of function $f$ at $y$ is denoted as $\partial f(y)$. Function $\mathcal{L}: X_1 \times X_2 \rightarrow {\bf R}$ with $X_1\subseteq {\bf R}^n$ and $X_2\subseteq {\bf R}^m$ being closed and convex,is convex-concave if it is convex on its being first argument and concave on the second one. Point $(x_1^*,x_2^*)\in X_1 \times X_2$ is a saddle point of $\mathcal{L}$ over $X_1 \times X_2$ if $$\mathcal{L}(x_1^*,x_2)\leq \mathcal{L}(x_1^*,x_2^*)\leq \mathcal{L}(x_1,x_2^*),\ \forall x_1\in X_1,\forall x_2\in X_2. $$

B. Problem Formulation

Consider a network composed of agents $\mathcal{V}=\{1,\cdots,N\}$

that can only interact with each other through local

communication. The objective of the multi-agent system is to

cooperatively solve the following optimization problem:

\begin{align}

\min_{x\in {\bf R}^n} \sum_{i=1}^N f_i(x) \quad \mbox{s.t.} \;\; g(x)\leq 0,\ x\in X,

\end{align}

(1)

${\bf Assumption 1.}$ There exists a vector,$\bar{x} \in X$,such that $g(\bar{x})$ $<$ $0$.

The communication over the multi-agent system at time $k$ $\geq$ $0$ can be described by a directed graph $\mathcal{G}_k=(\mathcal{V}, \mathcal{E}(k))$,where $\mathcal{E}(k)\subseteq \mathcal{V}\times\mathcal{V}$ represents the set of communication links at time $k$. For any two agents $i,j\in\mathcal{V}$,$j$ can get the information from $i$ at time $k$ if and only if $(i,j)\in \mathcal{E}(k)$. Let $a_{ij}(k)$ represent the weight of edge $(j,i)$ at time $k$,with the property that $a_{ij}(k)>0$ if $(j,i)\in \mathcal{E}(k)$,and $a_{ij}(k)=0$,otherwise. In this paper,we assume $(i,i)\in \mathcal{E}(k)$ for all $i$ and $k$. The following two assumptions on the interconnection graphs for the multi-agent network are widely used in the optimization computation[4, 11, 12].

${\bf Assumption 2}$ ${\bf (Weight rule).}$ There is $0< \eta < 1$ such that,for all $i,j\in \mathcal{V}$ and $k\geq 0$, $a_{ii}(k)\geq \eta$ and $a_{ij}\geq \eta$ if $a_{ij}(k)>0$. Moreover,$\sum_{i=1}^N a_{ij}(k)=\sum_{j=1}^N a_{ij}(k)=1$.

${\bf Assumption 3}$ ${\bf (Connectivity).}$ There is a positive integer $B$ such that the directed graph $(\mathcal{V}, \cup_{t=1}^B \mathcal{E}(k+t))$ is strongly connected for $k\geq 0$.

C. Approximate GradientSub-gradient methods have been widely used to solve the distributed optimization problems[11, 12, 21]. From the optimization theory,we know that vector $d$ can be selected as a feasible descent direction of differentiable function $h$ if $\langle d,\nabla h\rangle<0$. Obviously,$-\nabla h$ is one of the feasible descent directions. However in some practical applications,the exact gradient is hard to obtain but can be computed approximately.

Next,we give the definition of approximate gradient.

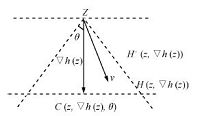

${\bf Definition 1.}$ For any $z$,denote $\nabla h(z)$ the exact gradient of differentiable function $h$ at $z$. By defining sets \begin{align*} C(z,\nabla h(z),\theta)&=z+\{v| \ \langle v,\nabla h(z)\rangle \geq \|v\| \|\nabla h(z)\| \cos \theta\};\nonumber\\ H^+(z,\nabla h(z))&=\{v| \ \langle-\nabla h(z),v-z-\nabla h(z)\rangle \geq 0\},\nonumber \end{align*} the approximate gradient ${\nabla}h^a(z,\theta)$ of point $z$ with approximate angle $\theta$ is defined as the following set: \begin{align*} {\nabla}h^a(z,\theta)= C(z,\nabla h(z),\theta)\cap H^+(z,\nabla h(z))-z. \end{align*}

In fact,$C(z,\nabla h(z),\theta)-z$ is a convex cone generated by all vectors having angles with $\nabla h(z)$ no greater than $\theta$,and $H^+(z,\nabla h(z))$ is a closed half-space (see Fig. 1 for illustration).

|

Download:

|

| Fig. 1.Illustration of the approximate gradient. | |

Define \begin{align*} H(z,\nabla h(z))=\{v| \ \langle\nabla h(z),v-z-\nabla h(z)\rangle=0\}. \end{align*} Then the supporting approximate gradient ${\nabla}h^{sa}(z,\theta)$ of point $z$ with approximate angle $\theta$ is defined as the following set: \begin{align*} {\nabla}h^{sa}(z,\theta)=C(z,\nabla h(z),\theta)\cap H(z,\nabla h(z))-z. \end{align*} According to Definition 1,for any $d^a\in {\nabla}h^a(z,\theta)$,we can associate $d^{sa}$ with $d^{sa}\in {\nabla}h^{sa}(z,\theta)$ such that $$ d^a=\zeta d^{sa} $$ for some $0\leq \zeta \leq 1$,where $\zeta$ is termed as norm relaxation.

III. APPROXIMATE GRADIENT ALGORITHMIn this section,we propose the approximate gradient algorithm for distributed convex optimization. This algorithm can be reduced to the "distributed penalty primal-dual subgradient algorithm" given in [21] when the approximate angle is zero and norm relaxation is equal to one.

At first,problem (1) is equivalent to

\begin{align}

\min_{x\in {\bf R}^n} \sum_{i=1}^N f_i(x) \quad \mbox{s.t.} \;\; Ng(x)\leq 0,\ x\in X.

\end{align}

(2)

\begin{align}

\max_{\lambda\in {\bf R}^m}q(\lambda) \quad \mbox{s.t.} \;\; \lambda\geq0.

\end{align}

(3)

The Slater$'$s condition can ensure the zero duality gap (that is, $p^*=q^*$) and the existence of the optimal solutions of the dual penalty problem (3) (that is,$D^*\neq \emptyset$). In addition, $(x^*,\lambda^*)\in X^*\times D^*$ if and only if it is a saddle point of the Lagrange penalty function $\mathcal{L}(x,\lambda)$ under Slater$'$s condition (see [21, 23] for the references therein). Let \begin{align*} \mathcal{L}_i(x,\lambda)=f_i(x)+ \langle{\lambda},[g(x)]^+ \rangle, \end{align*} which can be viewed as the Lagrange penalty function of agent $i$. In this way,we have $\mathcal{L}(x,\lambda)=\sum_{i=1}^N \mathcal{L}_i(x,\lambda)$ and $\mathcal{L}_i(x,\lambda)$ is convex in $x$ and concave (actually,linear) in $\lambda$.

The approximate gradient algorithm is described as follows:

\begin{align}

&v_x^i(k)=\sum_{j=1}^N a_{ij}(k)x_j(k),\notag\\

&v_{\lambda}^i(k)=\sum\limits_{j=1}^N a_{ij}(k) \lambda_j(k),\notag\\

&x_i(k+1)=P_{X}[v_x^i(k)-\alpha_k \mathcal{D}_{x}^i(k)],\notag\\

&\lambda_i(k+1)=v_{\lambda}^i(k)+\alpha_k \nabla_{\lambda}\mathcal{L}_i(v_x^i(k),v_{\lambda}^i(k)),

\end{align}

(4)

According to the definition of supporting approximate gradient, there exist $\delta_{ik}\in [0,1]$ and ${\nabla}\check{f}_i(v_x^i(k))\in \nabla f_i^{sa}(v_x^i(k),\theta_{ik})$ such that \begin{align*} \nabla \tilde{f}_i(v_x^i(k))=\delta_{ik} {\nabla}\check{f}_i(v_x^i(k)). \end{align*}

Let $\theta_k^+=\max_{i\in \mathcal{V}}\theta_{ik}$, $\delta_k^-=\min_{i\in \mathcal{V}} \delta_{ik}$ and $s(k)=\sum_{r=0}^k \alpha_r$,$k\geq 0$. For the approximate angle $\theta_{ik}$,step-size $\alpha_k$ and norm relaxation $\delta_{ik}$,we make the following assumptions:

${\bf Assumption 4}$ ${\bf (Approximate angle).}$ For every $i\in \mathcal{V}$,$0\leq \theta_{ik} \leq \theta^* < \pi/2$, $\forall k$.

${\bf Assumption 5}$ ${\bf (Step-sizes).}$ $\sum\alpha_k=\infty$, $\sum\alpha_k^2 <\infty$,$\sum{\alpha_k}\tan \theta_k^+ <\infty$, $\sum\alpha_{k+1}^2s^2(k)<\infty$, $\sum{\alpha_k}(1-\delta_k^-) <\infty$.

${\bf Remark 1.}$ In fact,the step-size and approximate angle conditions can be satisfied easily in various situations. For instance,$\alpha_k=\frac{1}{k+2}$,$\theta_{ik}=\frac{1}{k+2}$ and $\delta_{ik}=1-\frac{1}{k+2}$,$i\in \mathcal{V}$,$k$ $\geq$ $0$.

IV. CONVERGENCE ANALYSISIn this section,we establish some basic relations for the sequences $\{x_i(k)\}$ and $\{\lambda_i(k)\}$ obtained by the approximate gradient algorithm (4).

At first,we introduce a useful lemma in [24].

${\bf Lemma 1.}$ Let $\{a_k\}_{k=0}^{\infty}$ and $\{b_k\}_{k=0}^{\infty}$ be two non-negative sequences with $\sum_{k=0}^{\infty}b_k<\infty$. If $a_{k+1}\leq a_k+b_k$, $\forall k$ $\geq$ $0$,then $\lim_{k\rightarrow\infty}a_k$ is a finite number.

Next,we give the analysis for the proposed approximate gradient algorithm.

For any $x\in X $,by using the standard non-expansiveness property,it follows from (4) that \begin{align*} \|x_i(k+1)-x\|^2 =&\ \|P_{X}[v_x^i(k)-\alpha_k \mathcal{D}_{x}^i(k)]-P_{X}[x]\|^2\leq\\ &\ \|v_x^i(k)-\alpha_k \mathcal{D}_{x}^i(k)-x\|^2. \end{align*}

Let $d_x^i(k)={\nabla} f_i(v_x^i(k))+\langle v_{\lambda}^i(k),\mathcal{D} [{g}(v_x^i(k))]^+ \rangle$ and $d_{\lambda}^i(k)$ $=$ $\nabla_{\lambda} \mathcal{L}_i(v_x^i(k),v_{\lambda}^i(k))$. Due to the compactness of $X$,we have \[ c_0=\sup_{x,y \in X} \|x-y\| <\infty. \] Moreover,it follows from the continuity of $f_i$ and $[g(x)]^+$ that for any $x\in X$,there exists $L>0$ such that $\|[g(x)]^+\|\leq L$,$\|\mathcal{D} [g(x)]^+\|\leq L$ and $\|\nabla f_i(x)\|\leq L$,$i=1,\cdots,N$. From (4),we have $x_i(k)\in X$,then $v_x^i(k)\in X$,which implies $\|d_{\lambda}^i(k)\|$ $=$ $\|[g(v_x^i(k))]^+\|\leq L$, $\|\mathcal{D} [g(v_x^i(k))]^+\|\leq L$ and $\|\nabla f_i(v_x^i(k))\|$ $\leq$ $L$. Denote $M_{\lambda}(k)=\max_{i\in \mathcal{V}}\|\lambda_i(k)\|$,and then $\|d_x^i(k)\|$ $\leq$ $L(1+M_{\lambda}(k))$,$\|\mathcal{D}_x^i(k)\|\leq L(1+M_{\lambda}(k)+\tan\theta^*)$. Moreover,we obtain \[\|d_x^i(k)-\mathcal{D}_x^i(k)\| \leq L(\tan\theta_k^++(1-\delta_k^-)). \] Then we get \begin{align*} &\|x_i(k+1)-x\|^2\leq\\ &\qquad \bigg\|\sum_{j=1}^N a_{ij}(k)x_j(k)-x-\alpha_k \mathcal{D}_x^i(k)\bigg\|^2=\\ &\qquad\bigg\|\sum_{j=1}^N a_{ij}(k)x_j(k)-x\bigg\|^2+ \alpha_k^2\|\mathcal{D}_x^i(k) \|^2\;- \\ &\qquad 2\alpha_k\langle \mathcal{D}_x^i(k),v_x^i(k)-x\rangle \leq\\ &\qquad\sum_{j=1}^N a_{ij}(k)\|x_j(k)-x\|^2+2c_0L\alpha_k (1-\delta_k^-)\;+\\ &\qquad2c_0L\alpha_k \tan\theta_k^+ + 2L^2\alpha_k^2\big((1+ \tan\theta^* )^2+M_{\lambda}^2(k)\big) \;-\\ &\qquad2\alpha_k \langle d_x^i(k),v_x^i(k)-x\rangle. \end{align*} With a similar analysis,for any $\lambda \in {\bf R}_{\geq 0}^m$, \begin{align*} \|\lambda_i(k+1)-\lambda\|^2 =&\ \bigg\|\sum_{j=1}^N a_{ij}(k)\lambda_j(k)-\lambda+\alpha_k d_\lambda^i(k)\bigg\|^2\leq\\ &\ \sum_{j=1}^N a_{ij}(k)\|\lambda_j(k)-\lambda\|^2+ L^2\alpha_k^2\;+ \\ &\ 2\alpha_k \langle d_\lambda^i(k), v_\lambda^i(k)-\lambda\rangle. \end{align*} Based on the first-order convexity condition,it follows that $$ -\langle d_x^i(k),v_x^i(k)-x\rangle\leq \mathcal{L}_i(x,v_{\lambda}^i(k))-\mathcal{L}_i(v_{x}^i(k),v_{\lambda}^i(k)), $$ and $$ \langle d_\lambda^i(k),v_\lambda^i(k)-\lambda\rangle \leq \mathcal{L}_i(v_{x}^i(k),v_{\lambda}^i(k))-\mathcal{L}_i(v_{x}^i(k),\lambda). $$ Therefore,we have the following results.

${\bf Lemma 2.}$ For any $(x,\lambda)\in X \times {\bf R}_{\geq

0}^m$,the following inequalities hold:

\begin{align}

\sum_{i=1}^N &\|x_i(k+1)-x\|^2\leq \notag\\

&\quad\sum_{i=1}^N \|x_i(k)-x\|^2 +2NL^2\alpha_k^2\big((1+ \tan\theta^* )^2+M_{\lambda}^2(k)\big)+\notag\\

&\quad 2N c_0L\alpha_k \tan\theta_k^+ +2N c_0L\alpha_k (1-\delta_k^-)+\notag\\

&\quad

2\sum_{i=1}^N\alpha_k\big(\mathcal{L}_i(x,v_{\lambda}^i(k))-\mathcal{L}_i(v_{x}^i(k),v_{\lambda}^i(k))\big),

\end{align}

(5)

\begin{align}

\sum_{i=1}^N &\|\lambda_i(k+1)-\lambda\|^2\leq\notag\\

&\quad\sum_{i=1}^N

\|\lambda_i(k)-\lambda\|^2+NL^2\alpha_k^2+\notag\end{align}\begin{align}

&\quad2\sum_{i=1}^N

\alpha_k\big(\mathcal{L}_i(v_{x}^i(k),v_{\lambda}^i(k))-\mathcal{L}_i(v_{x}^i(k),\lambda)\big).

\end{align}

(6)

${\bf Lemma 3.}$ Let $\hat{x}(k)=\frac{1}{N} \sum_{i=1}^N x_i(k)$ and $\hat{\lambda}(k)=\frac{1}{N} \sum_{i=1}^N \lambda_i(k)$. If Assumptions 2$\sim$5 hold,then we have

1) $\sum{\alpha^2_{k}} {M_{\lambda}^2(k)}<\infty$;

2) $\lim_{k\rightarrow \infty} \|x_i(k)-\hat{x}(k)\|=0$,$ \lim_{k\rightarrow \infty} \|\lambda_i(k)-\hat{\lambda}(k)\|=0$, $i=1,\cdots,N$;

3) $\sum{\alpha_{k}}\|\hat{\lambda}(k)-v_{\lambda}^i(k)\|<\infty$, $\sum{\alpha_{k}}\|\hat{x}(k)-v_{x}^i(k)\|<\infty$, $\sum{\alpha_{k}} M_{\lambda}(k) \|\hat{x}(k)-v_{x}^i(k)\|<\infty$.

${\bf Proof.}$ 1) Since \begin{align*} \|\lambda_i(k+1)\|=&\ \|v_{\lambda}^i(k)+\alpha_{k} d_{\lambda}^i(k)\|\leq \\ &\ \|v_{\lambda}^i(k)\|+L\alpha_{k}\leq \\ &\ M_{\lambda}(k)+L\alpha_{k}, \end{align*} and \begin{align*} M_{\lambda}(k+1)&\leq M_{\lambda}(k)+L\alpha_{k}\leq M_{\lambda}(0)+Ls(k), \end{align*} it follows from Assumption $5$ that \begin{align*} &\sum_{k=0}^{\infty} \alpha_{k}^2{M_{\lambda}^2(k)} \leq \alpha_{0}^2 {M_{\lambda}^2(0)}+\\ &\qquad \sum_{k=0}^{\infty} 2\alpha_{k+1}^2(M_{\lambda}^2(0)+L^2s^2(k)) <\infty. \end{align*} Moreover,$\lim_{k\rightarrow \infty} \alpha_k M_{\lambda}(k)=0$.

2) Let $\Phi(k,s)=A(k)A(k-1)\cdots A(s)$,where

$A(k)=(a_{ij}(k))$,$k\geq s$. From Proposition 1 in [11],the

following result about transition matrices $\Phi(k,s)$ holds:

\begin{align}

\left|[\Phi(k,s)]_j^i-\frac{1}{N}\right|\leq \rho\beta^{k-s},\quad \forall i,j\in

\mathcal{V},\

k>s,

\end{align}

(7)

\begin{align}

&\|x_i(k+1)-\hat{x}(k+1)\| \leq\nonumber\\

&\qquad\rho\beta^k \sum_{j=1}^N \|x_j(0)\|+2L \alpha_{k}(1+M_{\lambda}(k) )\; +\nonumber\\

&\qquad2L \alpha_{k}\tan \theta_k^+ +N\rho L\sum_{s=0}^{k-1} \beta^{k-1-s}\alpha_{s}\tan \theta_s^+\; +\nonumber\\

& \qquad N\rho L\sum_{s=0}^{k-1} \beta^{k-1-s}\alpha_{s}(1+M_{\lambda}(s) ).

\end{align}

(8)

3) Multiplying both sides of inequality (8) by $\alpha_{k+1}$,we have \begin{align*} &\alpha_{k+1}\|x_i(k+1)-\hat{x}(k+1)\|\leq \\ &\quad\rho(\alpha_{k+1}^2\!+\!\beta^{2k})\sum_{j=1}^N \|x_j(0)\|\!+\!2L\alpha_{k+1}^2\!+\!2L\alpha_k^2(1\!+\! M_{\lambda}^2(k))\;+\\ &\quad2N\rho L \alpha_{k+1}^2\sum_{s=0}^{k-1}\beta^{k-1-s}\! +\!N\rho L \tan^2\theta^*\sum_{s=0}^{k-1}\beta^{k-1-s}\alpha_s^2\;+\\ &\quad2N\rho L \sum_{s=0}^{k-1} \beta^{k-1-s}\alpha_{s}^2(1+M_{\lambda}^2(s))+L\alpha_k^2 \tan^2\theta^*. \end{align*} Using Lemma 7 in [12] again,we obtain \begin{align*} \sum_{k=0}^{\infty}\alpha_{k}\|x_i(k)-\hat{x}(k)\|<\infty. \end{align*} Due to $\|\hat{x}(k)-v_{x}^i(k)\|\leq \max_{i\in \mathcal{V}}\|x_i(k)-\hat{x}(k)\|$,we have \begin{align*} \sum_{k=0}^{\infty}\alpha_{k}\|\hat{x}(k)-v_{x}^i(k)\| <\infty. \end{align*} Following the same technical line,we have \begin{align*} \sum_{k=0}^{\infty}\alpha_{k} M_{\lambda}(k)\|\hat{x}(k)-v_{x}^i(k)\| <\infty. \end{align*} Similar arguments can be employed to show that \begin{align*} \lim_{k\rightarrow \infty} \|\lambda_i(k)-\hat{\lambda}(k)\|=0,\; \sum_{k=0}^{\infty}\alpha_{k}\|\hat{\lambda}(k)-v_{\lambda}^i(k)\| <\infty. \end{align*}

${\bf Lemma 4.}$ Suppose Assumptions 1$\sim$5 hold. Let sequences $\{x_i(k)\}$ and $\{\lambda_i(k)\}$ be generated by the algorithm (4). For any $(x^*,\lambda^*)\in X^* \times D^*$,we have:

1) The limits,$\lim_{k\rightarrow\infty}\|x_i(k)-x^*\|$ and $\lim_{k\rightarrow\infty}\|\lambda_i(k)-\lambda^*\|$,are finite for all $i\in \mathcal{V}$;

2) $\lim_{k\rightarrow\infty}\frac{1}{s(k)}\sum_{r=0}^k \alpha_r\mathcal{L}(\hat{x}(r),\hat{\lambda}(r))=p^*$.

${\bf Proof.}$ 1) Let

$\mathcal{L}_i(v_x^i(k),v_{\lambda}^i(k))-\mathcal{L}_i(x,v_{\lambda}^i(k))=

E(k)$ $+$

$(\mathcal{L}_i(\hat{x}(k),\hat{\lambda}(k))-\mathcal{L}_i(x,\hat{\lambda}(k)))$,

where $E(k)=(\mathcal{L}_i(v_x^i(k)$,$v_{\lambda}^i(k))-

\mathcal{L}_i(\hat{x}(k),v_{\lambda}^i(k)))+(\mathcal{L}_i(\hat{x}(k),v_{\lambda}^i(k))

-\mathcal{L}_i(\hat{x}(k)$,$\hat{\lambda}(k)))$ $+$

$(\mathcal{L}_i(x,\hat{\lambda}(k))-\mathcal{L}_i(x,v_{\lambda}^i(k)))$.

Then we have the following inequality

\begin{align*}

\|E(k)\|\leq L(1+M_{\lambda}(k))\|v_x^i(k)-\hat{x}(k)\|+2L\|v_{\lambda}^i(k)-\hat{\lambda}(k)\|.

\end{align*}

For any $x^*\in X^*$,it follows from (5) that

\begin{align}

\sum_{i=1}^N &\|x_i(k+1)-x^*\|^2\leq \notag\\

&\qquad \sum_{i=1}^N \|x_i(k)-x^*\|^2+F(k) \;-\notag\\

&\qquad2\alpha_k\sum_{i=1}^N\big(\mathcal{L}_i(\hat{x}(k),\hat{\lambda}(k))-\mathcal{L}_i(x^*,\hat{\lambda}(k))\big),

\end{align}

(9)

With a similar analysis,for any $\lambda^*\in D^*$,it follows

from (6) that

\begin{align}

\sum_{i=1}^N &\|\lambda_i(k+1)-\lambda^*\|^2\leq\nonumber\\

&\qquad \sum_{i=1}^N \|\lambda_i(k)-\lambda^*\|^2+G(k)\;+\nonumber\\

&\qquad2\sum_{i=1}^N

\alpha_k(\mathcal{L}_i(\hat{x}(k),\hat{\lambda}(k))-\mathcal{L}_i(\hat{x}(k),\lambda^*)),

\end{align}

(10)

According to Lemma 3 and Assumption 5,we can get

$\sum_{k=0}^{\infty} F(k)<\infty$ and $\sum_{k=0}^{\infty}

G(k)<\infty$. Combining (9) and (10),we obtain:

\begin{align}

&\sum_{i=1}^N \big(\|x_i(k+1)-x^*\|^2+ \|\lambda_i(k+1)-\lambda^*\|^2\big)\leq \nonumber\\

&\qquad \sum_{i=1}^N \big(\|x_i(k)-x^*\|^2+ \|\lambda_i(k)-\lambda^*\|^2\big)+F(k)+G(k)\;+\nonumber\\

&\qquad2\alpha_k(\mathcal{L}(x^*,\hat{\lambda}(k))-\mathcal{L}(\hat{x}(k),\lambda^*)).

\end{align}

(11)

2) Summing the relations (11) over $k$,we obtain \begin{align*} 2 \sum_{r=0}^k &\alpha_r\big(\mathcal{L}(\hat{x}(r),\lambda^*)-\mathcal{L}(x^*,\hat{\lambda}(r))\big)\leq \end{align*}\begin{align*} &\quad\sum_{i=1}^N \big(\|x_i(0)-x^*\|^2+ \|\lambda_i(0)-\lambda^*\|^2\big)-\\ &\quad\sum_{i=1}^N \big(\|x_i(k+1)-x^*\|^2+ \|\lambda_i(k+1)-\lambda^*\|^2\big)\;+ \\ &\quad\sum_{r=0}^k (F(r)+G(r)). \end{align*} It can be verified that \begin{align*} \sum_{r=0}^\infty \alpha_r\big(\mathcal{L}(\hat{x}(r),\lambda^*)-\mathcal{L}(x^*,\hat{\lambda}(r))\big) <\infty. \end{align*} Since $\mathcal{L}(x^*,\hat{\lambda}(k))\leq \mathcal{L}(x^*,\lambda^*)$ and $\mathcal{L}(\hat{x}(k),\lambda^*)\geq \mathcal{L}(x^*,\lambda^*)$,we have \begin{align*} \sum_{r=0}^\infty \alpha_r\big(\mathcal{L}(\hat{x}(r),\lambda^*)-\mathcal{L}(x^*,\lambda^*)\big) <\infty, \end{align*} and \begin{align*} \sum_{r=0}^\infty \alpha_r\big(\mathcal{L}(x^*,\lambda^*)-\mathcal{L}(x^*,\hat{\lambda}(r))\big) <\infty. \end{align*} From (9),we obtain that \begin{align*} &\lim_{k\rightarrow\infty}\sup \frac{2}{s(k)} \sum_{r=0}^k \alpha_r(\mathcal{L}(\hat{x}(r),\hat{\lambda}(r))-p^*)\leq \\ &\qquad\lim_{k\rightarrow\infty}\sup \frac{2}{s(k)} \sum_{r=0}^k \alpha_r(\mathcal{L}(\hat{x}(r),\hat{\lambda}(r))-\mathcal{L}(x^*,\hat{\lambda}(r)))\;+\\ &\qquad\lim_{k\rightarrow\infty}\sup \frac{2}{s(k)} \sum_{r=0}^k \alpha_r(\mathcal{L}(x^*,\hat{\lambda}(r))-p^*) \leq \end{align*} \begin{align*} &\qquad\lim_{k\rightarrow\infty}\sup \frac{1}{s(k)}\bigg(\sum_{i=1}^N \|x_i(0)-x^*\|^2+\sum_{r=0}^k F(r)\bigg)+\\ &\qquad\lim_{k\rightarrow\infty}\sup \frac{2}{s(k)} \sum_{r=0}^k \alpha_r(\mathcal{L}(x^*,\hat{\lambda}(r))-p^*) \leq 0. \end{align*} Then we get \begin{align*} \lim_{k\rightarrow\infty}\sup \frac{1}{s(k)}\sum_{r=0}^k \alpha_r\mathcal{L}(\hat{x}(r),\hat{\lambda}(r))\leq p^*. \end{align*} With the similar analysis of (10),we can obtain \begin{align*} \lim_{k\rightarrow\infty}\inf \frac{1}{s(k)}\sum_{r=0}^k \alpha_r\mathcal{L}(\hat{x}(r),\hat{\lambda}(r))\geq p^*. \end{align*} Hence,$\lim_{k\rightarrow\infty}\frac{1}{s(k)}\sum_{r=0}^k \alpha_r\mathcal{L}(\hat{x}(r)$,$\hat{\lambda}(r))= p^*$.

${\bf Theorem 1.}$ Consider the problem (1),and let the sequence $\{x_i(k)\}$ be generated by the approximate gradient algorithm (4). Under Assumptions 1$\sim$5,if the primal optimal set $X^* $ has an interior point,then there exists $\tilde{x}\in X^*$ such that $\lim_{k\rightarrow \infty} x_i(k)=\tilde{x}$,$i\in \mathcal{V}$.

${\bf Proof.}$ For any interior point $\bar{x}\in X^* $,there exists an open sphere $S_1$ centered at $\bar{x}$ such that $S_1\subseteq X^*$. For any $x\in S_1$,from Lemma 4,we have $\lim_{k\rightarrow \infty} \|x_i(k)-{x}\|$ exists. It can be verified that there exists $\tilde{x}\in X$ such that $\lim_{k\rightarrow \infty} x_i(k)$ $=$ $\tilde{x}$,$i\in \mathcal{V}$. Following the analogous line to Claim 4 in [21],we can show that $\tilde{x}\in X^*$. Thus,the conclusion follows.

${\bf Remark 2.}$ The main difference between the approximate gradient algorithm in this paper and the algorithm in [21] is that we take the gradient computation errors into account and propose a new approximate algorithm.

V. SIMULATIONIn this section,we give simulation results to illustrate the distributed approximate gradient algorithm proposed in this paper.

${\bf Example 1.}$ Consider a group of three agents with the

switching interaction topologies to cooperatively solve the problem

(1). Let $g(x,y)=(x-y)^2-4$,constraint set $X=$ $[-2,2]$ $\times$

$[-2,2]\subseteq{\bf R}^2$,$f_i(x,y)=f_i(x)+f_i(y)$,$i=1,2,3$,

where

\begin{align}

f_1(x)&=\left\{\begin{array}{ll}

(x-2)^2,& x\geq 2,\\

0, & -2< x< 2,\\

(x+2)^2,& x\leq -2,

\end{array}\right.\nonumber\\[2mm]

f_1(y)&=\left\{\begin{array}{ll}

(y-2)^2,& y\geq 2,\\

0, & -2< y< 2,\\

(y+2)^2,& y\leq -2,

\end{array}\right.\nonumber\\[2mm]

f_2(x)&=\left\{\begin{array}{ll}

x^3-3x,&x \geq 1,\\

-2, & -1< x< 1,\\

x^3-3x-4,& x\leq -1,

\end{array}\right.\nonumber\\[2mm]

f_2(y)&=\left\{\begin{array}{ll}

(y-1)^2,& y\geq 1,\\

0, & -1< y< 1,\\

(y+1)^2,& y\leq -1,

\end{array}\right.

\end{align}

(12)

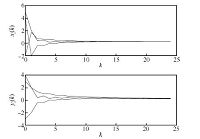

The topology ${\cal G}$ is switched between graphs ${\cal G}_i$, $i=1,2$,where ${\cal G}(2k)={\cal G}_1$ and ${\cal G}(2k+1)={\cal G}_2$ for $k=0,1,\cdots$. The corresponding adjacency matrices are described by $A_1$ and $A_2$: \begin{align*} A_1=\left(\begin{array}{ccc} 0.7&0.3&0\\ 0.3&0.7&0\\ 0&0&1 \end{array}\right),\quad A_2=\left(\begin{array}{cccc} 0.6&0&0.4\\ 0&1&0\\ 0.4&0&0.6 \end{array}\right). \end{align*} The convergence processes of algorithm (4) are shown in Fig. 2. Here we take step-sizes $\alpha_k={1}/({k+2})$,norm relaxation $\delta_{ik}=({k+1})/({k+2})$,and $\nu_{ik}={1}/({k+2})$,where $\nu_{ik}$ is the angle between the exact gradient and the approximate gradient for agent $i$ at time $k$.

|

Download:

|

| Fig. 2.The convergence of approximate gradient algorithm. | |

Denote $(x_i(k),y_i(k))$ the estimate of the optimal solution of the problem (1) for agent $i$ at time $k$. From Fig. 2,we can see that all the agents converge to the same optimal point.

VI. CONCLUSIONSThis paper investigated a multi-agent optimization problem where each agent aims to minimize the sum of local objective functions subject to a global inequality constraint and a global constraint set. Based on the distributed subgradient methods,we proposed an approximate gradient algorithm when each agent does not have the exact gradient. The conditions were obtained for the approximate gradient algorithm on how large the gradient errors can be tolerated to ensure the convergence of the proposed algorithm. More generalized and interesting cases on approximate gradient algorithms are still under investigation.

| [1] | Shi G D, Johansson K H, Hong Y G. Reaching an optimal consensus:dynamical systems that compute intersections of convex sets. IEEE Transactions on Automatic Control, 2013, 58(3):610-622 |

| [2] | Shi G D, Johansson K H. Randomized optimal consensus of multi-agent systems. Automatica, 2012, 48(12):3018-3033 |

| [3] | Lou Y C, Hong Y G. Target containment control of multi-agent systems with random switching interconnection topologies. Automatica, 2012, 48(5):879-885 |

| [4] | Lou Y C, Shi G D, Johansson K H, Hong Y G. Reaching optimal consensus for multi-agent systems based on approximate projection. In:Proceedings of the 10th World Congress on Intelligent Control and Automation (WCICA). Beijing, China:IEEE, 2012. 2794-2800 |

| [5] | Ren W, Beard R. Consensus seeking in multi-agent systems under dynamically changing interaction topologies. IEEE Transactions on Automatic Control, 2005, 50(5):655-661 |

| [6] | Olfati-Saber R. Flocking for multi-agent dynamic systems:algorithms and theory. IEEE Transactions on Automatic Control, 2006, 51(3):401-420 |

| [7] | Tang Y, Hong Y. Hierarchical distributed control design for multi-agent systems using approximate simulation. Acta Automatica Sinica, 2013, 39(6):868-874 |

| [8] | Chen J, Yu M, Dou L, Gan M. A fast averaging synchronization algorithm for clock oscillators in nonlinear dynamical network with arbitrary time-delays. Acta Automatica Sinica, 2010, 36(6):873-880 |

| [9] | Rabbat M, Nowak R. Distributed optimization in sensor networks. In:Proceedings of the 3rd International Symposium on Information Processing of Sensor Networks. Berkeley, CA:IEEE, 2004. 20-27 |

| [10] | Wan P, Lemmon M D. Event-triggered distributed optimization in sensor networks. In:Proceedings of the 2010 International Conference on Information Processing of Sensor Networks. San Francisco, CA:IEEE, 2010. 49-60 |

| [11] | Nedic A, Ozdaglar A. Distributed subgradient methods for multi-agent optimization. IEEE Transactions on Automatic Control, 2009, 54(1):48-61 |

| [12] | Nedic A, Ozdaglar A, Parrilo P A. Constrained consensus and optimization in multi-agent networks. IEEE Transactions on Automatic Control, 2010, 55(4):922-938 |

| [13] | Nedic A, Ozdaglar A. Subgradient methods for saddle-point problems. Journal of Optimization Theory and Applications, 2009, 142(1):205-228 |

| [14] | Johansson B, Rabi M, Johansson M. A randomized incremental subgradient method for distributed optimization in networked systems. SIAM Journal on Control and Optimization, 2009, 20(3):1157-1170 |

| [15] | Lu J, Tang C Y, Regier P R, Bow T D. Gossip algorithms for convex consensus optimization over network. IEEE Transactions on Automatic Control, 2011, 56(12):2917-2923 |

| [16] | Lu J, Regier P R, Tang C Y. Control of distributed convex optimization. In:Proceedings of the 49th IEEE Conference on Decision and Control. Atlanta, GA:IEEE, 2010. 489-495 |

| [17] | Jakovetic D, Xavier J, Moura J M. Cooperative convex optimization in networked systems:augmented Lagrangian algorithm with directed gossip communication. IEEE Transactions on Signal Processing, 2011, 59(8):3889-3902 |

| [18] | Rabbat M, Nowak R. Decentralized source localization and tracking. In:Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP, 2004). Montreal, Que:IEEE, 2004. 921-924 |

| [19] | Kelly F, Maulloo A, Tan D. Rate control for communication networks:shadow prices, proportional fairness and stability. Journal of the Operational Research Society, 1998, 49(3):237-252 |

| [20] | Oliveira A R L, Soares S, Nepomuceno L. Optimal active power dispatch combining network flow and interior point approaches. IEEE Transactions on Power Systems, 2003, 18(4):1235-1240 |

| [21] | Zhu M H, Martinez S. On distributed convex optimization under inequality and equality constraints. IEEE Transactions on Automatic Control, 2012, 57(1):151-164 |

| [22] | Godsil C, Royle G. Algebraic Graph Theory. New York:Springer-Verlag, 2001 |

| [23] | Bertsekas D P, Nedic A, Ozdaglar A. Convex Analysis and Optimization. Belmont, MA:Anthena Science, 2003 |

| [24] | Poljak B T. Introduction to Optimization. New York:Optimization Software Inc., 2001 |

2014, Vol.1

2014, Vol.1