2. Department of Electrical and Computer Engineering, National University of Singapore, Singapore, Singapore

For target tracking using mobile sensor networks, the placement of the sensors relative to the target can significantly affect the tracking performance. What is the optimal sensor placement that can minimize target tracking uncertainty? This problem is of both theoretical and practical importance.

There are generally two kinds of mathematical formulations for optimal sensor placement problems in the literature: one is optimal control[1, 2, 3],and the other is parameter optimization[4, 5, 6, 7, 8, 9, 10, 11]. The optimal control formulation considers sensor placement and target tracking as a whole. The formulation is also referred to simultaneous localization and planning (SLAP)[2]. As a comparison,the parameter optimization formulation only considers the sensor placement part and does not consider the target tracking part. It is assumed that an initial target estimate has already been obtained in other ways. Then the optimal sensor placement is determined based on this initial target estimate.

In fact,the above two kinds of formulations lead to consistent results. For example,the work in [2] observed that when two sensors approach a target,the target information would be maximized when the angle subtended at the target by the two sensors is 90~deg. This observation actually has been analytically proved as an optimality condition based on the parameter optimization formulation in [4,6- 7,13-14].

It should be noted that analytical conclusions can be drawn based on the parameter optimization formulation,while only numerical results can be obtained from the optimal control formulation. Considering the analytical conclusions can provide us important insights into the effects of sensor placements on target tracking,we will adopt the parameter optimization formulation to study optimal sensor placement in this paper.

Although optimal sensor placement based on the parameter optimization formulation has been investigated extensively,most of the existing results are only applicable to 2D cases[4, 5, 6, 7, 8, 10]. Since many practical applications require 3D optimal sensor placements,the general results for 3D cases will be of great importance from the practical point of view. One main difficulty to analyze 3D optimal placements is that the complexity of the conventional objective function,the determinant of the Fisher information matrix (FIM),increases dramatically as the dimension increases from two to three. Motivated by that,a new objective function,which is analytically tractable in both 2D and 3D cases, has been proposed in [13]. By employing recently developed frame theory,the work in [13] successfully proved the necessary and sufficient conditions of optimal sensor placements in both 2D and 3D spaces. It is shown that the analytical results obtained based on the new objective function are consistent with the existing 2D results in the literature. Moreover,the work in [13] proposed a unified framework for analyzing the optimal placement problems of bearing-only,range-only and received-signal-strength sensor networks.

In addition to analytically determining optimal sensor placements, it is also practically important to study how to autonomously deploy the optimal placements. There are generally three approaches to autonomous optimal sensor deployment. 1) One approach is to use the optimal control formulation mentioned above. 2) The second approach is to develop numerical methods to solve the parameter optimization formulation. For example,by numerically maximizing the determinant of the FIM,sensor trajectory optimization algorithms can be obtained[3, 9]. Compared to the optimal control approach,the second one is easy to be designed and implemented,and various constraints of sensor trajectories can be easily included. 3) The third approach is to design autonomous deployment algorithms based directly on analytical results of optimal sensor placement. For example,the work in [7] proposed a distributed motion coordination algorithm to autonomously deploy sensors for target tracking. Loosely speaking,that algorithm is designed based on the fact that a placement is optimal if range-only sensors are uniformly distributed around the target. However,one limitation of the approach used in [7] is that the uniformly distributed placement is merely a special case as pointed out by [13,~Section~5.2]. There are an infinite number of optimal placements that can optimize the objective function.

These optimal placements may be more appropriate than the uniformly distributed one given certain initial placements and sensor trajectory constraints. More importantly,the uniformly distributed placement is optimal only if the sensors are equally weighted [13,Section 5.2]. If some of the sensors can provide more accurate measurements than the others,the uniformly distributed one will no longer be optimal. With the above analysis,we will adopt the second numerical optimization approach to solve autonomous sensor deployment problems in this paper.

Based on the analytical results in [13],this work proposes a control strategy to autonomously deploy optimal placements of range-only sensors in 2D and 3D spaces. One main contribution of this work is that the control strategy can handle 3D autonomous deployment problems while the existing results are only applicable to 2D cases[1, 2, 3, 7, 9]. The objective function to be minimized is composed of two terms: an inter-sensor potential and an external potential. The inter-sensor potential actually is the frame potential among the sensors[13]; the sensor trajectory constraints are formulated as the external potential. A gradient control law is used to numerically to minimize the objective function such that an optimal placement can be achieved while the sensor trajectory constraints can be satisfied in the meantime. Since artificial potential approaches can handle various issues such as obstacle avoidance and collision avoidance among sensors, the proposed control strategy provides a flexible solution to practical autonomous optimal sensor deployment problems. In this paper,we further apply the control strategy to several specific scenarios. Simulation results verify the effectiveness of the proposed control strategy. It is also illustrated how the control strategy can improve target tracking performance.

II. OPTIMAL SENSOR PLACEMENTConsider one target in ${\bf R}^d$ where $d=2$ or 3. Suppose there are $n$ ($n\ge d$) range-only sensors. Following the existing work [4, 5, 6, 7],we assume an initial target position estimate $p$ $\in$ ${\bf R}^d$ is available already. The optimal placement will be determined based on $p$.

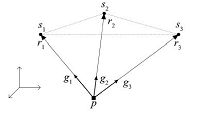

Denote $s_i\in{\bf R}^d$ as the position of sensor $i$ ($i\in\{1,\cdots,n\}$). The relative position of sensor $i$ with respect to the target is $r_i=s_i-p$. Then $\{r_i\}_{i=1}^n$ can fully describe the sensor-target placement. The unit vector \begin{align*} g_i=\frac{r_i}{\|r_i\|} \end{align*} represents the bearing of sensor $i$ relative to the target,where $\|\cdot\|$ denotes the Euclidean norm of a vector or the Frobenius norm of a matrix. The above notations are illustrated by Fig. 1.

|

Download:

|

| Fig. 1.A 3D sensor placement. | |

The measurement model of sensor $i$ is expressed as

\begin{align*}

m_i=h_i(p)+w_i,

\end{align*}

where

\begin{align}\label{eq_rangeOnly_measurement_model}

h_i(p)=\|p-s_i\|,

\end{align}

(1)

\begin{align}\label{eq_FIM}

F

= \sum_{i=1}^n \left(\frac{\partial h_i}{\partial p}\right)^{\rm T} \Sigma_i^{-1} \frac{\partial h_i}{\partial

p},

\end{align}

(2)

\begin{align}\label{eq_FIM_rangeOnly}

F=\sum_{i=1}^n c_i^2 g_i g_i^{\rm T},

\end{align}

(3)

The conventional optimization criterion for optimal sensor placement is to maximize $\det F$,which can be interpreted as maximizing the target information obtained by the sensors. This criterion,however,is not applicable to 3D cases as $\det F$ is not analytically tractable in 3D cases. Motivated by that,the work in [13] proposed a new objective function. Let $\{\lambda_i\}_{i=1}^d$ be the eigenvalues of $F$. Denote ${\bar{\lambda}}= 1/d \sum_{i=1}^d \lambda_i$. Since $\sum_{i=1}^d \lambda_i= {\rm tr} F$,it is easy to obtain $\bar{\lambda}$ $=$ $1/d\sum_{i=1}^n c_i^2$. The new objective function is defined as $\|F-\bar{\lambda}I_d\|^2$,where $I_d\in{\bf R}^{d\times d}$ is the identity matrix. Minimizing $\|F-\bar{\lambda}I_d\|^2$ is to minimize the diversity of the eigenvalues of $F$. A detailed discussion on the relationship between the new and the conventional objective functions is given in [13,Section 3.2].

A sensor placement is called optimal if it minimizes the objective function $\|F-\bar{\lambda}I_d\|^2$. By [13,Lemma 3.3],a placement is optimal if and only if it minimizes $\|G\|^2$,where \begin{align*} G=\sum_{i=1}^n c_i^2 g_ig_i^{\rm T}. \end{align*} By employing recently developed frame theory[15],necessary and sufficient conditions of optimal placements that minimize $\|G\|^2$ are obtained as below[13].

${\bf Definition 1}$ ${\bf (Irregularity).}$ For any positive non-increasing sequence $\{c_i\}_{i=1}^n$ with $c_1\ge \cdots \ge c_n>0$,and any integer $d$ satisfying $1\le d \le n$,denote $k_0$ as the smallest nonnegative integer $k$ for which \begin{align*} c_{k+1}^2 \le \frac{1}{d-k}\sum_{i=k+1}^n c_i^2. \end{align*} The integer $k_0$ is called the irregularity of $\{c_i\}_{i=1}^n$ with respect to $d$. If $k_0=0$,the sequence $\{c_i\}_{i=1}^n$ is called regular; otherwise,it is called irregular.

${\bf Theorem 1}$ ${\bf (Regular optimal placement).}$ In ${\bf R}^d$

with $d=2$ or $3$,if the positive coefficient sequence

$\{c_i\}_{i=1}^n$ is regular,then the objective function $\|G\|^2$

satisfies

\begin{align}

\|G\|^2 \ge \frac{1}{d} \left( \sum_{i=1}^n c_i^2

\right)^2.

\end{align}

(4)

\begin{align}

\sum_{i=1}^n c_i^2g_ig_i^{\rm T}=\frac{1}{d}\sum_{i=1}^n c_i^2

I_d.

\end{align}

(5)

${\bf Theorem 2}$ ${\bf (Irregular optimal placement).}$ In ${\bf R}^d$

with $d=2$ or $3$,if the positive coefficient sequence

$\{c_i\}_{i=1}^n$ is irregular with irregularity as $k_0\ge1$,

without loss of generality,$\{c_i\}_{i=1}^n$ can be assumed to be a

non-increasing sequence,and then the objective function $\|G\|^2$

satisfies

\begin{align}\label{eq_lowerboundIrregular}

\|G\|^2 \ge \sum_{i=1}^{k_0} c_i^4 + \frac{1}{d-k_0}\left( \sum_{i=k_0+1}^n c_i^2

\right)^2.

\end{align}

(6)

\begin{align}\label{eq_irregularOptimalPlacement}

\{g_i\}_{i=1}^n=\{g_i\}_{i=1}^{k_0} \cup

\{g_i\}_{i=k_0+1}^n,

\end{align}

(7)

Based on (5) and (7),optimal placements can be analytically constructed. The lower bounds of the objective function $\|G\|^2$ given in (4) and (6) are useful for numerically determining the optimality of a placement. The optimality error defined as the difference between $\|G\|^2$ and its lower bound can be used as a numerical indicator to evaluate the optimality of the placement.

Recall $F$ given in (3) is a function of the sensor-target bearings $\{g_i\}_{i=1}^n$,and irrelevant to the sensor-target ranges $\{\|r_i\|\}_{i=1}^n$. The necessary and sufficient conditions given in Theorems 1 and 2 are the conditions of $\{g_i\}_{i=1}^n$ only. As a result,the sensor-target ranges have no influence on the optimality of a placement. This feature is unique for range-only sensors. For other types of sensors such as bearing-only or received-signal-strength based sensors,the optimal placements will be influenced by sensor-target ranges[13].

III. AUTONOMOUSLY DEPLOY OPTIMAL SENSOR PLACEMENTIn practical applications,it is usually required to autonomously deploy an optimal sensor placement instead of manually deploying. In this section,we present a control strategy which can steer mobile sensors from an initial non-optimal placement to an optimal one. In the meantime,the control strategy can fulfill given trajectory constraints of the mobile sensors.

A.Control Strategy

The mobile sensors usually cannot move freely in practical

applications. For example,the trajectory of each sensor may be

constrained on the boundary of a set in ${\bf R}^d$. In order to

autonomously deploy an optimal sensor placement,we need to solve

two problems: 1) minimize the objective function $\|G\|^2$,and in

the meantime 2) fulfill the trajectory constraint of each mobile

sensor. Hence we define two artificial potentials. First,define

$V_I=\|G\|^2$ as the inter-sensor potential. The placement is

optimal if and only if $V_I$ is minimized. Second,define $V_E$ as

the external potential corresponding to the sensor trajectory

constraints. The sensor trajectory constraints are fulfilled if and

only if $V_E=0$. By combining the two potentials,we have the total

potential as

\begin{align*}

V=k_IV_I+k_EV_E,

\end{align*}

where $k_I$,$k_E>0$. The gradient control law for sensor $i$ is

designed as

\begin{align}\label{eq_controlLaw}

\dot{s}_i=-(k_I\nabla_{s_i}V_I+k_E\nabla_{s_i}V_E),

\end{align}

(8)

Recall $G=\sum_{i=1}^n c_i^2 g_i g_i^{\rm T}$ and $G^{\rm T}=G$.

Then the differential of $V_I$ is

\begin{align}\label{eq_dVI}

{\rm d} V_I

=&\ 2{\rm tr}(G {\rm d} G)= \nonumber \\

&\ 2{\rm tr}\left(G\sum_{i=1}^nc_i^2{\rm d} g_i g_i^{\rm T}\right)

+ 2{\rm tr}\left(G\sum_{i=1}^nc_i^2 g_i {\rm d} g_i^{\rm T}\right)=\nonumber \\

&4\sum_{i=1}^nc_i^2 g_i^{\rm T} G {\rm d} g_i.

\end{align}

(9)

\begin{align}\label{eq_dgi}

{\rm d} g_i=\frac{1}{\|r_i\|}P_i{\rm d} r_i,

\end{align}

(10)

\begin{align}

\nabla_{s_i} V_I = \nabla_{r_i} V_I = \frac{4c_i^2}{\|r_i\|}

P_iGg_i.

\end{align}

(11)

${\bf Remark 1.}$ The implementation of (11) requires all-to-all communications among the sensors due to the term $G$. However,it is possible to implement (11) in a distributed way. To do that,each sensor needs to maintain an estimate $\hat{G}_i$ of $G$,and $\hat{G}_i$ should converge to $G$ given certain underlying graph. The distributed estimation of $G$ actually is an average consensus problem which has been investigated extensively (see,for example, [16, 17, 18]).

C.External Force ${{\nabla}_{{ s}_{ i}}} { V}_{ E}$

The external potential $V_E$ is chosen according to practical application requirements. Here we use two specific scenarios,one of which is 2D and the other is 3D,to demonstrate how control strategy (8) can be applied to practical problems.

1) A 2D scenario

Suppose the sensors can only move on an ellipse,

while the stationary target is located arbitrarily inside the

ellipse

(see Fig. 2).

The position of sensor $i$ must satisfy

\begin{align*}

(s_i-s_0)^{\rm T} Q (s_i-s_0)=1,

\end{align*}

where $s_0\in{\bf R}^2$ is the center of the ellipse and $Q={\rm

diag}\{1/a^2,1/b^2\}\in{\bf R}^{2\times2}$ with $a>0$ and $b>0$ as

the lengths of the two semi-axes,respectively.

According to the constraint,we choose $V_E$ as

\begin{align}\label{eq_VE_2DExample}

V_E = \sum_{i=1}^n \left[(s_i-s_0)^{\rm T} Q

(s_i-s_0)-1\right]^2.

\end{align}

(12)

|

Download:

|

| Fig. 2.An illustration of the 2D scenario where all mobile sensors move on an ellipse. | |

Then it is straightforward to obtain

\begin{align}\label{eq_nablaVE_2DExample}

\nabla_{s_i}V_E = 4\left[(s_i-s_0)^{\rm T} Q

(s_i-s_0)-1\right]Q(s_i-s_0).

\end{align}

(13)

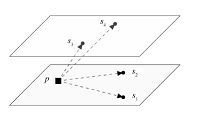

2) A 3D scenario

We now consider a 3D scenario that can be applied to cooperative air and ground surveillance[12, 19].

Suppose there are multiple unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs).

Each vehicle is equipped with a range-only sensor that can measure the range to the target.

The UAVs fly at fixed altitudes and the UGVs move on the ground with altitude as zero (see Fig. 3).

Denote $\ell_i$ as the altitude of vehicle $i$.

Then the external potential $V_E$ can be chosen as

\begin{align}\label{eq_VE_3DExample}

V_E=\sum_{i=1}^n (e_3^{\rm T}s_i - \ell_i)^2,

\end{align}

(14)

where $e_3=[0, 0, 1]^{\rm T}\in{\bf R}^3$.

Clearly,all the altitude constraints are satisfied if and only if $V_E=0$.

It is straightforward to obtain

\begin{align}\label{eq_nablaVE_3DExample}

\nabla_{s_i}V_E = 2(e_3^{\rm T}s_i - \ell_i)e_3.

\end{align}

(15)

|

Download:

|

| Fig. 3.An illustration of the 3D scenario where each sensor moves at a fixed altitude. | |

The proposed gradient control law (8) guarantees that the gradient $k_I\nabla_{s_i}V_I+k_E\nabla_{s_i}V_E$ for all $i$ will converge to zero. However,$k_I\nabla_{s_i}V_I+k_E\nabla_{s_i}V_E=0$ does not imply $\nabla_{s_i}V_I=\nabla_{s_i}V_E=0$. To have that implication, the external potential $V_E$ and the inter-sensor potential $V_I$ should be compatible with each other. By compatibility,we mean $k_I\nabla_{s_i}V_I+k_E\nabla_{s_i}V_E=0$ for all $i$ if only if $\nabla_{s_i}V_I$ $=$ $\nabla_{s_i}V_E=0$ for all $i$. If $V_E$ is not compatible with $V_I$,it is possible that $k_I\nabla_{s_i}V_I+k_E\nabla_{s_i}V_E=0$ while $\nabla_{s_i}V_I$ $\ne$ $0$ and $\nabla_{s_i}V_E\ne0$.

We next prove $V_I=\|G\|^2$ is compatible with $V_E$ given in (12) or (14). To do that,we will show $\nabla_{s_i}V_I$ in (11) is impossible to be parallel to $\nabla_{s_i}V_E$ in (13) or (15).

Firstly consider $\nabla_{s_i}V_I$ in (11). Since $P_i=I_d-g_ig_i^{\rm T}$,we have ${\rm null}(P_i)$ $=$ $\mathrm{span}\{g_i\}$ and hence $P_ir_i=0$. As a result, \begin{align*} r_i^{\rm T} \nabla_{s_i}V_I =0, \end{align*} which means $\nabla_{s_i}V_I\perp r_i$. %Intuitively the force $\nabla_{s_i}V_I$ only attempts to change the direction of $r_i$ but not $\|r_i\|$.

Secondly,consider $\nabla_{s_i}V_E$ given in (13) and (15).

1) In the 2D scenario,vector $\nabla_{s_i}V_E$ is the normal vector of the ellipse at point $s_i$. Geometrically,it is clear that $\nabla_{s_i}V_E$ is impossible to be orthogonal to $r_i$ for any $p$ inside the ellipse and any $s_i$ on the ellipse. As a result,$\nabla_{s_i}V_E$ is not parallel to $\nabla_{s_i}V_I$, and hence $k_I\nabla_{s_i}V_I+k_E\nabla_{s_i}V_E=0$ if and only if $\nabla_{s_i}V_I=\nabla_{s_i}V_E=0$ for all $i$.

2) In the 3D scenario,vector $\nabla_{s_i}V_E$ is the normal vector of the horizontal planes. Define $\mathcal{I}=\{1,\cdots,n\}$, $\mathcal{I}_{>0}=\{i$ $\in$ $\mathcal{I}: \ell_i>0\}$ and $\mathcal{I}_{=0}=\{i\in\mathcal{I}: \ell_i=0\}$. Geometrically,it is clear that if $\ell_i>0$,then $\nabla_{s_i}V_E$ is impossible to be orthogonal to $r_i$. Hence $\nabla_{s_i}V_E$ is not parallel to $\nabla_{s_i}V_I$ for all $i\in\mathcal{I}_{>0}$. As a result, $k_I\nabla_{s_i}V_I+k_E\nabla_{s_i}V_E=0$ for all $i$ $\in$ $\mathcal{I}_{>0}$ if and only if $\nabla_{s_i}V_I=\nabla_{s_i}V_E=0$ for all $i\in\mathcal{I}_{>0}$. Since the inter-sensor forces are mutual,if $\nabla_{s_i}V_I=0$ for all $i\in\mathcal{I}_{>0}$,then we have $\sum_{i\in\mathcal{I}_{=0}}\nabla_{s_i}V_I=0$. Furthermore,since $r_i$ for all $i\in\mathcal{I}_{=0}$ is located in the plane with $\ell_i=0$,we have that $\nabla_{s_i}V_I$ is within the plane and cannot be parallel to $\nabla_{s_i}V_E$. Thus $k_I\nabla_{s_i}V_I+k_E\nabla_{s_i}V_E=0$ for all $i\in\mathcal{I}_{=0}$ if and only if $\nabla_{s_i}V_I=\nabla_{s_i}V_E=0$ for all $i\in\mathcal{I}_{=0}$. Therefore,$k_I\nabla_{s_i}V_I+k_E\nabla_{s_i}V_E=0$ for all $i\in\mathcal{I}$ if and only if $\nabla_{s_i}V_I$ $=$ $\nabla_{s_i}V_E$ $=$ $0$ for all $i\in\mathcal{I}$.

In fact,if the sensors are constrained on the smooth boundary of an arbitrary convex set in ${\bf R}^d$ and the target is located inside the convex set,the norm vector $\nabla_{s_i}V_E$ is impossible to be parallel to $\nabla_{s_i}V_I$. Hence the corresponding $V_E$ will always be compatible with $V_I=\|G\|^2$. We only consider the case that sensors are constrained on the boundary of a set in this paper. But more complicated issues such as obstacle avoidance and collision avoidance among sensors could also be solved by introducing more external potentials. At last, it should be noted that the compatibility of $V_I$ and $V_E$ does not guarantee that the final converged placement is optimal. That is because $\nabla_{s_i}V_I=0$ for all $i$ is only necessary but not sufficient for a placement to be optimal. This point has been discussed in [13].

IV. SIMULATIONSWe next present simulation results to verify the proposed control strategy for the 2D and 3D scenarios. We first consider two scenarios with static targets,then we consider a scenario with a dynamic target. It is assumed that the sensor networks have all-to-all communications.

A.Autonomous Sensor Deployment for Static Targets 2D scenarioIn the 2D scenario,all sensors are constrained on an ellipse while the target is located inside the ellipse. The external force $\nabla_{s_i}V_E$ is given in (13). The standard deviations of the sensor noises are assumed to be one,i.e., $\sigma_i=1$ for all $i$. Note the final optimal placement is determined only by the relative values of $\{\sigma_i\}_{i=1}^n$ but not the absolute values. As shown in Fig. 4,given appropriate initial placements,the control strategy can steer sensors to optimal placements while ensuring the sensor trajectory constraints fulfilled. The optimality error shown in Fig. 4 refers to the difference between the objective function $\|G\|^2$ and its lower bound given in (4) or (6). As can be seen,the control strategy can efficiently reduce the optimality errors to zero.

|

Download:

|

| Fig. 4.Sensor trajectories and optimality errors for the 2D scenario. | |

Numerically,it is clear that the final placements in Fig. 4 are optimal because the optimality errors converge to zero. We next analytically determine the optimality of the final placements based on the analytical results presented in [13]. As shown in Fig. 4 (a),the angle subtended by the two sensors in the final placement is 90 deg. Hence we have $g_1\perp g_2$ in the final placement. Then the final placement is optimal by [13,Theorem 4.4]. As shown in Fig. 4 (b),the sensors are steered to equally spaced angular positions around the target. The angle subtended by any two sensors in the final placement is 120 deg. This kind of equally spaced angular placements are optimal as concluded in [13,Section 5.2].

At the first glance,it is intuitively unclear whether the final placement in Fig. 4 (c) is optimal. The final placement of the four sensors can be viewed as a combination of two sub-placements: the sub-placement of sensors 1 and 3,and the one of sensors 2 and 4. Note the angle subtended by sensors 1 and 3 and the one by sensors 2 and 4 are both 90 deg in the final placement. That means $g_1\perp g_3$ and $g_2\perp g_4$,and the two sub-placements are (regular) optimal,respectively. By [13,Theorem 5.6],the final placement in Fig. 4 (c) is optimal.

3D scenarioIn the 3D scenario,it is assumed that each sensor is carried by a UAV or UGV that moves at a fixed altitude. The standard deviations of the sensor noises are assumed to be one,i.e.,$\sigma_i=1$ for all $i$. Simulation results with $n=3$ and 4 are respectively shown in Figs. 5 (a) and 5 (b). As shown in Fig. 5,the optimality errors in the two cases are both reduced to zero efficiently.

|

Download:

|

| Fig. 5.Sensor trajectories and optimality errors for the 3D scenario. | |

In Fig. 5 (a),the angle subtended by any two sensors is 90 deg in the final placement. That means $g_1$,$g_2$,and $g_3$ are mutually orthogonal in the final placement. By [13,Theorem 4.4],the final placement is optimal. The final optimal placement shown in Fig. 5 (b) actually is the one given in [13,Fig. 7 (c)]. In both cases of $n=3$ and $n=4$,the final converged placements are optimal and the sensor position constraints are simultaneously fulfilled.

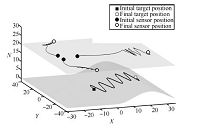

B.Autonomous Sensor Deployment for Dynamic TargetsAlthough it is assumed that the target is stationary,the proposed control strategy can also be applied to tracking of a dynamic target in practice. We next consider a 3D cooperative target tracking scenario. Suppose there are three UAVs flying at the same altitude. Each UAV carries a range-only sensor to measure the distance to the ground target. In the simulation,the target moves on a non-flat ground. The 3D maneuvering motion of the target is given as \begin{align*} p(t)=\left[ \begin{array}{c} 0.5t \\[1mm] 10\sin(\dfrac{\pi}{5}t) \\[1mm] 3-3\cos(\dfrac{\pi}{20}t) \\ \end{array} \right]. \end{align*} See Fig. 6 for the target trajectory.

|

Download:

|

| Fig. 6.Autonomous optimal sensor deployment to track a dynamic target (The target moves on the non-flat ground and the three UAVs fly at a fixed altitude. | |

In this cooperative target tracking scenario,all UAVs need to share their range measurements to estimate the target position, and move to form an optimal placement in the meantime. The autonomous optimal sensor deployment algorithm is summarized as Algorithm 1.

${\bf Algorithm 1. Autonomous optimal sensor deployment for target tracking}$

${\bf Step 1.}$ Obtain an initial estimate of the target position $\hat{p}(0)$.

${\bf Step 2.}$ At time $t$,estimate the target position $\hat{p}(t)$ using a centralized extended Kalman filter (EKF). The EKF is established based on the following process and measurement models:

\begin{align*} &\dot{p}=v,\\ &m_i=\|p-s_i\|+w_i,\quad i=1,\cdots,n, \end{align*} where $m_i\in{\bf R}$ is the measurement of sensor $i$,and $v\in{\bf R}^3$ and $w_i\in{\bf R}$ for all $i$ are white Gaussian noises.

${\bf Step 3.}$ Based on $\hat{p}(t)$,autonomously deploy the optimal sensor placement using the control strategy (8).

${\bf Step 4.}$ At time $t+1$,go to Step 2.

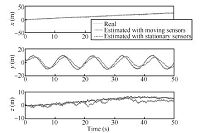

The real initial position of the target is $p(0)=[0,0,0]^{\rm T}$, while the initial target estimate for the EKF is $\hat{p}(0)=[-4, 5, 3]^{\rm T}$. The standard deviation of noise $w_i$ is set as three meters. By Algorithm 1,the trajectories of the three moving sensors are obtained as shown in Fig. 7. The behaviors of the three sensors in Fig. 7 can be explained as the following: the three sensors attempt to form a placement where each angle subtended at the target by any two sensors is 90 deg. In order to illustrate how optimal placements can improve the target tracking performance,we compare the target estimation results in the cases of moving and stationary sensors. In the case of stationary sensors,the three sensors stay statically at the initial placement shown in Fig. 7. The initial placement is obviously not optimal. The target estimation results in the two cases are shown in Fig. 7. As can be seen,the target can be tracked accurately when the sensors are steered to maintain an optimal placement,while the tracking performance is worse when the sensors are stationary. Note the parameters of the EKF are the same in the two cases.

|

Download:

|

| Fig. 7.Target position estimation results by stationary and moving sensors. | |

Based on the results in [13],this work presented a flexible control strategy for autonomously deploying optimal placements of range-only sensors for target tracking. Two practical scenarios were considered and solved using the proposed control strategy. The control strategy was also applied to a 3D cooperative target tracking scenario. It was shown that the target tracking performance can be improved when sensors are steered to maintain an optimal placement.

There are several directions for future research. First,this paper only considers the cases of all-to-all communications. Distributed autonomous deployment,distributed target tracking and the integration of them need to be investigated in the future. Second,this paper only considers homogenous sensor networks but not heterogeneous cases[20]. Cooperative target tracking using heterogeneous sensor networks is a practically important topic for future research.

| [1] | Ousingsawat J, Campbell M E. Optimal cooperative reconnaissance using multiple vehicles. Journal of Guidance, Control, and Dynamics, 2007, 30(1):122-132 |

| [2] | Sinclair A J, Prazenica R J, Jeffcoat D E. Optimal and feedback path planning for cooperative attack. Journal of Guidance, Control, and Dynamics, 2008, 31(6):1708-1715 |

| [3] | Oshman Y, Davidson P. Optimization of observer trajectories for bearings-only target localization. IEEE Transactions on Aerospace and Electronic Systems, 1999, 35(3):892-902 |

| [4] | Bishop A N, Fidan B, Anderson B D O, Dovggançay K, Pathirana P N. Optimality analysis of sensor-target localization geometries. Automatica, 2010, 46(3):479-492 |

| [5] | Bishop A N, Jensfelt P. An optimality analysis of sensor-target geometries for signal strength based localization. In:Proceedings of the 5th International Conference on Intelligent Sensors, Sensor Networks and Information Processing. Melbourne, Australia:IEEE, 2009. 127-132 |

| [6] | Dovgançay K, Hmam H. Optimal angular sensor separation for AOA localization. Signal Processing, 2008, 88(5):1248-1260 |

| [7] | Martínez S, Bullo F. Optimal sensor placement and motion coordination for target tracking. Automatica, 2006, 42(4):661-668 |

| [8] | Zhang H. Two-dimensional optimal sensor placement. IEEE Transactions on Systems, Man, and Cybernetics, 1995, 25(5):781-792 |

| [9] | Dovgançay K. Online optimization of receiver trajectories for scanbased emitter localization. IEEE Transactions on Aerospace and Electronic Systems, 2007, 43(3):1117-1125 |

| [10] | Isaacs J T, Klein D J, Hespanha J P. Optimal sensor placement for time difference of arrival localization. In:Proceedings of the 48th Conference on Decision and Control, 2009 Held Jointly with the 200928th Chinese Control Conference. Shanghai, China:IEEE, 2009. 7878-7884 |

| [11] | Moreno-Salinas D, Pascoal A M, Aranda J. Optimal sensor placement for underwater positioning with uncertainty in the target location. In:Proceedings of the 2011 IEEE International Conference on Robotics and Automation. Shanghai, China:IEEE, 2011. 2308-2314 |

| [12] | Grocholsky B, Keller J, Kumar V, Pappas G. Cooperative air and ground surveillance. IEEE Robotics & Automation Magazine, 2006, 13(3):16-25 |

| [13] | Zhao S, Chen B M, Lee T H. Optimal sensor placement for target localisation and tracking in 2D and 3D. International Journal of Control, 2013, 86(10):1687-1704 |

| [14] | Zhao S, Chen B M, Lee T H. Optimal placement of bearing-only sensors for target localization. In:Proceedings of the 2012 American Control Conference. Montreal, Canada:IEEE, 2012. 5108-5113 |

| [15] | Casazza P G, Fickus M, Kovavcević J, Leon M T, Tremain J C. A physical interpretation of tight frames. In:Harmonic Analysis and Applications, Applied and Numerical Harmonic Analysis. Cambridge, MA:Birkhäuser Boston, 2006. 51-76 |

| [16] | Olfati-Saber R, Murray R M. Consensus problems in networks of agents with switching topology and time-delays. IEEE Transactions on Automatic Control, 2004, 49(9):1520-1533 |

| [17] | Lin P, Jia Y M. Average consensus in networks of multi-agents with both switching topology and coupling time-delay. Physica A:Statistical Mechanics and Its Applications, 2008, 387(1):303-313 |

| [18] | Ren W, Cao Y C. Distributed Coordination of Multi-agent Networks. New York:Springer, 2011 |

| [19] | Hu J W, Xu J, Xie L H. Cooperative search and exploration in robotic networks. Unmanned Systems, 2013, 1(1):121-142 |

| [20] | Meng W, Xie L H, Xiao W D. Optimality analysis of sensor-source geometries in heterogeneous sensor networks. IEEE Transactions on Wireless Communications, 2013, 12(4):1958-1967 |

2014, Vol.1

2014, Vol.1